Continuous Deployment of Pervasive Applications in Dynamic Environments Ozan Necati Günalp

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Private Data Protection in Ubiquitous Computing

UBICOMM 2016: The Tenth International Conference on Mobile Ubiquitous Computing, Systems, Services and Technologies. Private Data Protection in Ubiquitous Computing. Malika Yaici (Laboratoire LTII, University of Bejaia, Bejaia, 06000, Algeria); Samia Ameza, Ryma Houari and Sabrina Hammachi (Computer Department, University of Bejaia, Bejaia, 06000, Algeria). Abstract: A system in ubiquitous computing consists of a large amount of heterogeneous users and devices that communicate with each other. Users in this dynamic field communicate with lightweight and autonomous devices, which accentuate security problems and make them more complex. The existing mechanisms and solutions are inadequate to address new challenges mainly for problems of authentication and protection of privacy. In this paper, a new security architecture called Tree Based distributed Privacy Protection System is proposed. It supports protection of users' private data and addresses the shortcomings of systems like GAIA, OpenID and User-directed Privacy Protection (UPP). Furthermore, it takes into account the domain dissociation

care and safety where the end-user has authorized credentials anonymity. In [3], the author uses the framework of contextual integrity related to privacy, developed by Nissenbaum in 2010 [4], as a tool to understand citizen's response to the implementation of IoT related technology in a supermarket. The purpose was to identify and understand specific changes in information practices brought about by the IoT that may be perceived as privacy violations. Issues identified included the mining of medical data, invasive targeted advertising, and loss of autonomy through marketing profiles or personal affect monitoring.

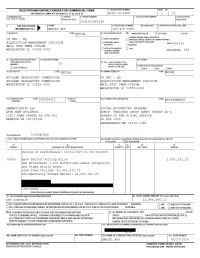

Order No. 31310019F0149 Under Contract No. GS35F192BA

2 of 27 19. 20. 21. 22. 23. 24. ITEM NO. SCHEDULE OF SUPPLIES/SERVICES QUANTITY UNIT UNIT PRICE AMOUNT Funded: $702,432.93 Accounting Info: Funded: $40,981.86 Accounting Info: Funded: $347,917.46 10001 Option Period 1 Ceiling Price 0.00 See Attachment 1 for Authorized Labor Categories and Fixed Hourly Rates Amount: $1,059,977.13 (Option Line Item) Anticipated Exercise Date 09/30/2020 Period of Performance: 10/01/2020 to 09/30/2021 20001 Option Period 2 Ceiling Price 0.00 See Attachment 1 for Authorized Labor Categories and Fixed Hourly Rates Amount: $847,176.84 (Option Line Item) Anticipated Exercise Date 09/30/2021 Period of Performance: 10/01/2021 to 09/30/2022 The obligated amount of award: $1,091,332.25. The total for this award is shown in box 26. 32a. QUANTITY IN COLUMN 21 HAS BEEN RECEIVED INSPECTED ACCEPTED, AND CONFORMS TO THE CONTRACT, EXCEPT AS NOTED: 32b. SIGNATURE OF AUTHORIZED GOVERNMENT REPRESENTATIVE 32c. DATE 32d. PRINTED NAME AND TITLE OF AUTHORIZED GOVERNMENT REPRESENTATIVE 32e. MAILING ADDRESS OF AUTHORIZED GOVERNMENT REPRESENTATIVE 32f. TELEPHONE NUMBER OF AUTHORIZED GOVERNMENT REPRESENTATIVE 32g. E-MAIL OF AUTHORIZED GOVERNMENT REPRESENTATIVE 33. SHIP NUMBER 34. VOUCHER NUMBER 35. AMOUNT VERIFIED 36. PAYMENT 37. CHECK NUMBER CORRECT FOR COMPLETE PARTIAL FINAL PARTIAL FINAL 38. S/R ACCOUNT NUMBER 39. S/R VOUCHER NUMBER 40. PAID BY 41a. I CERTIFY THIS ACCOUNT IS CORRECT AND PROPER FOR PAYMENT 42a. RECEIVED BY (Print) 41b. SIGNATURE AND TITLE OF CERTIFYING OFFICER 41c. DATE 42b. RECEIVED AT (Location) 42c.

Contributed to Open Source in Ruby Community by Building Lazyload-Image-Rails Gem and Contribution to Rails

Name: Sunkuru Abhishek Mobile: +91-9840515108 Email: [email protected] Git: https://github.com/abhisheksunkuru Skype: abhisheksunkuru Professional Summary Having Around 6 years of experience in building Web Applications Using RubyonRails. Experienced in technologies like Facebook Open graph. Good at relational databases MySql, PostgreSQL. Active team player, mentor and a self-starter, capable of working independently. Exposure to Software Development Life Cycle. Experienced in javascript libraries like Jquery,React Js. Experienced in Amazon services like Aws-s3,Cloudfront. Experienced in building REST API Experienced in Heroku and Capistrano deployment process. Knowledge in programming languages like Ruby,java. Contributed to open source in ruby community by building lazyload-image-rails gem and contribution to Rails. Utilized the Git and Svn Repository for our project to maintain the code versioning. Professional Experience Working as Senior Software Engineer in Tranway technologies from may 2018 to till date. Working as Senior Software Engineer in Nuware Systems LLP from Oct 2016 to Dec 2017. Working as Senior Software Engineer in Sedin Technologies Pvt Ltd from Dec 2013 to Oct 2016. Working as Software Engineer in Maisa Solutions Pvt Ltd,Hyderabad from June 2013 to November 2013. Worked as Software Engineer in Rising Sun Technologies Pvt Ltd., Jaipur from May 2012 to May 2013. Educational Qualifications B.Tech (IT) from Jawaharlal Nehru Technological University with 63.7%. Intermediate (M.P.C) from Sri Chaitanya Junior college with 90.1 %. S.S.C from Zilla Parishad High School with 85.3 %. Technical Skills Languages : Ruby. Web Technologies : HTML, XML, CSS , JAVASCRIPT, Jquery, Haml, ReactJs. Application Server : Thin, Webrick,puma. -

Questions for Mongrel

www.YoYoBrain.com - Accelerators for Memory and Learning. Questions for Mongrel. Category: Introduction (16 questions)
Q: Mongrel is described in what way in the Mongrel pdf available from O'Reilly? A: "A web application container for Ruby on Rails"
Q: Mongrel is compared with what web servers in the Rails world in terms of production performance and development simplicity/speed? A: Production performance: FastCGI or SCGI. Development: WEBrick
Q: Creator of Mongrel? A: Zed A. Shaw, www.zedshaw.com
Q: Mongrel is developed on what mixture of programming/scripting languages? A: Ruby and C
Q: Documentation for Mongrel? A: mongrel.rubyforge.org/docs/index.html
Q: The creators of Mongrel describe it how? A: a fast HTTP library and server for Ruby that is intended for hosting Ruby web applications of any kind using plain HTTP rather than FastCGI or SCGI. It is framework agnostic
Q: Three key technologies that are used for Mongrel's internals? A: A custom HTTP 1.1 parser (based on RFC standard, written using Ragel in C and Java as a Ruby extension). Simple server that uses the parser and URIClassifier to process requests, find the right handlers, then pass the results to the handler for processing. Handlers are responsible for using HttpRequest and HttpResponse objects to "do their thing and then return results"
Q: Component of Mongrel responsible for dealing with HttpRequest and HttpResponse? A: Handlers
Q: How does Mongrel support threading? A: one thread per request, but it will start closing connections when it gets "overloaded"; while Mongrel is processing HTTP requests and sending responses it uses Ruby's threading system
Q: What platforms that already work with Mongrel are thought to be "thread-safe"? A: Camping and Og+Nitro. Have not been heavily tested
Q: Is Ruby on Rails thread safe? A: no
Q: How does Mongrel handle Rails? A: Ruby on Rails is not thread safe so there is a synchronized block around the calls to Dispatcher.dispatch.

Consumer Data and Privacy in Ubiquitous Computing

ESPOO 2007 VTT PUBLICATIONS 647 Consumer Data and Privacy in Ubiquitous Computing 629 Communications Technologies. VTT's Research Programme 2002–2006. Final Report. Ed. by Markku Sipilä. 2007. 354 p. 630 Solehmainen, Kimmo. Fabrication of microphotonic waveguide components on silicon. 2007. 68 p. + app. 35 p. 631 Törrö, Maaretta. Global intellectual capital brokering. Facilitating the emergence of innovations through network mediation. 106 p. + app. 2 p. 632 Lanne, Marinka. Yhteistyö yritysturvallisuuden hallinnassa. Tutkimus sisäisen

Ubiquitous Commerce: Ubiquitous Computing Based Commerce Dolly Amit Pruthi Deptt

Volume 4, Issue 8, August 2014 ISSN: 2277 128X International Journal of Advanced Research in Computer Science and Software Engineering Research Paper Available online at: www.ijarcsse.com Ubiquitous Commerce: Ubiquitous Computing Based Commerce Dolly Amit Pruthi Deptt. of Computer Science & Applications, G.J. University of Sci. & Tech., M.D. University, Rohtak, India Hisar, India Abstract- With the rapid advancement in the field of networking and communications, no aspect of human life is untouched. Commercial activities are also affected by the new advancements. Traditional commercial activities are changed and modified with the passage of time. Firstly, the age of E-Commerce arrived, then M-Commerce. Now, after E-Commerce and M-Commerce, the age of Ultimate Commerce has arrived. Any time/ Always/ Anywhere service providing is the key to this ultimate or ubiquitous commerce. This paper studies the concept of ubiquitous computing and its adaptation to commerce with new issues associated. Keywords- Ubiquitous computing, Ubiquitous commerce. I. INTRODUCTION Ubiquitous commerce, also referred to as "u-commerce", is a new paradigm that broadens and extends the Internet usage in today's environment. With the rapid development of ubiquitous computing and mobile communication technologies, the traditional business model is changing drastically. As a logical extension of e-commerce and m-commerce, Watson proposed the concept of ubiquitous commerce (u-commerce) in 2000. As the next generation business model, it immediately gained a lot of attention. U-commerce emerges as a continuous, seamless stream of communication, content and services exchanged among businesses, suppliers, employees, customers, and products. It will enable interactions and transactions to happen anywhere and at any time without being constrained to stay connected through power and telephone lines.

Why Devops Stops

What is Krista? Krista is a modern conversational Intelligent Automation platform designed to easily leverage existing IT assets. Krista's unique informal approach enables business process owners to quickly build new lookup or data entry workflows without waiting in line for expensive IT or development resources. Krista uses a unique programming method similar to a text conversation between one or more people. By following the way humans already communicate, Krista enables anyone to build and create workflows around business process constraints. The conversational workflows eliminate maintenance and upkeep required from traditional record and playback automation tools. Krista's conversations are beautifully simple, with enough power, scale, and security to find any answer inside the largest enterprises.

Intelligent Automation Deployment is Simple. Krista's Natural Language Processing supports voice, text, and *bots to deliver automation anyone understands. By utilizing existing communication methods in conversations, you take advantage of how your employees already communicate. Krista quickly deploys to existing desktops, mobile phones, Slack, and web browsers that your employees are already using. You won't need to train employees or maintain brittle documentation since the automation follows existing voice and texting conversations similar to WhatsApp or Facebook Messenger. If your employees can text, they can interact with numerous systems to support customers, consume enterprise services, deploy IT changes, or update important KPIs.

DevOps – It's improving. DevOps Evolution Model: Stage 1 Normalization, Stage 2 Standardization, Stage 3 Expansion, Stage 4 Automated infrastructure delivery, Stage 5 Self-service. Many DevOps initiatives and cultures slow or stop at Stage 3 and fail to scale since organizational structures (aka people) become constraints in the Neutral Zone.

Ubiquitous Computing: Trends and History

Ubiquitous Computing: Trends and History. Lecture 2. CSI 660, William A. Maniatty, Dept. of Computer Science, University at Albany. Introduction. Review: What is Ubiquitous Computing? • Immerses computers in a real environment. • Sensors support interaction with and control of the environment. • Limited power supply, storage, memory and bandwidth. • Operate unattended (much like embedded systems). • Devices are mobile/wireless. • May reside on a person (wearable computing). • Have special peripherals. • Contrast this with virtual reality, which immerses humans in a computer-generated artificial environment. Historical Origins and Trends. Computers are becoming smaller and cheaper over time • Originally few computers, many operators: machines expensive and large, people (relatively) cheap • Trend toward more computers per person: users may not be tech savvy, even tech-savvy users have limited time, minimal intervention is required. People don't want to be separated from their data • But spying on users upsets them • And can violate laws - security is important • Mobility and wireless access are critical. Some Popular Views. Many visions were popularized in the press • First to work on it, although other visionaries preceded him • Entertainment industry (Ian Fleming, Gene Roddenberry) • Vannevar Bush's seminal article [1] As We May Think predicted the WWW and Ubiquitous Computing in 1945! • Vernor Vinge (retired Computer Science Professor and science fiction writer) has interesting ubiquitous computing visions. • Movies: The Terminator, numerous Philip K. Dick books and screenplays (Blade Runner, Total Recall, Minority Report). Has been popular in the research community for over a decade

Perfect Programmers

HOW WE WORK. TABLE OF CONTENTS: Collaboration in a nutshell. First contact. Prepare for development. Development. Development teams. Agreements. Estimates. FA. Do I have to pay for bugs?

VPS Vs Heroku for Small Team

VPS vs Heroku for small team Piotr Macuk Deployment history ● csv / subversion ● tgz files with timestamp ● zip / scp / unzip ● diff / scp / patch Technology history ● 2005 Git + Rails 1.0 ● 2007 Rails 2.0 / Ruby 1.9 / Capistrano / Heroku ● 2008 GitHub / Bitbucket ● 2010 Rails 3.0 ● 2013 Rails 4.0 / Ruby 2.0 / Slack ● 2016 Rails 5.0 My perception ● 1997-2013 Linux on my desktop computer ● 2000-∞ Linux on servers at work ● 2000 First apps deployment (zip / diff) ● 2005 First Rails app deployment (zip / diff) ● 2007 First Capistrano deployment (real production apps) ● 2012 Konfeo is live on VPS server ● 2012 First Heroku deployment (pet projects) ● 2015 Heroku deployment (real production apps) ● 2019 Wetea is live on Heroku VPS deployment ● Create server ● Setup and secure Nginx ● Update & upgrade packages ● Setup and secure Passenger ● Set locales, timezone, other env settings ● Install packages required by Ruby ● Install additional tools ● Setup and secure SMTP ● Create and setup deployment account ● Create database and database user ● Setup and secure SSH ● Install Ruby in selected version ● Setup firewall ● Setup Capistrano ● Setup logrotate ● Setup SSL in Nginx ● Setup and secure PostgreSQL server ● Setup server backup and monitoring VPS tech concerns ● Regular packages and kernel updates ● Regular logs and database analysis ● Regular Ruby, RVM and GEM updates ● Regular SSL health monitoring ● Regular monitoring (live / security / restarts) VPS business concerns ● Site reliability engineering (SRE) (procedures) ● Business

Sometimes Tools Matter

Sometimes Tools Matter John E. Vincent DevOpsDays Goteborg 2011 We all know about DevOps We all know about DevOps I R DEV! I R OPS! So what's the big deal? “With XXXXX you are be able to do easily common task on your local or remote machine. The aim of XXXXX is to become the unique and universal tool language that permit you to make your own brew, apt-get or yum package, same syntax for all your machine.” Not Puppet Not Chef Not Capistrano “With XXXXX you are be able to do easily common task on your local or remote machine. The aim of XXXXX is to become the unique and universal tool language that permit you to make your own brew, apt-get or yum package, same syntax for all your machine.” Not Fabric Not DeployML Not CFengine YANFT (yet another f*ing tool) We're doing something wrong. Something is missing. I'll be over here with Capistrano, kthx You just need to write a Chef recipe and …. You can't solve cultural issues with tools You can't solve cultural issues with tools or can you? Some Issues ● Repository mismatch ● Different languages ● Volatile configuration ● Visibility ● Sensitive information ● Testability ● Packaging Caveats and Comments ● No single tool is going to solve all your problems – sorry. ● You may have already heard of some of these tools. ● You will likely have to “mold” some of these tools to fit your environment ● The tool itself is not the point, it is the end result ● I don't have all the answers.. -

SOFTWARE DEVELOPMENT METHODOLOGIES Methodologies

SOFTWARE DEVELOPMENT METHODOLOGIES Methodologies • Waterfall • Prototype model • Incremental • Iterative • Spiral • RAD Waterfall • Sequential design process • Progress is seen as flowing steadily downwards (like a waterfall) through SDLC Prototyping • Creating prototypes of software applications, i.e. incomplete versions of the software program being developed • A prototype typically simulates only a few aspects of, and may be completely different from, the final product. Incremental Build Model • The model is designed, implemented and tested incrementally (a little more is added each time). • Finished when it satisfies all the requirements. • Combines the elements of the waterfall model with the iterative philosophy of prototyping. Iterative and Incremental Development • Iterative and incremental development is any combination of both iterative design or iterative method and incremental build model for development. Incremental vs. Iterative A Bit Different Understanding Effort in Iterative Development Spiral Model • Combining elements of design and prototyping-in-stages • Combines the features of the prototyping and the waterfall model • The spiral model is intended for large, expensive and complicated projects • Advantages of top-down and bottom-up concepts RAD • Minimal planning and fast prototyping. • Developing instead of planning • The lack of pre-planning generally allows software to be written much faster, and makes it easier to change requirements. Agile • Group of software development methods • Based on iterative and incremental development • Most important phrases • self-organizing, cross-functional teams • adaptive planning, • evolutionary development and delivery, • a time-boxed iterative approach, • rapid and flexible response to change. • A conceptual framework • The Agile Manifesto in 2001.