ROS: a Rack-Based Optical Storage System with Inline Accessibility for Long-Term Data Preservation

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Point Archiver Software Version 3.0

PoINT Archiver Software Version 3.0 FOR MICROSOFT WINDOW S S E R V E R KEY FEATURES Network Wide, Policy Based Data Management & Archiving A Complete Solution for PoINT Archiver V3.0 is a PoINT Archiver uses an intuitive Once configured, PoINT Archiver creates a managed Data Windows server application user interface to create Archive Jobs which provide monitoring, data storage environment Management which provides centralized administration of network protection, migration and where specified folders and and Archive data storage management, recovery of data files. the data within them is with a specific focus on the protected from alteration or Enhanced process of data archival and destruction, automatically Search Features retrieval. It is intended for written on to specified devices and media and for Data use in applications where large volumes of information organized for easy access or Retrieval must be preserved for retrieval. This ensures that extended periods of time, be virtually every compliance Data Migration, accessible upon demand, and and business continuity Encryption and which also require that the requirement relating to data Authentication accuracy and integrity of that storage and access can be satisfied. Tools information is assured. User Defined Policies for PoINT Archiver offers three Support for all PoINT Archiver is unique in its ability to use multiple Archive Management levels of Licensing which Storage Devices storage devices and all types User defined Policies determine provide solutions for and Media of media in order to meet what data to move or copy, applications from a simple any combination of retention, which device(s) should be used data archive on optical disc to Enhanced recovery, security and access and when to perform each a complete enterprise wide requirements. -

HP Storageworks Optical Storage a Buyer’S Guide to Understanding and Evaluating Optical Storage “Knowledge Is Power.” —Francis Bacon

HP StorageWorks Optical Storage A buyer’s guide to understanding and evaluating Optical Storage “Knowledge is power.” —Francis Bacon Storage 101: A beginning problem is especially acute in the service sector, imaging environments, customer service departments and other In the Information Age, information is like environments that maintain account information online. any other product. And while you continue to need more and more storage It’s bought and bartered every day. It differentiates space, you also need instant access to that data. In other successful companies from marginal ones. It forms the words, it’s not enough to find a place to put it; you have foundation of every successful enterprise. And when it’s to put it where you can get it back quickly. transformed from its raw state into intellectual property, Where to put it its value soars beyond calculation. There are four broad categories of data storage. Like any other strategic asset, information must be Depending on the sensitivity of data, number of data protected from loss, corruption and theft. But how? users, frequency of access and your storage budget, you might use one or all of these strategies in your company. This primer is an important first step in evaluating and understanding your options in storage, especially optical • Online storage storage. It explores the challenges of data proliferation, High performance, mid-range to high cost compares various approaches to data protection, and An image or document is considered online when you outlines specific optical technologies that help address the can access it instantly and automatically, without human challenges of data storage in the information age. -

Review of Various Data Storage Techniques

International Journal of Science and Research (IJSR), India Online ISSN: 2319-7064 Review of Various Data Storage Techniques Rajeshwar Dass1, Namita2 1Department of Electronics and Communication Engineering DCRUST, Murthal, Sonepat, India [email protected] [email protected] Abstract: The data storage is one of the basic requirements of today’s technical world. Improved and new multimedia applications are demanding for more and more storage space. Hence to meet this requirement day by day new techniques are coming in to the picture. This paper summarizes the various techniques used for the storage of data, their characteristics & applications with their comparative analysis. Keywords: Memory, semiconductor storage, magnetic storage, optical storage. 1. Introduction Cost: Since the beginning of computing, hard drives have come down in price, and they continue to do so. Big Storing of data is very important and basic requirement of advantage of main memory is that has become a lot cheaper, today's life. All the applications we are using require the but its storage limitation is still a big factor. A RAM stick storage of many programs and day by day the applications often holds less than 1 percent of the data capacity of a hard are improving and the memory requirement is increasing and drive with the same price. to meet the current storage requirement we are reached to holography and 3D optical storing techniques, as for various Various types of magnetic memories are: multimedia applications the storage need is increasing day by day. 2.1 Magnetic tape This paper is organized as Section II describes the magnetic Today, except in some of the cases where we require legacy storage, its different types, advantages and disadvantages, compatibility, magnetic tape is generally used in cartridges Section III describes the semiconductor storage, its different for computers. -

MAM-A DVD-R -Best Jitter

The Most Secure Digital Storage Media 10/26/2009 Presenter: Joe Weisenbach, Engineering Manager MAM-A Inc. The only recordable disc maker in America The Most Secure Digital Storage Media About MAM-A Inc. MAM-A Inc. was founded in March 1996 for the production and sales of recordable compact discs. Its 50,000 sq. ft. plant is located in Colorado Springs, Colorado where the CD-R media is manufactured in a state-of-the-art clean room environment. The MAM-A GOLD discs use Mitsui Chemical's patented dye and has a characteristic gold color. This combination of dye and pure gold provide superior longevity and the highest quality, professional Archive Grade recordable media available. MAM-A Inc. manufactures CD-R media in 650 MB and 700 MB capacities, with reflective surfaces of silver or gold. DVD-R media is manufactured in 4.7 GB and 8.5 GB capacities, and Blu-ray media is offered in 25GB and 50GB capacities. MAM-A Inc.'s ISO 9001 certified manufacturing facility is located in Colorado Springs, Colorado USA. Available forms of Storage Media Available types of Storage Media Newspaper Hard Disk - Raid Office Copy paper CD-ROM Acid Free paper CD-R silver Microfilm DVD-R Silver Video tape CD-R Gold Magnetic tape DVD-R Gold Magneto Optical Blu-Ray Disc Considerations when Choosing an Archive Media Define your needs with respect to: Capacity Price Accessibility Longevity Media Capacities/Cost of initial Purchase Type Max Capacity Available Best Cost/GB LTO Tape 3.2 TB $0.063 Hard Disk 1.5 TB $0.067 Optical Disc 50 GB $0.051 *Cost/GB not necessarily -

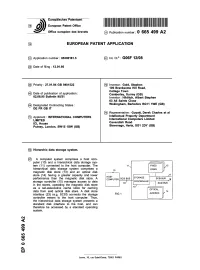

Hierarchic Data Storage System

Europaisches Patentamt 19 European Patent Office Office europeen des brevets © Publication number : 0 665 499 A2 12 EUROPEAN PATENT APPLICATION (21) Application number: 95300181.5 (5i) Int. CI.6: G06F 12/08 (22) Date of filing : 12.01.95 (30) Priority : 27.01.94 GB 9401522 (72) Inventor : Gold, Stephen 105 Branksome Hill Road, College Town (43) Date of publication of application Camberley, Surrey (GB) 02.08.95 Bulletin 95/31 Inventor : Hilditch, Albert Stephen 63 All Saints Close @ Designated Contracting States : Wokingham, Berkshire RG11 1WE (GB) DE FR GB IT (74) Representative : Guyatt, Derek Charles et al © Applicant : INTERNATIONAL COMPUTERS Intellectual Property Department LIMITED International Computers Limited ICL House Cavendish Road Putney, London, SW15 1SW (GB) Stevenage, Herts, SG1 2DY (GB) @) Hierarchic data storage system. (57) A computer system comprises a host com- puter (10) and a hierarchical data storage sys- tem (11) connected to the host computer. The FIXED .13 hierarchical data V storage system comprises a 10 DISKS magnetic disk store (13) and an optical disk store (14) having a greater capacity and lower .17 HOST STORAGE performance than the magnetic disk store. A COMPUTER SCSI BUS SCSI BUS .18 CONTROLLER storage controller (15) manages access to data 16' SCSI BUS in the stores, the disk store operating magnetic 15' as a set-associative cache store for caching OPTICAL data from said optical disk store. A disk store 14 JUKEBOX V. interface (23) (e.g. SCSI) connects the storage FIG 1 controller means to the host computer. Thus, the hierarchical data storage system presents a standard disk interface to the host, and can therefore be accessed by a standard operating system. -

Green Data Storage

Fortuna Power Systems Ltd – June 2007 GREEN DATA STORAGE Written by Ray Quattromini Fortuna Power Systems Ltd Units B, E & F, Brickells Business Court Oakley Lane, Oakley Basingstoke Hampshire RG23 7JU Tel: 01256 782030 E-mail: [email protected] June 2007 Copyright Fortuna Power Systems Ltd 2007 This document may not be distributed or copied without the permission of the author. Fortuna Power Systems Ltd – June 2007 Green Data Storage We all know that the our data storage requirements are doubling every 18 months and whilst disk capacities are also on the increase our appetite for faster, bigger storage arrays are increasingly growing to keep up with business needs. These storage arrays consume vast amounts of power; require cooling, maintenance and constant management. The need for Green Data Storage is greater now than it has ever been. With increasing electricity costs, the continual threat of power outages and global warming predictions, the whole area of data centre storage management needs to be addressed. For example a 300GB 15k SAS disk drive uses 17.5 watts operating and a 750GB SATA-II drive uses 12 watts operating. If we have a requirement for new 10TB storage array lets look at our storage options. High Performance 300GB 15k rpm SAS Storage Array Therefore a 10TB storage array requires 34 disk drives consumes 595 watts per hour plus 150w for the RAID controller, PSU, fans etc This equates to: • 17,880 watts or 61,012 BTU’s per day • 6,526,200 watts or 22,269,352 BTU’s per year • Running costs per annum £261 @ 0.04p -

Linux − Optical Disk HOWTO Linux − Optical Disk HOWTO Table of Contents

Linux − Optical Disk HOWTO Linux − Optical Disk HOWTO Table of Contents Linux − Optical Disk HOWTO..........................................................................................................................1 Skip Rye, abr@preferred.com.................................................................................................................1 1. Disclaimer............................................................................................................................................1 2. Copyright.............................................................................................................................................1 3. Magneto Optical Technology − Daniel Kobras...................................................................................1 4. Magneto Optical Drive experiences under Linux................................................................................1 5. Optical jukeboxes.................................................................................................................................2 6. MO and other media technologies − Gene Cumm..............................................................................2 7. Phase Change Optical Technology......................................................................................................2 8. Optical Disk HOWTO Development Page..........................................................................................2 1. Disclaimer............................................................................................................................................2 -

Optical Library Storage Management

Optical Library Storage Management Optical Library Storage Management The Ideal High Capacity Data Storage Solution for TThhee IIddeeaall HHiigghh CCaappaacciittyy DDaattaa SSttoorraaggee SSoolluuttiioonn ffoorr AAA rrrccchhhiiivvviiinnnggg,,, CCCooommmpppllliiiaaannnccceee aaannnddd DDDiiisssaaasssttteeerrr RRReeecccooovvveeerrryyy... POINT FSX V2.1 FOR LINUX • Supports all Optical Jukeboxes and Technologies (CD/DVD, MO/UDO, Blu-ray) • Transparent read/write access to Optical Libraries Full Function, Lower Cost Jukebox • Permanent Write Once Data Storage (WORM) Management for Linux Servers • Graphical User Interface – Java Based Web Administration Tool • Command Line Interface – PoINT FSX is designed to provide Single Command line Configuration File the complete functionality to • Linux based user/group rights and permissions integrate and operate an optical • Volume Sets (logical groups of Physical Media) jukebox in a heterogeneous • Dynamic growth of Volume Sets & File Spanning network environment. Users and applications have transparent • Automatic copy of finalized Volume Set media access to the huge storage • Dynamic Image Recording' for DVD capacity of CD/DVD, MO, UDO • Hard Disk performance by integrating and Blu-ray jukebox devices. sophisticated file caching • Automatic device configuration • Off-line management of files and media The PoINT FSX Concept PoINT FSX provides hard disk like access to data stored on optical media in a jukebox. The product concept takes advantage of the Linux architecture. Transparent read/write access to optical media in a jukebox is provided through a mountable UNIX file system. The jukebox is represented as one directory and imported media are represented media sets or individual subdirectories. Multiple platforms like UNIX, Windows or Macintosh can have access to the data stored on optical media in the jukebox. -

A Primer on Nearline and Archival Storage Solutions

A Primer on Nearline and Archival Storage Solutions WHITE PAPER A Primer on Nearline and Archival Storage Solutions Nearline storage represents the position in the storage hierarchy between online and offline storage. Nearline storage is almost instantaneously accessible through the use of automated robotics-based removable media handling. No human intervention is required. Explosive Data Growth The value of nearline storage has been prompted by the phenomenal growth of electronic data in recent years. Applications such as e-mail, multimedia, databases and e-commerce are contributing to the explosive data growth at the enterprise level. Organizations are now doubling the amount of their data every 12 to 18 months. This ongoing need to store more and more data is creating additional inefficiencies, as the infrastructure for storage management is unable to keep up with the growth in data storage. Figure 1 – The rise of electronic data growth (data source IDC and SNIA) Copyright © 2002 KOM NETWORKS Inc., All Rights Reserved 1 http://www.komnetworks.com A Primer on Nearline and Archival Storage Solutions Concerns and Issues Backup Issues The explosive growth in data results in longer backup cycles for the simple reason that there is more data that needs to be backed up. This is becoming problematic given that organizations have smaller windows of opportunity to conduct backup operations. Reasons for this include: Increasing access requirements and 24 x 7 operations. If storage requirements are doubling every 12-18 months then the load on the backup system will also be doubling every 12-18 months. Backup system scalability is not an easy undertaking at the best of times. -

Hp Storageworks 1000Ux/1900Ux/2300Ux Optical Jukebox User's Guide

User’s Guide hp StorageWorks 1000ux/1900ux/2300ux Optical Jukebox First Edition (May 2004) Part Number: AA966-90901 This guide describes procedures for operating and troubleshooting the HP StorageWorks 1000ux/1900ux/2300ux Optical Jukebox. © Copyright 2004 Hewlett-Packard Development Company, L.P. Hewlett-Packard Company makes no warranty of any kind with regard to this material, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. Hewlett-Packard shall not be liable for errors contained herein or for incidental or consequential damages in connection with the furnishing, performance, or use of this material. This document contains proprietary information, which is protected by copyright. No part of this document may be photocopied, reproduced, or translated into another language without the prior written consent of Hewlett-Packard. The information contained in this document is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Microsoft®, MS-DOS®, MS Windows® and Windows® are U.S. registered trademarks of Microsoft Corporation. UNIX® is a registered trademark of The Open Group. Hewlett-Packard Company shall not be liable for technical or editorial errors or omissions contained herein. The information is provided “as is” without warranty of any kind and is subject to change without notice. The warranties for Hewlett-Packard Company products are set forth in the express limited warranty statements for such products. Nothing herein should be construed as constituting an additional warranty. -

Calculating the Lifetime of Optical Discs

Optical Discs for Archiving Applications? Optical Discs for Archiving? Choosing an Archive Media Define your needs regarding: Longevity Accessibility Price Capacity Longevity Longevity of Various Media 500 400 300 Years 200 100 0 Optical Media Other Storage Types and Access Time Storage Type Media Access Time Primary storage Computer memory Nanoseconds Secondary Storage Hard drives/Optical discs up to 1 second Tertiary Storage Tape Library, Optical Jukebox 4–60 seconds Off-line Storage Tapes, Hard drives, Optical Disc minutes-hours Capacity vs Access Time Source: World Technology Evaluation Center, Inc. Media Capacities/Cost of initial Purchase Cost per GB $0.05 $0.04 LTO Tape $0.03 Optical Disc $0.02 $0.01 Hard Disk $0.00 LTO Tape Optical Disc Hard Disk Type Max Capacity Available Lowest Cost/GB LTO Tape 3.2 TB (compressed) $0.022 Hard Disk 4 TB $0.047 Optical Disc 100 GB $0.031 *Cost/GB not necessarily based on largest capacity Selection Guide Media Selection Scoreboard Hard Linear Flash 1= Does Not Meet Criteria 3 = Fully Meets Criteria CD-R DVD-R Blu-Ray Disk Tape Memory Longevity 3 3 3 1 1 1 Capacity 1 1 1 3 3 1 Viability (Error Correction) 3 3 3 2 3 2 Obsolescence 3 3 3 2 2 2 Cost 2 2 2 1 3 2 Susceptibility to Damage 3 3 3 3 2 1 Total 15 15 15 12 14 9 Pros And Cons Data Archiving on CD-R, DVD R or BD-R Media .How long should Archive Grade Media last? .What makes it “Archive Grade”? .What is needed for best performance? Archive Media: How long is long enough? .Eventual migration to another media is inevitable .Migration is expensive .Keep your data on one media as long as possible. -

RWZ52 Optical Disk Drive User's Guide

RWZ52 Optical Disk Drive User's Guide Order Number: EK-RWZ52-UG. A01 Digital Equipment Corporation Maynard, Massachusetts April 1994 The information in this document is subjet to change without notice and should not be construed as a commitment by Digital Equipment Corporation. Digital Equipment Corporation assumes no responsibility for any errors that may appear in this document. The software described in this document is furnished under a license and may be used or copied only in accordance with the terms of such license. Restricted Rights: Use, duplication, or disclosure by the U.S. Government is subject to restrictions as set forth in subparagraph (c)(1)(ii) of the Rights in Technical Data and Computer Software clause at DFARS 252.227-7013. © Digital Equipment Corporation 1992. All Rights Reserved. Printed in U.S.A. The postpaid Reader's Comments forms at the end of this document request your critical evaluation to assist in preparing future documentation. The following are trademarks of Digital Equipment Corporation: DECnet, PATHWORKS, ULTRIX, VAX, VMS, and the DIGITAL logo. The following are third-party trademarks: MS-DOS and Microsoft are registered trademarks and Windows is a trademark of Microsoft Corporation. Novell is a registered trademark of Novell, Inc. Sun is a registered trademark and SPARCstation is a trademark of Sun Microsystems, Inc. UNIX is a registered trademark of UNIX System Laboratories, Inc. ZK5909 Contents Drive Characteristics 1-1 Features of the RWZ52 Multifunction Optical Disk Drive .......................................................1-2