Computer-Suggested Facial Makeup

Recommended publications

The Red Door–Union Square (New

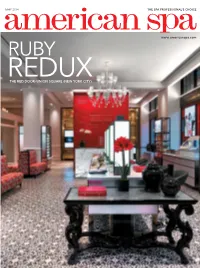

American Spa, May 2014. The Spa Professional’s Choice. www.americanspa.com. Ruby Redux: The Red Door–Union Square (New York City). Features: a closer look at some of the spas that are making news in the industry both here and abroad. 112 Urban Renewal: The Red Door–Union Square (New York City). 118 The Lush Life: Onda Spa (Guanacaste, Costa Rica). Captions: On-the-go New Yorkers can get a quick mani or pedi at The Red Door Speed Nail Salon. The beauty bar on the spa’s ground floor is the perfect pre-party pit stop for busy New Yorkers. Clients can prep in the lounges (pictured) and blowout bar (right) for a post-treatment night out. For more than 100 years, Elizabeth Arden Red Door Spa on Fifth Avenue in New York City has been known as a mecca for some of the city’s most indomitable doyennes (though it did … The new spa, a two-story, 10,000-square-foot retreat that is reminiscent of a luxe townhouse, carries on the Arden legacy but with a twist. The classic Red Door cherry red is used as an accent throughout, including red enamel on the front doors, rouge-colored furniture, and robust red blooms. Additionally, a dramatic gold-faceted feature wall references a classic Elizabeth Arden cosmetic compact. But the spa also offers a sleek contemporary style with clean, modern fixtures and furniture. “This new Red Door concept aims to be the modern equivalent of a women’s club, combining uptown chic with downtown cool, all within an aspirational space,” says Pross. “The objective of the new location was to re-introduce the Elizabeth Arden brand by creating a relevant flagship beauty destination for young, professional, urban women.” The spa features two levels that cater to two very different types of clients: those who are on the go and those who have time to unwind.

Grab a Latte and Enjoy a Warm Cream Mani/Pedi

SLUFF IT OFF WITH A MID-DAY MICRO-DERM. STRESSED? BLOW IT OUT. TO PEEL OR NOT TO PEEL? GRAB A LATTE AND ENJOY A WARM CREAM MANI/PEDI. LUNCH BOX SUSHI AND A DETOX SEAWEED WRAP. WAX ON WAX OFF. ENTER YOU EXIT NEW. PAVEMENT POUNDING CURE PEDICURE. GET CHEEKY. YOU’LL HAVE THE PRETTIEST PROFILE PIC. LIVE. LOVE. LIPSTICK. JUST ANOTHER MANI MONDAY. PURE WRAPTURE. BLOW IT OUT.

FACE

RED DOOR SIGNATURE FACIAL: Our most requested treatment incorporates Miss Arden’s classic facial massage technique. Using our professionally formulated Red Door signature products expertly selected for your needs, radiance is beautifully awakened.

ELIZABETH ARDEN PRO RENEWAL: Using Elizabeth Arden PRO cosmeceutical grade products, our most active facial accelerates and maximizes results. Skin appears more even with enhanced elasticity, texture, tone and clarity for a refreshed, nourished and youthful look. Suitable for all skin types.

ULTIMATE ARDEN FACIAL: Advanced, multifaceted skincare treatment using award winning PREVAGE® anti-aging products. Includes microdermabrasion (or natural enzyme peel), face and eye collagen mask and a décolleté treatment to deliver visible results. A tranquil warm stone upper body massage and relaxing scalp massage induce deep relaxation.

NVQ Level 2 Beauty Therapy Student Copy Facials 20 Consultation

NVQ Level 2 Beauty Therapy, student copy. Facials 20: Consultation. Contents: Benefits of a facial; Why Have a Facial Treatment?

Facial Consultation. One of the most important parts of the treatment is the consultation. At this point, you will discuss the client’s needs and decide what needs to be done to meet those needs. For the client to be satisfied with the treatment, you need to know what was expected. As a therapist, you must therefore be good at encouraging clients to give you this information. To do this you need the following:
• Good communication skills: both verbal (talking) and non-verbal (body language). Examples of good body language are good posture, eye contact, smiling, and encouraging head nods.
• Good questioning techniques: these are vital to gain the information that you require to carry out the treatment.
• Open questions: this type of question begins with How? Where? When? Why? and encourages the client to give much more information. Use open questioning techniques wherever possible during the consultation and treatment.
• Closed questions: questions beginning with Do? Is? are types of closed questions. If you use these types of questions, you will only get a yes or no answer, which will lead to a one-sided conversation.
• Good observation skills: you need to be able to read your client’s body language, as it will help you to understand how your client is feeling.

The first part of the consultation is greeting the client and making them feel comfortable. It is important that you welcome the client, preferably …

Here's the DIY Facial Toner Recipe

What's in this homemade facial toner?
• Witch hazel: a natural astringent that minimizes the appearance of pores, reduces inflammation and skin irritations, decreases oil and redness, and leaves skin feeling clean and refreshed.
• Vitamin E: an antioxidant that blocks free radicals from the skin, which can help reduce wrinkles and brown (a.k.a. age or sun) spots and keep the skin youthful looking. It also supports new skin cell growth and speeds up cell regeneration (which also helps keep skin young looking).
• Essential oils: Geranium essential oil promotes healthy, clear, glowing skin. It also reduces the appearance of fine lines, while toning and beautifying skin. Cypress essential oil helps control overactive sebum glands that make our skin oily and prone to breakouts. Cypress also helps speed healing time and, due to its circulatory benefits, can help brighten and revive a dull complexion. Lavender essential oil is an all-around powerhouse for skin care. It's gentle and great for all skin types. It soothes and calms irritated skin. It also has powerful antioxidants that slow aging. And lavender improves the appearance of the complexion, reduces redness, and fights acne.

Here's the DIY Facial Toner Recipe:
• Fill a 4 oz bottle almost full with witch hazel.
• Add 1/2 tsp vitamin E oil.
• Add 3 drops each of geranium, cypress, and lavender essential oils.
• Shake to mix.

Here's how I use it (same skincare routine morning and night):
• remove my makeup using coconut oil (It might sound odd to wipe

Title 175. Oklahoma State Board of Cosmetology Rules

TITLE 175. OKLAHOMA STATE BOARD OF COSMETOLOGY RULES AND REGULATIONS

TABLE OF CONTENTS
Chapter 1. Administrative Operations
• Subchapter 1. General Provisions (175:1-1-1)
• Subchapter 3. Board Structure and Agency Administration (175:1-3-1)
• Subchapter 5. Rules of Practice (175:1-5-1)
• Subchapter 7. Board Records and Forms (175:1-7-1)
Chapter 10. Licensure of Cosmetologists and Related Establishments
• Subchapter 1. General Provisions (175:10-1-1)
• Subchapter 3. Licensure of Cosmetology Schools (175:10-3-1)
• Subchapter 5. Licensure of Cosmetology Establishments (175:10-5-1)
• Subchapter 7. Sanitation and Safety Standards for Salons and Related Establishments (175:10-9-1)
• Subchapter 9. Licensure of Cosmetologists and Related Occupations (175:10-9-1)
• Subchapter 11. License Renewal, Fees and Penalties (175:10-11-1)
• Subchapter 13. Reciprocal and Crossover Licensing (175:10-13-1)
• Subchapter 15. Inspections, Violations and Enforcement (175:10-15-1)
• Subchapter 17. Emergency Cosmetology Services (175:10-17-1)

[Source: Codified 12-31-91] [Source: Amended 7-1-93] [Source: Amended 7-1-96] [Source: Amended 7-26-99] [Source: Amended 8-19-99] [Source: Amended 8-11-00] [Source: Amended 7-1-03] [Source: Amended 7-1-04] [Source: Amended 7-1-07] [Source: Amended 7-1-09] [Source: Amended 7-1-2012]

COSMETOLOGY LAW
Cosmetology Law, Title 59 O.S. Sections 199.1 et seq.

State of Oklahoma, Oklahoma State Board of Cosmetology. I, Sherry G. Lewelling, Executive Director, and the members of the Oklahoma State Board of Cosmetology do hereby certify that the Oklahoma Cosmetology Law, Rules and Regulations printed in this revision are true and correct.

A Definitive Guide to Skin Care

Facing Forward: A Definitive Guide to Skin Care™. Reviewed by Dr. Ashley Magovern.

Table of Contents
About DermStore
Foreword
Chapter 1: How Life Affects Your Skin. 5 Most Common Skin Concerns and How to Deal With Them; Your Great Skin Checklist.
Chapter 2: Building the Best Skin Care Routine for You. Get to Know Your Skin Type; What Happens to Your Skin at Your Age; In What Order Should You Apply Your Products?; How Much of Your Products Do You Really Need?
Chapter 3: The Power of Cleansing. The Proper Way to Cleanse; The Different Types of Cleansers, Decoded; DermStore’s Top 10 Cleansers.
Chapter 4: Why You Should Never Skip Your Moisturizer. What’s In a Moisturizer?; DermStore’s Top 10 Moisturizers; Make the Most of Your Moisturizer.
Chapter 5: Sun Protection Is Half the Battle. 5 Sunscreen Myths, Debunked; Know Before You Buy: 15 Sunscreen Terms to Remember; DermStore’s Top 10 Sunscreens; 5 Sun-Safety “Rules” to Live By.
Chapter 6: Antioxidants: Your Skin’s New Best Friend. The 4 Main Benefits of Antioxidants on Your Skin; The Best Antioxidants for You; DermStore’s Top 10 Antioxidants; 4 Tips to Maximize the Benefits of Antioxidants.
Chapter 7: Exfoliation and Peels 101. What Exfoliant Should You Use?; DermStore’s Top 10 Exfoliants; The 7 Commandments of Exfoliation.
Chapter 8: The Essential Luxuries: Serums, Masks and Oils. Serums; DermStore’s Top 5 Serums; Masks; DermStore’s Top 5 Masks; Oils; DermStore’s Top 5 Oils.
Chapter 9: The Big Guns: At-Home Skin Care Devices. Deep-Cleansing Devices; Anti-Aging Devices; Glow-Enhancing Devices; DermStore’s Top 10 Skin Care Devices.
Chapter 10: Glowing From Within. 7 Nutrients Your Skin Needs; Why We Love Water; DermStore’s Top 10 Skin Care Supplements; 5 Unlikely Foods (and Drinks) That Slow Down Aging Skin.
Acknowledgements

About DermStore. DermStore launched in 1999 with the vision of creating a trusted dermatologist-backed online store that carries the finest cosmetic dermatology products.

Cleansers & Toners Protect Moisturize Special Treatment Acne

Cleansers & Toners
• Vitamin C Antioxidant Cleansing Pads, $32.00: A blend of antioxidants and citrus extracts that gently cleanses and prepares the skin in an easy-to-use pad.
• Gly/Sal 2-2 Cleanser, $20.40: This specially formulated oily skin cleanser delivers ultra pure glycolic acid and 2% salicylic acid for gently cleansing acne prone and oily skin.
• Glycolic Facial Toner, $22.00: A refreshing toner that contains glycolic acid and witch hazel to degrease the skin, as well as chamomile and comfrey to cool and calm the skin.

Protect
• Sunscreen SPF 30, $39.00: This excellent sunscreen provides 17% Micronized Zinc and liposome encapsulated antioxidants in a moisturizing formula offering the highest level of broad-spectrum protection.
• Sunscreen SPF 30, $39.00: This elegant sunscreen delivers broad-spectrum protection as well as antioxidants for a complete sunscreen solution for all skin types.
• Advanced Anti-Photoaging Sunscreen SPF 45, $43.75: This sunscreen combines broad-spectrum UVA/UVB protection and a unique antioxidant blend to neutralize free-radical skin damage.

Special Treatment
• Green Tea Exfoliation Scrub, $48.75: This scrub, fortified with green and white tea, delivers a soothing wave of heat to open pores and exfoliates the skin.
• Vitamin C Antioxidant Clay Mint Mask, $32.00: This mask provides an effective way to clean and tighten pores while absorbing excess oils and helping to dry up blackheads.

Pigmentation
• Skin Tone Enhancement Therapy, 2% $54.50 - 4% $63.00 - 6% $71.50: This skin tone enhancement kit contains botanical skin lighteners including Kojic acid, Arbutin and Bearberry in an alcohol …

Cosmetology (CSME) 1

COSMETOLOGY (CSME)

CSME 1202. APPLICATIONS FOR FACIAL AND SKIN CARE TECHNOLOGY (LECTURE 1, LAB 2). CREDIT 2. WECM. Introduction to the application of facial and skin care technology. Includes identifying and utilizing professional skin care products.

CSME 1244. INTRODUCTION TO SALON DEVELOPMENT (LECTURE 1, LAB 3). CREDIT 2. WECM. Develop procedures for appointment scheduling and record management. Identify issues related to inventory control and operational management.

CSME 1302. APPLICATIONS OF FACIAL AND SKIN CARE TECHNOLOGY I (LECTURE 2, LAB 3). CREDIT 3. WECM. Explain the benefits of professional skin care products. Apply and recommend professional skin care products to industry standards.

CSME 1308. ORIENTATION OF EYELASH EXTENSIONS (LECTURE 3, LAB 1). CREDIT 3. WECM. This course provides the student with the practical skills necessary to …

CSME 1420. ORIENTATION TO FACIAL SPECIALIST (LECTURE 2, LAB 8). CREDIT 4. WECM. This course is an overview of the skills and knowledge necessary for the field of facials and skin care. Instruction will demonstrate the theory, skills, safety and sanitation, and professional ethics of basic facials and skin care and explain the rules and regulations of the institution, department and state. This course is offered for Esthetic Specialty only.

CSME 1435. ORIENTATION TO THE INSTRUCTION OF COSMETOLOGY (LECTURE 2, LAB 5). CREDIT 4. WECM. This course presents an overview of skills and knowledge necessary for the instruction of cosmetology students, including methods and techniques of teaching skills, theory of teaching basic unit planning and daily skill lesson plan development. Must have a valid Texas Cosmetology Operator License.

CSME 1443. MANICURING AND RELATED THEORY (LECTURE 2, LAB 8). CREDIT 4. WECM. This course is a presentation of the theory and practice of nail technology.

Salons-Feature.Pdf

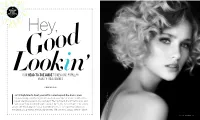

The Transformation Issue. Hey, Good Lookin’: Our head-to-toe guide to new and popular beauty treatments. By Mary Kate Hogan. Greenwich, January 2017. Model by iStockphoto.com/©coffeeandmilk.

Isn’t it high time to treat yourself to some beyond-the-basics care: a rejuvenating, volumizing facial to perk up your skin or a luxe treatment to repair overprocessed locks, perhaps? The top talent at our town’s spas and salons can help you bring your beauty A-game, but which treatments should you book? We indulged in a few and talked to the pros to get their advice on the latest and greatest, the beauty secrets of those who always seem to shine.

The Spa at Delamar. Celebs swear by detoxing treatments, and now you can indulge in a DETOX BODY WRAP at The Spa at Delamar. The ninety-minute treatment combines … control. The aesthetician selects a blend of essential oils tailored to your body’s and skin’s needs. For overall skin toning and firming, the DELUXE BODY LIFT is a facial for the body that involves exfoliation and micro-current therapy for toning and skin tightening. … masque followed by a hot stone massage. The aestheticians are trained in reflexology methods and apply pressure to appropriate points on your feet and/or hands to promote energy. The lounge’s four chairs are separated by long white drapes that close to …

Glowing Skin. … serums from Biologique Recherche. … provide the essential nutrients needed for their skin to glow,” says Michelle Baizer Cooper, … During the procedure, special serums are …

Signature Treatment Massage and Facial Manicure and Pedicure in Your Element…

WITH THE PURSUIT OF GOOD HEALTH, NUTRITION AND WELLNESS AT ITS CORE, ELEMENTAL HERBOLOGY SKINCARE PRODUCTS ARE PACKED WITH NUTRIENTS TO HELP YOU REDISCOVER YOUR RADIANCE. START YOUR JOURNEY TODAY THROUGH THE FIVE ELEMENTS, ACHIEVING PERFECT HARMONY AND EQUILIBRIUM.

EMPOWER WITH EARTH. Hit the reset button to balance and centre the mind with Earth Balance. Those who are always giving to others and never take time for themselves will love this calming oil.

BE WORRY-FREE WITH WATER. Pressures from everyday life can leave you stressed, worried and unable to sleep. The comforting properties of Water Soothe will nurture, emotionally strengthen and deeply relax for a calm mind and a good night’s sleep.

METAL TO HELP MELLOW. You’re organised, structured and have everything planned far in advance. Metal Detox will help you declutter your thoughts and purify a tired body and mind while helping to eliminate toxins.

FUEL UP WITH FIRE. Always feeling tired and drained? Fire Zest will revitalise your whole body and increase energy levels throughout the day.

A WORKAHOLIC NEEDS WOOD. Always on the go and have an active lifestyle? Your tired and aching muscles need Wood Rejuvenation oils to relieve aches and pains and refresh the mind so you’re ready for the gym the next day!

SIGNATURE TREATMENT

THE GOODWOOD SEASON (90 MINUTE). Following the seasons and events across the Goodwood Estate, our signature treatment reflects the changes of the beautiful countryside. Incorporating deep cleansing, an intensive face and décolleté massage and nourishment, this treatment replenishes your skin using healing botanicals, vitamins and marine extracts.

A Study of Gatsby Face Wash Products in the DKI Jakarta Area)

Jurnal Ekonomi, Volume 20, Number 1, February 2018. Copyright @ 2018, by the Postgraduate Program, Universitas Borobudur.

The Effect of Brand Image and Brand Loyalty on Brand Extension (A Study of Gatsby Face Wash Products in the DKI Jakarta Area). By: Putra Adi Cipta, Handono Ishardyatmo, Djoharsjah Mx. Master of Pharmaceutical Science (concentration: Pharmaceutical Business), Faculty of Pharmacy, Universitas Pancasila. [email protected]

ABSTRACT. Awareness of the importance of facial skin care is found not only among women but also among men, sometimes known as metrosexual men. PT Mandom Indonesia Tbk is one of the pioneers of the men's cosmetics industry with its Gatsby brand. Gatsby became a top brand with its Gatsby hair oil products and extended the brand by launching Gatsby face wash, but Gatsby face wash was unable to match the success of Gatsby hair oil. This study aims to determine the effect of brand image and brand loyalty on the extension of the brand to Gatsby face wash in the Jakarta area, using 750 respondents. The results were analyzed using descriptive methods, correlation, and structural equation modeling (SEM). The results indicate that customers hold a good image of the Gatsby brand and that consumers are loyal to it; that brand image and brand loyalty strongly influence Gatsby face wash products; and that brand image and brand loyalty influence brand extension to Gatsby face wash products. However, some indicators weaken the strength of each variable.

Keywords: brand image, brand loyalty, brand extension, Gatsby face wash

INTRODUCTION. One of the cosmetics industries that is growing quite rapidly … Mandom Indonesia Tbk is a company that …

Individualized Daily Skin Care Guide

INDIVIDUALIZED DAILY SKIN CARE GUIDE

Nu Skin® products are designed to work with various skin types, regardless of age, to promote vibrant looking skin. Accurately determining your skin type, taking into account its specific daily and seasonal needs, will help you select the right Nu Skin® products to achieve and maintain optimal skin health.

IDENTIFY YOUR SKIN TYPE
• Three hours after cleansing, do you notice any oiliness? Dry: Never. Normal: Seldom. Combination: Most of the time. Oily: Always.
• Where? Combination: Mostly in T-zone*. Oily: All over.
• How does your skin look? Dry: Flaky, parched. Normal: Slightly dry, pores not visible. Combination: Shiny, visible pores in T-zone*. Oily: Oily, noticeable pores.
• How does your skin feel? Dry: Tight. Normal: Comfortable to slightly dry. Combination: Oily in T-zone,* slightly dry on cheeks. Oily: Oily.
• What seasonal change does your skin experience? Dry: Needs extra help. Normal: Needs extra care in winter. Combination: Needs occasional boost. Oily: Needs occasional help in heat/humidity.

The column containing the most checks indicates your skin type. Refer to the chart below for products designed to meet your skin care needs.
* T-zone is the forehead, nose, and chin area.

SKIN HEALTH MAINTENANCE (DAILY)
• Cleanse. Dry to Normal: Creamy Cleansing Lotion. Combination to Oily: Pure Cleansing Gel.
• Tone. Dry to Normal: pH Balance Toner. Combination to Oily: pH Balance Mattefying Toner.
• Nourish. Dry to Normal: Celltrex® Ultra Recovery Fluid. Combination to Oily: Celltrex® Ultra Recovery Fluid.
• Hydrate and Protect (a.m.). Dry to Normal: Moisture Restore™ Day Protective Lotion SPF 15 Normal to Dry Skin. Combination to Oily: Moisture Restore™ Day Protective Lotion SPF 15 Combination to Oily Skin.
• Hydrate (p.m.). Dry to Normal: Night Supply™ Nourishing Cream. Combination to Oily: Night Supply™ Nourishing Lotion.
• Hydrate (eyes). Dry to Normal: Intensive Eye Complex. Combination to Oily: Intensive Eye Complex.

PAMPER YOURSELF
To complement your daily skin care regimen, identify your skin concerns and add the appropriate products.