Course Logistics

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Toys and Action Figures in Stock

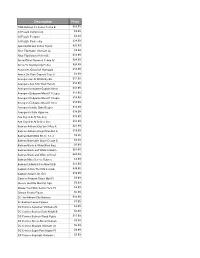

| Description | Price |
| --- | --- |
| 1966 Batman Tv Series To the B | $29.99 |
| 3d Puzzle Dump truck | $9.99 |
| 3d Puzzle Penguin | $4.49 |
| 3d Puzzle Pirate ship | $24.99 |
| Ajani Goldmane Action Figure | $26.99 |
| Alice Ttlg Hatter Vinimate (C: | $4.99 |
| Alice Ttlg Select Af Asst (C: | $14.99 |
| Arrow Oliver Queen & Totem Af | $24.99 |
| Arrow Tv Starling City Police | $24.99 |
| Assassins Creed S1 Hornigold | $18.99 |
| Attack On Titan Capsule Toys S | $3.99 |
| Avengers 6in Af W/Infinity Sto | $12.99 |
| Avengers Aou 12in Titan Hero C | $14.99 |
| Avengers Endgame Captain Ameri | $34.99 |
| Avengers Endgame Mea-011 Capta | $14.99 |
| Avengers Endgame Mea-011 Capta | $14.99 |
| Avengers Endgame Mea-011 Iron | $14.99 |
| Avengers Infinite Grim Reaper | $14.99 |
| Avengers Infinite Hyperion | $14.99 |
| Axe Cop 4-In Af Axe Cop | $15.99 |
| Axe Cop 4-In Af Dr Doo Doo | $12.99 |
| Batman Arkham City Ser 3 Ras A | $21.99 |
| Batman Arkham Knight Man Bat A | $19.99 |
| Batman Batmobile Kit (C: 1-1-3 | $9.95 |
| Batman Batmobile Super Dough D | $8.99 |
| Batman Black & White Blind Bag | $5.99 |
| Batman Black and White Af Batm | $24.99 |
| Batman Black and White Af Hush | $24.99 |
| Batman Mixed Loose Figures | $3.99 |
| Batman Unlimited 6-In New 52 B | $23.99 |
| Captain Action Thor Dlx Costum | $39.95 |
| Captain Action's Dr. Evil | $19.99 |
| Cartoon Network Titans Mini Fi | $5.99 |
| Classic Godzilla Mini Fig 24pc | $5.99 |
| Create Your Own Comic Hero Px | $4.99 |
| Creepy Freaks Figure | $0.99 |
| DC 4in Arkham City Batman | $14.99 |
| Dc Batman Loose Figures | $7.99 |
| DC Comics Aquaman Vinimate (C: | $6.99 |
| DC Comics Batman Dark Knight B | $6.99 |
| DC Comics Batman Wood Figure | $11.99 |
| DC Comics Green Arrow Vinimate | $9.99 |
| DC Comics Shazam Vinimate (C: | $6.99 |
| DC Comics Super - | |

Released 1st June 2016 DARK HORSE COMICS APR160074

Released 1st June 2016

DARK HORSE COMICS

- APR160074 BALTIMORE EMPTY GRAVES #3
- FEB160029 BUFFY HIGH SCHOOL YEARS FREAKS & GEEKS TP
- JAN160132 DEATH HEAD TP
- FEB160056 GOON LIBRARY HC VOL 03
- APR160061 HELLBOY IN HELL #10
- FEB160025 PLANTS VS ZOMBIES GROWN SWEET HOME HC
- APR160060 PREDATOR LIFE AND DEATH #4
- JAN160175 RED VIRGIN & VISION OF UTOPIA HC

DC COMICS

- FEB160254 ART OPS TP VOL 01 (MR)
- FEB168675 BATMAN BEYOND #13
- APR160353 BATMAN EUROPA DIRECTORS CUT #1
- APR160284 BATMAN REBIRTH #1
- APR160328 BLOODLINES #3
- APR160364 DC COMICS BOMBSHELLS #14
- APR160334 DOCTOR FATE #13
- APR160304 GREEN ARROW REBIRTH #1
- APR160300 GREEN LANTERNS REBIRTH #1
- APR160366 INJUSTICE GODS AMONG US YEAR FIVE #11
- MAR160294 JACKED TP (MR)
- MAR160292 LAST GANG IN TOWN #6 (MR)
- MAR160278 LEGENDS 30TH ANNIVERSARY EDITION TP
- MAR160274 PREZ THE FIRST TEENAGE PRESIDENT TP
- APR160276 SUPERMAN REBIRTH #1
- APR160345 SUPERMAN THE COMING OF THE SUPERMEN #5
- APR160429 SURVIVORS CLUB #9 (MR)
- APR160369 TEEN TITANS GO #16
- APR160430 UNFOLLOW #8 (MR)
- MAR160277 WONDER WOMAN 77 TP VOL 01

IDW PUBLISHING

- APR160578 AMAZING FOREST #6
- APR160627 ANGRY BIRDS COMICS (2016) #6
- MAR160473 ANGRY BIRDS THE ART OF THE ANGRY BIRDS MOVIE HC
- JAN160391 BACK TO THE FUTURE ADV THROUGH TIME BOARD GAME
- MAR160337 BACK TO THE FUTURE TP UNTOLD TALES & ALT TIMELINES
- MAR160338 BACK TO THE FUTURE TP UNTOLD TALES & ALT TIMELINES DIRECT MA
- APR160557 DUNGEONS & DRAGONS LEGEND OF DRIZZT TP VOL 04 CRYSTAL SHARD
- APR160496 GHOSTBUSTERS NEW GHOSTBUSTERS #1 IDW GREATEST HITS ED
- APR160492 GHOSTBUSTERS THE NEW GHOSTBUSTERS TP
- APR160516 -

Ghostbusters: Get Real #3

Part 3: The Hound of the Busters

written by ERIK BURNHAM
art by DAN SCHOENING
colors by LUIS ANTONIO DELGADO
letters by NEIL UYETAKE
edits by TOM WALTZ

REGULAR COVER: ART BY DAN SCHOENING, COLORS BY LUIS ANTONIO DELGADO
SUBSCRIPTION COVER: ART BY JOE QUINONES
RETAILER EXCLUSIVE COVER: ART BY CHRIS UMINGA, for Merrymac Games and Comics in Merrimack, NH

Ted Adams, CEO & Publisher
Greg Goldstein, President & COO
Robbie Robbins, EVP/Sr. Graphic Artist
Chris Ryall, Chief Creative Officer/Editor-in-Chief
Matthew Ruzicka, CPA, Chief Financial Officer
Alan Payne, VP of Sales
Dirk Wood, VP of Marketing
Lorelei Bunjes, VP of Digital Services
Jeff Webber, VP of Digital Publishing & Business Development

Facebook: facebook.com/idwpublishing
Twitter: @idwpublishing
YouTube: youtube.com/idwpublishing
Instagram: instagram.com/idwpublishing
deviantART: idwpublishing.deviantart.com
Pinterest: pinterest.com/idwpublishing/idw-staff-faves
www.IDWPUBLISHING.com

IDW founded by Ted Adams, Alex Garner, Kris Oprisko, and Robbie Robbins

GHOSTBUSTERS: GET REAL #3. AUGUST 2015. FIRST PRINTING. Ghostbusters ™ & © 2015 Columbia Pictures, Inc. All rights reserved. IDW Publishing, a division of Idea and Design Works, LLC. Editorial offices: 2765 Truxtun Road, San Diego, CA 92106. The IDW logo is registered in the U.S. Patent and Trademark Office. Any similarities to persons living or dead are purely coincidental. With the exception of artwork used for review purposes, none of the contents of this publication may be reprinted without the permission of Idea and Design Works, LLC. Printed in Korea. IDW Publishing does not read or accept unsolicited submissions of ideas, stories, or artwork. -

This January... Novel Ideas

ILLUMINATIONS #338, NOV 2016

THIS JANUARY... ANGEL - SEASON 11, KAMANDI CHALLENGE, HELLBOY - WINTER SPECIAL, PSYCHDRAMA ILLUSTRATED, SHERLOCK: BLIND BANKER, NOVEL IDEAS AND MORE!

Cover: Deadpool The Duck #1 (Marvel)

CONTENTS:

- PAGE 03... New Series and One-Shots for January: Dark Horse
- PAGE 04... New Series and One-Shots for January: DC Comics
- PAGE 05... New Series and One-Shots for January: DC Comics
- PAGE 06... New Series and One-Shots for January: IDW Publishing
- PAGE 07... New Series and One-Shots for January: Image Comics
- PAGE 08... New Series and One-Shots for January: Marvel Comics
- PAGE 09... New Series and One-Shots for January: Indies
- PAGE 10... Novel Ideas - Part One
- PAGE 11... Novel Ideas - Part Two
- PAGE 12... Graphic Novel Top 20: October’s Bestselling Books

SIGN UP FOR THE ACE COMICS MAILOUT AND KEEP UP TO DATE WITH THE LATEST RELEASES, SUBSCRIPTIONS, CHARTS, ILLUMINATIONS, EVENTS AND MORE! acecomics.co.uk

For the complete catalogue of new releases visit previews.com/catalog

DARK HORSE: NEW SERIES AND ONE-SHOTS FOR JANUARY

LOBSTER JOHNSON: GARDEN OF BONES (ONE-SHOT)
Mignola, Arcudi, Green, Zonjic
When an undead hit man goes after the NYPD, the Lobster steps in to figure out if it’s a zombie, or something worse.
In Shops: 11/01/2017

ANGEL - SEASON 11 #1
Bechko, Borges, Fischer
Vampire Angel is tormented by a vision linking his shameful past to something very big, and very bad, that is coming. The goddess Illyria gives Angel some insight and incentive. Then she really gets involved, and Angel discovers that it might be possible to change the future by changing the past. -

Ghostbusters: 1000 Sticker Book Free Download

GHOSTBUSTERS: 1000 STICKER BOOK FREE DOWNLOAD none | 32 pages | 30 Jun 2016 | Centum Books | 9781910916575 | English | Torquay, United Kingdom Ghostbusters II (sticker book) Previous Next Showing 1 - of unique designs. Your order is now being processed and we have sent a confirmation email to you at. Subject to credit Ghostbusters: 1000 Sticker Book. Sticker By DanielPalermo. Slime Time Sticker By Izzy. Sticker By GBNews. Remember me? Tags: slimer, slima, ghostbusters, ghost, ghosts, green, pizza, eat, eating, supernatural, bill murray, new york, new york city, gozer, blob, creature, reitman, cool, geek, cartoon, humor, fun, funny. Leave feedback about your eBay search experience - opens in new window or tab. Window Items Shop Our Brands. Mirror 85 Items Zambia Zimbabwe There are 0 items available. Please enter a number less than or equal to 0. Tags: slimer, ghostbusters, ghostmashers, spirits, cartoon, funny, adventure, comedy, kids, 80s, 90s, tv, series, ugly, scary, horror, ghost, buster, episode, entertainment, fight, action, anime, anger, mad, deadly, vector, cut, grunge, noise, dirt, vintage, effect, staind, stain, monster, arrested. If you need immediate assistance, please contact Customer Care. Tags: slimer, ghostbusters, ghost. Silver 3 Items 3. Out of stock. Tags: ghostbusters, slimer, ghost, ecto, plasm, ghostly, dead, 80s, comedy. Tags: ghostbusters, who you gonna call, ghostbusters movie, ghost, ghosts, slimer, ghostbusters afterlife, afterlife, movie, bill murray, murray, 80s, classic, text, monsters. Sticker Dolly Dressing Weddings. Mobile apps. Business seller information. Tags: ghostbusters, slimer, s, sci fi. Slimer Sticker By indeepshirt. Cartoon 13 Items Free Return Exchange or money back guarantee for all orders. We're committed to providing low prices every Ghostbusters: 1000 Sticker Book, on everything. -


Arxiv:2010.00155V3 [Math.DS] 19 Jul 2021 Statistics of a Family Of

Statistics of a Family of Piecewise Linear Maps

J. J. P. Veerman,∗ P. J. Oberly,† L. S. Fox‡

July 21, 2021

Abstract

We study statistical properties of a family of piecewise linear monotone circle maps $f_t(x)$ related to the angle doubling map $x \to 2x \bmod 1$. In particular, we investigate whether, for large $n$, the deviations $\sum_{i=0}^{n-1} \left( f_t^i(x_0) - \frac{1}{2} \right)$ upon rescaling satisfy a Q-Gaussian distribution if $x_0$ and $t$ are both independently and uniformly distributed on the unit circle. This was motivated by the fact that if $f_t$ is the rotation by $t$, then it was recently found that in this case the rescaled deviations are distributed as a Q-Gaussian with $Q = 2$ (a Cauchy distribution). This is the only case where a non-trivial (i.e. $Q \neq 1$) Q-Gaussian has been analytically established in a conservative dynamical system. In this note, however, we prove that for the family considered here, $\lim_n S_n/n$ converges to a random variable with a curious distribution which is clearly not a Q-Gaussian or any other standard smooth distribution.

1 Introduction

In this note, we study statistical properties of the family of truncated flat spot maps $f_t$, defined as follows. One starts with $x \mapsto 2x$ and truncates horizontally to obtain a “flat spot circle map” as illustrated in Figure 1 and given by

$$
f_t(x) =
\begin{cases}
t & x \in \left[0, \tfrac{t}{2}\right] \\
2x \bmod 1 & x \in \left[\tfrac{t}{2}, \tfrac{1+t}{2}\right] \\
t & x \in \left[\tfrac{1+t}{2}, 1\right)
\end{cases}
$$

In particular, we wish to study the quantity

$$
S_n(t, x_0) := \sum_{i=0}^{n-1} \left( f_t^i(x_0) - \frac{1}{2} \right).
$$

-
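As a rough numerical illustration of the excerpt above, the sketch below iterates a flat spot map and accumulates the deviation sum. It is a minimal sketch under stated assumptions, not the authors' code: it assumes the map takes the value t on both flat pieces and equals 2x mod 1 in between, and that the sum runs over i = 0, ..., n-1 with the zeroth iterate equal to x0. The names `flat_spot_map` and `deviation_sum` are hypothetical. Exact rationals are used because repeated doubling in floating point loses one bit of precision per step.

```python
from fractions import Fraction


def flat_spot_map(t, x):
    """Assumed form of f_t on [0, 1): the constant t on the two flat
    pieces, and the doubling map 2x mod 1 on the middle interval."""
    if x <= t / 2 or x >= (1 + t) / 2:
        return t
    return (2 * x) % 1


def deviation_sum(t, x0, n):
    """Sum of (f_t^i(x0) - 1/2) for i = 0, ..., n-1 (hypothetical helper)."""
    total = 0
    x = x0
    for _ in range(n):
        total += x - Fraction(1, 2)  # deviation of the current iterate from 1/2
        x = flat_spot_map(t, x)      # advance the orbit by one step
    return total


if __name__ == "__main__":
    t, x0 = Fraction(1, 2), Fraction(1, 10)
    for n in (1, 2, 3, 10):
        print(n, deviation_sum(t, x0, n))
```

Passing `Fraction` values keeps the orbit exact; floats also work for short orbits, at the cost of the precision loss noted above.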

Ghostbusters: Volume 1 Free

FREE GHOSTBUSTERS: VOLUME 1 PDF Erik Burnham,Tristan Jones,Dan Schoening | 104 pages | 20 Mar 2012 | Idea & Design Works | 9781613771570 | English | San Diego, United States IDW Publishing Comics- Ghostbusters 1 - Ghostbusters Wiki - "The Compendium of Ghostbusting" Goodreads helps you keep track of books you want to read. Want to Read saving…. Want to Read Currently Reading Read. Ghostbusters: Volume 1 editions. Enlarge cover. Error rating book. Refresh and try again. Open Preview See a Problem? Details if other :. Thanks for Ghostbusters: Volume 1 us about the problem. Return to Book Page. Preview — Ghostbusters, Volume 1 by Erik Burnham. Dan Schoening illustrator. Are Ghostbusters: Volume 1 troubled by strange noises in the middle of the night? Do you experience feelings of dread Ghostbusters: Volume 1 your basement Ghostbusters: Volume 1 attic? Have you or any of your family ever seen a spook, specter, or ghost? If the answer is yes, call the professionals Psychokinetic energy is on the rise Ghostbusters: Volume 1, business is booming for the boys, and Ray is troubled by what could be a prophetic dream. Is Are you troubled by strange noises in the middle of the night? Is this an ill omen of an upcoming apocaplypse, or just a little indigestion? Collects issues Get A Copy. Paperbackpages. More Details Original Title. Other Editions 4. Friend Reviews. To see what your friends thought of this book, please sign up. To ask other readers questions about Ghostbusters, Volume 1please sign up. See 1 question about Ghostbusters, Volume 1…. Lists with This Book. Community Reviews. Showing Average rating 3. -

The X-Files Intersects with Ghostbusters, Teenage Mutant Ninja Turtles, the Transformers, and the Crow!

CONSPIRACY PART 1: THE X-FILES
CONSPIRACY PART 2: GHOSTBUSTERS
CONSPIRACY PART 3: TEENAGE MUTANT NINJA TURTLES
CONSPIRACY PART 4: THE TRANSFORMERS
CONSPIRACY PART 5: THE CROW
CONSPIRACY PART 6: THE X-FILES

The Lone Gunmen receive an email with disturbing reports, apparently from the future, that detail a lethal virus outbreak. After the stories are corroborated by FBI agents Fox Mulder and Dana Scully, the Gunmen must investigate the various urban legends mentioned in the documents in order to save all of humanity…

www.idwpublishing.com • $24.99

Special thanks to Joshua Izzo and Lauren Winarski at Twentieth Century Fox, Gabe Rotter at Ten Thirteen Productions, Joan Hilty, Linda Lee, and Kat van Dam at Nickelodeon, Hasbro’s Clint Chapman, Joe Furfaro, Heather Hopkins, Jerry Jivoin, Joshua Lamb, Ed Lane, Mark Weber, and Michael Kelly, and Edward R. Pressman, Jon Katz, and Melissa Glassman for their invaluable assistance.

IDW founded by Ted Adams, Alex Garner, Kris Oprisko, and Robbie Robbins

ISBN: 978-1-61377-896-8
17 16 15 14 1 2 3 4

Ted Adams, CEO & Publisher
Greg Goldstein, President & COO
Robbie Robbins, EVP/Sr. Graphic Artist
Chris Ryall, Chief Creative Officer/Editor-in-Chief
Matthew Ruzicka, CPA, Chief Financial Officer
Alan Payne, VP of Sales
Dirk Wood, VP of Marketing
Lorelei Bunjes, VP of Digital Services
Jeff Webber, VP of Digital Publishing & Business Development

Facebook: facebook.com/idwpublishing
Twitter: @idwpublishing
YouTube: youtube.com/idwpublishing
Instagram: instagram.com/idwpublishing
deviantART: idwpublishing.deviantart.com
Pinterest: pinterest.com/idwpublishing/idw-staff-faves
www.IDWPUBLISHING.com

THE X-FILES: CONSPIRACY. -

Mcwilliams Ku 0099D 16650

‘Yes, But What Have You Done for Me Lately?’: Intersections of Intellectual Property, Work-for-Hire, and The Struggle of the Creative Precariat in the American Comic Book Industry

© 2019 By Ora Charles McWilliams

Submitted to the graduate degree program in American Studies and the Graduate Faculty of the University of Kansas in partial fulfillment of the requirements for the degree of Doctor of Philosophy.

Co-Chair: Ben Chappell
Co-Chair: Elizabeth Esch
Henry Bial
Germaine Halegoua
Joo Ok Kim

Date Defended: 10 May, 2019

The dissertation committee for Ora Charles McWilliams certifies that this is the approved version of the following dissertation: ‘Yes, But What Have You Done for Me Lately?’: Intersections of Intellectual Property, Work-for-Hire, and The Struggle of the Creative Precariat in the American Comic Book Industry

Co-Chair: Ben Chappell
Co-Chair: Elizabeth Esch

Date Approved: 24 May 2019

Abstract

The comic book industry has significant challenges with intellectual property rights. Comic books have rarely been treated as a serious art form or cultural phenomenon. It used to be that creating a comic book would be considered shameful or something done only as side work. Beginning in the 1990s, some comic creators were able to leverage enough cultural capital to influence more media. In the post-9/11 world, generic elements of superheroes began to resonate with audiences; superheroes fight against injustices and are able to confront the evils in today’s America. This has created a billion dollar, Oscar-award-winning industry of superhero movies, as well as allowed created comic book careers for artists and writers. -

To Download/View the Ghostbusters Pinball Strategy Guide (Pdf)

GHOSTBUSTERS PRO Basic Strategy Guide V01.00.03.10.16 by Dwight Sullivan

The Ghostbusters pinball machine is based on the original smash hit movie from 1984. In this game the player gets to join the team to help them clean up the town. The game mostly features events from the first movie and some elements from the sequel.

SKILL SHOT

At the beginning of each ball, you will have a set of Skill Shots to choose from. Using your flipper buttons you can choose what you would like to go for. You will have nine distinctly different choices. Some examples are:

• Start a Scene
• Light Playfield Multipliers
• Start P.K.E. Frenzy
• Start Negative Reinforcement on E.S.P. Ability

There are nine areas in the game that can be lit for an award. While the Skill Shot is active, two of the nine will be selected and flashing. The two selected will be one of the top three lanes and one of the six playfield shots below. The selected playfield shot will be marked by a flashing blue arrow. The Skill Shots will stay active until you shoot any of the nine. If you succeed at completing one, you will receive 10% of your score +1M points, plus whichever extra award you chose. Once the ball is plunged you will no longer be able to change your selection.

SLIMER

Slimer is one of the major interactive toys in the game. At the beginning of the game he stays out of the way. When the game begins all of the G-H-O-S-T letters will be flashing. -

Quincy Branch Library Friends of the Library Newsletter

Quincy Branch Library Friends of the Library Newsletter

11 N. Main Street, Quincy, MI 49082
639.4001
Email: [email protected]
www.branchdistrictlibrary.org/quincy

Library Hours: Sun Closed, Mon 12-5, Tues 8:30-5, Wed 10-5, Thurs 10-6, Fri 8:30-5, Sat 9-12

Spring 2018, Volume 36; Number 2

Remember - We are now open on Monday's from 12-5

Inside this issue: Manager's Message 1; Upcoming [...] 2; Bestselle[...] 3; Did You [...] 3; Memorials & Donation[...] 4; For your information 5

Branch Manager's Message

A LOT has been happening at the Quincy Branch in recent months! At the end of January, Mulder's Moving & Storage emptied out the main level of the building and kept our belongings in a climate controlled house for several weeks. Mulder's Moving & Storage have assisted many libraries in their [...] years of service and they returned every shelf and book to its original place.

Beck Construction organized two plaster men, additional men to repair other cracks that had occurred from water/roof damage, painters to spruce the place up AND the Quincy Township board voted to replace the 1995 carpeting while all the shelving was taken out. We operated the library out of the lower level for three [...] access, fax, lamination and [...] available [...]

The Branch District Library system added an additional day of deliveries during the week between our six libraries, and that has actually remained after we opened back up on our main level. We now [...] liveries Monday, [...] and Friday to serve [...]

Lisa Wood, Branch Manager -

Magazines V17N9.Qxd

ANIMATION TA

ROBOT CHICKEN ACTION FIGURES

Action figures find new life as players in frenetic sketch-comedy vignettes that skewer TV, movies, music and celebrity as old-school stop-motion animation and fast-paced satire combine in Robot Chicken, an eclectic show created by Seth Green and Matt Senreich. Fans of the [adult swim] series will want to collect these action figures based on the series, including Action Robot (6” tall), Candy Bear (5” tall), and the Mad Scientist with Robot Chicken (6” and 3 1/2” tall respectively). Or they can 10” tall Electronic Robot Chicken for more mechanical mayhem! Scheduled to ship in December 2010. (6848) (C: 1-1-3) NOTE: Available only in the United States, Canada, and U.S. Territories.

ACTION FIGURES (14000): Figure ... PI
10” ELECTRONIC ROBOT CHICKEN (14010): Figure ... PI

FUTURAMA SERIES 9 ACTION FIGURES

Good news, everyone! Matt Groening’s Futurama has returned to television screens everywhere, and Toynami celebrates the return of our favorite 31st-century heroes with two all-new figures from the cult favorite series! Choose from URL, the robotic policeman, or Wooden Bender, from the 4th season episode “Obsoletely Fabulous.” Each figure stands 6” tall. Window box packaging. (4522/5010) (C: 1-1-3) NOTE: Available only in the United States, Canada, and U.S. Territories.

Figure ... $13.99