Control of Stochastic Discrete Event Systems Modeled by Probabilistic Languages 1

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Proofs in Elementary Analysis by Branko Ćurgus January 15, 2016

Proofs in Elementary Analysis by Branko Ćurgus January 15, 2016 Contents Chapter 1. Introductory Material 5 1.1. Goals 5 1.2. Strategies 5 1.3. Mathematics and logic 6 1.4. Proofs 8 1.5. Four basic ingredients of a Proof 10 Chapter 2. Sets and functions 11 2.1. Sets 11 2.2. Functions 14 2.3. Cardinality of sets 17 Chapter 3. The Set R of real numbers 19 3.1. Axioms of a field 19 3.2. Axioms of order in a field 21 3.3. Three functions: the unit step, the sign and the absolute value 23 3.4. Intervals 25 3.5. Bounded sets. Minimum and Maximum 28 3.6. The set N 29 3.7. Examples and Exercises related to N 32 3.8. Finite sets, infinite sets, countable sets 34 3.9. More on countable sets 37 3.10. The sets Z and Q 41 3.11. The Completeness axiom 42 3.12. More on the sets N, Z and Q 45 3.13. Infimum and supremum 47 3.14. The topology of R 49 3.15. The topology of R^2 51 Chapter 4. Sequences in R 53 4.1. Definitions and examples 53 4.2. Bounded sequences 54 4.3. The definition of a convergent sequence 55 4.4. Finding N(ε) for a convergent sequence 57 4.5. Two standard sequences 60 4.6. Non-convergent sequences 61 4.7. Convergence and boundedness 61 4.8. Algebra of limits of convergent sequences 61 4.9. Convergent sequences and the order in R 62 4.10. The monotonic convergence theorem 63 4.11. -

A Set Optimization Approach to Utility Maximization Under Transaction Costs

A set optimization approach to utility maximization under transaction costs Andreas H. Hamel, Sophie Qingzhen Wang Abstract A set optimization approach to multi-utility maximization is presented, and duality results are obtained for discrete market models with proportional transaction costs. The novel approach admits to obtain results for non-complete preferences, where the formulas derived closely resemble but generalize the scalar case. Keywords. utility maximization, non-complete preference, multi-utility representation, set optimization, duality theory, transaction costs JEL classification. C61, G11 1 Introduction In this note, we propose a set-valued approach to utility maximization for market models with transaction costs. For finite probability spaces and a one-period set-up, we derive results which resemble very closely the scalar case as discussed in [4, Theorem 3.2.1]. This is far beyond other approaches in which only scalar utility functions are used as, for example, in [1, 3], where a complete preference for multivariate position is assumed. As far as we are aware of, there is no argument justifying such strong assumption, and it does not seem appropriate for market models with transaction costs. On the other hand, recent results on multi-utility representations as given, among others, in [5] lead to the question how to formulate and solve an "expected multi-utility" maximization problem. The following optimistic goal formulated by Bosi and Herden in [2] does not seem achievable since, in particular, there is no satisfactory multi-objective duality which matches the power of the scalar version: 'Moreover, as it reduces finding the maximal elements in a given subset of X with respect to to a multi-objective optimization problem (cf. -

Supremum and Infimum of Set of Real Numbers

ISSN (Online): 2319-8753 ISSN (Print): 2347-6710 International Journal of Innovative Research in Science, Engineering and Technology (An ISO 3297: 2007 Certified Organization) Vol. 4, Issue 9, September 2015 Supremum and Infimum of Set of Real Numbers M. Somasundari, K. Janaki, Department of Mathematics, Bharath University, Chennai, Tamil Nadu, India ABSTRACT: In this paper, we study supremum and infimum of set of real numbers. KEYWORDS: Upper bound, Lower bound, Largest member, Smallest number, Least upper bound, Greatest Lower bound. I. INTRODUCTION Let S denote a set (a collection of objects). The notation x ∈ S means that the object x is in the set S, and we write x ∉ S to indicate that x is not in S. We assume there exists a nonempty set R of objects, called real numbers, which satisfy the ten axioms listed below. The axioms fall in a natural way into three groups which we refer to as the field axioms, the order axioms, and the completeness axiom (also called the least-upper-bound axiom or the axiom of continuity) [10]. Along with the set R of real numbers we assume the existence of two operations, called addition and multiplication, such that for every pair of real numbers x and y the sum x+y and the product xy are real numbers uniquely determined by x and y satisfying the following axioms [9]. (In the axioms that appear below, x, y, z represent arbitrary real numbers unless something is said to the contrary.) In this section, we define axioms [6]. The field axioms Axiom 1. x+y = y+x, xy = yx (commutative laws) Axiom 2. -

arXiv:2005.13313v1

Single Valued Neutrosophic Filters Giorgio Nordo (1), Arif Mehmood (2), Said Broumi (3); (1) MIFT - Department of Mathematical and Computer Science, Physical Sciences and Earth Sciences, Messina University, Italy. (2) Department of Mathematics and Statistics, Riphah International University Sector I-14, Islamabad, Pakistan (3) Laboratory of Information Processing, Faculty of Science, University Hassan II, Casablanca, Morocco Abstract In this paper we give a comprehensive presentation of the notions of filter base, filter and ultrafilter on single valued neutrosophic set and we investigate some of their properties and relationships. More precisely, we discuss properties related to filter completion, the image of neutrosophic filter base by a neutrosophic induced mapping and the infimum and supremum of two neutrosophic filter bases. Keywords: neutrosophic set, single valued neutrosophic set, neutrosophic induced mapping, single valued neutrosophic filter, neutrosophic completion, single valued neutrosophic ultrafilter. 1 Introduction The notion of neutrosophic set was introduced in 1999 by Smarandache [20] as a generalization of both the notions of fuzzy set introduced by Zadeh in 1965 [22] and intuitionistic fuzzy set introduced by Atanassov in 1983 [2]. In 2012, Salama and Alblowi [17] introduced the notion of neutrosophic topological space which generalizes both fuzzy topological spaces given by Chang [8] and that of intuitionistic fuzzy topological spaces given by Coker [9]. Further contributions to Neutrosophic Sets Theory, which also involve many fields of theoretical and applied mathematics were recently given by numerous authors (see, for example, [6], [3], [4], [15], [1], [13], [14] and [16]). In particular, in [18] Salama and Alagamy introduced and studied the notion of neutrosophic filter and they gave some applications to neutrosophic topological space. -

Sun Studio 9: Fortran 95 Interval Arithmetic

Fortran 95 Interval Arithmetic Programming Reference Sun™ Studio 9 Sun Microsystems, Inc. www.sun.com Part No. 817-6704-10 July 2004, Revision A Submit comments about this document at: http://www.sun.com/hwdocs/feedback Copyright © 2004 Sun Microsystems, Inc., 4150 Network Circle, Santa Clara, California 95054, U.S.A. All rights reserved. U.S. Government Rights - Commercial software. Government users are subject to the Sun Microsystems, Inc. standard license agreement and applicable provisions of the FAR and its supplements. Use is subject to license terms. This distribution may include materials developed by third parties. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and in other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, Java, and JavaHelp are trademarks or registered trademarks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon architecture developed by Sun Microsystems, Inc. This product is covered and controlled by U.S. Export Control laws and may be subject to the export or import laws in other countries. Nuclear, missile, chemical biological weapons or nuclear maritime end uses or end users, whether direct or indirect, are strictly prohibited. Export or reexport to countries subject to U.S. embargo or to entities identified on U.S. export exclusion lists, including, but not limited to, the denied persons and specially designated nationals lists is strictly prohibited. -

Star Order and Topologies on Von Neumann Algebras

Star order and topologies on von Neumann algebras Martin Bohata, Department of Mathematics, Faculty of Electrical Engineering, Czech Technical University in Prague, Technická 2, 166 27 Prague 6, Czech Republic Abstract: The goal of the paper is to study a topology generated by the star order on von Neumann algebras. In particular, it is proved that the order topology under investigation is finer than σ-strong* topology. On the other hand, we show that it is comparable with the norm topology if and only if the von Neumann algebra is finite-dimensional. AMS Mathematics Subject Classification: 46L10; 06F30; 06A06 1 Introduction In the order-theoretical setting, the notion of convergence of a net was introduced by G. Birkhoff [3, 4]. Let $(P, \leq)$ be a poset and let $x \in P$. If $(x_\alpha)_{\alpha \in \Gamma}$ is an increasing net in $(P, \leq)$ with the supremum $x$, we write $x_\alpha \uparrow x$. Similarly, $x_\alpha \downarrow x$ means that $(x_\alpha)_{\alpha \in \Gamma}$ is a decreasing net in $(P, \leq)$ with the infimum $x$. We say that a net $(x_\alpha)_{\alpha \in \Gamma}$ is order convergent to $x$ in $(P, \leq)$ if there are nets $(y_\alpha)_{\alpha \in \Gamma}$ and $(z_\alpha)_{\alpha \in \Gamma}$ in $(P, \leq)$ such that $y_\alpha \leq x_\alpha \leq z_\alpha$ for all $\alpha \in \Gamma$, $y_\alpha \uparrow x$, and $z_\alpha \downarrow x$. If $(x_\alpha)_{\alpha \in \Gamma}$ is order convergent to $x$, we write $x_\alpha \xrightarrow{o} x$. It is easy to see that every net is order convergent to at most one point. The order convergence determines a natural topology on a poset $(P, \leq)$ as follows. A subset $C$ of $P$ is said to be order closed if no net in $C$ is order convergent to a point in $P \setminus C$. -

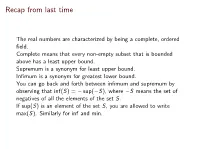

Recap from Last Time

Recap from last time The real numbers are characterized by being a complete, ordered field. Complete means that every non-empty subset that is bounded above has a least upper bound. Supremum is a synonym for least upper bound. Infimum is a synonym for greatest lower bound. You can go back and forth between infimum and supremum by observing that inf(S) = − sup(−S), where −S means the set of negatives of all the elements of the set S. If sup(S) is an element of the set S, you are allowed to write max(S). Similarly for inf and min. A consequence of completeness Theorem (Archimedean property of R) If x and y are two arbitrary positive real numbers, then there exists a natural number n such that nx > y. Proof. Seeking a contradiction, suppose for some x and y no such n exists. That is, nx ≤ y for every positive integer n. Then dividing by the positive number x shows that n ≤ y/x for every positive integer n. Since the natural numbers have an upper bound y/x, there is by completeness a least upper bound, say s. When n is a natural number, n ≤ s; but n + 1 is a natural number too, so n + 1 ≤ s. Add −1 to both sides to deduce that n ≤ s − 1 for every natural number n. Then s − 1 is an upper bound for the natural numbers that is smaller than the supposed least upper bound s. Contradiction. Density of Q in R If x < y, then there exists a rational number between x and y. -
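The two facts in this recap can be tried out numerically. The following is a minimal sketch, restricted to rational numbers and finite sets so that the arithmetic is exact; the helper names `archimedean_witness` and `neg_set` are hypothetical and do not come from the excerpt. For a finite set, inf and sup coincide with min and max, which is what the second check relies on.

```python
from fractions import Fraction
from math import floor


def archimedean_witness(x: Fraction, y: Fraction) -> int:
    """Return a natural number n with n * x > y, for positive rationals x, y."""
    if x <= 0 or y <= 0:
        raise ValueError("x and y must be positive")
    # floor(y / x) * x <= y, so one more copy of x is strictly larger than y.
    return floor(y / x) + 1


def neg_set(s):
    """The set -S of negatives of all elements of S."""
    return {-v for v in s}


x, y = Fraction(1, 1000), Fraction(5)
n = archimedean_witness(x, y)
print(n, n * x > y)

# For a finite set, inf(S) = min(S) and sup(-S) = max(-S).
S = {Fraction(1, 2), Fraction(-3), Fraction(7, 4)}
print(min(S) == -max(neg_set(S)))
```

The witness is constructed directly from y/x, which is exactly the quantity that the proof by contradiction shows cannot bound the natural numbers.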

Factorization Invariants of Puiseux Monoids Generated by Geometric Sequences

FACTORIZATION INVARIANTS OF PUISEUX MONOIDS GENERATED BY GEOMETRIC SEQUENCES SCOTT T. CHAPMAN, FELIX GOTTI, AND MARLY GOTTI Abstract. We study some of the factorization invariants of the class of Puiseux monoids generated by geometric sequences, and we compare and contrast them with the known results for numerical monoids generated by arithmetic sequences. The class we study here consists of all atomic monoids of the form $S_r := \langle r^n \mid n \in \mathbb{N}_0 \rangle$, where $r$ is a positive rational. As the atomic monoids $S_r$ are nicely generated, we are able to give detailed descriptions of many of their factorization invariants. One distinguishing characteristic of $S_r$ is that all its sets of lengths are arithmetic sequences of the same distance, namely $|a - b|$, where $a, b \in \mathbb{N}$ are such that $r = a/b$ and $\gcd(a, b) = 1$. We prove this, and then use it to study the elasticity and tameness of $S_r$. 1. Prologue There is little argument that the study of factorization properties of rings and integral domains was a driving force in the early development of commutative algebra. Most of this work centered on determining when an algebraic structure has "nice" factorization properties (i.e., what today has been deemed a unique factorization domain (or UFD)). It was not until the appearance of papers in the 1970s and 1980s by Skula [42], Zaks [46], Narkiewicz [39, 40], Halter-Koch [36], and Valenza [44] that there emerged interest in studying the deviation of an algebraic object from the UFD condition. Implicit in much of this work is the realization that problems involving factorizations of elements in a ring or integral domain are merely problems involving the multiplicative semigroup of the object in question. -

Real Analysis

Real Analysis Part I: MEASURE THEORY 1. Algebras of sets and σ-algebras For a subset A ⊂ X, the complement of A in X is written X − A. If the ambient space X is understood, in these notes we will sometimes write A^c for X − A. In the literature, the notation A′ is also used sometimes, and the textbook uses Ã for the complement of A. The set of subsets of a set X is called the power set of X, written 2^X. Definition. A collection F of subsets of a set X is called an algebra of sets in X if 1) ∅, X ∈ F 2) A ∈ F ⇒ A^c ∈ F 3) A, B ∈ F ⇒ A ∪ B ∈ F In mathematics, an “algebra” is often used to refer to an object which is simultaneously a ring and a vector space over some field, and thus has operations of addition, multiplication, and scalar multiplication satisfying certain compatibility conditions. However the use of the word “algebra” within the phrase “algebra of sets” is not related to the preceding use of the word, but instead comes from its appearance within the phrase “Boolean algebra”. A Boolean algebra is not an algebra in the preceding sense of the word, but instead an object with operations AND, OR, and NOT. Thus, as with the phrase “Boolean algebra”, it is best to treat the phrase “algebra of sets” as a single unit rather than as a sequence of words. For reference, the definition of a Boolean algebra follows. Definition. A Boolean algebra consists of a set Y together with binary operations ∨, ∧, and a unary operation ∼ such that 1) x ∨ y = y ∨ x; x ∧ y = y ∧ x for all x, y ∈ Y 2) (x ∨ y) ∨ z = x ∨ (y ∨ z); (x ∧ y) ∧ z = x ∧ (y ∧ z) for all x, y, z ∈ Y 3) x ∨ x = x; x ∧ x = x for all x ∈ Y 4) (x ∨ y) ∧ x = x; (x ∧ y) ∨ x = x for all x ∈ Y 5) x ∧ (y ∨ z) = (x ∧ y) ∨ (x ∧ z) for all x, y, z ∈ Y 6) ∃ an element 0 ∈ Y such that 0 ∧ x = 0 for all x ∈ Y 7) ∃ an element 1 ∈ Y such that 1 ∨ x = 1 for all x ∈ Y 8) x ∧ (∼ x) = 0; x ∨ (∼ x) = 1 for all x ∈ Y Other properties, such as the other distributive law x ∨ (y ∧ z) = (x ∨ y) ∧ (x ∨ z), can be derived from the ones listed. -
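To see the eight axioms at work, one can check them by brute force on the power set of a small set, with ∨ taken as union, ∧ as intersection, and ∼ as complement. The sketch below assumes that interpretation; the function names `power_set` and `is_boolean_algebra` and the choice X = {1, 2, 3} are illustrative and not part of the notes.

```python
from itertools import combinations, product


def power_set(universe):
    """All subsets of `universe`, as frozensets."""
    items = list(universe)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]


def is_boolean_algebra(Y, join, meet, comp, zero, one):
    """Brute-force check of axioms 1) to 8) on the finite carrier Y."""
    for x in Y:
        if join(x, x) != x or meet(x, x) != x:                    # 3)
            return False
        if meet(zero, x) != zero or join(one, x) != one:          # 6), 7)
            return False
        if meet(x, comp(x)) != zero or join(x, comp(x)) != one:   # 8)
            return False
    for x, y in product(Y, repeat=2):
        if join(x, y) != join(y, x) or meet(x, y) != meet(y, x):  # 1)
            return False
        if meet(join(x, y), x) != x or join(meet(x, y), x) != x:  # 4)
            return False
    for x, y, z in product(Y, repeat=3):
        if join(join(x, y), z) != join(x, join(y, z)):            # 2)
            return False
        if meet(meet(x, y), z) != meet(x, meet(y, z)):            # 2)
            return False
        if meet(x, join(y, z)) != join(meet(x, y), meet(x, z)):   # 5)
            return False
    return True


X = frozenset({1, 2, 3})
subsets = power_set(X)
union = lambda a, b: a | b
intersection = lambda a, b: a & b
complement = lambda a: X - a

ok = is_boolean_algebra(subsets, union, intersection, complement, frozenset(), X)
# The "other" distributive law, which the notes say is derivable.
other_law = all((a | (b & c)) == ((a | b) & (a | c))
                for a, b, c in product(subsets, repeat=3))
print(ok, other_law)
```

A finite check of this kind is no substitute for the derivation, but it is a quick way to catch a mis-stated axiom.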

A Ternary Variety Generated by Lattices

Commentationes Mathematicae Universitatis Carolinae William H. Cornish A ternary variety generated by lattices Commentationes Mathematicae Universitatis Carolinae, Vol. 22 (1981), No. 4, 773--784 Persistent URL: http://dml.cz/dmlcz/106119 Terms of use: © Charles University in Prague, Faculty of Mathematics and Physics, 1981 Institute of Mathematics of the Academy of Sciences of the Czech Republic provides access to digitized documents strictly for personal use. Each copy of any part of this document must contain these Terms of use. This paper has been digitized, optimized for electronic delivery and stamped with digital signature within the project DML-CZ: The Czech Digital Mathematics Library http://project.dml.cz COMMENTATIONES MATHEMATICAE UNIVERSITATIS CAROLINAE 22.4 (1981) A TERNARY VARIETY GENERATED BY LATTICES William H. CORNISH Abstract: The class of all subreducts of lattices, with respect to the ternary lattice-polynomial $s(x,y,z) = x \wedge (y \vee z)$, is a 4-based variety. Within its lattice of subvarieties, the subvariety of distributive supremum algebras is atomic and needs 4 variables in any equational description. Key words: Subreduct, lattice, nearlattice, distributivity. Classification: 03005, 06A12, 06D99, 08B10 0. Introduction. Consider the variety of algebras $(A; s)$ of type $\langle 3 \rangle$ that satisfy the following identities (S1) $s(x,x,y) = x$, (S2) $s(x,y,y) = s(y,x,x)$, (S3) $s(x,y,z) = s(x,z,y)$, (S4) $w \wedge s(x,y,z) = s(w \wedge x, y, z)$, (S5) $s(x, s(y,v,w), s(z,v,w)) \leq s(x,v,w)$, where $\wedge$ is the derived operation given by (D1) $x \wedge y = s(x,y,y)$, and $\leq$ is the derived relation defined by (D2) $x \leq y$ if and only if $x = x \wedge y$. Then, we show that an algebra is in this variety if and only if it is a subalgebra of a reduct $(L; s)$, where $(L; \wedge, \vee)$ is a lattice and $s(x,y,z) = x \wedge (y \vee z)$. -
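One direction of the stated equivalence, that the reduct of a lattice satisfies the identities, can be spot-checked on a small example. The sketch below is hedged in two ways. First, it reads the scanned identities as (S1) s(x,x,y) = x, (S2) s(x,y,y) = s(y,x,x), (S3) s(x,y,z) = s(x,z,y), (S4) w ∧ s(x,y,z) = s(w ∧ x, y, z), (S5) s(x, s(y,v,w), s(z,v,w)) ≤ s(x,v,w), with x ∧ y = s(x,y,y) and x ≤ y meaning x = x ∧ y; the scan is partly illegible, so this reading is an assumption. Second, the lattice used, the divisors of 12 with gcd as meet and lcm as join, is an illustrative choice that does not appear in the paper.

```python
from itertools import product
from math import gcd


def lcm(a, b):
    return a * b // gcd(a, b)


# Divisors of 12 form a lattice: meet = gcd, join = lcm (illustrative choice).
L = [d for d in range(1, 13) if 12 % d == 0]


def s(x, y, z):
    """Ternary polynomial s(x, y, z) = x meet (y join z)."""
    return gcd(x, lcm(y, z))


def meet(x, y):
    """Derived operation (D1): x meet y = s(x, y, y)."""
    return s(x, y, y)


def leq(x, y):
    """Derived relation (D2): x <= y iff x = x meet y."""
    return x == meet(x, y)


def identities_hold(carrier):
    """Brute-force check of (S1) to (S5), as read above, on a finite carrier."""
    for x, y in product(carrier, repeat=2):
        if s(x, x, y) != x:                                   # (S1)
            return False
        if s(x, y, y) != s(y, x, x):                          # (S2)
            return False
    for x, y, z in product(carrier, repeat=3):
        if s(x, y, z) != s(x, z, y):                          # (S3)
            return False
    for w, x, y, z in product(carrier, repeat=4):
        if meet(w, s(x, y, z)) != s(meet(w, x), y, z):        # (S4)
            return False
    for x, y, z, v, w in product(carrier, repeat=5):
        if not leq(s(x, s(y, v, w), s(z, v, w)), s(x, v, w)):  # (S5)
            return False
    return True


print(identities_hold(L))
```

In this lattice the derived relation `leq` is divisibility, so `leq(2, 12)` holds while `leq(4, 6)` does not.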

An Introduction to Real Analysis John K. Hunter

An Introduction to Real Analysis John K. Hunter, Department of Mathematics, University of California at Davis. The author was supported in part by the NSF. Thanks to Janko Gravner for a number of corrections and comments. Abstract. These are some notes on introductory real analysis. They cover the properties of the real numbers, sequences and series of real numbers, limits of functions, continuity, differentiability, sequences and series of functions, and Riemann integration. They don't include multi-variable calculus or contain any problem sets. Optional sections are starred. © John K. Hunter, 2014 Contents Chapter 1. Sets and Functions 1 1.1. Sets 1 1.2. Functions 5 1.3. Composition and inverses of functions 7 1.4. Indexed sets 8 1.5. Relations 11 1.6. Countable and uncountable sets 14 Chapter 2. Numbers 21 2.1. Integers 22 2.2. Rational numbers 23 2.3. Real numbers: algebraic properties 25 2.4. Real numbers: ordering properties 26 2.5. The supremum and infimum 27 2.6. Real numbers: completeness 29 2.7. Properties of the supremum and infimum 31 Chapter 3. Sequences 35 3.1. The absolute value 35 3.2. Sequences 36 3.3. Convergence and limits 39 3.4. Properties of limits 43 3.5. Monotone sequences 45 3.6. The lim sup and lim inf 48 3.7. Cauchy sequences 54 3.8. Subsequences 55 3.9. The Bolzano-Weierstrass theorem 57 Chapter 4. Series 59 4.1. Convergence of series 59 4.2. The Cauchy condition 62 4.3. Absolutely convergent series 64 4.4. -

Various Types of Completeness in Topologized Semilattices

Various types of completeness in topologized semilattices Konstantin Kazachenko (a), Alexander V. Osipov (b); (a) Krasovskii Institute of Mathematics and Mechanics, Yekaterinburg, Russia (b) Krasovskii Institute of Mathematics and Mechanics, Ural Federal University, Ural State University of Economics, Yekaterinburg, Russia Abstract A topologized semilattice X is called complete if each non-empty chain C ⊂ X has inf C and sup C that belong to the closure of the chain C in X. In this paper, we introduce various concepts of completeness of topologized semilattices in the context of operators that generalize the closure operator, and study their basic properties. In addition, examples of specific topologized semilattices are given, showing that these classes do not coincide with each other. Also in this paper, we prove theorems that allow us to generalize the available results on complete semilattices endowed with topology. Keywords: topologized semilattice, complete semilattice, θ-closed set, δ-closed set, H-set 2010 MSC: 06B30, 06B35, 54D55 1. Introduction The use of various algebraic structures, additionally endowed with the structure of a topological space, has long established itself as a convenient and powerful tool in various fields of modern mathematics. This phenomenon motivates the fundamental study of the properties of these objects. When studying spaces that have some additional structure consistent with the topology, quite often the concept of completeness naturally arises, as some internal property of these objects. In most cases, completeness can also be described as an external property, and often it is associated with the concept of absolute closedness, understood in a suitable sense. For example, a metric space X is complete if and only if it is closed in every metric space Y containing X as a metric subspace.