Improving Robustness and Precision in Mobile Robot Localization by Using Laser Range Finding and Monocular Vision


Recommended publications


Eurobot 2009 Rules

Eurobot 2011 Chess’Up ! Rules 2011

Chess’Up ! The robot which has more pieces on its color wins the match.

Table of Contents
1. Introduction
2. General notes
2.1. Rules scope
2.2. Event schedule
2.3. Refereeing
3. The 2011 theme
3.1. The theme
3.2. Playing elements
3.2.1. King and queens
3.2.2. Detection of playing elements
3.3. Playing area
3.3.1. Starting zones
3.3.2. Protected area
3.3.3. Beacon supports

4. the Robots 4.1. General Conditions

Eurobot 2010 Feed the World Rules 2010

Feed the World. The robot that collects the most fruits, vegetables and seeds will be declared the winner!

Document version: 24/09/2009 15:33

Table of Contents
1. Introduction
2. General notes
2.1. Rules scope
2.2. Event schedule
2.3. Refereeing
3. The 2010 theme
3.1. The theme
3.2. Playing elements
3.3. Playing area
3.3.1. Starting zones
3.3.2. Beacon supports
3.4. Dispensing zones
3.4.1. The raised zone and …

Participating in an International Robot Contest As a Way to Develop Professional Skills in Engineering Students

Session S3F. Participating in an International Robot Contest as a Way to Develop Professional Skills In Engineering Students. Julio Pastor, Irene González, F.J Rodríguez, Department of Electronics, Universidad de Alcalá; [email protected]

Abstract – The article analyses the design of robots that were developed by engineering students for a robot contest with the aim of strengthening a set of basic skills that would be useful for the future professional lives of the participants. To this end, the results of a survey given to the participants of the international competition Eurobot 2007 are presented. Participants were asked their opinions on why they participated in the competition, what they gained in their personal and professional lives for having participated as well as positive and negative aspects of the experience.

INTRODUCTION

Mobile robots began to be designed in the 1970’s for big budget space applications. …

… Anyone?” project designed a robot, based on Pioneer and Nomad, that was qualified to serve appetizers was realized (AAAI’s Robot Competition). [4] also organized an experience making robots for RoboCup with students in their last year of Information Engineering, Electrical Engineering and Mechanical Engineering. In [5] a fourth year course at the Osaka Prefectural College of Technology was formed where students have to make an orchestra consisting of robots that play musical instruments.

The design of mobile robots is also used in more elementary teaching as an element for the integration of understanding and as a motivational tool for the students. An example of this is the work of [6] which incorporates the design of robots in the first year of study to introduce concepts that will be taught in other courses and as an …

Roboking - Bringing Robotics Closer to Pupils

Sünderhauf, N., Krause, T. & Protzel, P. (2005). RoboKing - Bringing Robotics closer to Pupils. Proc. of IEEE International Conference on Robotics and Automation ICRA05, Barcelona, Spain, pp. 4265-4270.

Niko Sünderhauf, Thomas Krause, Peter Protzel. Department of Computer Science and Department of Electrical Engineering and Information Technology, Chemnitz University of Technology, 09126 Chemnitz, Germany. [email protected] {thomas.krause, peter.protzel}@etit.tu-chemnitz.de

Abstract - In this paper, we introduce RoboKing, a national contest of mobile autonomous robots, dedicated to teams of high school students. RoboKing differs from similar contests by supporting the participating teams with a 250 Euro voucher and by not restricting the kind of materials the robots can be built with. Its task is manageable for students without previous knowledge in robotics but offers enough complexity to be challenging for advanced participants. The first RoboKing contest with 12 participating teams from different parts of Germany took place at the Hannover Messe in 2004. Because of its great success, RoboKing will be held annually. RoboKing 2005 has been extended to 20 teams of pupils and offers a new challenging task.

… the Junior-League, Sony-League or even the Small-Size-League could be suitable for teams of encouraged pupils, several problems occur. The Junior-League is well suited for young pupils, but pupils with some advanced knowledge feel very limited by the few opportunities the mandatory LEGO Mindstorms environment offers. They also feel under-challenged by the relatively simple tasks the robots have to do. On the other hand, the Sony- and Small-Size Leagues are too complex for pupils because the tasks always require multi-robot interaction and advanced …

Cansat France : an Innovative Competition to Encourage Wide Adoption and Public Awareness

IAC-10-E1.4.13. CANSAT FRANCE : AN INNOVATIVE COMPETITION TO ENCOURAGE WIDE ADOPTION AND PUBLIC AWARENESS. 61st International Astronautical Congress, 27 Sept-01 Oct 2010, Prague, CZ. Copyright © 2010 by Planète Sciences - All rights reserved.

Cyril ARNOLDO, Member of Planète Sciences, France; Christophe SCICLUNA, Member of Planète Sciences, France; Emmanuel JOLLY, Member of Planète Sciences, France; Nicolas PILLET, Space Culture Division, CNES, France.

ABSTRACT

Since 1962, and with the support of CNES and major aerospace industries, the French non-profit organization Planète Sciences has been providing young amateurs with facilities, tools and programmes to make their dream fly. Young space conquerors are invited to get hands-on experience with projects ranging from micro-rockets to experimental rockets, but also stratospheric balloons and CanSats. CanSat France is open to international teams. The pilot competition was held in 2008, followed by the first edition in 2009. 2010 is the France-Russia year: two teams from Siberia joined CanSat France, and the winning team of the Spanish competition was invited to compete. This paper will discuss how CanSat France offers the integration of the CanSat Spirit with proven design and setup methods developed by Planète Sciences/CNES. It will review the details of the differences with other CanSat competitions.

1-INTRODUCTION

1.1 CNES, the French space agency established in 1961, is a public organization in charge of the development and management of the French space programmes. …

… activities and experimentation to youth from elementary school to university levels [1]. The spectrum of thematics has broadened over the years and now includes space activities, astronomy, robotics, environment, meteorology, …

Eurobot 2008 Rules

Eurobotopen 2008 Mission to Mars Rules 2008 – Revision 1

Find proofs of life and bring them to Earth... for analysis! The robot which brings back to Earth the most living organisms in good condition will be the winner.

Document version: 28 Nov. 2007

Forewords

This document is an update of the rules published in September 2007. It includes:
• the correction of errors and missing points detected after the publishing of the original version,
• complementary information about some topics,
• answers and details requested by teams in the forum, whose content has already been published in real time in the FAQ 2008 topic of this same forum (http://www.planete-sciences.org/forums/viewtopic.php?t=10762)

This work has been done to provide you with the complete information in a single document, relieving you from compiling the original rules document and the various FAQ. In case of additional need of update, a new version of this document will be published, using the same principle of information merge. In order to allow a quick identification of the changes, text modified or added since the previous version is marked by a revision bar in the right margin.

Table of Contents
1. Scope
2. Basic rules

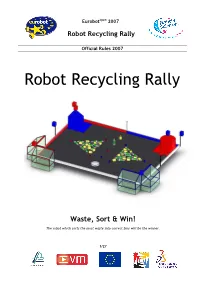

Robot Recycling Rally

Eurobotopen 2007 Robot Recycling Rally Official Rules 2007

Robot Recycling Rally. Waste, Sort & Win! The robot which sorts the most waste into correct bins will be the winner.

1. Scope

The following game rules are applicable to all the national qualifications and the final of the EUROBOT 2007 autonomous robot contest. EUROBOT is an amateur robotics contest open to world-wide teams of young people, organised either in student projects, in independent clubs, or in an educational project. A team must be made up of two or more active participants. Team members may be up to (including) 30 years old; each team may have one supervisor to whom this age limit does not apply.

The contest aims at interesting the largest public in robotics and at encouraging the hands-on, group practice of science by young people. EUROBOT and its national qualifications are intended to take place in a friendly and sporting spirit. More than an engineering championship for young people, EUROBOT is a friendly pretext to free technical imagination and exchange ideas, know-how, hints and engineering knowledge around a common challenge. Creativity is at stake and interdisciplinarity requested. Technical and cultural enrichment is the goal.

Participation in the competitions assumes full acceptance of these principles as well as the rules and any interpretation of them that will be made by the refereeing committee (throughout the year) and by the referees (during the matches). The referees’ decisions are final and may not be challenged, unless an agreement is reached between all the parties involved.

Bringing Robotics Closer to Students - a Threefold Approach

Proceedings of the 2006 IEEE International Conference on Robotics and Automation, Orlando, Florida - May 2006. Bringing Robotics Closer to Students - A Threefold Approach.

Niko Sünderhauf, Thomas Krause, Peter Protzel. Department of Computer Science and Department of Electrical Engineering and Information Technology, Chemnitz University of Technology, 09126 Chemnitz, Germany. [email protected] {thomas.krause, peter.protzel}@etit.tu-chemnitz.de

Abstract - In this paper, we present our threefold concept of "bringing robotics closer to students". Our efforts begin with motivating high-school students to study engineering sciences by increasing their interest in technical issues. This is achieved by a national robotics contest. Besides our work with high-school students, we also focus on projects for undergraduate university students. Several robotics projects and competitions are included into the curriculum of electrical and mechanical engineering and computer science. These projects offer chances to do special assignments and student research projects. It may be important to point out that most of the work described in this paper, including inventing and specifying the projects and organizing the events, has been done by undergraduate and graduate students.

II. ROBOKING - A CONTEST FOR HIGH-SCHOOL STUDENTS

During the past few years, mobile robotics has increasingly attracted public attention. Many TV documentations about the DARPA Grand Challenge, about new developments in robotics in general, and several reports about RoboCup demonstrate this development. Nonetheless, many universities report stagnating or even declining numbers of students in engineering subjects while at the same time, the economy experiences a massive lack of skilled …