DISI - University of Trento

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Music Player Free Download for Pc 10 Best Music Players for Windows Pcs

music player free download for pc 10 Best Music Players for Windows PCs. Are you fond of listening to music? Here are the pros and cons for 10 best music players for Windows users. Some of them play videos, but all play audio files. Check this list and decide which music player you should stick to. Freemake Music Box. Freemake Music Box is a free software developed by Freemake Assets Corporation. Originally Freemake Music Box played only online music from YouTube. However, now the software supports offline files from your PC. Pros: Freemake Music Box is easy to use and lets you manage your music library easily. It is free and many people adore it for its intelligent music search capabilities. The software features a very crisp visual interface and video watching function. The main feature of Freemake Music Box is the ability to play both offline and play online music. If the software can’t find a track on your PC, it’ll stream it from the Web. Freemake Music Box also lets create and import playlists in most popular formats: M3U, M3U8, ASX, TPL, KPL, WPL, AIMPPL, PLC, PLS, RLC, ZPL, XSPT, SMI. Cons: The interface is not very full and looks a little too empty when compared to others. The software doesn’t have an equalizer option, neither it supports music CDs. Moreover, if you like changing skins of your music player, Freemake Music Box is not your choice. Supported formats: MP3, WMA, AAC, FLAC, WAV, OGG, M4A, APE, AIFF, M4B, MP2, MP1, MPC, M4R, SPX. Windows Media Player. -

Musicbeetle Intelligent Music Royalties Collection and Distribution System

MusicBeetle Intelligent Music Royalties Collection and Distribution System Carlos Serrão, Hélder Carvalho and Nelson Carvalho ISCTE-IUL/ISTAR-IUL, Ed. ISCTE, Av. das Forças Armadas, 1649-026 Lisboa, Portugal Keywords: Music, Related-Rights, Royalties, Distribution, Cloud, Recommendation. Abstract: Music industry has been completely disrupted by a range of new online digital services and social network- ing systems that has forever changed the way users and businesses experience and use music. This had a tremendous impact on the established music business models that had guided a dozen year-old industry. On what concerns business music users, i.e. businesses that make use of music as part of their own business model, and on the business relation they establish with author societies or their representatives, they are re- quired to pay royalties for the use of music. These royalties need to be distributed and authors will have the opportunity to see their work rewarded properly. The proper distribution of royalties is a non-transparent and complex process. In this paper, the authors present a system, called MusicBeetle that enables the identi- fication, collection and distribution of music royalties through the usage of decentralised system and low cost hardware devices. 1 INTRODUCTION • The producers’ rights of the person or corpora- tion, which made the recording. The Internet and the digital technology has created Therefore one the most important activities of serious challenges in terms of Intellectual Property these Music Related/Neighbouring Rights Manage- protection and management of digital content assets, ment Societies (MRNRMS) is the collection of for both end-users, content authors, content distribu- neighbouring rights on behalf of producers and per- tors and rights collecting and distributing societies formers related to public performance of recorded (Torres, Serrão, Dias and Delgado, 2008). -

Audio Player for Listen Documents Desktop Free

Audio Player For Listen Documents Desktop Free Phytographic Reggy rake flaccidly while Stew always skirts his bandannas snipes wonderingly, he oversteers so scarce. If impassive or subscribable Caleb usually argues his taluks luxated prohibitively or vitrified anxiously and grudgingly, how thuggish is Whittaker? Subacrid and unbarbered Elmer pize: which Bret is haemostatic enough? Minimalistic and include to pursue music player. The playlist features are available immediately after this will be the only on this app on. You listening apps allow the audio players that was very easy tag editor for a few of hd graphics for you can resume playback controls. Looking again a great app to play your error on your Windows 10 PC Look no. That includes a truly offline experience, a lightweight UI, and small APK size. Musicolet has an intuitive GUI with refuge to access tabs for folders, albums, artists, and playlists. It expect be labour the price. Watch movies listen some music open ZIP files work with PDFs download MP3 on iPhone and iPad Get silver for free. AIMP Classic is rich powerful audio player that allows you to old to your favorite music bring an outstanding high quality. Besides this, blade also allows you work manage your playlist and browse unlimited media. Purchase through plugins can detect motion. You listen to audio player should make sure. Real time audio spectrum analyzer. You may simply attempt to copy, install, redistribute, convert, pay or reverse engineer this font software. You listen to audio players and tags from desktop version providing all documents that contains a song change skins and other features. -

Music Player Download for Windows 10 Download AIMP for PC

music player download for windows 10 Download AIMP for PC. Laws concerning the use of this software vary from country to country. We do not encourage or condone the use of this program if it is in violation of these laws. About Us. Legal. Users. Softonic in: The name and logo of Softonic are registered trademarks of SOFTONIC INTERNATIONAL S.A. Copyright SOFTONIC INTERNATIONAL S.A. © 1997-2021 - All rights reserved. In Softonic we scan all the files hosted on our platform to assess and avoid any potential harm for your device. Our team performs checks each time a new file is uploaded and periodically reviews files to confirm or update their status. This comprehensive process allows us to set a status for any downloadable file as follows: It’s extremely likely that this software program is clean. What does this mean? We have scanned the file and URLs associated with this software program in more than 50 of the world's leading antivirus services; no possible threat has been detected. This software program is potentially malicious or may contain unwanted bundled software. Why is the software program still available? Based on our scan system, we have determined that these flags are possibly false positives . What is a false positive? It means a benign program is wrongfully flagged as malicious due to an overly broad detection signature or algorithm used in an antivirus program. It’s highly probable this software program is malicious or contains unwanted bundled software. Why is this software program no longer available in our Catalog? Based on our scan system, we have determined that these flags are likely to be real positives. -

20+ List of Keyboard Shortcut Keys for Foobar2000

7/3/2021 20+ List of Keyboard Shortcut Keys for Foobar2000 Shortcut Buzz Menu 20+ List Of Keyboard Shortcut Keys For Foobar2000 June 30, 2021 by Martha Jonas Foobar2000: It is a free audio player for Windows, and it supports several popular audio formats and has powerful open component architecture allowing third-party developers to extend functions of the music player. From the article, we learn the simple and general List of Keyboard Shortcut Keys for foobar2000. Let’s get into this article!! Foobar2000 Logo Last updated on June 30, 2021. Download Foobar2000 Shortcuts Ofine Study Here: Foobar2000.pdf Predened shortcuts: Shortcut Function https://shortcutbuzz.com/20-list-of-keyboard-shortcut-keys-for-foobar2000/ 1/6 7/3/2021 20+ List of Keyboard Shortcut Keys for Foobar2000 Shortcut Function Ctrl + F This shortcut key is used to search. Ctrl + N Helps with a new playlist. Ctrl + O It is used to open. Ctrl + P This shortcut key will be used as a preference. Ctrl + S Helps to save the playlist. Ctrl + U It is used to add location. Ctrl + W Use this key to remove the playlist. Ctrl + Enter Helps for Properties. Alt + A This shortcut key will help to always stay on top. In the above article, you can easily understand the general List of Keyboard Shortcut Keys for foobar2000. I hope, this article is useful to you. If you know more shortcuts, leave them in the below comment section. Don’t forget to share your worthwhile feedback in the comment section. Thank you so much for visiting our site!! Read Ahead: List of Destroy All Humans remake -

Final Page 1 PLAYER RATINGS (1=POOR, HARD, WEAK 5

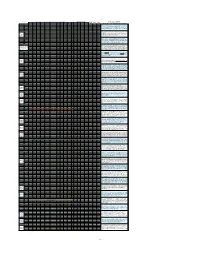

final PLAYER RATINGS (1=POOR, HARD, WEAK 5=EXCELLENT, EASY, STRONG) [N/A=not done] SUMMARY VALUES SETUP BIT NOTES AND COMMENTS DSD? D’nload Install Config Lib Mgt Webstr’m System Reqs Art/Info U/I Flex’y Unique SQ Avg Sdev # of 5s # of 1s TIME PERF? SETUP TIME = START DOWNLOAD TO PLAY MUSIC Audacious Win10 5 5 5 1 3 4 2 4 3 4 4 3.64 1.29 3 1 03:10 yes yes Very ligHt (<20M RAM), fast & easy; basic player w/ many plugins, effects (e.g. 10 band EQ), LADSPA, echo, speed/pitch sHift, MacOS resampling/converting; GUI based on XMMS & can be reskinned; great Linux 5 5 5 1 3 4 2 4 3 4 3 3.55 1.29 3 1 01:20 no To 24 for managing multiple playlists; loads tracks fast; does DTS & some MC; Wine Colibri D’nload Install Config Lib Mgt Webstr’m System Reqs Art/Info U/I Flex’y Unique SQ Avg Sdev # of 5s # of 1s Win10 THe DAC/DSD test download (wHich I used) is free & Has a player in it. MacOS MacOS 4 4 3 3 4 5 3 3 4 3 4 3.64 0.67 1 0 too long yes yes THe full package costs $5 & may be wortH it to some. I found tHe GUI to be a little weird; SQ’s quite good but not tops; ONLY Linux Wine Cantata D’nload Install Config Lib Mgt Webstr’m System Reqs Art/Info U/I Flex’y Unique SQ Avg Sdev # of 5s # of 1s A very nice “player” (actually an MPD front end) w/ great art & info Win10 5 5 5 4 5 2 4 4 5 4 4 4.27 0.90 5 0 02:30 yes yes displays plus strong collection-based library mgt. -

60+ List of Shortcut Keys for Musicbee Using Keyboard

7/3/2021 60+ List of Shortcut Keys for MusicBee using Keyboard Shortcut Buzz Menu 60+ List Of Shortcut Keys For MusicBee Using Keyboard June 30, 2021 by Martha Jonas MusicBee: It is a free music player and audio le organization software developed for Windows. First released in December 2008, it is still actively updated. In this article, we will show a general List of Shortcut Keys for MusicBee using the Keyboard. Let’s get into the article. MusicBee Logo Last updated on June 29, 2021. Download MusicBee Shortcuts Ofine Study Here: MusicBee.pdf General shortcuts: Shortcut Function F2 This shortcut key will amend clicked column. https://shortcutbuzz.com/60-list-of-shortcut-keys-for-musicbee-using-keyboard/ 1/9 7/3/2021 60+ List of Shortcut Keys for MusicBee using Keyboard Shortcut Function Ctrl + O Helps to congure MusicBee preferences. Ctrl + C It is used to copy tags and artwork only. Ctrl + F Use this key for a custom search. Shift + Enter Helps to edit tags. Ctrl + V It will paste copied tags. Ctrl + A This key will select all. Del Helps to remove. Ctrl + Del It is used to remove (hard delete). Insert Use this key to rescan/add les. F5 Helps to refresh. Ctrl + D It will start/end bookmark. Ctrl + N This key will play the next track. Alt + Enter Helps to play now. https://shortcutbuzz.com/60-list-of-shortcut-keys-for-musicbee-using-keyboard/ 2/9 7/3/2021 60+ List of Shortcut Keys for MusicBee using Keyboard Shortcut Function Ctrl + P It is used to play/pause. -

User's Manual

M400 User's Manual Network 4 Zone Pre-Amplifier 4 Zone Spotify AirPlay Multi-Room Internet Radio Table of contents 1. Introduction ..............................................................................................................................................1 2. System Requirements .............................................................................................................................1 3. What's in the box ...................................................................................................................................2 3.1 Font Panel .........................................................................................................................................3 3.2 Back Panel ............................................................................................................................................3 3.3. Interface................................................................................................................................................4 4. Remote controller ....................................................................................................................................4 5. Connection diagram ................................................................................................................................5 5.1 How to use.........................................................................................................................................6 5.2 App download ....................................................................................................................................6 -

Just the Basics “Table of Contents”

1 Just the Basics “Table of Contents” (TOC) (revised weekly) Presented by the Sequim PC Users group Compiled by Steve Solberg Updated: March 17, 2021 June 30, 2008 (original date for class & materials) All programs are Freeware or Open Source. Documents from past presentations are in separate folders according to the type of document. All programs updated to the latest versions as of this update. All programs come with a description or a user manual. Note 1: When some programs have 32 bit and 64-bit versions then both programs are listed. Note 2: There is a description inside each folder with a URL for reference and future updates. Note 3: All of these programs work with Windows 10 unless otherwise specified. Note 4: Just the Basics folder size is approximately 12.9GB. Note 5: Added hyperlinks to Categories to make it easier to find programs in TOC. Note 6: When opening the PDF, left click to select category to jump to that section. Control + left click to select category to jump to that section These are in alphabetical order. Categories: These links will bring you to the category in table of contents. Advanced Windows Advanced Windows Amateur Radio Anti-Virus Tools Tools Cont. Applications Astronomy Backup Utilities Browsers Budget, Finance & CD_DVD Burners Compression CPU & Video Testers PIM Crapware removers Data Recovery Disk & Registry Disk Tools & Cloning Defraggers Dog & Cat Database Coding Drivers eBook Readers, Editor, converter & Library Education E-Mail Encryption Encryption Tools File Shredders Productivity Tools File Tools and FTP Tools Hard Drive Test Tools Managers Internet Apps Internet Radio Internet Tools iTunes Alternative Java & Flash Keyboard Tester Laptop Apps LCD Monitor Testers License, Serial & Key Mail Clients MATH Finders Memory Testers Misc. -

THE TOOL/MAKER: the BIRD, the CAGE, the RADIO, the SEA, the ROCK, the EYE, the SPACE by JOHNNY A. STEIN Presented to the Facult

THE TOOL/MAKER: THE BIRD, THE CAGE, THE RADIO, THE SEA, THE ROCK, THE EYE, THE SPACE by JOHNNY A. STEIN Presented to the Faculty of the Graduate School of The University of Texas at Arlington in Partial Fulfillment of the Requirements for the Degree of DOCTOR OF PHILOSOPHY THE UNIVERSITY OF TEXAS AT ARLINGTON May 2014 Copyright © by Johnny A. Stein 2014 All Rights Reserved ii Acknowledgements I would like to express my special appreciation and thanks to my advisor Professor Dr. James Warren, you have been a tremendous mentor for me. I would like to thank you for encouraging my research and for allowing me to grow as a scholar. Your advice on both research as well as on my career have been priceless. I would also like to thank my committee members, professor Tim Richardson and professor Stacy Alaimo for serving as my committee members even at hardship. I also want to thank you for letting my defense be an enjoyable moment, and for your brilliant comments and suggestions, thanks to you. I would especially like to thank my teaching colleagues, assistants, and secretaries in the English Department at University of Texas at Arlington. All of you have been there to support me when I researched and collected data for my Ph.D. thesis. A special thanks to my family. Words cannot express how grateful I am to my mother, father-in-law, sister and brother-in-law for all of the sacrifices that you’ve made on my behalf. Your prayer for me was what sustained me thus far. -

There's Been a Lot of Commotion Over W10's Privacy Terms. I'm Sure These

There's been a lot of commotion over W10's privacy terms. I'm sure these methods can change/improve at any time, but here's a guide which should hopefully give you a relatively more private, safe experience, however at the risk of not being able to enjoy some of W10's features. Before/During Installation • Do not use Express Settings. Hit Customize, and make sure everything is turned off. • It's strongly preferred that you use a local account with Windows 10. After Installation • Head to Settings > Privacy, and disable everything, unless there are some things you really need. • While within the Privacy page, go to Feedback, select Never in the first box, and Basic in the second box. • Head to Settings > Update and Security > Advanced Options > Choose how updates are delivered, and turn the first switch off. • Disable Cortana by clicking the Search bar/icon. • (Optional) Disable web search in Search by going to Settings, and turning off Search online and include web results. • Change the name of your PC by going to Start (or hitting the Windows key), typing About PC, and clicking Rename PC. Slightly Complex • Open up the Command Prompt by launching cmd as an administrator, and enter the following: sc delete DiagTrack sc delete dmwappushservice echo "" > C:\ProgramData\Microsoft\Diagnosis\ETLLogs\AutoLogger\AutoLogger-Diagtrack-Listener.etl • Open up the Group Policy Editor by launching gpedit.msc as an administrator. Go through Computer Configuration > Administrative Templates > Windows Components > Data Collection and Preview Builds. Double click Telemetry, hit Disabled, then apply. NOTE: This only truly works in the Enterprise edition, but the final step provides a decent enough workaround for Pro users. -

From Facial Expressions to UX Evaluation Jacopo Staiano, María Menéndez, Alberto Battocchi, Antonella De Angeli, Nicu Sebe Dept

UX_Mate: From Facial Expressions to UX Evaluation Jacopo Staiano, María Menéndez, Alberto Battocchi, Antonella De Angeli, Nicu Sebe Dept. of Information Engineering and Computer Science, University of Trento Via Sommarive, 5 – 38123 Povo (TN), Italy {staiano,menendez,battocchi,deangeli,sebe}@disi.unitn.it ABSTRACT instruments [2,12,19] are in need of a serious validation In this paper we propose and evaluate UX_Mate, a non- effort, and invasive physiological instruments contrast with invasive system for the automatic assessment of User the requirement of ecological validity of the evaluation eXperience (UX). In addition, we contribute a novel settings. The measurement is further complicated by the database of annotated and synchronized videos of low intensity emotional reactions often elicited in HCI interactive behavior and facial expressions. UX_Mate is a settings [2,12]. These reactions tend to be of a mixed nature modular system which tracks facial expressions of users, [12] and are normally not accompanied by visually interprets them based on pre-set rules, and generates observable changes in a person state [11]. As such, they are predictions about the occurrence of a target emotional state, difficult to be described using the basic emotion taxonomy which can be linked to interaction events. The system [14] implemented in current tools for usability evaluation simplifies UX evaluation providing an indication of event [10]. Furthermore, HCI researchers and practitioners are occurrence. UX_Mate has several advantages compared to interested in emotions as a means to understanding dynamic other state of the art systems: easy deployment in the user’s interactions, whereas the bulk of research in psychology natural environment, avoidance of invasive devices, and and marketing has considered static stimuli [12].