Quantifying Correlation Uncertainty Risk in Credit Derivatives Pricing †

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Interest Rate Options

Interest Rate Options. Saurav Sen, April 2001.

Contents

1. Caps and Floors
1.1. Definitions
1.2. Plain Vanilla Caps: 1.2.1. Caplets; 1.2.2. Caps; 1.2.3. Bootstrapping the Forward Volatility Curve; 1.2.4. Caplet as a Put Option on a Zero-Coupon Bond; 1.2.5. Hedging Caps
1.3. Floors: 1.3.1. Pricing and Hedging; 1.3.2. Put-Call Parity; 1.3.3. At-the-money (ATM) Caps and Floors
1.4. Digital Caps: 1.4.1. Pricing; 1.4.2. Hedging
1.5. Other Exotic Caps and Floors: 1.5.1. Knock-In Caps; 1.5.2. LIBOR Reset Caps; 1.5.3. Auto Caps; 1.5.4. Chooser Caps; 1.5.5. CMS Caps and Floors

2. Swap Options
2.1. Swaps: A Brief Review of Essentials
2.2. Swaptions: 2.2.1. Definitions; 2.2.2. Payoff Structure; 2.2.3. Pricing; 2.2.4. Put-Call Parity and Moneyness for Swaptions; 2.2.5. Hedging
2.3. Constant Maturity Swaps: 2.3.1. Definition; 2.3.2. Pricing; 2.3.3. Approximate CMS Convexity Correction; 2.3.4. Pricing (continued); 2.3.5. CMS Summary
2.4. Other Swap Options: 2.4.1. LIBOR in Arrears Swaps; 2.4.2. Bermudan Swaptions; 2.4.3. Hybrid Structures

Appendix: The Black Model
A.1. -

Tax Treatment of Derivatives

United States. Viva Hammer*. Tax Treatment of Derivatives.

1. Introduction

The US federal income taxation of derivative instruments is determined under numerous tax rules set forth in the US tax code, the regulations thereunder (and supplemented by various forms of published and unpublished guidance from the US tax authorities and by the case law).1 These tax rules dictate the US federal income taxation of derivative instruments without regard to applicable accounting rules.

The tax rules applicable to derivative instruments have developed over time in piecemeal fashion. There are no general principles governing the taxation of derivatives in the United States. Every transaction must be examined in light of these piecemeal rules. Key considerations for issuers and holders of derivative instruments under US tax principles will include the character of income, gain, loss and deduction related to the instrument (ordinary vs. … instruments, as well as principles of general applicability. Often, the nature of the derivative instrument will dictate whether it is taxed as a capital asset or an ordinary asset (see discussion of section 1256 contracts, below). In other instances, the nature of the taxpayer will dictate whether it is taxed as a capital asset or an ordinary asset (see discussion of dealers versus traders, below).

Generally, the starting point will be to determine whether the instrument is a “capital asset” or an “ordinary asset” in the hands of the taxpayer. Section 1221 defines “capital assets” by exclusion: unless an asset falls within one of eight enumerated exceptions, it is viewed as a capital asset. Exceptions to capital asset treatment relevant to taxpayers transacting in derivative instruments include the exceptions for (1) hedging transactions3 and (2) “commodities derivative financial instruments” held by a “commodities derivatives dealer”.4 -

An Analysis of OTC Interest Rate Derivatives Transactions: Implications for Public Reporting

Federal Reserve Bank of New York Staff Reports. An Analysis of OTC Interest Rate Derivatives Transactions: Implications for Public Reporting. Michael Fleming, John Jackson, Ada Li, Asani Sarkar, and Patricia Zobel. Staff Report No. 557, March 2012; revised October 2012. JEL classification: G12, G13, G18.

This paper presents preliminary findings and is being distributed to economists and other interested readers solely to stimulate discussion and elicit comments. The views expressed in this paper are those of the authors and are not necessarily reflective of views at the Federal Reserve Bank of New York or the Federal Reserve System. Any errors or omissions are the responsibility of the authors.

Abstract: This paper examines the over-the-counter (OTC) interest rate derivatives (IRD) market in order to inform the design of post-trade price reporting. Our analysis uses a novel transaction-level data set to examine trading activity, the composition of market participants, levels of product standardization, and market-making behavior. We find that trading activity in the IRD market is dispersed across a broad array of product types, currency denominations, and maturities, leading to more than 10,500 observed unique product combinations. While a select group of standard instruments trade with relative frequency and may provide timely and pertinent price information for market participants, many other IRD instruments trade infrequently and with diverse contract terms, limiting the impact on price formation from the reporting of those transactions. -

Panagora Global Diversified Risk Portfolio General Information Portfolio Allocation

PanAgora Global Diversified Risk Portfolio, March 31, 2021

General Information

Inception Date: April 15, 2014
Total Assets: $254 Million (as of 3/31/2021)
Adviser: Brighthouse Investment Advisers, LLC
SubAdviser: PanAgora Asset Management, Inc.
Portfolio Managers: Bryan Belton, CFA, Director, Multi Asset; Edward Qian, Ph.D., CFA, Chief Investment Officer and Head of Multi Asset Research; Jonathon Beaulieu, CFA

Investment Strategy

The PanAgora Global Diversified Risk Portfolio investment philosophy is centered on the belief that risk diversification is the key to generating better risk-adjusted returns and avoiding risk concentration within a portfolio is the best way to achieve true diversification. They look to accomplish this by evaluating risk across and within asset classes using proprietary risk assessment and management techniques, including an approach to active risk management called Dynamic Risk Allocation. The portfolio targets a risk allocation of 40% equities, 40% fixed income and 20% inflation protection.

Portfolio Statistics

| Statistic | 1 Yr | 3 Yr | Inception |
| --- | --- | --- | --- |
| Sharpe Ratio | 1.88 | 0.62 | 0.6 |
| Beta* | 0.83 | 0.75 | 0.78 |
| Correlation* | 0.87 | 0.86 | 0.79 |
| Std. Deviation | 10.04 | 10.6 | 9.15 |

Weighted Portfolio Duration (Month End): 8.03

*Statistic is measured against the Dow Jones Moderate Index

Portfolio Composition

| Asset class | Positioning as of December 31, 2020 | Positioning as of March 31, 2021 |
| --- | --- | --- |
| Global Equity | 42.4% | 43.4% |
| U.S. Stocks | 24.9% | 23.6% |
| Developed non-U.S. Stocks | 9.0% | 9.8% |
| Emerging Markets Equity | 8.5% | 10.0% |
| Nominal Fixed Income | 106.8% | 142.6% |
| … | 42.0% | … |

Portfolio Benchmark: The Dow Jones Moderate Index is a composite index with U.S. -

Interest Rate Swap Contracts the Company Has Two Interest Rate Swap Contracts That Hedge the Base Interest Rate Risk on Its Two Term Loans

$400.0 million. However, the $100.0 million expansion feature may or may not be available to the Company depending upon prevailing credit market conditions. To date the Company has not sought to borrow under the expansion feature. Borrowings under the Credit Agreement carry interest rates that are either prime-based or Libor-based. Interest rates under these borrowings include a base rate plus a margin between 0.00% and 0.75% on Prime-based borrowings and between 0.625% and 1.75% on Libor-based borrowings. Generally, the Company’s borrowings are Libor-based. The revolving loans may be borrowed, repaid and reborrowed until January 31, 2012, at which time all amounts borrowed must be repaid. The revolver borrowing capacity is reduced for both amounts outstanding under the revolver and for letters of credit. The original term loan will be repaid in 18 consecutive quarterly installments which commenced on September 30, 2007, with the final payment due on January 31, 2012, and may be prepaid at any time without penalty or premium at the option of the Company. The 2008 term loan is co-terminus with the original 2007 term loan under the Credit Agreement and will be repaid in 16 consecutive quarterly installments which commenced June 30, 2008, plus a final payment due on January 31, 2012, and may be prepaid at any time without penalty or premium at the option of Gartner. The Credit Agreement contains certain customary restrictive loan covenants, including, among others, financial covenants requiring a maximum leverage ratio, a minimum fixed charge coverage ratio, and a minimum annualized contract value ratio and covenants limiting Gartner’s ability to incur indebtedness, grant liens, make acquisitions, be acquired, dispose of assets, pay dividends, repurchase stock, make capital expenditures, and make investments. -

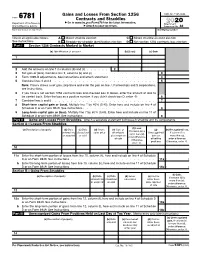

Form 6781: Gains and Losses From Section 1256 Contracts and Straddles

Form 6781, Gains and Losses From Section 1256 Contracts and Straddles (2020). Department of the Treasury, Internal Revenue Service. OMB No. 1545-0644. Attachment Sequence No. 82. Go to www.irs.gov/Form6781 for the latest information. Attach to your tax return.

Name(s) shown on tax return. Identifying number.

Check all applicable boxes (see instructions): A Mixed straddle election. B Straddle-by-straddle identification election. C Mixed straddle account election. D Net section 1256 contracts loss election.

Part I: Section 1256 Contracts Marked to Market

Columns: (a) Identification of account; (b) (Loss); (c) Gain.

Line 1: (entries by account)
Line 2: Add the amounts on line 1 in columns (b) and (c).
Line 3: Net gain or (loss). Combine line 2, columns (b) and (c).
Line 4: Form 1099-B adjustments. See instructions and attach statement.
Line 5: Combine lines 3 and 4. Note: If line 5 shows a net gain, skip line 6 and enter the gain on line 7. Partnerships and S corporations, see instructions.
Line 6: If you have a net section 1256 contracts loss and checked box D above, enter the amount of loss to be carried back. Enter the loss as a positive number. If you didn’t check box D, enter -0-.
Line 7: Combine lines 5 and 6.
Line 8: Short-term capital gain or (loss). Multiply line 7 by 40% (0.40). Enter here and include on line 4 of Schedule D or on Form 8949. See instructions.
Line 9: Long-term capital gain or (loss). Multiply line 7 by 60% (0.60). Enter here and include on line 11 of Schedule D or on Form 8949. -

IDEX IRS Products

IDEX IRS Products

International Derivatives Clearing Group (IDCG), a majority owned, independently operated subsidiary of The NASDAQ OMX Group®, has developed an integrated derivatives trading and clearing platform. This platform will provide an efficient and transparent venue to trade, clear and settle IDEX™ interest rate swap (IRS) futures as well as other fixed income derivatives contracts. The platform offers a state-of-the-art trade matching engine and a best-in-breed clearing system through IDCG’s wholly-owned subsidiary, the International Derivatives Clearinghouse, LLC (IDCH)*. The trade matching engine is provided by IDCG and operated under the auspices of the NASDAQ OMX Futures Exchange (NFX), a wholly-owned subsidiary of The NASDAQ OMX Group, in NFX’s capacity as a CFTC-designated contract market for IDEX™ IRS futures products.

IDEX™ IRS Product Offerings

IDEX™ IRS futures are designed to be economically equivalent in every material respect to plain vanilla interest rate swap contracts currently traded in the OTC derivatives market. The first product launched was IDEX™ USD Interest Rate Swap Futures.

• IDEX™ USD Interest Rate Swap Futures: The exchange of semi-annual fixed-rate payments in exchange for quarterly floating-rate payments based on the 3-Month U.S. Dollar London Interbank Offered Rate (USD LIBOR). There are thirty years of daily maturities available for trading on days that NFX and IDCH are open for business.
• IDEX SwapDrop Portal™: The SwapDrop Portal is a web portal maintained by the NFX, which is utilized to report Exchange of Futures for Swaps (EFS) transactions involving IDEX™ USD Interest Rate Swap Futures contracts. -

Interest Rate Models: Paradigm Shifts in Recent Years

Interest Rate Models: Paradigm shifts in recent years. Damiano Brigo, Q-SCI, Managing Director and Global Head, DerivativeFitch, 101 Finsbury Pavement, London. Columbia University Seminar, New York, November 5, 2007.

This presentation is based on the book "Interest Rate Models: Theory and Practice - with Smile, Inflation and Credit" by D. Brigo and F. Mercurio, Springer-Verlag, 2001 (2nd ed. 2006), http://www.damianobrigo.it/book.html

Overview

• No arbitrage and derivatives pricing.
• Modeling suggested by no-arbitrage discounting. 1977: Endogenous short-rate term structure models
• Reproducing the initial market interest-rate curve exactly. 1990: Exogenous short rate models
• A general framework for no-arbitrage rates dynamics. 1990: HJM - modeling instantaneous forward rates
• Moving closer to the market and consistency with market formulas. 1997: Fwd market-rates models calibration and diagnostics power
• 2002: Volatility smile extensions of Forward market-rates models

No arbitrage and Risk neutral valuation

Recall briefly the risk-neutral valuation paradigm of Harrison and Pliska (1983), characterizing no-arbitrage theory: A future stochastic payoff, built on an underlying fundamental financial asset, paid at a future time T and satisfying some technical conditions, has as unique price at current time t -

The Role of Interest Rate Swaps in Corporate Finance

The Role of Interest Rate Swaps in Corporate Finance. Anatoli Kuprianov.

An interest rate swap is a contractual agreement between two parties to exchange a series of interest rate payments without exchanging the underlying debt. The interest rate swap represents one example of a general category of financial instruments known as derivative instruments. In the most general terms, a derivative instrument is an agreement whose value derives from some underlying market return, market price, or price index.

The rapid growth of the market for swaps and other derivatives in recent years has spurred considerable controversy over the economic rationale for these instruments. Many observers have expressed alarm over the growth and size of the market, arguing that interest rate swaps and other derivative instruments threaten the stability of financial markets. Recently, such fears have led both legislators and bank regulators to consider measures to curb the growth of the market. Several legislators have begun to promote initiatives to create an entirely new regulatory agency to supervise derivatives trading activity. Underlying these initiatives is the premise that derivative instruments increase aggregate risk in the economy, either by encouraging speculation or by burdening firms with risks that management does not understand fully and is incapable of controlling.1

To be certain, much of this criticism is aimed at many of the more exotic derivative instruments that have begun to appear recently. Nevertheless, it is difficult, if not impossible, to appreciate the economic role of these more exotic instruments without an understanding of the role of the interest rate swap, the most basic of the new generation of financial derivatives. -

Interest Rate Swap Policy

INTEREST RATE SWAP POLICY, September 2011

Table of Contents

I. Introduction
II. Scope and Authority
III. Conditions for the Use of Interest Rate Swaps
A. General Usage
B. Maximum Notional Amount
C. Liquidity Considerations
D. Call Option Value Considerations
IV. Interest Rate Swap Features
A. Interest Rate Swap Agreement
B. Interest Rate Swap Counterparties
C. Term and Notional Amount
D. Collateral Requirements
E. Security and Source of Repayment
G. Prohibited Interest Rate Swap Features -

The Hidden Costs of Non-Zero Interest Rate Floors in European Variable-Rate Debt Facilities

The Hidden Costs of Non-Zero Interest Rate Floors in European Variable-Rate Debt Facilities. White Paper, March 2015.

EXECUTIVE SUMMARY

Floors above zero percent1 in leveraged finance transactions are not nearly as prevalent in Europe as they have been in the USA, but they have featured in an increasing proportion of European deals in recent years. The rationale for a floor is simple enough: when absolute levels of interest rates are depressed, it provides a targeted minimum yield for lenders without increasing the loan margin.

In practical terms, however, floors impose two sets of burdens on the borrower:

1. Direct and indirect increases to interest expense, with the latter resulting from the time value inherent to options.
2. Considerable accounting complexity for the debt and any interest rate hedging under IFRS.

The goal of this paper is to explain the effects of these floors and conclude with a few examples of perhaps more efficient and less burdensome alternate means to satisfy the rationale for non-zero floors in variable-rate facilities.

Although any lender could include a floor in a variable-rate loan, the feature has been most prevalent in the higher-risk corporate debt facilities in leveraged buy-out transactions. Their incidence in European leveraged finance markets has been fairly low until recently, but it is not clear whether the trend will continue.

It is important to clarify the mechanics of a floor in a loan before delving into the details. To illustrate the concepts and to maintain consistency, this paper considers the following example throughout, using market data from the end of June 2014:

• Seven-year euro-denominated Term Loan “B” facility
• 500bp2 margin over EURIBOR
• 1.00% floor / minimum EURIBOR3 provision
• Prevailing EURIBOR of 0.25% is substantially below the floor level
• Market forward curve “implies”4 that EURIBOR will exceed 1.00% by end of year four

Diagram 1 -

Derivatives Primer Analyst: Michele Wong

The NAIC’s Capital Markets Bureau monitors developments in the capital markets globally and analyzes their potential impact on the investment portfolios of U.S. insurance companies. A list of archived Capital Markets Bureau Primers is available via the INDEX. Derivatives Primer Analyst: Michele Wong Executive Summary • Derivatives are contracts whose value is based on an observable underlying value—a security’s or commodity’s price, an interest rate, an exchange rate, an index, or an event. • U.S. insurers primarily use derivatives to hedge risks (such as interest rate risk, credit risk, currency risk and equity risk) and, to a lesser extent, replicate assets and generate additional income. • The primary risks associated with derivatives use include market risk, liquidity risk and counterparty risks. • Derivatives use laws, NAIC model regulations, derivatives use plans and statutory accounting requirements are important components in the oversight of derivatives use by U.S. insurers. What Are Derivatives? Derivatives are contracts whose value, at one or more future points in time, is based on an observable underlying value—a security’s or commodity’s price, an interest rate, exchange rate, index, or event, such as a credit default. The derivatives contract is between two parties and specifies terms under which payments are to be made, depending on fluctuations in the value of the underlying. Specified terms typically include notional amount, price, premium, maturity date, delivery date, and reference entity or underlying asset. Statement of Statutory Accounting Principles (SSAP) No. 86 – Derivatives defines a “derivative instrument” as “an agreement, option, instrument or a series or combination thereof: a.