Institute for Software Integrated Systems Vanderbilt University Nashville, Tennessee, 37212 a Case Study on the Application of S

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Egyptian Literature

The Project Gutenberg EBook of Egyptian Literature This eBook is for the use of anyone anywhere at no cost and with almost no restrictions whatsoever. You may copy it, give it away or re-use it under the terms of the Project Gutenberg License included with this eBook or online at http://www.gutenberg.org/license Title: Egyptian Literature Release Date: March 8, 2009 [Ebook 28282] Language: English ***START OF THE PROJECT GUTENBERG EBOOK EGYPTIAN LITERATURE*** Egyptian Literature Comprising Egyptian Tales, Hymns, Litanies, Invocations, The Book Of The Dead, And Cuneiform Writings Edited And With A Special Introduction By Epiphanius Wilson, A.M. New York And London The Co-Operative Publication Society Copyright, 1901 The Colonial Press Contents Special Introduction. 2 The Book Of The Dead . 7 A Hymn To The Setting Sun . 7 Hymn And Litany To Osiris . 8 Litany . 9 Hymn To R ....................... 11 Hymn To The Setting Sun . 15 Hymn To The Setting Sun . 19 The Chapter Of The Chaplet Of Victory . 20 The Chapter Of The Victory Over Enemies. 22 The Chapter Of Giving A Mouth To The Overseer . 24 The Chapter Of Giving A Mouth To Osiris Ani . 24 Opening The Mouth Of Osiris . 25 The Chapter Of Bringing Charms To Osiris . 26 The Chapter Of Memory . 26 The Chapter Of Giving A Heart To Osiris . 27 The Chapter Of Preserving The Heart . 28 The Chapter Of Preserving The Heart . 29 The Chapter Of Preserving The Heart . 30 The Chapter Of Preserving The Heart . 30 The Heart Of Carnelian . 31 Preserving The Heart . 31 Preserving The Heart . -

Neter Aaru Chapter 1

THE AWAKENING OF GODS At the end of the battle against the god Hades, Athena and her saints were trapped on the Elysion mountains, leaving Earth without the protection of the goddess… This allowed all the gods sealed away by Athena since the era of mythology to be released. At that time, Athena's Sanctuary was left under the protection of a few remaining saints. Temple of Osiris, Abydos (Egypt): it is within this temple that the spirits of the goddess Isis and the god Osiris are sealed. They are protected by the twelve cloths Neter Aaru (heavenly guardians). The time for my revenge has finally come! But before that, I must wake the guards... Now that the twelve Neter Aaru have met, it is necessary to go to Athena's Sanctuary... and to bring back Athena's shield, hidden at the end of the twelve temples! This shield is the only way to restore life to Osiris... Let me handle this mission! In truth, I was thinking about Nebka because he studied in the Sanctuary. Got a problem with me leading this mission? What I don't understand is why the gods rely on someone who's not even Egyptian! And I believe that the gods know who to trust and who not! Don't lose sight! ATHENA'S SANCTUARY Don't go further if you value your life! What was once one of the most powerful places of this world is now protected by worms... I regret that the one I once called master will die by the hands of this worm.. -

The Constitution of the Individual and the Afterlife in Ancient Egypt As Portrayed in the Secret Doctrine of H.P

The veil of Egypt: the constitution of the individual and the afterlife in Ancient Egypt as portrayed in The Secret Doctrine of H.P. Blavatsky, co-founder of the Theosophical Society by Dewald Bester submitted in accordance with the requirements for the degree of Master of Arts In the subject Religious Studies at the University of South Africa Supervisor: Professor M Clasquin-Johnson November 2012 Student number: 0754-914-8 I declare that The veil of Egypt: the constitution of the individual and the afterlife in Ancient Egypt as portrayed in The Secret Doctrine of H.P. Blavatsky, co-founder of the Theosophical Society is my own work and that all the sources that I have used or quoted have been indicated and acknowledged by means of complete references. ----------------------------------- ----------------------------------- SIGNATURE DATE Summary The Secret Doctrine is the magnum opus of H.P. Blavatsky and one of the foundation texts of the Theosophical Society. It represents her attempt to appropriate authority in a wide variety of fields, including, science, religion, and philosophy. This study examines H.P. Blavatsky’s engagement with Ancient Egypt in relation to two specific themes, the constitution of the individual and the afterlife, as they are portrayed in this work. It locates Theosophy in its historical context, the late nineteenth century, in relation to various fields of knowledge. It reviews the sources that H.P. Blavatsky drew on in her work and discusses the various interpretive techniques she employed to insert Theosophical content into various world religions. Finally, it contrasts the Theosophical presentation of Ancient Egypt in The Secret Doctrine with that of mainstream modern Egyptology. -

How Ancient Cultures Perceived Mires and Wetlands (3000 BCE – 500 CE): an Introduction

Pim de Klerk & Hans Joosten How ancient cultures perceived mires and wetlands (3000 BCE – 500 CE): an introduction IMCG Bulletin 2019-04 (May-July) pages 4-15 Egyptian wetland scene, c. 1900 BCE, Memphite region; Metropolitan Museum of Art, New York 1 Ground truthing peatland occurrence along the Ethiopian-S.-Sudanese border near Gambela. Photo: Hans Joosten. IMCG Bulletin 2019-04: May – July 2019 www.imcg.net Contents of this Bulletin IMCG issues Word from the Secretary-General 02 Tribute to Richard Payne 02 Mires and Peat 03 Papers How ancient cultures perceived mires and wetlands (3000 BCE – 500 CE): an introduction 04 Peatland news Global: Permafrost, Arctic fires, monitoring, MEA synergies, Ramsar, ITPC etc. 16 Africa 25 Republic of Congo 25 South-Africa 27 Asia 28 China 28 Indonesia 28 Malaysia 40 Europe - European Union 41 Belarus 42 Germany 43 Latvia 44 Netherlands 45 Russian Federation 48 Slovakia 49 United Kingdom 49 North- and Central America - United States of America 55 New peatland conservation relevant papers 56 4 How ancient cultures perceived mires and wetlands (3000 BCE – 500 CE): an introduction Pim de Klerk (Greifswald Mire Centre/State Museum of Natural History Karlsruhe, [email protected]) & Hans Joosten (Greifswald Mire Centre; [email protected]) The reconstruction of the past development of peat- and wetlands is normally the task of a wide variety of biological and earth-scientific disciplines (Birks & Birks 1980; Berglund 1986). An important source is, however, often overlooked: contemporary written accounts of eye-witnesses of these landscape types. Written records are generally considered to belong to the realms of linguistics, literature, history and theology, which often prevents them from being interpreted using the most recent insights of biology, (palaeo)ecology and earth sciences. -

The Celestial Ferryman in Ancient Egyptian Religion "Sailor of the Dead"

Journal of the General Union of Arab Archaeologists Volume 1 Issue 1 Article 6 2016 The Celestial Ferryman in Ancient Egyptian Religion "Sailor of the Dead". Dr.Radwan Abdel-Rady Sayed Ahmed Aswan University, [email protected] Follow this and additional works at: https://digitalcommons.aaru.edu.jo/jguaa Part of the History of Art, Architecture, and Archaeology Commons Recommended Citation Sayed Ahmed, Dr.Radwan Abdel-Rady (2016) "The Celestial Ferryman in Ancient Egyptian Religion "Sailor of the Dead".," Journal of the General Union of Arab Archaeologists: Vol. 1 : Iss. 1 , Article 6. Available at: https://digitalcommons.aaru.edu.jo/jguaa/vol1/iss1/6 This Article is brought to you for free and open access by Arab Journals Platform. It has been accepted for inclusion in Journal of the General Union of Arab Archaeologists by an authorized editor. The journal is hosted on Digital Commons, an Elsevier platform. For more information, please contact [email protected], [email protected], [email protected]. The Celestial Ferryman in Ancient Egyptian Religion "Sailor of the Dead". Cover Page Footnote * Lecturer of Egyptology, Egyptology Department, Faculty of Archaeology - Aswan University, Aswan, Egypt. [email protected] My thanks, gratitude and affection to Professor Penelope Wilson (England) and Dr. Ayman Wahby Taher (Egypt) for their help and useful notes, as they gave the manuscript a final revision and corrected the English.
This article is available in Journal of the General Union of Arab Archaeologists: https://digitalcommons.aaru.edu.jo/jguaa/vol1/iss1/6 The Celestial Ferryman in Ancient Egyptian Religion "Sailor of the Dead" Dr.Radwan Abdel-Rady Sayed Ahmed* Abstract: In the ancient Egyptian religion, the ferryman was generally called (¡r.f-HA.f) and depicted as a sailor or a boatman standing in the stern of a papyrus boat. -

HEBET EN BA: the Egyptian Mystical Rites

HEBET EN BA: The Egyptian Mystical Rites Alexander the Great was 'converted' by it.. and spent the rest of his short but eventful life trying to fit his Macedonian heritage into Egypt's magical power. Julius Caesar was won over not only by Cleopatra but by the Egypt over which she was Queen.. as was Mark Antony, after him. Napoleon was mesmerized by it, and ordered his army to survey and catalog all of the ancient wonders they found in Egypt's mysterious sands. From Rome to Paris, London and Washington DC, Egyptian obelisks have been raised over the great cities of the world, in mute testimony to the magical spell of Egypt. I was 'spell-bound' by it too, from the time I first saw pictures of the ancient relics of Egypt in my school-books. I could not explain why, but something 'different' happened to me when I looked at those pictures.. something that never happened at all when I was not looking upon the Magic of Egypt. It is an Enigma, like the Sphinx which is its most famous symbol. Any who have been touched by its spell can neither "explain" it nor solve its riddle. But we CAN surrender to it, if we wish. For generations now the ancient texts have been available to us, to study; Hebet En Ba: the Book Of Rites. It was left for us, carved and painted upon tomb-walls, written on papyrus scrolls buried with scribes, viziers and kings. Sure, you have to be able to 'weed out' of the scrolls the lengthy Funerary Prayers (for the great Rites were preserved, intermingled with the Funeral Rites as well). -

The Egyptian Book of the Dead, Nuclear Physics and the Substratum

The Egyptian Book of the Dead, Nuclear Physics and the Substratum By John Frederick Sweeney Abstract The Egyptian Book of the Dead, a collection of coffin texts, has long been thought by Egyptologists to describe the journey of the soul in the afterlife, or the Am Duat. In fact, the so – called Book of the Dead describes the invisible Substratum, the “black hole” form of matter to which all matter returns, and from which all matter arises. The hieroglyphics of the Papyrus of Ani, for example, do not describe the journey of the soul, but the creation of the atom. This paper gives evidence for the very Ancient Egyptians as having knowledge of a higher mathematics than our own civilization, including the Exceptional Lie Algebras E6 and G2, the Octonions and Sedenions, as well as the Substratum and the nuclear processes that occur there. The Osiris myth represents a general re – telling of the nuclear processes which occur within the Substratum, the invisible “black hole” form of matter. 1 Table of Contents Introduction 3 Book of the Dead / Wikipedia 5 The Papyrus of Ani 11 Octonions 14 Sedenions 15 The Exceptional Lie Algebra G2 19 Conclusion 22 Bibliography 25 Appendix I The Osiris Legend 26 Appendix II 42 Negative Confessions (Papyrus of Ani) 41 Appendix III Fields of Aaru 44 Appendix III The Am Duat 45 Cover Illustration This detail scene, from the Papyrus of Hunefer (ca. 1275 BCE), shows the scribe Hunefer's heart being weighed on the scale of Maat against the feather of truth, by the jackal-headed Anubis. -

Osiris and the Egyptian Resurrection

CORNELL UNIVERSITY LIBRARY The original of this book is in the Cornell University Library. There are no known copyright restrictions in the United States on the use of the text. http://www.archive.org/details/cu31924031011707 OSIRIS & THE EGYPTIAN RESURRECTION E. A. WALLIS BUDGE Osiris, the king, was slain by his brother Set, dismembered, scattered, then gathered up and reconstituted by his wife Isis and finally placed in the underworld as lord and judge of the dead. He was worshipped in Egypt from archaic, pre-dynastic times right through the 4000-year span of classical Egyptian civilization up until the Christian era, and even today folkloristic elements of his worship survive among the Egyptian fellaheen. In this book E. A. Wallis Budge, one of the world's foremost Egyptologists, focuses on Osiris as the single most important Egyptian deity. This is the most thorough explanation ever offered of Osirism. With rigorous scholarship, going directly to numerous Egyptian texts, making use of the writings of Herodotus, Diodorus, Plutarch and other classical writers, and of more recent ethnographic research in the Sudan and other parts of Africa, Wallis Budge examines every detail of the cult of Osiris. At the same time he establishes a link between Osiris worship and African religions.
He systematically investigates such topics as: the meaning of the name "Osiris" (in Egyptian, Asar); the iconography associated with him; the heaven of Osiris as conceived in the VIth dynasty; Osiris's relationship to cannibalism, human sacrifice and dancing; Osiris as ancestral spirit, judge of the dead, moon-god and bull-god; the general African belief in God; ideas of sin and purity in Osiris worship; the shrines, miracle play and mysteries of Osiris; "The Book of Making the Spirit of Osiris" and other liturgical texts; funeral and burial practices of the Egyptians and Africans; the idea of the Ka, spirit-body and shadow; magical practices relating to Osiris; and the worship of Osiris and Isis in foreign lands. -

The Sacredness of Some Seals and Its Relationship to God Thoth

Journal of the General Union of Arab Archaeologists Volume 1 Issue 1 Article 4 2016 The Sacredness of Some Seals and its Relationship to God Thoth Dr.Hayam Hafez Rawash Cairo University, [email protected] Follow this and additional works at: https://digitalcommons.aaru.edu.jo/jguaa Part of the History of Art, Architecture, and Archaeology Commons Recommended Citation Rawash, Dr.Hayam Hafez (2016) "The Sacredness of Some Seals and its Relationship to God Thoth," Journal of the General Union of Arab Archaeologists: Vol. 1 : Iss. 1 , Article 4. Available at: https://digitalcommons.aaru.edu.jo/jguaa/vol1/iss1/4 This Article is brought to you for free and open access by Arab Journals Platform. It has been accepted for inclusion in Journal of the General Union of Arab Archaeologists by an authorized editor. The journal is hosted on Digital Commons, an Elsevier platform. For more information, please contact [email protected], [email protected], [email protected]. The Sacredness of Some Seals and its Relationship to God Thoth Dr.Hayam Hafez Rawash Abstract: Seals played an important role in ancient Egypt. They were used not only as administrative securing devices for the state bureaucracy, private individuals, documents, containers and places, but also as amulets during daily life and in the netherworld. Some features confirm the sacredness of seals, such as: • Seals as devices of protection. • The crime of breaking the seal. • The seal and sacredness of the place. -

The Milk Goddess in Ancient Egyptian Theology

Journal of the General Union of Arab Archaeologists Volume 2 Issue 1 issue 1 Article 2 2017 The Milk Goddess in Ancient Egyptian Theology. Ayman Mohamed Ahmed Damanhour University, [email protected] Follow this and additional works at: https://digitalcommons.aaru.edu.jo/jguaa Part of the History of Art, Architecture, and Archaeology Commons, and the Museum Studies Commons Recommended Citation Ahmed, Ayman Mohamed (2017) "The Milk Goddess in Ancient Egyptian Theology.," Journal of the General Union of Arab Archaeologists: Vol. 2 : Iss. 1 , Article 2. Available at: https://digitalcommons.aaru.edu.jo/jguaa/vol2/iss1/2 This Article is brought to you for free and open access by Arab Journals Platform. It has been accepted for inclusion in Journal of the General Union of Arab Archaeologists by an authorized editor. The journal is hosted on Digital Commons, an Elsevier platform. For more information, please contact [email protected], [email protected], [email protected]. IAt The Milk Goddess in Ancient Egyptian Theology Dr. Ayman Mohamed Ahmed Mohamed Abstract: This paper tackles the question of the deity (IAt) in ancient Egyptian theology. It aims at presenting a complete overview of the deity in ancient Egyptian art and scripts in an attempt to consolidate its origin and track the forms in which it was portrayed as well as accounting for its features, symbol and role in ancient Egyptian theology since so far there has been no study tackling this deity. -

Resurrection Machines: an Analysis of Burial Sites in Ancient Egypt's Valley of the Kings As Catalysts for Spiritual Rebirth

Grand Valley State University ScholarWorks@GVSU Student Summer Scholars Undergraduate Research and Creative Practice Summer 2009 Resurrection Machines: An Analysis of Burial Sites in Ancient Egypt’s Valley of the Kings as Catalysts for Spiritual Rebirth Jarrett Zeman Grand Valley State University, [email protected] Follow this and additional works at: http://scholarworks.gvsu.edu/sss Part of the Anthropology Commons Recommended Citation Zeman, Jarrett, "Resurrection Machines: An Analysis of Burial Sites in Ancient Egypt’s Valley of the Kings as Catalysts for Spiritual Rebirth" (2009). Student Summer Scholars. 14. http://scholarworks.gvsu.edu/sss/14 This Open Access is brought to you for free and open access by the Undergraduate Research and Creative Practice at ScholarWorks@GVSU. It has been accepted for inclusion in Student Summer Scholars by an authorized administrator of ScholarWorks@GVSU. For more information, please contact [email protected]. Table of Contents Note: Pages correspond to the numbers that appear in the bottom right hand corner of each page. They do not correspond to the page numbers indicated by Adobe Reader. List of Figures and Tables…………………………………………….……………..………..2 Introduction…………………………………………………………….……………..……...3 Background…………………………………………………………………………………..9 Theory…………………………………………….…………………………………………13 Methods…………………………………………………………….……………………….17 Architectural Structure of Valley Tombs…………………………….…………….………..19 Tomb Orientation, Symbolism, and the Link to Dung Beetles……….……..….........19 Well Shafts/Chambers in -

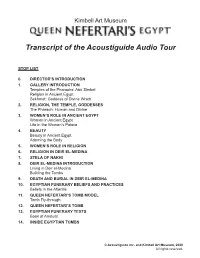

Transcript of the Acoustiguide Audio Tour

Kimbell Art Museum Transcript of the Acoustiguide Audio Tour STOP LIST 0. DIRECTOR’S INTRODUCTION 1. GALLERY INTRODUCTION Temples of the Pharaohs: Abu Simbel Religion in Ancient Egypt Sekhmet: Goddess of Divine Wrath 2. RELIGION, THE TEMPLE, GODDESSES The Pharaoh: Human and Divine 3. WOMEN’S ROLE IN ANCIENT EGYPT Women in Ancient Egypt Life in the Women’s Palace 4. BEAUTY Beauty in Ancient Egypt Adorning the Body 5. WOMEN’S ROLE IN RELIGION 6. RELIGION IN DEIR EL-MEDINA 7. STELA OF NAKHI 8. DEIR EL-MEDINA INTRODUCTION Living in Deir el-Medina Building the Tombs 9. DEATH AND BURIAL IN DEIR EL-MEDINA 10. EGYPTIAN FUNERARY BELIEFS AND PRACTICES Beliefs in the Afterlife 11. QUEEN NEFERTARI’S TOMB MODEL Tomb Fly-through 12. QUEEN NEFERTARI’S TOMB 13. EGYPTIAN FUNERARY TEXTS Book of Amduat 14. INSIDE EGYPTIAN TOMBS © Acoustiguide Inc. and Kimbell Art Museum, 2020 All rights reserved. 0. DIRECTOR’S INTRODUCTION ERIC LEE: Hello, I’m Eric Lee, director of the Kimbell Art Museum, and I'm pleased to welcome you to Queen Nefertari’s Egypt. The superb works of art you will see today come to us from the renowned Museo Egizio in Turin, Italy, which holds one of the most important collections of ancient Egyptian art outside of Cairo. Nefertari was a celebrated queen of ancient Egypt, and the beloved royal wife of pharaoh Ramesses II, one of the greatest rulers in Egypt’s long history. [Image caption: Queen before the divine scribe Thoth. Mural painting, Tomb of Nefertari. Photo: S. Vannini. © DeA Picture Library / Art Resource, NY]