Are Spiders Eating Your Servers? the Impact of Their Unexpected Load and How to Counter It

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Presentation Slides

ColdBox Platform 4.0 AND BEYOND Who am I? ● ColdFusion Architect (12 years) ● Geek ● Android Lover ● Blogger (codersrevolution.com) ● ColdBox Platform Evangelist ● Musician ● Shade-Tree Mechanic ● Husband (11 years) ● Dad (3 beautiful girls) What we will cover? History of ColdBox What is ColdBox? Why ColdBox? Major ColdBox Parts Demos History & Facts Did not start as open source Designed and built for a high availability application in 2005 1st Conventions CFML Framework in 2006 30 releases -> 3.8.1 Active roadmap, collaboration & development High-profile company adoptions Community Large and Active Community Incredible download rates Mailing List & Forums growth Many hours of video training ForgeBox : *Box CFML Community! Share modules, plugins, interceptors, contentbox, etc Professional Open Source Company backing and funding Professional Training Courses Books Support & Mentoring Plans Architecture & Design Sessions Server Tuning & Optimizations Code Reviews & Sanity Checks Dancing lessons Why use a framework? Common Vocabulary Separation of Concerns Tested in multiple environments Performance-tuned Reduces ramp up time for new developers Do not reinvent the wheel Should address most infrastructure concerns Increases Maintainability, Scalability, and keeps your sanity! What is ColdBox? A place for root beer? “Address most infrastructure concerns of typical ColdFusion applications” How we build our apps? Usually start with a need for MVC Requirements are more than just MVC MVC is not enough What about? SES/URL Mappings RESTful Services -

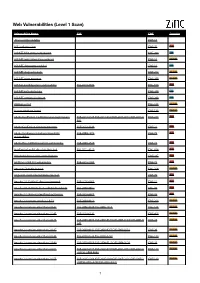

Web Vulnerabilities (Level 1 Scan)

Web Vulnerabilities (Level 1 Scan) Vulnerability Name CVE CWE Severity .htaccess file readable CWE-16 ASP code injection CWE-95 High ASP.NET MVC version disclosure CWE-200 Low ASP.NET application trace enabled CWE-16 Medium ASP.NET debugging enabled CWE-16 Low ASP.NET diagnostic page CWE-200 Medium ASP.NET error message CWE-200 Medium ASP.NET padding oracle vulnerability CVE-2010-3332 CWE-310 High ASP.NET path disclosure CWE-200 Low ASP.NET version disclosure CWE-200 Low AWStats script CWE-538 Medium Access database found CWE-538 Medium Adobe ColdFusion 9 administrative login bypass CVE-2013-0625 CVE-2013-0629CVE-2013-0631 CVE-2013-0 CWE-287 High 632 Adobe ColdFusion directory traversal CVE-2013-3336 CWE-22 High Adobe Coldfusion 8 multiple linked XSS CVE-2009-1872 CWE-79 High vulnerabilies Adobe Flex 3 DOM-based XSS vulnerability CVE-2008-2640 CWE-79 High AjaxControlToolkit directory traversal CVE-2015-4670 CWE-434 High Akeeba backup access control bypass CWE-287 High AmCharts SWF XSS vulnerability CVE-2012-1303 CWE-79 High Amazon S3 public bucket CWE-264 Medium AngularJS client-side template injection CWE-79 High Apache 2.0.39 Win32 directory traversal CVE-2002-0661 CWE-22 High Apache 2.0.43 Win32 file reading vulnerability CVE-2003-0017 CWE-20 High Apache 2.2.14 mod_isapi Dangling Pointer CVE-2010-0425 CWE-20 High Apache 2.x version equal to 2.0.51 CVE-2004-0811 CWE-264 Medium Apache 2.x version older than 2.0.43 CVE-2002-0840 CVE-2002-1156 CWE-538 Medium Apache 2.x version older than 2.0.45 CVE-2003-0132 CWE-400 Medium Apache 2.x version -

B 0313 DEP1900000019 01.Pdf

The following documentation is an electronically‐ submitted vendor response to an advertised solicitation from the West Virginia Purchasing Bulletin within the Vendor Self‐Service portal at wvOASIS.gov. As part of the State of West Virginia’s procurement process, and to maintain the transparency of the bid‐opening process, this documentation submitted online is publicly posted by the West Virginia Purchasing Division at WVPurchasing.gov with any other vendor responses to this solicitation submitted to the Purchasing Division in hard copy format. Purchasing Division State of West Virginia 2019 Washington Street East Solicitation Response Post Office Box 50130 Charleston, WV 25305-0130 Proc Folder : 536470 Solicitation Description : Lucee Software Support Proc Type : Central Contract - Fixed Amt Date issued Solicitation Closes Solicitation Response Version 2019-02-26 SR 0313 ESR02211900000003842 1 13:30:00 VENDOR VS0000017430 RASIA INC Solicitation Number: CRFQ 0313 DEP1900000019 Total Bid : $2,747,100.00 Response Date: 2019-02-22 Response Time: 02:05:54 Comments: FOR INFORMATION CONTACT THE BUYER Jessica S Chambers (304) 558-0246 [email protected] Signature on File FEIN # DATE All offers subject to all terms and conditions contained in this solicitation Page : 1 FORM ID : WV-PRC-SR-001 Line Comm Ln Desc Qty Unit Issue Unit Price Ln Total Or Contract Amount 1 Lucee or Equal Support 110.00000 HOUR $24,750.000000 $2,722,500.00 Comm Code Manufacturer Specification Model # 81111811 Extended Description : Lucee or Equal Server Support, Application Development Support, and CFML Conversion from Adobe Cold Fusion to Lucee Support for one (1) year. Up to 110 hours total for all listed support. -

Towards Left Duff S Mdbg Holt Winters Gai Incl Tax Drupal Fapi Icici

jimportneoneo_clienterrorentitynotfoundrelatedtonoeneo_j_sdn neo_j_traversalcyperneo_jclientpy_neo_neo_jneo_jphpgraphesrelsjshelltraverserwritebatchtransactioneventhandlerbatchinsertereverymangraphenedbgraphdatabaseserviceneo_j_communityjconfigurationjserverstartnodenotintransactionexceptionrest_graphdbneographytransactionfailureexceptionrelationshipentityneo_j_ogmsdnwrappingneoserverbootstrappergraphrepositoryneo_j_graphdbnodeentityembeddedgraphdatabaseneo_jtemplate neo_j_spatialcypher_neo_jneo_j_cyphercypher_querynoe_jcypherneo_jrestclientpy_neoallshortestpathscypher_querieslinkuriousneoclipseexecutionresultbatch_importerwebadmingraphdatabasetimetreegraphawarerelatedtoviacypherqueryrecorelationshiptypespringrestgraphdatabaseflockdbneomodelneo_j_rbshortpathpersistable withindistancegraphdbneo_jneo_j_webadminmiddle_ground_betweenanormcypher materialised handaling hinted finds_nothingbulbsbulbflowrexprorexster cayleygremlintitandborient_dbaurelius tinkerpoptitan_cassandratitan_graph_dbtitan_graphorientdbtitan rexter enough_ram arangotinkerpop_gremlinpyorientlinkset arangodb_graphfoxxodocumentarangodborientjssails_orientdborientgraphexectedbaasbox spark_javarddrddsunpersist asigned aql fetchplanoriento bsonobjectpyspark_rddrddmatrixfactorizationmodelresultiterablemlibpushdownlineage transforamtionspark_rddpairrddreducebykeymappartitionstakeorderedrowmatrixpair_rddblockmanagerlinearregressionwithsgddstreamsencouter fieldtypes spark_dataframejavarddgroupbykeyorg_apache_spark_rddlabeledpointdatabricksaggregatebykeyjavasparkcontextsaveastextfilejavapairdstreamcombinebykeysparkcontext_textfilejavadstreammappartitionswithindexupdatestatebykeyreducebykeyandwindowrepartitioning -

Configuring Multiple Instances of Railo on Windows The

Vivio Technologies Inc. POB 345 Walla Walla, WA 99362 Toll Free – 1.877.44VIVIO 1.877.448.4847 Direct – 1.509.593.4207 http://www.viviotech.net/ Configuring Multiple Instances of Railo on Windows The purpose of this guide is to explain how to set up multiple instances of Railo on a single instance of Windows. The instances can then be used for redundancy, load-balancing, etc. This guide was written specifically for Windows 2003. Setting up multiple instances on other versions of Windows should be quite similar, but not identical, so adapt as necessary in order to accomplish the same thing in your specific environment. We will be using the Railo 3.3.2 installers for this document, as they are the most recent at the time this document was created. Further, they utilize the BonCode Connector, which was specifically written for CFML. System Shortlist: Windows Server 2003 IIS6 pre-installed Railo 3.3.2.002 (railo-3.3.2.002-pl0-windows-installer.exe) Logged in as “Administrator” Installing the First Instance of Railo/Tomcat Installing the first instance of Railo is the easy part. It's a typical install with some customizations that allow it to work better in a multiple-instance server. 1. Download the installer from http://railo.viviotech.net/ 2. Launch the installer, and set the installation directory to “C:\railo01\” - this will help us properly identify our multiple installations later on. Vivio Technologies Inc. POB 345 Walla Walla, WA 99362 Toll Free – 1.877.44VIVIO 1.877.448.4847 Direct – 1.509.593.4207 http://www.viviotech.net/ 3. -

PHEWR Installation Guide (Version 3)

PHEWR Installation Guide (version 3) Introduction Included in this Zip File: ● Database - sql scripts to install database objects ● Admin - directory structure containing the files necessary to run the PHEWR administration module ● Public - directory structure containing the files necessary for public to register for events ● User Guide System Requirements ● IIS 6.0 ● Configure IIS for Silverlight applications * ● SQL Server 2005 ● Framework 4.0 ● WCF RIA Services V1.0 for Visual Studio 2010 * Please review the included document Prerequisite Guide for PHEWR Admin to review steps required to properly configure IIS for silverlight applications Public Module ● Adobe ColdFusion 9+ or Railo (Open Source) 3.3+ 1 Installation Move the phewr.zip file to the root of your local hard drive and unzip. This will create a directory called Phewr. All references to source files are relative to this Phewr directory. Note that the Admin and Public websites do NOT have to reside on the same web server. PHEWR Database In order to create the Phewr database you should have an existing SQL Server 2005 setup and be able to login with administrative rights. Phewr has currently not been tested with SQL Server 2008. 1. Using SQL Server Management Studio, login to SQL Server as the sa user or a user that has rights to create a new database. ○ Right-click on the database folder in the left most window and select “New Database”. 2. Create a new database called Phewr. Make the datafile 10MB and the log file 10MB. ○ Select and click on the ‘Initial size” column of the corresponding file and set the size to 10 mb for each file. -

The Definitive Guide to the Coldbox Platform

“Building Sustainable ColdFusion Applications” The Definitive Guide To The ColdBox Platform (Covers up to version 2.6.3: Renewed) By Luis F. Majano Copyright © 2009 ISBN 1449907865 EAN-13 9781449907860 Ortus Solutions, Corp & Luis Majano All rights reserved First Edition The inforMation contained in this docuMent is subject to change without notice. The inforMation contained in this docuMent is the exclusive property of Ortus Solutions, Corp. This work is protected under United States copyright law and the copyright laws of the given countries of origin and applicable international laws, treaties, and/or conventions. No part of this work May be reproduced or transMitted in any forM or by any means, electronic or mechanical, including photocopying or recording, or by any inforMation storage or retrieval systeM, except as expressly perMitted in writing by Ortus Solutions, Corp. All requests should be sent to [email protected] ColdBox FraMework, ColdBox PlatforM, ColdBox PlatforM Training Series are copyrighted software and content service Marks of Ortus Solutions, Corp. Mention of other fraMeworks and software are Made on this book, which are exclusive copyright property of their respective authors and not Ortus Solutions, Corp. External Trademarks & Copyrights Flash, Flex, ColdFusion, and Adobe are registered tradeMarks and copyrights of Adobe SysteMs, Inc. Railo is a tradeMark and copyright of Railo Technologies, GMbH Notice of Liability The inforMation in this book is distributed “as is”, without warranty. The author and Ortus Solutions, Corp shall not have any liability to any person or entity with respect to loss or daMage caused or alleged to be caused directly or indirectly by the content of this training book, software and resources described in it. -

Commonspot™ 7.0.1 Service Pack Release Notes

™ CommonSpot 7.0.1 Service Pack Release Notes Copyright 1998-2013 PaperThin, Inc. All rights reserved . CommonSpot 7.0.1 Release Notes About this Document This document summarizes the following for this service pack: • Important Notes • Enhancements • Notable Bug Fixes For detailed instructions for installing CommonSpot, see the Installation Guide appropriate for the release you are currently using. For detailed instructions on upgrading from a previous version of CommonSpot, see the appropriate release of the CommonSpot Upgrade Guide . For the latest system requirements, see http://www.paperthin.com/support/tech-specs.cfm - Page 2 - CommonSpot 7.0.1 Release Notes Important Notes Schema Update You must run a schema update as part of the 7.0.1 update by navigating to {yoursite}/commonspot/upgrade. Although this service pack contains no schema changes, updates to CommonSpot “factory” data require a database schema update. Browser Support Updates This release supports the following browsers for authoring in CommonSpot: • Firefox 10 ESR, Firefox 17 ESR • Internet Explorer 8, 9 • Internet Explore 10 (for Windows 7) • The latest versions of Chrome and • The latest version of Safari (for Mac only) NOTE: The latest version of Chrome does not support automatic download and installation of a clipboard helper for cut, copy, and paste operations in CommonSpot. For security reasons Firefox 17 ESR does not include programmatic clipboard access. The following considerations apply: • For Chrome support, search the Chrome store for the cs_paste_extension: https://chrome.google.com/webstore/search-extensions/paperthin Sites currently using the CommonSpot Chrome extension for authoring should replace it with the Chrome store version. -

Installation Guide

CommonSpot™ Winter 2012 Installation Guide Copyright 1998-2011 PaperThin, Inc. All rights reserved CommonSpot Winter 2012 Installation Guide 2 This CommonSpot Installation Guide, as well as the software described with it, is provided under the CommonSpot License Agreement and may be used, copied and distributed only in accordance with the terms of the license. The content outlined in this manual is for informational purposes only and is subject to change without notice. By no means should the information contained herein be construed, as a commitment by PaperThin, Inc. PaperThin assumes no responsibilities or liability for errors or inaccuracies found in this documentation. ColdFusion, Acrobat, and Flash are registered trademarks of Adobe Systems Incorporated. Microsoft, Windows, Microsoft SQL Server, Microsoft Word, Excel and PowerPoint, FrontPage and Windows NT are all registered trademarks of Microsoft Corporation. MySQL, Solaris, and Oracle are registered trademarks of Oracle Corporation, and Verity is a trademark of Autonomy, Inc. Railo is a product of Railo Technologies GmbH, Bern, Switzerland. This document was last updated March 15, 2012. Copyright 1998-2012 PaperThin, Inc. All rights reserved. CommonSpot Winter 2012 Installation Guide 3 Chapter 1 About the Installation Guide .......................................................................................................5 Chapter 2 Pre-Installation Requirements and Considerations......................................................................6 2.1. Requirements ...................................................................................................................................6 -

Oct 2016 Cfsummit

Keep Applications Online And Detect & Isolate Application Issues & Performance Bottlenecks In No Time With FusionReactor 6 Charlie Arehart, Independent Consultant CF Server Troubleshooter CArehart.org [email protected] @carehart (Tw, Fb, Li) What we’ll cover • Key new features of FusionReactor 6 • Low overhead Profiler • Production Debugger • Additional FR 6 improvements • Some FR 6 hidden gems • Quick overview of FR’s long-standing benefits • Sneak peek at FusionCLOUD (coming soon) Who does this talk apply to? • Anyone interested in troubleshooting/tuning servers; solving coding issues • Admins, managers, developers, devops • Whether using any CFML engine • ColdFusion, Lucee, Railo, BlueDragon • Or any Java application or server • Tomcat, JBoss, Wildfly, Jetty, WebSphere etc. • Or java applications: Solr, Confluence, etc. • FusionReactor is implemented simply as a javaagent • And is a low-cost, low-impact, reliable tool • Trusted for over 10 years by thousands of companies (over 30K servers world-wide) Key new features in FR 6 • Low overhead Profiler (in Ultimate edition) • Lets you see where the most time is spent within a request • Can change profiler default triggering at > 3 seconds; change poll interval (200 ms) • FusionReactor>plugins>active plugins>FusionReactor Low Cost Profiler • More: • www.fusion-reactor.com/production-java-profiler/ • www.fusion-reactor.com/evangelism/introducing-fusionreactors-production-java-profiler/ • docs.intergral.com/display/FR62/Profiler • … Key new features in FR 6 (cont.) • Production Debugger -

Commonspot™ Release 10.6 Upgrade Guide

CommonSpot™ Release 10.6 Upgrade Guide Copyright 1998-2019 PaperThin, Inc. All rights reserved CommonSpot 10.6.0 Upgrade Guide This CommonSpot Upgrade Guide, as well as the software described with it, is provided under the CommonSpot License Agreement and may be used, copied and distributed only in accordance with the terms of the license. The content outlined in this manual is for informational purposes only and is subject to change without notice. By no means should the information contained herein be construed, as a commitment by PaperThin, Inc. PaperThin assumes no responsibilities or liability for errors or inaccuracies found in this documentation. ColdFusion, Acrobat, and Flash are registered trademarks of Adobe Systems Incorporated. Microsoft, Windows, Microsoft SQL Server, Microsoft Word, Excel, Edge, and PowerPoint are all registered trademarks of Microsoft Corporation. MySQL, Solaris, and Oracle are registered trademarks of Oracle Corporation. Chrome is a registered trademark of Google. Safari is a registered Apple trademark. Firefox is a registered trademark of Mozilla. Lucee is a product of Lucee Association Switzerland; CKEditor is a registered trademark of CKSource. Apache Solr and their respective logos are trademarks of the Apache Software Foundation. This document was last updated October 2, 2019 2 CommonSpot 10.6.0 Upgrade Guide Contents Chapter 1 Upgrade Process and Steps.......................................................................................................................6 1.1. Pre-Upgrade Steps..........................................................................................................................................8 -

OMIH Project Hewlett-Packard and Intergral Information Solutions

SlowShop.com SuperShop.com A practical example of how FusionReactor v5 can identify 10 common problems in ColdFusion applications. Intergral Information Solutions David Stockton Senior Technical Consultant +1 (978) 496-9990 (USA) +44 (0)207 193 1212 (UK) +49 (0)7031 221 471 (DE) [email protected] Agenda 1. About me 2. End User Experience 3. If a tree falls in the woods... 4. Practical Examples 5. Key Points to take away today 6. Further Reading Intergral GmbH page 2 About Me • Technical Consultant at Intergral (the makers of the Fusion Product Suite) – Assisting our clients with a wide range of issues • 3rd level Fusion product support • Enterprise client technical pre-sales • Architecting solutions • Installation, configuration & tuning of platforms (OS, DB, App) • Training • Entire platform support & tuning • Custom Development • 13+ years Enterprise CF experience – E-Commerce, Publishing, Enterprise Search, Social Networking, Corporate Portals, Financials and Knowledge Management Intergral GmbH End User Experience What happens when you browse to a website? 1. DNS lookup 2. Network / Routing 3. Firewall 4. TCP handshake 5. Load Balancer 6. Web Server 7. HTTP 8. Web Connector (ISAPI / Apache Module) 9. JRun Proxy / AJP (Tomcat/CF10) 10. ColdFusion Server 11. CFML Code 12. DB / WebServices / Back-end 13. ... Then don’t forget the client-side asset (CSS, JS, IMG) retrieval, rendering and execution! Intergral GmbH End User Experience • We’re all great, pro-active engineers right? • So you have – Internal monitoring • ColdFusion monitor or perhaps FusionReactor v4 • Can see page generation time (and some back-end operations with FusionReactor v4) – External monitoring • Pingdom/Gomez/etc • Can see how long HTML takes to be retrieved or in some cases how long assets are downloaded & processed.