Smartphone Camera De-Identification While Preserving Biometric Utility

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Product ID Product Type Product Description Notes Price (USD) Weight (KG) SKU 10534 Mobile-Phone Apple Iphone 4S 8GB White 226.8

Rm A1, 10/F, Shun Luen Factory Building, 86 Tokwawan Road, Hong Kong. TEL: +852 2325 1867 FAX: +852 23251689 Website: http://www.ac-electronic.com/ For products not in our pricelist, please contact our sales. 29/8/2015

| Product ID | Product Type | Product Description | Notes | Price (USD) | Weight (KG) | SKU |
|---|---|---|---|---|---|---|
| 10534 | mobile-phone | Apple iPhone 4S 8GB White | | 226.8 | 0.5 | 40599 |
| 10491 | mobile-phone | Apple iPhone 5s 16GB Black Slate | | 486.4 | 0.5 | 40557 |
| 10497 | mobile-phone | Apple iPhone 5s 16GB Gold | | 495.6 | 0.5 | 40563 |
| 10494 | mobile-phone | Apple iPhone 5s 16GB White Silver | | 487.7 | 0.5 | 40560 |
| 10498 | mobile-phone | Apple iPhone 5s 32GB Gold | | 536.3 | 0.5 | 40564 |
| 11941 | mobile-phone | Apple iPhone 6 128GB Gold | | 784.1 | 0.5 | 41970 |
| 11939 | mobile-phone | Apple iPhone 6 16GB Gold | | 622.8 | 0.5 | 41968 |
| 11936 | mobile-phone | Apple iPhone 6 16GB Silver | | 633.3 | 0.5 | 41965 |
| 11942 | mobile-phone | Apple iPhone 6 16GB Space Grey | | 618.9 | 0.5 | 41971 |
| 11940 | mobile-phone | Apple iPhone 6 64GB Gold | | 705.4 | 0.5 | 41969 |
| 11937 | mobile-phone | Apple iPhone 6 64GB Silver | | 706.7 | 0.5 | 41966 |
| 11943 | mobile-phone | Apple iPhone 6 64GB Space Grey | | 708 | 0.5 | 41972 |
| 11963 | mobile-phone | Apple iPhone 6 Plus 128GB Silver | | 917.9 | 1 | 41991 |
| 11955 | mobile-phone | Apple iPhone 6 Plus 16GB Gold | | 755.3 | 1 | 41983 |
| 11961 | mobile-phone | Apple iPhone 6 Plus 16GB Silver | | 731.6 | 1 | 41989 |
| 11958 | mobile-phone | Apple iPhone 6 Plus 16GB Space Grey | | 735.6 | 1 | 41986 |
| 11956 | mobile-phone | Apple iPhone 6 Plus 64GB Gold | | 843.1 | 1 | 41984 |
| 11962 | mobile-phone | Apple iPhone 6 Plus 64GB Silver | | 841.8 | 1 | 41990 |
| 11959 | mobile-phone | Apple iPhone 6 Plus 64GB Space Grey | | 840.5 | 1 | 41987 |

12733 mobile-phone ASUS ZenFone 2 ZE550ML Dual SIM -

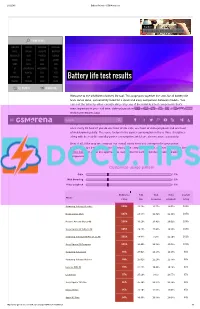

Battery Life Test Results

2/12/2015, Battery life tests, GSMArena.com. Welcome to the GSMArena battery life tool. This page puts together the stats for all battery life tests we've done, conveniently listed for a quick and easy comparison between models. You can sort the table by either the overall rating or whichever individual test component is most important to you: call time, video playback or web browsing. You can find all about our testing procedures here. Our overall rating gives you an idea of how much battery backup you can get on a single charge. An overall rating of 40h means that you'll need to fully charge the device in question once every 40 hours if you do one hour of 3G calls, one hour of video playback and one hour of web browsing daily. The score factors in the power consumption in these three disciplines along with the real-life standby power consumption, which we also measure separately. Best of all, if the way we compute our overall rating does not correspond to your usage pattern, you are free to adjust the different usage components to get a closer match. Use the sliders below to adjust the approximate usage time for each of the three battery draining components. -
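The snippet describes the overall rating only in words, so the following is a minimal sketch of one plausible way such a rating could be computed, not GSMArena's published formula. It assumes each test result is expressed as the number of hours that activity alone takes to drain a full battery, and that all time not spent on calls, video or browsing is standby. The function name `endurance_rating` and its parameters are hypothetical.

```python
def endurance_rating(call_h, video_h, web_h, standby_h, usage=(1.0, 1.0, 1.0)):
    """Estimate hours between full charges for a daily usage pattern.

    call_h, video_h, web_h, standby_h: hours each activity alone takes to
    drain a full battery (hypothetical inputs, not GSMArena's data format).
    usage: daily hours of (calls, video, web); the rest of the day is standby.
    """
    u_call, u_video, u_web = usage
    active = u_call + u_video + u_web
    if active > 24:
        raise ValueError("daily usage cannot exceed 24 hours")
    # Fraction of a full battery consumed in one day under this pattern.
    daily_drain = (u_call / call_h + u_video / video_h + u_web / web_h
                   + (24 - active) / standby_h)
    # Hours until the accumulated drain reaches one full battery.
    return 24 / daily_drain


# Made-up figures for illustration only.
print(round(endurance_rating(10, 10, 10, 210), 1))
```

Changing the `usage` tuple plays the role of the sliders mentioned in the text: heavier daily use of any one component shortens the estimated interval between charges.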

Compatibility Sheet

COMPATIBILITY SHEET: SanDisk Ultra Dual USB Drive. Transfer Files Easily from Your Smartphone or Tablet. Using the SanDisk Ultra Dual USB Drive, you can easily move files from your Android™ smartphone or tablet to your computer, freeing up space for music, photos, or HD videos. Please check your phone, tablet or mobile device for compatibility below. If your device is not listed, please check with your device manufacturer for OTG compatibility. Acer Acer A3-A10 Acer EE6 Acer W510 tab Alcatel Alcatel_7049D Flash 2 Pop4S(5095K) Archos Diamond S ASUS ASUS FonePad Note 6 ASUS FonePad 7 LTE ASUS Infinity 2 ASUS MeMo Pad (ME172V) * ASUS MeMo Pad 8 ASUS MeMo Pad 10 ASUS ZenFone 2 ASUS ZenFone 3 Laser ASUS ZenFone 5 (LTE/A500KL) ASUS ZenFone 6 BlackBerry Passport Prevro Z30 Blu Vivo 5R Celkon Celkon Q455 Celkon Q500 Celkon Millenia Epic Q550 CoolPad (酷派) CoolPad 8730 * CoolPad 9190L * CoolPad Note 5 CoolPad X7 大神 * Datawind Ubislate 7Ci Dell Venue 8 Venue 10 Pro Gionee (金立) Gionee E7 * Gionee Elife S5.5 Gionee Elife S7 Gionee Elife E8 Gionee Marathon M3 Gionee S5.5 * Gionee P7 Max HTC HTC Butterfly HTC Butterfly 3 HTC Butterfly S HTC Droid DNA (6435LVW) HTC Droid (htc 6435luw) HTC Desire 10 Pro HTC Desire 500 Dual HTC Desire 601 HTC Desire 620h HTC Desire 700 Dual HTC Desire 816 HTC Desire 816W HTC Desire 828 Dual HTC Desire X * HTC J Butterfly (HTL23) HTC J Butterfly (HTV31) HTC Nexus 9 Tab HTC One (6500LVW) HTC One A9 HTC One E8 HTC One M8 HTC One M9 HTC One M9 Plus HTC One M9 (0PJA1) -

Electronic 3D Models Catalogue (On July 26, 2019)

Electronic 3D models Catalogue (on July 26, 2019) Acer 001 Acer Iconia Tab A510 002 Acer Liquid Z5 003 Acer Liquid S2 Red 004 Acer Liquid S2 Black 005 Acer Iconia Tab A3 White 006 Acer Iconia Tab A1-810 White 007 Acer Iconia W4 008 Acer Liquid E3 Black 009 Acer Liquid E3 Silver 010 Acer Iconia B1-720 Iron Gray 011 Acer Iconia B1-720 Red 012 Acer Iconia B1-720 White 013 Acer Liquid Z3 Rock Black 014 Acer Liquid Z3 Classic White 015 Acer Iconia One 7 B1-730 Black 016 Acer Iconia One 7 B1-730 Red 017 Acer Iconia One 7 B1-730 Yellow 018 Acer Iconia One 7 B1-730 Green 019 Acer Iconia One 7 B1-730 Pink 020 Acer Iconia One 7 B1-730 Orange 021 Acer Iconia One 7 B1-730 Purple 022 Acer Iconia One 7 B1-730 White 023 Acer Iconia One 7 B1-730 Blue 024 Acer Iconia One 7 B1-730 Cyan 025 Acer Aspire Switch 10 026 Acer Iconia Tab A1-810 Red 027 Acer Iconia Tab A1-810 Black 028 Acer Iconia A1-830 White 029 Acer Liquid Z4 White 030 Acer Liquid Z4 Black 031 Acer Liquid Z200 Essential White 032 Acer Liquid Z200 Titanium Black 033 Acer Liquid Z200 Fragrant Pink 034 Acer Liquid Z200 Sky Blue 035 Acer Liquid Z200 Sunshine Yellow 036 Acer Liquid Jade Black 037 Acer Liquid Jade Green 038 Acer Liquid Jade White 039 Acer Liquid Z500 Sandy Silver 040 Acer Liquid Z500 Aquamarine Green 041 Acer Liquid Z500 Titanium Black 042 Acer Iconia Tab 7 (A1-713) 043 Acer Iconia Tab 7 (A1-713HD) 044 Acer Liquid E700 Burgundy Red 045 Acer Liquid E700 Titan Black 046 Acer Iconia Tab 8 047 Acer Liquid X1 Graphite Black 048 Acer Liquid X1 Wine Red 049 Acer Iconia Tab 8 W 050 Acer -

Phone Compatibility

Phone Compatibility (Last Updated: February 14, 2017)
• Compatible with iPhone models 4S and above using iOS versions 7 or higher.
• Compatible with phone models using Android versions 4.1 (Jelly Bean) or higher, and that have the following four sensors: Accelerometer, Gyroscope, Magnetometer, GPS/Location Services.
• Phone compatibility information is provided by phone manufacturers and third-party sources. While every attempt is made to ensure the accuracy of this information, this list should only be used as a guide. As new phones are continually introduced to the market, this list may not be all-inclusive and will be updated as new information is received. Please check your phone for the required sensors and operating system.

Brand Phone Compatible Non-Compatible Acer Acer Iconia Talk S • Acer Acer Jade Primo • Acer Acer Liquid E3 • Acer Acer Liquid E600 • Acer Acer Liquid E700 • Acer Acer Liquid Jade • Acer Acer Liquid Jade 2 • Acer Acer Liquid Jade Primo • Acer Acer Liquid Jade S • Acer Acer Liquid Jade Z • Acer Acer Liquid M220 • Acer Acer Liquid S1 • Acer Acer Liquid S2 • Acer Acer Liquid X1 • Acer Acer Liquid X2 • Acer Acer Liquid Z200 • Acer Acer Liquid Z220 • Acer Acer Liquid Z3 • Acer Acer Liquid Z4 • Acer Acer Liquid Z410 • Acer Acer Liquid Z5 • Acer Acer Liquid Z500 • Acer Acer Liquid Z520 • Acer Acer Liquid Z6 • Acer Acer Liquid Z6 Plus • Acer Acer Liquid Zest • Acer Acer Liquid Zest Plus • Acer Acer Predator 8 • Alcatel Alcatel Fierce • Alcatel Alcatel Fierce 4 • Alcatel Alcatel Flash Plus 2 • Alcatel Alcatel Go Play • Alcatel Alcatel Idol 4 • Alcatel Alcatel Idol 4s • Alcatel Alcatel One Touch Fire C • Alcatel Alcatel One Touch Fire E • Alcatel Alcatel One Touch Fire S • -
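The operating-system and sensor rules stated in this snippet can be sketched as a small check. This is an illustrative sketch only: the function `is_compatible`, the platform strings and the sensor names are hypothetical, and the iPhone model condition (4S and above) is not checked here because the snippet gives no model ordering to test against.

```python
# Sensors the snippet lists as required on Android phones.
REQUIRED_SENSORS = {"accelerometer", "gyroscope", "magnetometer", "gps"}


def parse_version(text):
    """Turn a dotted version string such as '4.1.2' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def is_compatible(platform, os_version, sensors=()):
    """Apply the stated minimums: iOS 7+, or Android 4.1+ with all four sensors."""
    version = parse_version(os_version)
    if platform == "ios":
        return version >= (7,)
    if platform == "android":
        return version >= (4, 1) and REQUIRED_SENSORS <= set(sensors)
    return False


all_four = ["accelerometer", "gyroscope", "magnetometer", "gps"]
print(is_compatible("android", "4.4.2", all_four))
print(is_compatible("ios", "6.1.3"))
```

As the snippet itself cautions, a rule of this kind is only a guide; the per-device list remains the reference for individual phones.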

Brand Old Device

| # | New Device | Old Device - Brand | Old Device - Model Name |
|---|---|---|---|
| 1 | Galaxy A6+ | Asus | Asus Zenfone 2 Laser ZE500KL |
| 2 | Galaxy A6+ | Asus | Asus Zenfone 2 Laser ZE601KL |
| 3 | Galaxy A6+ | Asus | Asus ZenFone 2 ZE550ML |
| 4 | Galaxy A6+ | Asus | Asus Zenfone 2 ZE551ML |
| 5 | Galaxy A6+ | Asus | Asus Zenfone 3 Laser |
| 6 | Galaxy A6+ | Asus | Asus Zenfone 3 Max ZC520TL |
| 7 | Galaxy A6+ | Asus | Asus Zenfone 3 Max ZC553KL |
| 8 | Galaxy A6+ | Asus | Asus Zenfone 3 ZE520KL |
| 9 | Galaxy A6+ | Asus | Asus Zenfone 3 ZE552KL |
| 10 | Galaxy A6+ | Asus | Asus Zenfone 3s Max |
| 11 | Galaxy A6+ | Asus | Asus Zenfone Max |
| 12 | Galaxy A6+ | Asus | Asus Zenfone Selfie |
| 13 | Galaxy A6+ | Asus | Asus ZenFone Zoom ZX550 |
| 14 | Galaxy A6+ | Gionee | Gionee A1 |
| 15 | Galaxy A6+ | Gionee | Gionee A1 Lite |
| 16 | Galaxy A6+ | Gionee | Gionee A1 Plus |
| 17 | Galaxy A6+ | Gionee | Gionee Elife E8 |
| 18 | Galaxy A6+ | Gionee | Gionee Elife S Plus |
| 19 | Galaxy A6+ | Gionee | Gionee Elife S7 |
| 20 | Galaxy A6+ | Gionee | Gionee F103 |
| 21 | Galaxy A6+ | Gionee | Gionee F103 Pro |
| 22 | Galaxy A6+ | Gionee | Gionee Marathon M4 |
| 23 | Galaxy A6+ | Gionee | Gionee Marathon M5 |
| 24 | Galaxy A6+ | Gionee | Gionee marathon M5 Lite |
| 25 | Galaxy A6+ | Gionee | Gionee Marathon M5 Plus |
| 26 | Galaxy A6+ | Gionee | Gionee P5L |
| 27 | Galaxy A6+ | Gionee | Gionee P7 Max |
| 28 | Galaxy A6+ | Gionee | Gionee S6 |
| 29 | Galaxy A6+ | Gionee | Gionee S6 Pro |
| 30 | Galaxy A6+ | Gionee | Gionee S6s |
| 31 | Galaxy A6+ | Gionee | Gionee X1s |
| 32 | Galaxy A6+ | Google | Google Pixel |
| 33 | Galaxy A6+ | Google | Google Pixel XL LTE |
| 34 | Galaxy A6+ | Google | Nexus 5X |
| 35 | Galaxy A6+ | Google | Nexus 6 |
| 36 | Galaxy A6+ | Google | Nexus 6P |
| 37 | Galaxy A6+ | HTC | Htc 10 |
| 38 | Galaxy A6+ | HTC | Htc Desire 10 Pro |
| 39 | Galaxy A6+ | HTC | Htc Desire 628 |
| 40 | Galaxy A6+ | HTC | HTC Desire 630 |

41 Galaxy A6+ -

Case in Black: ALCATEL A3

Case in black: ALCATEL A3 5.0'' BLACK ALCATEL PIXI 4 4.0'' 4034A BLACK ALCATEL PIXI 4 5.0'' 5045X BLACK ALCATEL POP C3 4033A BLACK ALCATEL POP C5 5036A BLACK ALCATEL POP C7 7041X BLACK ALCATEL POP C9 7047D BLACK ALCATEL U5 5044D 5044Y BLACK HTC 10 BLACK HTC DESIRE 310 BLACK HTC DESIRE 500 BLACK HTC DESIRE 516 BLACK HTC DESIRE 610 BLACK HTC DESIRE 616 BLACK HTC DESIRE 626 BLACK HTC DESIRE 650 BLACK HTC DESIRE 816 BLACK HTC ONE A9 BLACK HTC ONE A9s BLACK HTC ONE M9 BLACK HTC U11 BLACK HUAWEI ASCEND G510 BLACK HUAWEI ASCEND Y530 BLACK HUAWEI ASCEND Y600 BLACK HUAWEI G8 GX8 BLACK HUAWEI HONOR 4C BLACK HUAWEI HONOR 6X BLACK HUAWEI HONOR 7 LITE 5C BLACK HUAWEI HONOR 8 BLACK HUAWEI HONOR 9 BLACK HUAWEI MATE 10 BLACK HUAWEI MATE 10 LITE BLACK HUAWEI MATE 10 PRO BLACK HUAWEI MATE S BLACK HUAWEI P10 BLACK HUAWEI P10 LITE BLACK HUAWEI P10 PLUS BLACK HUAWEI P8 BLACK HUAWEI P8 LITE 2017 BLACK HUAWEI P8 LITE BLACK HUAWEI P9 BLACK HUAWEI P9 LITE BLACK HUAWEI P9 LITE MINI BLACK HUAWEI Y3 2017 BLACK HUAWEI Y3 II BLACK HUAWEI Y5 2017 Y6 2017 BLACK HUAWEI Y5 Y560 BLACK HUAWEI Y520 Y540 BLACK HUAWEI Y541 BLACK HUAWEI Y6 II BLACK HUAWEI Y625 BLACK HUAWEI Y7 BLACK iPHONE 5C BLACK iPHONE 5G BLACK iPHONE 6 4.7'' BLACK iPHONE 7 4.7'' 8 4.7'' BLACK iPHONE 7 PLUS 5.5'' 8 PLUS BLACK iPHONE X A1865 A1901 BLACK LENOVO K6 NOTE BLACK LENOVO MOTO C BLACK LENOVO MOTO C PLUS BLACK LENOVO MOTO E4 BLACK LENOVO MOTO E4 PLUS BLACK LENOVO MOTO G4 XT1622 BLACK LENOVO VIBE C2 BLACK LENOVO VIBE K5 BLACK -

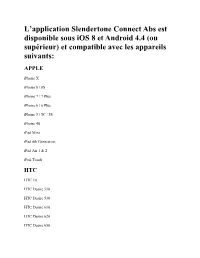

The Slendertone Connect Abs App Is Available on iOS 8 and

The Slendertone Connect Abs app is available on iOS 8 and Android 4.4 (or higher) and is compatible with the following devices: APPLE iPhone X iPhone 8 / 8S iPhone 7 / 7 Plus iPhone 6 / 6 Plus iPhone 5 / 5C / 5S iPhone 4S iPad Mini iPad 4th Generation iPad Air 1 & 2 iPod Touch HTC HTC 10 HTC Desire 510 HTC Desire 530 HTC Desire 610 HTC Desire 620 HTC Desire 650 HTC Desire 820 HTC One (M8) HTC ONE M8s HTC One M9 HTC One mini 2 HTC U Ultra HTC U11 LG LGE G3 S LGE L90 LGE LG G3 LGE LG G4 LGE LG G5 LGE LG G5 SE LGE LG G6 LGE Nexus 5 LGE Nexus 5X LGE V10 LGE V20 LGE G4 Stylus LGE G4s LGE G4c LGE Magna LGE Spirit LGE Leon LGE G Flex 2 MOTOROLA Motorola Moto G (1st Gen) Motorola Moto G (2nd Gen) Motorola Moto G (3rd Gen) Motorola Moto G (5) Plus Motorola Moto G with 4G LTE (1st Gen) Motorola Moto G with 4G LTE (2nd Gen) Motorola Moto G(4) Motorola Moto G(4) Plus Motorola Moto G4 Play Motorola Moto X (1st Gen) Motorola Moto X (2nd Gen) Motorola Moto X Play Motorola Moto Z Motorola Nexus 6 Motorola Moto X Style Motorola Moto E (2nd Gen) Motorola Moto G2 Moto G 5th Gen ONEPLUS OnePlus One OnePlus OnePlus3 OnePlus OnePlus3T OnePlus OnePlus5 OnePlus X (OnePlus) SAMSUNG Samsung Galaxy A3 (2017) Samsung Galaxy A3 Samsung Galaxy A3(2016) Samsung Galaxy A5 Samsung Galaxy A5(2016) Samsung Galaxy A5(2017) Samsung Galaxy A7 Samsung Galaxy A7(2016) Samsung Galaxy A7(2017) Samsung Galaxy A8(2016) Samsung Galaxy Ace Style Samsung Galaxy Alpha Samsung Galaxy C9 Pro Samsung Galaxy Core Prime Samsung Galaxy Core Prime Samsung Galaxy Grand Prime Samsung -

Spardasecureapp-Geraeteliste.Pdf

SpardaSecureApp: Overview of Smartphones. As of 16.04.2020 | SecureApp version 3.0.2 (SDK 2.7.3245). Devices officially supported by the manufacturer, with the minimum required Android version: Asus Google Nexus 7 2013 6.0.1 6.0.1 Asus Google Nexus 7 6.0 6.0 Asus Google Nexus 7 ME370T 5.0.2 5.0.2 Asus Google Nexus 7 ME370T 5.1.1 5.1.1 Google Pixel 2 -US 8.1.0 Google Pixel 2 Android 10 -US 10 Google Pixel 2 8.1.0 Google Pixel 3 -US 9 Google Pixel 3 XL -US 9 Google Pixel 3a -US 9 Google Pixel 3a Android 10 -US 10 Google Pixel 3a XL -US 9 Google Pixel 3a XL Android 10 -US 10 Google Pixel 9.0 -US 9 Google Pixel Pie -US 9 Google Pixel XL 8.1.0 8.1.0 HTC Desire 610 4.4.2 HTC Desire 816 (HTC 710C) 5.0.2 HTC Droid DNA 4.4.2 HTC One E8 5.0.2 HTC One M8 6.0 HTC One M9 7.0 HTC U12+ 8.0.0 Honor 7 Lite 6.0 Huawei Ascend Mate 7 6.0 Huawei Ascend P8 Lite 5.0.2 Huawei Ascend P8 5.0.1 Huawei Google Nexus 6P 7.0 7.0 Huawei Google Nexus 6P 8.0 8.0.0 Huawei Honor 4A 5.1.1 Huawei Honor 4C Play 4.4.4 Huawei Honor 4X Play Che2-TL00 4.4.2 Huawei Honor 5X 6.0.1 Huawei Honor 6 H60-L01 4.4.2 Huawei Honor 6 H60-L04 4.4.2 Huawei Honor 6 Plus 4.4.2 Huawei Honor 7 PLK-L01 5.0 Huawei Honor 7i 6.0.1 Huawei P Smart 9 Huawei P10 9 Huawei P9 6.0 LG G2 Mini D620 5.0.2 LG G3 D855 5.0 LG G4c 5.0.2 LG G5 H830 8.0.0 LG Google Nexus 4 E960 5.1.1 LG Google Nexus 5 5.1 -US 5.1 LG Google Nexus 5 5.1 5.1 LG Google Nexus 5 6.0 6.0 LG Google Nexus 5 6.0.1 -US 6.0.1 LG Google Nexus 5 6.0.1 6.0.1 LG Google Nexus -

Smart Fusion Device Compatibility List

Smart Fusion Device Compatibility List Acer Alcatel Acer Cloud Mobile Alcatel Idol 4 Acer E320 Liquid Express Alcatel One Touch 922 Acer Liquid Glow Alcatel One Touch 996 Acer Liquid S2 Alcatel Onetouch Idol 2 Alcatel Onetouch Idol 2 Mini S Adlink Alcatel Onetouch Idol 2S Alcatel Onetouch Pop Fit Adlink IMX-3000 Apple Asus Apple iPhone 11 and iPhone 11 Pro Asus MeMO Pad 8 (ME581CL) Apple iPhone 12, iPhone 12 mini, iPhone 12 Asus Padfone 2 Pro, iPhone 12 Pro Max Asus Padfone Infinity Apple iPhone 6 and iPhone 6 Plus Asus Vivo Tab Apple iPhone 6s and iPhone 6s Plus Asus Vivo Tab RT Apple iPhone 7 and iPhone 7 Plus Asus VivoTab Smart Apple iPhone 8 and iPhone 8 Plus Asus ZenFone 2 Apple iPhone SE Asus Zenfone 4 Apple iPhone X Asus Zenfone 5 Apple iPhone XR Asus Zenfone 6 Apple iPhone Xs and iPhone Xs Max Apple Watch: BlackBerry HTC BlackBerry Bold 9790 HTC Desire 500 BlackBerry Bold 9900/9930 HTC Desire 510 BlackBerry Classic HTC Desire 610 BlackBerry Curve 9350/9360/9370 HTC Desire 620 BlackBerry Curve 9380 HTC Desire 816 BlackBerry Dtek50 HTC Desire C BlackBerry Dtek60 HTC Desire Eye BlackBerry KEY2 HTC Droid DNA/HTC J Butterfly BlackBerry KEYone HTC Droid Incredible 4G LTE BlackBerry Motion HTC Evo 4G LTE BlackBerry Passport HTC First BlackBerry PlayBook HTC Incredible BlackBerry Priv HTC Mini BlackBerry Q10 HTC One BlackBerry Q5 HTC One M8 BlackBerry Z10 HTC One M9 BlackBerry Z30 HTC One Max Blackview BV9800 HTC One SV Blackview BV9800 Pro HTC One VX Blu Life Pure XL HTC One X/XL HTC Ruby/Amaze 4G BBK Vivo Xplay HTC U Play -

Avis Safedrive Compatible Smartphones

Avis SafeDrive Compatible smartphones The Avis SafeDrive app is compatible with the smartphones listed below. If you have one of the phones below, you can earn Active Rewards for driving well. ANDROID iOS Samsung Motorola – Droid Motorola HTC LG Sony Google Huawei Blackberry Apple Galaxy Mega 2 Droid Mini Moto X One E8 LG G3 Xperia Z2 Nexus 5 Honor 6 Dtek 50 iPhone SE Galaxy Mega 5.8 I9150 Droid Ultra Moto G (2014) One E8 CDMA LG G3 Stylus Xperia T2 Ultra Nexus 4 Mate 8 iPhone 6s Plus Galaxy Mega 6.3 I920 Droid Maxx Moto G Dual SIM (2014) One Max (4.3+) LG G3 A Xperia Z1 COMPACT (4.3+) Nexus 5X iPhone 6s Galaxy S5 Mini Moto Z Force Moto G 4G One Mini (4.3+) LG G3 LTE-A Xperia Z1S (4.3+) Nexus 6P iPhone 6 Plus Galaxy S5 Sport Moto X (2014) One (M7) (4.3+) LG G3 (CDMA) Xperia Z1F (4.3+) iPhone 6 Galaxy S5 Active Nexus 6 One S9 LG G4 Xperia Z1 (4.3+) iPhone 5S Galaxy S5 Moto Z Droid DNA (4.4.2) LG G5 Xperia Z (4.3+) iPhone 5C Galaxy S4 Active Evo 4G LTE (4.3+) LG G Pro2 Xperia ZL (4.3+) iPhone 5 Galaxy S4 HTC 10 LG G Flex Xperia SP (4.3+) iPhone 4S Galaxy S4 Zoom LG G Flex 2 Xperia ZR (4.3+) iPhone 7 Galaxy S3 Neo LG G2 Xperia Z ULTRA (4.3+) iPhone 7 Plus Galaxy S3 LG Optimus G Pro (4.4.2+) Xperia T (4.3+) iPhone 8 Galaxy Grand I19082 LG Optimus G (4.4.2+) Xperia TX (4.3+) iPhone 8 Plus Galaxy J LG VU (3.0) Xperia VL (4.3+) Galaxy Note 3 LG V10 Xperia V (4.3+) Galaxy Note 4 LG X Cam Xperia AX (4.3+) Galaxy K Zoom LG G5 SE Xperia Z3 (4.3+) Galaxy S6 LG G6 Xperia Z3 Compact Galaxy S6 Edge LG V20 Xperia Z5 Galaxy Alpha Xperia X Galaxy S4 Mini Xperia X Performance Galaxy Note 5 Sony Xperia XZ Galaxy S7 Sony Xperia X Compact Galaxy S7 Edge Xperia Z5 Compact Galaxy S8 Sony Xperia XA1 Ultra Galaxy Note8 Powered by Vitalitydrive administered by Discovery Insure Limited. -

Sony Xperia C5 Ultra

User guide: Xperia™ C5 Ultra E5506/E5553

Contents

Getting started
- About this user guide
- Overview
- Assembly
- Screen protection
- Starting your device for the first time
- Why do I need a Google™ account?

Device security
- Making sure your device is protected
- Screen lock
- Automatic unlocking of the device
- SIM card protection
- Finding the identification number of your device
- Finding a lost device

Learning the basics
- Using the touchscreen
- Locking and unlocking the screen