Evaluation of Deep Learning Frameworks Over Different HPC Architectures

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Machine Learning and Deep Learning for IIOT

Machine Learning and Deep Learning for IIOT Chanchal Chatterjee, Dell EMC Reston, March 22 2016 1 Goals of the Meeting ➢ Provide insights on methods and systems for machine learning and deep learning. ➢ Provide machine/deep learning use cases for IIOT. ➢ Provide architectures and frameworks for machine/deep learning for IIOT. 2 Machine Learning & Deep Learning – Confusing, Eh! From Machine Learning Mastery (http://machinelearningmastery.com/) 3 Machine Learning and Deep Learning Dependencies • Types of Data • Types of Learning • Types of Algorithms 4 Types of Data • Structured Data • Time Series • Events • Graph • Unstructured Data • Video/Images • Voice • Text 5 Types of Learning • Un-Supervised • Do not require training data • Assume normal instances far more frequent than anomalies • Semi-Supervised • Training data has labeled instances for only the normal class • Assume normal instances far more frequent than anomalies • Supervised 6 Types of Algorithms • ML: Machine Learning • Anomaly Detection • Trends, Predictions & Forecasting • Association & Grouping • DL: Deep Learning • Ladder Network • Convolutional Neural Network • Recurrent Neural Network • Deep Belief Networks 7 Some Details 8 Machine Learning • Anomaly Detection • Point Anomaly • Contextual Anomaly • Collective Anomaly • Graph Anomaly • Trends, Predictions & Forecasting • Associations & Grouping 9 Deep Learning • Ladder Network • Convolutional NN (CNN) • Recurrent NN (RNN) • Recurrent Recursive NN (R2NN) • Long Short Term Memory (LSTM) • Deep Belief Networks (DBM) • Restricted -

Machine Learning for Dummies®

Machine Learning by John Paul Mueller and Luca Massaron Machine Learning For Dummies® Published by: John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030-5774, www.wiley.com Copyright © 2016 by John Wiley & Sons, Inc., Hoboken, New Jersey Media and software compilation copyright © 2016 by John Wiley & Sons, Inc. All rights reserved. Published simultaneously in Canada No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except as permitted under Sections 107 or 108 of the 1976 United States Copyright Act, without the prior written permission of the Publisher. Requests to the Publisher for permission should be addressed to the Permissions Department, John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030, (201) 748-6011, fax (201) 748-6008, or online at http://www.wiley.com/go/permissions. Trademarks: Wiley, For Dummies, the Dummies Man logo, Dummies.com, Making Everything Easier, and related trade dress are trademarks or registered trademarks of John Wiley & Sons, Inc. and may not be used without written permission. All other trademarks are the property of their respective owners. John Wiley & Sons, Inc. is not associated with any product or vendor mentioned in this book. LIMIT OF LIABILITY/DISCLAIMER OF WARRANTY: THE PUBLISHER AND THE AUTHOR MAKE NO REPRESENTATIONS OR WARRANTIES WITH RESPECT TO THE ACCURACY OR COMPLETENESS OF THE CONTENTS OF THIS WORK AND SPECIFICALLY DISCLAIM ALL WARRANTIES, INCLUDING WITHOUT LIMITATION WARRANTIES OF FITNESS FOR A PARTICULAR PURPOSE. NO WARRANTY MAY BE CREATED OR EXTENDED BY SALES OR PROMOTIONAL MATERIALS. -

Outline of Machine Learning

Outline of machine learning The following outline is provided as an overview of and topical guide to machine learning: Machine learning – subfield of computer science[1] (more particularly soft computing) that evolved from the study of pattern recognition and computational learning theory in artificial intelligence.[1] In 1959, Arthur Samuel defined machine learning as a "Field of study that gives computers the ability to learn without being explicitly programmed".[2] Machine learning explores the study and construction of algorithms that can learn from and make predictions on data.[3] Such algorithms operate by building a model from an example training set of input observations in order to make data-driven predictions or decisions expressed as outputs, rather than following strictly static program instructions. Contents What type of thing is machine learning? Branches of machine learning Subfields of machine learning Cross-disciplinary fields involving machine learning Applications of machine learning Machine learning hardware Machine learning tools Machine learning frameworks Machine learning libraries Machine learning algorithms Machine learning methods Dimensionality reduction Ensemble learning Meta learning Reinforcement learning Supervised learning Unsupervised learning Semi-supervised learning Deep learning Other machine learning methods and problems Machine learning research History of machine learning Machine learning projects Machine learning organizations Machine learning conferences and workshops Machine learning publications -

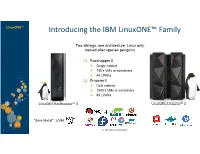

Introducing the IBM Linuxone™ Family

LinuxONE™ Introducing the IBM LinuxONE™ Family Two siblings, one architecture, Linux only, named after species penguins Rockhopper II Single cabinet 100’s VMs or containers 40 LPARs Emperor II Dual cabinet 1000’s VMs or containers 85 LPARs LinuxONE Rockhopper™ II LinuxONE Emperor™ II “Bare Metal” z/VM 1 © 2017 IBM Corporation© 2018 IBM Corporation LinuxONE™ An IBM LinuxONE for everyone “Right-size” to fit your needs Rockhopper II Emperor II Performance in a Smaller Footprint Extreme Scale • Equivalent to ~200 x86 cores • Equivalent to ~1300 x86 cores • Up to 8 TB memory • Up to 32 TB memory • I/O support for up to 2 million • I/O requirements up to 9 million IOPS IOPS, • Raw I/O bandwidth of 128 GB/S • Raw I/O bandwidth of • 19” industry standard form factor 832 GB/S • PDU-based1 with 200v-240v • Bulk power based on 480v power • Massive Capacity Back Up (CBU) • Optional 16U of available frame on demand space for additional components, • Can fill need for on-site disaster e.g., storage, server, network recovery switch • Air-cooled or water-cooled • Air-cooled only 2 © 2017 IBM Corporation© 2018 IBM Corporation Our 18-year journey with Linux on the Platform 2018: 2017: § I BM z14 ZR1 § I BM z14 M01-M05 § Preview: § z/ VM Subcapacity z/VM 7.1 2016: § I BM Wave 1.2 SP6 § KVM 1.1.2 § z/ VM 6.4 2015: § KVM for I BM z § I BM Wave upd. • I BM Cloud Private § I BM zAware for Linux • Db2 Warehouse § I BM LinuxONE™ • Blockchain • DBaaS ref.archit. -

Machine Intelligence & Augmented Finance

#MACHINE INTELLIGENCE & AUGMENTED FINANCE How Artificial Intelligence creates $1 trillion of change in the front, middle and back office of the financial services industry Suitable only for professional investors General Disclaimer Please note that the information and data presented herein is based on reliable sources and, or opinion of Autonomous Research LLP (herein referred to as “Autonomous”). The material within this document has been prepared for information purposes only. This material does not give a Research Recommendation and/or Price Target and thus applicable regulatory Research Recommendation disclosures relating to the same are not included herein. This communication is not a product of the Research department of Autonomous although there may be links or references to Research reports and products. This communication has been issued and approved for distribution in the U.K. by Autonomous Research LLP only to, and directed at, a) persons who have professional experience in matters relating to investments falling within Article 19(1) of the Financial Services and Markets Act 2000 (Financial Promotion) Order 2005 (the “Order”) or b) by high net worth entities and other person to whom it may otherwise lawfully be communicated, falling within 49(1) of the Order – together Relevant Persons. This material is intended only for investors who are "eligible counterparties" or "professional clients", and must not be redistributed to retail investors. This communication is not intended to be acted on or relied upon by retail investors. Retail investors who through whatever means or media receive this material should note that the services of Autonomous are not available to them and should not rely on the contents to make any investment decision. -

![Arxiv:1811.05187V1 [Cs.LG] 13 Nov 2018 1 Introduction](https://docslib.b-cdn.net/cover/6867/arxiv-1811-05187v1-cs-lg-13-nov-2018-1-introduction-3686867.webp)

Arxiv:1811.05187V1 [Cs.LG] 13 Nov 2018 1 Introduction

An Orchestrated Empirical Study on Deep Learning Frameworks and Platforms Qianyu Guo1, Xiaofei Xie2, Lei Ma3, Qiang Hu4, Ruitao Feng2, Li Li5, Yang Liu2, Jianjun Zhao4, and Xiaohong Li1 1 Tianjin University, China 2 Nanyang Technological University, Singapore 3 Harbin Institute of Technology, China 4 Kyushu University, Japan 5 Monash University, Australia Abstract. Deep learning (DL) has recently achieved tremendous success in a variety of cutting-edge applications, e.g., image recognition, speech and natural language processing, and autonomous driving. Besides the available big data and hardware evolution, DL frameworks and platforms play a key role to catalyze the research, development, and deployment of DL intelligent solutions. However, the difference in computation paradigm, architecture design and implementation of existing DL frameworks and platforms brings challenges for DL software devel- opment, deployment, maintenance and migration. Up to the present, it still lacks a comprehensive study on how current diverse DL frameworks and platforms in- fluence the DL software development process. In this paper, we initiate the first step towards the investigation on how exist- ing state-of-the-art DL frameworks (i.e., TensorFlow, Theano, and Torch) and platforms (i.e., server/desktop, web, and mobile) support the DL software devel- opment activities. We perform an in-depth and comparative evaluation on metrics such as learning accuracy, DL model size, robustness and performance, on state- of-the-art DL frameworks across platforms using two popular datasets MNIST and CIFAR-10. Our study reveals that existing DL frameworks still suffer from compatibility issues, which becomes even more severe when it comes to different platforms. -

Benchmarking Contemporary Deep Learning Hardware And

Benchmarking Contemporary Deep Learning Hardware and Frameworks:A Survey of Qualitative Metrics Wei Dai Daniel Berleant Department of Computer Science Department of Information Science Southeast Missouri State University University of Arkansas at Little Rock Cape Girardeau, MO, USA Little Rock, AR, USA [email protected] [email protected] Abstract—This paper surveys benchmarking principles, machine learning devices including GPUs, FPGAs, and ASICs, and deep learning software frameworks. It also reviews these technologies with respect to benchmarking from the perspectives of a 6-metric approach to frameworks and an 11-metric approach to hardware platforms. Because MLPerf is a benchmark organization working with industry and academia, and offering deep learning benchmarks that evaluate training and inference on deep learning hardware devices, the survey also mentions MLPerf benchmark results, benchmark metrics, datasets, deep learning frameworks and algorithms. We summarize seven benchmarking principles, differential characteristics of mainstream AI devices, and qualitative comparison of deep learning hardware and frameworks. Fig. 1. Benchmarking Metrics and AI Architectures Keywords— Deep Learning benchmark, AI hardware and software, MLPerf, AI metrics benchmarking metrics for hardware devices and six metrics for software frameworks in deep learning, respectively. The I. INTRODUCTION paper also provides qualitative benchmark results for major After developing for about 75 years, deep learning deep learning devices, and compares 18 deep learning technologies are still maturing. In July 2018, Gartner, an IT frameworks. research and consultancy company, pointed out that deep According to [16],[17], and [18], there are seven vital learning technologies are in the Peak-of-Inflated- characteristics for benchmarks. These key properties are: Expectations (PoIE) stage on the Gartner Hype Cycle diagram [1] as shown in Figure 2, which means deep 1) Relevance: Benchmarks should measure important learning networks trigger many industry projects as well as features. -

Container + DL-V2

Container + DeepLearning: from working to scaling Frank Zhao Software Architect, EMC CTO Office [email protected] EMC CONFIDENTIAL—INTERNAL USE ONLY 1 WHO AM I? • Frank Zhao, 赵军平, Junping Zhao • Software Architect @ EMC CTO Office • 10+year engineering in storage, virtualization, flash – 30+ patents (3 granted in U.S.) • Now, working areas: – In-mem processing and analytics, perf acceleration – Micro-service, container – Streaming processing system – Software defined storage … © Copyright 2016 EMC Corporation. All rights reserved. 2 AGENDA • Motivations • Deep learning and TensorFlow • Distributed DL training – Docker + K8S + Tensorflow as example • Distributed DL serving – Docker + K8S + Tensorflow as example • Summary • Outlook © Copyright 2016 EMC Corporation. All rights reserved. 3 Motivations • Container + DL for – Easier deployment & management – Scalability: from infrastructure to typical DL framework – Smooth transition from training to serving, or Dev&Ops – Least performance overhead (vs. VM) especially CPU/mem • Maximize heterogeneous env, computing, memory, networking etc • “DL as a Service” by container ecosystem? – In cloud env, scale-out & heterogeneous • Focus on DL workload, practices sharing, opportunities – Non-goal: TF internal or deep dive © Copyright 2016 EMC Corporation. All rights reserved. 4 Deep learning Photo from Google intro: “Diving into ML through Tensorflow” © Copyright 2016 EMC Corporation. All rights reserved. 5 Open-source DL frameworks By 6/28/2016 Star Forks Contributor Tensorflow 26995 10723 286 Caffe 10973 6575 196 CNTK 5699 1173 69 Torch 4852 1360 100 Theano 4022 1448 234 MXNet 4173 1515 152 Apache SINGA 607 211 18 A more detailed summary and comparison @ https://github.com/zer0n/deepframeworks © Copyright 2016 EMC Corporation. All rights reserved. 6 TensorFlow • DL framework from Google – GPU/CPU/TPU, heterogeneous: desktop, server, mobile etc – C++, Python; Distributed training and serving – DNN building blocks, ckpt/queue/ …, TensorBoard – Good docker/K8S supported © Copyright 2016 EMC Corporation. -

Benchmarking Contemporary Deep Learning Hardware

Benchmarking Contemporary Deep Learning Hardware and Frameworks: a Survey of Qualitative Metrics Wei Dai Daniel Berleant Department of Computer Science Department of Information Science Southeast Missouri State University University of Arkansas at Little Rock Cape Girardeau, MO, USA Little Rock, AR, USA [email protected] [email protected] Abstract—This paper surveys benchmarking principles, machine learning devices including GPUs, FPGAs, and ASICs, and deep learning software frameworks. It also reviews these technologies with respect to benchmarking from the perspectives of a 6-metric approach to frameworks and an 11-metric approach to hardware platforms. Because MLPerf is a benchmark organization working with industry and academia, and offering deep learning benchmarks that evaluate training and inference on deep learning hardware devices, the survey also mentions MLPerf benchmark results, benchmark metrics, datasets, deep learning frameworks and algorithms. We summarize seven benchmarking principles, differential characteristics of mainstream AI devices, and qualitative comparison of deep learning hardware and frameworks. Fig. 1. Benchmarking Metrics and AI Architectures Keywords— Deep Learning benchmark, AI hardware and software, MLPerf, AI metrics paper also provides qualitative benchmark results for major deep learning devices, and compares 18 deep learning I. INTRODUCTION frameworks. After developing for about 75 years, deep learning According to [16], [17], and [18], there are seven vital technologies are still maturing. In July 2018, Gartner, an IT characteristics for benchmarks. These key properties are: research and consultancy company, pointed out that deep learning technologies are in the Peak-of-Inflated- 1) Relevance: Benchmarks should measure important Expectations (PoIE) stage on the Gartner Hype Cycle features. diagram [1] as shown in Figure 2, which means deep 2) Representativeness: Benchmark performance learning networks trigger many industry projects as well as metrics should be broadly accepted by industry and academia. -

Next Generation Data Transfer Nodes (Dtns) for Global Science: Architecture, Technology, Enabling Capabilities

Next Generation Data Transfer Nodes (DTNs) For Global Science: Architecture, Technology, Enabling Capabilities Joe Mambretti, Director, ([email protected]) International Center for Advanced Internet Research (www.icair.org) Northwestern University Director, Metropolitan Research and Education Network (www.mren.org) Director, StarLight, PI StarLight IRNC SDX,Co-PI Chameleon, PI-iGENI, PI- OMNINet (www.startap.net/starlight) LHCOPN LHCONE Meeting Fermi National Accelerator Laboratory Batavia, Illinois October 30-31, 2018 StarLight – “By Researchers For Researchers” StarLight: Experimental Optical Infrastructure/Proving Ground For Next Gen Network Services Optimized for High Performance Data Intensive Science Multiple 100 Gbps (57+ Paths) StarWave 100 G Exchange World’s Most Advanced Exchange Multiple First of a Kind Services and Abbott Hall, Northwestern University’s Capabilities View from StarLight Chicago Campus IRNC: RXP: StarLight SDX A Software Defined Networking Exchange for Global Science Research and Education Joe Mambretti, Director, ([email protected]) International Center for Advanced Internet Research (www.icair.org) Northwestern University Director, Metropolitan Research and Education Network (www.mren.org) Co-Director, StarLight (www.startap.net/starlight) PI IRNC: RXP: StarLight SDX Co-PI Tom DeFanti, Research Scientist, ([email protected]) California Institute for Telecommunications and Information Technology (Calit2), University of California, San Diego Co-Director, StarLight Co-PI Maxine Brown, Director, ([email protected]) Electronic Visualization Laboratory, University of Illinois at Chicago Co-Director, StarLight Co-PI Jim Chen, Associate Director, International Center for Advanced Internet Research, Northwestern University National Science Foundation International Research Network Connections Program Workshop Chicago, Illinois May 15, 2015 Global Research Platform: Global Lambda Integrated Facility Available Advanced Network Resources Visualization courtesy of Bob Patterson, NCSA; data compilation by Maxine Brown, UIC. -

Scalable Deep Learning on Distributed Infrastructures: Challenges, Techniques and Tools

1 Scalable Deep Learning on Distributed Infrastructures: Challenges, Techniques and Tools RUBEN MAYER, Technical University of Munich, Germany HANS-ARNO JACOBSEN, Technical University of Munich, Germany Deep Learning (DL) has had an immense success in the recent past, leading to state-of-the-art results in various domains such as image recognition and natural language processing. One of the reasons for this success is the increasing size of DL models and the proliferation of vast amounts of training data being available. To keep on improving the performance of DL, increasing the scalability of DL systems is necessary. In this survey, we perform a broad and thorough investigation on challenges, techniques and tools for scalable DL on distributed infrastructures. This incorporates infrastructures for DL, methods for parallel DL training, multi- tenant resource scheduling and the management of training and model data. Further, we analyze and compare 11 current open-source DL frameworks and tools and investigate which of the techniques are commonly implemented in practice. Finally, we highlight future research trends in DL systems that deserve further research. CCS Concepts: • Computing methodologies → Neural networks; • Computer systems organization → Parallel architectures; Distributed architectures; Additional Key Words and Phrases: Deep Learning Systems ACM Reference Format: Ruben Mayer and Hans-Arno Jacobsen. 2019. Scalable Deep Learning on Distributed Infrastructures: Challenges, Techniques and Tools. ACM Comput. Surv. 1, 1, Article 1 (September 2019), 35 pages. https://doi.org/0000001. 0000001 1 INTRODUCTION Deep Learning (DL) has recently gained a lot of attention due to its superior performance in tasks like speech recognition [65, 69], optical character recognition [20], and object detection [95]. -

Machine Learning-Based Advanced Analytics Using Intel® Technology

REFERENCE ARCHITECTURE Data Center-Big Data & Analytics Machine Learning Machine Learning-Based Advanced Analytics Using Intel® Technology Learn about the machine-learning process, how it affects architecture choices, and how Intel® technology can help build a scalable, powerful, and reliable machine-learning solution Executive Summary This reference architecture provides a fully functional Machine learning enables businesses and organizations to discover insights previously technical picture for developing hidden within their data. Whether exploring oil reserves, improving the safety of a cohesive business solution. automobiles, detecting fraud, or mapping genomes, machine learning is at the heart If you are responsible for… of innovation and business intelligence. Unleashing the power of machine learning, however, requires access to large amounts of diverse datasets, optimized data • Business strategy: platforms, powerful data analysis and visualization tools, a highly scalable and flexible You will better understand how machine-learning technology compute and storage infrastructure, and lastly, the right skill sets. can improve business Intel’s high-performance computing (HPC) reference architectures are optimized for capabilities and functions. machine learning. These solutions are built on a hardware foundation that includes • Technology decisions: compute, memory, storage, and network. They are designed to run optimized, scalable You will learn about the critical analytics and artificial intelligence (AI) workloads—including streaming, predictive machine-learning architecture analytics, machine learning, and deep learning. components and how they work together to create a Businesses can gain scalability, effectiveness, efficiency, and lower total cost of ownership cohesive business solution. (TCO) by using a machine learning-optimized solution built on Intel® architecture that is designed to handle analytics and AI workloads from the edge, in the cloud, or on- premises.