Site Isolation: Process Separation for Web Sites Within the Browser

Recommended publications

On the Uniqueness of Browser Extensions and Web Logins

To Extend or not to Extend: on the Uniqueness of Browser Extensions and Web Logins

Gábor György Gulyás, Dolière Francis Somé, Nataliia Bielova, Claude Castelluccia (INRIA)

ABSTRACT

Recent works showed that websites can detect browser extensions that users install and websites they are logged into. This poses significant privacy risks, since extensions and Web logins that reflect user's behavior, can be used to uniquely identify users on the Web. This paper reports on the first large-scale behavioral uniqueness study based on 16,393 users who visited our website. We test and detect the presence of 16,743 Chrome extensions, covering 28% of all free Chrome extensions. We also detect whether the user is connected to 60 different websites. We analyze how unique users are based on their behavior, and find out that 54.86% of users that have installed at least one detectable extension are unique; 19.53% of users are unique among those who [...]

[...] shown that a user's browser has a number of "physical" characteristics that can be used to uniquely identify her browser and hence to track it across the Web. Fingerprinting of users' devices is similar to physical biometric traits of people, where only physical characteristics are studied.

Similar to previous demonstrations of user uniqueness based on their behavior [23, 50], behavioral characteristics, such as browser settings and the way people use their browsers can also help to uniquely identify Web users. For example, a user installs web browser extensions she prefers, such as AdBlock [1], LastPass [14] or Ghostery [8] to enrich her Web experience. Also, while browsing the Web, she logs into her favorite social networks, such as Gmail [13], Facebook [7] or LinkedIn [15].

Cisco Telepresence Management Suite 15.13.1

Cisco TelePresence Management Suite 15.13.1 Software Release Notes

First Published: June 2021

Cisco Systems, Inc. www.cisco.com

Contents

Preface
Change History
Product Documentation
New Features in 15.13.1
Support for Cisco Meeting Server SIP recorder in Cisco TMS
Support for Cisco Webex Desk Limited Edition
Webex Room Panorama series to support 20000 kbps Bandwidth
Features in Previous Releases
Resolved and Open Issues
Limitations
Interoperability
Upgrading to 15.13.1
Before You Upgrade
Redundant Deployments
Upgrading from 14.4 or 14.4.1
Upgrading From a Version Earlier than 14.2
Prerequisites and Software Dependencies
Upgrade Instructions
Using the Bug Search Tool
Obtaining Documentation and Submitting a Service Request
Cisco Legal Information
Cisco Trademark

Preface

Change History

Table 1 Software Release Notes Change History

Date         Change                 Reason
June 2021    Release of Software    Cisco TMS 15.13.1

Product Documentation

The following documents provide guidance on installation, initial configuration, and operation of the product:

■ Cisco TelePresence Management Suite Installation and Upgrade Guide
■ Cisco TelePresence Management Suite Administrator Guide
■ Cisco TMS Extensions Deployment Guides

New Features in 15.13.1

Support for Cisco Meeting Server SIP recorder in Cisco TMS

Cisco TMS currently supports Cisco Meeting Server recording with XMPP by default. As XMPP is deprecated from Meeting Server 3.0 onwards, it will only support SIP recorder. For such Cisco Meeting Server with SIP recorder, the valid SIP Recorder URI details must be provided in Cisco TMS.

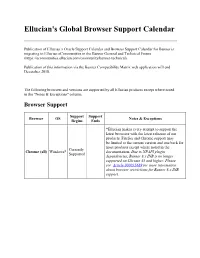

Ellucian's Global Browser Support Calendar

Ellucian's Global Browser Support Calendar

Publication of Ellucian's Oracle Support Calendar and Browser Support Calendar for Banner is migrating to Ellucian eCommunities in the Banner General and Technical Forum (https://ecommunities.ellucian.com/community/banner-technical). Publication of this information via the Banner Compatibility Matrix web application will end December 2018.

The following browsers and versions are supported by all Ellucian products except where noted in the "Notes & Exceptions" column.

Browser: Chrome (all)
OS: Windows*
Support status: Currently Supported
Notes & Exceptions: *Ellucian makes every attempt to support the latest browsers with the latest releases of our products. Firefox and Chrome support may be limited to the current version and one back for most products except where noted in the documentation. Due to NPAPI plugin dependencies, Banner 8.x INB is no longer supported on Chrome 45 and higher. Please see Article 000035689 for more information about browser restrictions for Banner 8.x INB support.

Browser: Firefox (all)
OS: Windows*, Mac OS*
Support status: Currently Supported
Notes & Exceptions: *Ellucian makes every attempt to support the latest browsers with the latest releases of our products. Firefox and Chrome support may be limited to the current version and one back for most products except where noted in the documentation. Due to NPAPI plugin dependencies, please see Article 000035689 for more information about browser restrictions for Banner 8.x INB support. Firefox no longer supports NPAPI plugins, including the Java runtime, as of Firefox 52 (3/7/2017). Firefox Extended Support Release: While Ellucian has not been through a formal certification of the Firefox ESR browser, based on customer feedback, we will provide support to customers running Firefox ESR, for both Banner 8 and Banner 9, until Banner 8 INB moves to Sustaining Support.

Rich Internet Applications

Rich Internet Applications (RIAs): A Comparison Between Adobe Flex, JavaFX and Microsoft Silverlight

Master of Science Thesis in the Programme Software Engineering and Technology

CARL-DAVID GRANBÄCK

Department of Computer Science and Engineering
CHALMERS UNIVERSITY OF TECHNOLOGY
UNIVERSITY OF GOTHENBURG
Göteborg, Sweden, October 2009

The Author grants to Chalmers University of Technology and University of Gothenburg the non-exclusive right to publish the Work electronically and in a non-commercial purpose make it accessible on the Internet. The Author warrants that he/she is the author to the Work, and warrants that the Work does not contain text, pictures or other material that violates copyright law.

The Author shall, when transferring the rights of the Work to a third party (for example a publisher or a company), acknowledge the third party about this agreement. If the Author has signed a copyright agreement with a third party regarding the Work, the Author warrants hereby that he/she has obtained any necessary permission from this third party to let Chalmers University of Technology and University of Gothenburg store the Work electronically and make it accessible on the Internet.

© CARL-DAVID GRANBÄCK, October 2009.

Examiner: BJÖRN VON SYDOW

Department of Computer Science and Engineering
Chalmers University of Technology
SE-412 96 Göteborg
Sweden
Telephone + 46 (0)31-772 1000

Abstract

This Master's thesis report describes and compares the three Rich Internet Application (RIA) frameworks Adobe Flex, JavaFX and Microsoft Silverlight.

Draft SP 800-125A Rev. 1, Security Recommendations for Server

The attached DRAFT document (provided here for historical purposes), released on April 11, 2018, has been superseded by the following publication:

Publication Number: NIST Special Publication (SP) 800-125A Rev. 1
Title: Security Recommendations for Server-based Hypervisor Platforms
Publication Date: June 2018

• Final Publication: https://doi.org/10.6028/NIST.SP.800-125Ar1 (which links to https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-125Ar1.pdf).
• Related Information on CSRC: Final: https://csrc.nist.gov/publications/detail/sp/800-125a/rev-1/final

Draft NIST Special Publication 800-125A Revision 1

Security Recommendations for Server-based Hypervisor Platforms

Ramaswamy Chandramouli
Computer Security Division
Information Technology Laboratory

COMPUTER SECURITY

April 2018

U.S. Department of Commerce
Wilbur L. Ross, Jr., Secretary

National Institute of Standards and Technology
Walter Copan, NIST Director and Under Secretary of Commerce for Standards and Technology

Authority

This publication has been developed by NIST in accordance with its statutory responsibilities under the Federal Information Security Modernization Act (FISMA) of 2014, 44 U.S.C.

The Underground Economy.Pdf

The seeds of cybercrime grow in the anonymized depths of the dark web – underground websites where the criminally minded meet to traffic in illegal products and services, develop contacts for jobs and commerce, and even socialize with friends.

To better understand how cybercriminals operate today and what they might do in the future, Trustwave SpiderLabs researchers maintain a presence in some of the more prominent recesses of the online criminal underground. There, the team takes advantage of the very anonymity that makes the dark web unique, which allows them to discreetly observe the habits of cyber swindlers. Some of the information the team has gathered revolves around the dark web's intricate code of honor, reputation systems, job market, and techniques used by cybercriminals to hide their tracks from law enforcement.

We've previously highlighted these findings in an extensive three-part series featured on the Trustwave SpiderLabs blog. But we've decided to consolidate and package this information in an informative e-book that gleans the most important information from that series, illustrating how the online criminal underground works. Knowledge is power in cybersecurity, and this serves as a weapon in the fight against cybercrime.

Where Criminals Congregate

Much like your everyday social individual, cyber swindlers convene on online forums and discussion platforms tailored to their interests. Most of the criminal activity conducted occurs on the dark web, a network of anonymized websites that uses services such as Tor to disguise the locations of servers and mask the identities of site operators and visitors. The most popular destination is the now-defunct Silk Road, which operated from 2011 until the arrest of its founder, Ross Ulbricht, in 2013.

Preview Dart Programming Tutorial

Dart Programming

About the Tutorial

Dart is an open-source general-purpose programming language. It was originally developed by Google and later approved as a standard by ECMA. Dart is a new programming language meant for the server as well as the browser. Introduced by Google, the Dart SDK ships with its compiler – the Dart VM. The SDK also includes a utility, dart2js, a transpiler that generates the JavaScript equivalent of a Dart script. This tutorial provides a basic level understanding of the Dart programming language.

Audience

This tutorial will be quite helpful for all those developers who want to develop single-page web applications using Dart. It is meant for programmers with a strong hold on object-oriented concepts.

Prerequisites

The tutorial assumes that the readers have adequate exposure to object-oriented programming concepts. If you have worked on JavaScript, then it will help you further to grasp the concepts of Dart quickly.

Copyright & Disclaimer

© Copyright 2017 by Tutorials Point (I) Pvt. Ltd. All the content and graphics published in this e-book are the property of Tutorials Point (I) Pvt. Ltd. The user of this e-book is prohibited to reuse, retain, copy, distribute or republish any contents or a part of contents of this e-book in any manner without written consent of the publisher. We strive to update the contents of our website and tutorials as timely and as precisely as possible, however, the contents may contain inaccuracies or errors. Tutorials Point (I) Pvt. Ltd. provides no guarantee regarding the accuracy, timeliness or completeness of our website or its contents including this tutorial.

What Is Dart?

Dart in Action
By Chris Buckett

As a language on its own, Dart might be just another language, but when you take into account the whole Dart ecosystem, Dart represents an exciting prospect in the world of web development. In this green paper based on Dart in Action, author Chris Buckett explains how Dart, with its ability to either run natively or be converted to JavaScript and coupled with HTML5, is an ideal solution for building web applications that do not need external plugins to provide all the features.

What is Dart?

The quick answer to the question of what Dart is, is that it is an open-source structured programming language for creating complex browser-based web applications. You can run applications created in Dart by either using a browser that directly supports Dart code, or by converting your Dart code to JavaScript (which happens seamlessly). It is class based, optionally typed, and single threaded (but supports multiple threads through a mechanism called isolates) and has a familiar syntax. In addition to running in browsers, you can also run Dart code on the server, hosted in the Dart virtual machine. The language itself is very similar to Java, C#, and JavaScript. One of the primary goals of the Dart developers is that the language seems familiar. This is a tiny Dart script:

    main() {                          // #A
      var d = "Dart";                 // #B
      String w = "World";             // #C
      print("Hello ${d} ${w}");       // #D
    }

#A Single entry point function main() executes when the script is fully loaded
#B Optional typing (no type specified)
#C Static typing (String type specified)
#D Outputs "Hello Dart World" to the browser console or stdout

This script can be embedded within <script type="application/dart"> tags and run in the Dartium experimental browser, converted to JavaScript using the Frog tool and run in all modern browsers, or saved to a .dart file and run directly on the server using the dart virtual machine executable.

How to Change Your Browser Preferences So It Uses Acrobat Or Reader PDF Viewer

How to change your browser preferences so it uses Acrobat or Reader PDF viewer.

If you are unable to open the PDF version of the Emergency Action Plan, please use the instructions below to configure your settings for Firefox, Google Chrome, Apple Safari, Internet Explorer, and Microsoft Edge.

Firefox on Windows

1. Choose Tools > Add-ons.
2. In the Add-ons Manager window, click the Plugins tab, then select Adobe Acrobat or Adobe Reader.
3. Choose an appropriate option in the drop-down list next to the name of the plug-in.
4. Always Activate sets the plug-in to open PDFs in the browser.
5. Ask to Activate prompts you to turn on the plug-in while opening PDFs in the browser.
6. Never Activate turns off the plug-in so it does not open PDFs in the browser.

Select the Acrobat or Reader plugin in the Add-ons Manager.

Firefox on Mac OS

1. Select Firefox.
2. Choose Preferences > Applications.
3. Select a relevant content type from the Content Type column.
4. Associate the content type with the application to open the PDF. For example, to use the Acrobat plug-in within the browser, choose Use Adobe Acrobat NPAPI Plug-in.

Reviewed 2018

Chrome

1. Open Chrome and select the three dots near the address bar.
2. Click on Settings.
3. Expand the Advanced settings menu at the bottom of the page.
4. Under Privacy and security, click on Content Settings.
5. Find PDF documents and click on the arrow to expand the menu.
6.

Google Security Chip H1 a Member of the Titan Family

Google Security Chip H1
A member of the Titan family
Chrome OS Use Case

Block diagram

● ARM SC300 core
● 8kB boot ROM, 64kB SRAM, 512kB Flash
● USB 1.1 slave controller (USB2.0 FS)
● I2C master and slave controllers
● SPI master and slave controllers
● 3 UART channels
● 32 GPIO ports, 28 muxed pins
● 2 Timers
● Reset and power control (RBOX)
● Crypto Engine
● HW Random Number Generator
● RD Detection

Flash Memory Layout

● Bootrom not shown
● Flash space split in two halves for redundancy
● Restricted access INFO space
● Header fields control boot flow
● Code is in Chrome OS EC repo*,
  ○ board files in board/cr50
  ○ chip files in chip/g

* https://chromium.googlesource.com/chromiumos/platform/ec

FW Updates

[Diagram: Image Properties (Board ID, Board ID mask, Board Flags; Version: Epoch, Major, Minor, Timestamp) and Chip Properties (512 byte INFO Space holding bitmaps, Board ID, and Board Flags)]

● Updates over USB or TPM
● Rollback protections
  ○ Header versioning scheme
  ○ Flash map bitmap
● Board ID and flags
● RO public key in ROM
● RW public key in RO
● Both ROM and RO allow for node locked signatures

Major Functions

● Guaranteed Reset
● Battery cutoff
● Closed Case Debugging *
● Verified Boot (TPM Services)
● Support of various security features

* https://www.chromium.org/chromium-os/ccd

Reset and power

● Guaranteed EC reset and battery cutoff
● EC in RW latch (guaranteed recovery)
● SPI Flash write protection

TPM Interface to AP

● I2C or SPI
● Bootstrap options
● TPM