On Reproducible Econometric Research

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

An Evaluation of Statistical Software for Research and Instruction

Behavior Research Methods, Instruments, & Computers 1985, 17(2),352-358 An evaluation of statistical software for research and instruction DARRELL L. BUTLER Ball State University, Muncie, Indiana and DOUGLAS B. EAMON University of Houston-Clear Lake, Houston, Texas A variety of microcomputer statistics packages were evaluated. The packages were compared on a number of dimensions, including error handling, documentation, statistical capability, and accuracy. Results indicated that there are some very good packages available both for instruc tion and for analyzing research data. In general, the microcomputer packages were easier to learn and to use than were mainframe packages. Furthermore, output of mainframe packages was found to be less accurate than output of some of the microcomputer packages. Many psychologists use statistical programs to analyze ware packages available on the VAX computers at Ball research data or to teach statistics, and many are interested State University: BMDP, SPSSx, SCSS, and MINITAB. in using computer software for these purposes (e.g., Versions of these programs are widely available and are Butler, 1984; Butler & Kring, 1984). The present paper used here as points ofreference to judge the strengths and provides some program descriptions, benchmarks, and weaknesses of the microcomputer packages. Although evaluations of a wide variety of marketed statistical pro there are many programs distributed by individuals (e.g., grams that may be useful in research and/or instruction. see Academic Computer Center of Gettysburg College, This review differs in several respects from other re 1984, and Eamon, 1983), none are included in the present cent reviews of statistical programs (e. g., Carpenter, review. -

Statistics with Free and Open-Source Software

Free and Open-Source Software • the four essential freedoms according to the FSF: • to run the program as you wish, for any purpose • to study how the program works, and change it so it does Statistics with Free and your computing as you wish Open-Source Software • to redistribute copies so you can help your neighbor • to distribute copies of your modified versions to others • access to the source code is a precondition for this Wolfgang Viechtbauer • think of ‘free’ as in ‘free speech’, not as in ‘free beer’ Maastricht University http://www.wvbauer.com • maybe the better term is: ‘libre’ 1 2 General Purpose Statistical Software Popularity of Statistical Software • proprietary (the big ones): SPSS, SAS/JMP, • difficult to define/measure (job ads, articles, Stata, Statistica, Minitab, MATLAB, Excel, … books, blogs/posts, surveys, forum activity, …) • FOSS (a selection): R, Python (NumPy/SciPy, • maybe the most comprehensive comparison: statsmodels, pandas, …), PSPP, SOFA, Octave, http://r4stats.com/articles/popularity/ LibreOffice Calc, Julia, … • for programming languages in general: TIOBE Index, PYPL, GitHut, Language Popularity Index, RedMonk Rankings, IEEE Spectrum, … • note that users of certain software may be are heavily biased in their opinion 3 4 5 6 1 7 8 What is R? History of S and R • R is a system for data manipulation, statistical • … it began May 5, 1976 at: and numerical analysis, and graphical display • simply put: a statistical programming language • freely available under the GNU General Public License (GPL) → open-source -

Statistical Software

Statistical Software A. Grant Schissler1;2;∗ Hung Nguyen1;3 Tin Nguyen1;3 Juli Petereit1;4 Vincent Gardeux5 Keywords: statistical software, open source, Big Data, visualization, parallel computing, machine learning, Bayesian modeling Abstract Abstract: This article discusses selected statistical software, aiming to help readers find the right tool for their needs. We categorize software into three classes: Statisti- cal Packages, Analysis Packages with Statistical Libraries, and General Programming Languages with Statistical Libraries. Statistical and analysis packages often provide interactive, easy-to-use workflows while general programming languages are built for speed and optimization. We emphasize each software's defining characteristics and discuss trends in popularity. The concluding sections muse on the future of statistical software including the impact of Big Data and the advantages of open-source languages. This article discusses selected statistical software, aiming to help readers find the right tool for their needs (not provide an exhaustive list). Also, we acknowledge our experiences bias the discussion towards software employed in scholarly work. Throughout, we emphasize the software's capacity to analyze large, complex data sets (\Big Data"). The concluding sections muse on the future of statistical software. To aid in the discussion, we classify software into three groups: (1) Statistical Packages, (2) Analysis Packages with Statistical Libraries, and (3) General Programming Languages with Statistical Libraries. This structure -

STAT 3304/5304 Introduction to Statistical Computing

STAT 3304/5304 Introduction to Statistical Computing Statistical Packages Some Statistical Packages • BMDP • GLIM • HIL • JMP • LISREL • MATLAB • MINITAB 1 Some Statistical Packages • R • S-PLUS • SAS • SPSS • STATA • STATISTICA • STATXACT • . and many more 2 BMDP • BMDP is a comprehensive library of statistical routines from simple data description to advanced multivariate analysis, and is backed by extensive documentation. • Each individual BMDP sub-program is based on the most competitive algorithms available and has been rigorously field-tested. • BMDP has been known for the quality of it’s programs such as Survival Analysis, Logistic Regression, Time Series, ANOVA and many more. • The BMDP vendor was purchased by SPSS Inc. of Chicago in 1995. SPSS Inc. has stopped all develop- ment work on BMDP, choosing to incorporate some of its capabilities into other products, primarily SY- STAT, instead of providing further updates to the BMDP product. • BMDP is now developed by Statistical Solutions and the latest version (BMDP 2009) features a new mod- ern user-interface with all the statistical functionality of the classic program, running in the latest MS Win- dows environments. 3 LISREL • LISREL is software for confirmatory factor analysis and structural equation modeling. • LISREL is particularly designed to accommodate models that include latent variables, measurement errors in both dependent and independent variables, reciprocal causation, simultaneity, and interdependence. • Vendor information: Scientific Software International http://www.ssicentral.com/ 4 MATLAB • Matlab is an interactive, matrix-based language for technical computing, which allows easy implementation of statistical algorithms and numerical simulations. • Highlights of Matlab include the number of toolboxes (collections of programs to address specific sets of problems) available. -

Software Packages – Overview There Are Many Software Packages Available for Performing Statistical Analyses. the Ones Highlig

APPENDIX C SOFTWARE OPTIONS Software Packages – Overview There are many software packages available for performing statistical analyses. The ones highlighted here are selected because they provide comprehensive statistical design and analysis tools and a user friendly interface. SAS SAS is arguably one of the most comprehensive packages in terms of statistical analyses. However, the individual license cost is expensive. Primarily, SAS is a macro based scripting language, making it challenging for new users. However, it provides a comprehensive analysis ranging from the simple t-test to Generalized Linear mixed models. SAS also provides limited Design of Experiments capability. SAS supports factorial designs and optimal designs. However, it does not handle design constraints well. SAS also has a GUI available: SAS Enterprise, however, it has limited capability. SAS is a good program to purchase if your organization conducts advanced statistical analyses or works with very large datasets. R R, similar to SAS, provides a comprehensive analysis toolbox. R is an open source software package that has been approved for use on some DoD computer (contact AFFTC for details). Because R is open source its price is very attractive (free). However, the help guides are not user friendly. R is primarily a scripting software with notation similar to MatLab. R’s GUI: R Commander provides limited DOE capabilities. R is a good language to download if you can get it approved for use and you have analysts with a strong programming background. MatLab w/ Statistical Toolbox MatLab has a statistical toolbox that provides a wide variety of statistical analyses. The analyses range from simple hypotheses test to complex models and the ability to use likelihood maximization. -

Introducing SPM® Infrastructure

Introducing SPM® Infrastructure © 2019 Minitab, LLC. All Rights Reserved. Minitab®, SPM®, SPM Salford Predictive Modeler®, Salford Predictive Modeler®, Random Forests®, CART®, TreeNet®, MARS®, RuleLearner®, and the Minitab logo are registered trademarks of Minitab, LLC. in the United States and other countries. Additional trademarks of Minitab, LLC. can be found at www.minitab.com. All other marks referenced remain the property of their respective owners. Salford Predictive Modeler® Introducing SPM® Infrastructure Introducing SPM® Infrastructure The SPM® application is structured around major predictive analysis scenarios. In general, the workflow of the application can be described as follows. Bring data for analysis to the application. Research the data, if needed. Configure and build a predictive analytics model. Review the results of the run. Discover the model that captures valuable insight about the data. Score the model. For example, you could simulate future events. Export the model to a format other systems can consume. This could be PMML or executable code in a mainstream or specialized programming language. Document the analysis. The nature of predictive analysis methods you use and the nature of the data itself could dictate particular unique steps in the scenario. Some actions and mechanisms, though, are common. For any analysis you need to bring the data in and get some understanding of it. When reviewing the results of the modeling and preparing documentation, you can make use of special features embedded into charts and grids. While we make sure to offer a display to visualize particular results, there’s always a Summary window that brings you a standard set of results represented the same familiar way throughout the application. -

Minitab Connecttm

TM MinitabMinitab Connect ConnectTM Data integration, automation, and governance for comprehensive insights 1 Minitab ConnectTM Minitab ConnectTM Access, blend, and enrich data from all your critical sources, for meaningful business intelligence and confident, informed decision making. Minitab ConnectTM empowers data users from across the enterprise with self-serve tools to transform diverse data into a governed network of data pipelines, to feed analytics initiatives and foster organization-wide collaboration. Users can effortlessly blend and explore data from databases, cloud and on-premise apps, unstructured data, spreadsheets, and more. Flexible, automated workflows accelerate every step of the data integration process, while powerful data preparation and visualization tools help yield transformative insights. 1 Data integration, automation, and governance for comprehensive insights Accelerate your digital transformation Minitab ConnectTM is empowering insights-driven organizations with tools to gather and share trusted data and actionable insights that drive their company forward. Enable next-generation analysis and reporting “The things we measure today are not even close to the things we measured three years ago. We’re able to do new things and iterate into the next generation of where we need to be instead of being mired in spreadsheets and PowerPoint decks.” - Senior Manager, Digital Search Analytics Telecommunications Industry Focus on business drivers, not manual tasks “Bringing this into our environment has really freed our analytics resources from the tactical tasks, and really allowed them to think strategically.” - Marketing Technology Manager, Finance Industry Empower users with sophisticated, no-code tools “The platform is really powerful. It has an ETL function but also has a front-end face to it. -

Assessment of the Impact of the Electronic Submission and Review Environment on the Efficiency and Effectiveness of the Review of Human Drugs – Final Report

Evaluations and Studies of New Drug Review Programs Under PDUFA IV for the FDA Contract No. HHSF223201010017B Task No. 2 Assessment of the Impact of the Electronic Submission and Review Environment on the Efficiency and Effectiveness of the Review of Human Drugs – Final Report September 9, 2011 Booz | Allen | Hamilton Assessment of the Impact of the Electronic Submission and Review Environment Final Report Table of Contents EXECUTIVE SUMMARY .............................................................................................................. 1 Assessment Overview .......................................................................................................... 1 Degree of Electronic Implementation ................................................................................... 1 Baseline Review Performance ............................................................................................. 2 Exchange and Content Data Standards ............................................................................... 4 Review Tools and Training Analysis .................................................................................... 5 Current Organization and Responsibilities ........................................................................... 6 Progress against Ideal Electronic Review Environment ....................................................... 6 Recommendations ............................................................................................................... 8 ASSESSMENT OVERVIEW ........................................................................................................ -

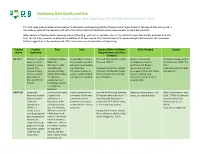

Optimizing Data Quality and Use Free Analysis, Visualization and Reporting (AVR) Software Selection Tool

Optimizing Data Quality and Use Free Analysis, Visualization and Reporting (AVR) Software Selection Tool This tool helps planners select a free Analysis, Visualization, and Reporting (AVR) software product appropriate for the type of data being used. It also outlines some of the necessary skill sets of the data analyst and additional system requirements to use these products. Note: Several software products requiring licensing fees (e.g., ESRI ArcGIS, Pentaho, SAS, STATA, SUDAAN) were intentionally excluded from this tool. This list is by no means an exhaustive collection of all open source AVR products relevant to epidemiological data analysis. PHII welcomes further suggestions to the development of this tool. Please send feedback to [email protected]. Product Product Pros Cons System/Other Software Skills Needed Source Name Summary Requirements and Data Formats Epi Info 7 Different modules Available in desktop Not available on Mac or Microsoft Windows XP or newer; Basic to intermediate Centers for Disease Control allow for survey (Microsoft Linux operating systems Microsoft .NET 4.0 knowledge of statistics; and Prevention. 2016. “Epi creation and data Windows), mobile (although these projects familiarity with Boolean Info.” capture. The and web/cloud are underway); Accepted data formats include: expressions and some https://www.cdc.gov/epiin Analysis module versions; Epi Map limitations in ability to ASCII text file, Microsoft Access, knowledge of Microsoft Access fo/index.html. can be used with and Epi Report allow support various ANOVA Microsoft Excel, Microsoft SQL helpful; mapping, map data imported for additional and regression methods Server Database projections and GIS basics if from 24 different visualization and using Epi Map formats. -

Simulation Study to Compare the Random Data Generation from Bernoulli Distribution in Popular Statistical Packages

Simulation Study to Compare the Random Data Generation from Bernoulli Distribution in Popular Statistical Packages Manash Pratim Kashyap Department of Business Administration Assam University, Silchar, India [email protected] Nadeem Shafique Butt PIQC Institute of Quality Lahore, Pakistan [email protected] Dibyojyoti Bhattacharjee Department of Business Administration Assam University, Silchar, India [email protected] Abstract In study of the statistical packages, simulation from probability distributions is one of the important aspects. This paper is based on simulation study from Bernoulli distribution conducted by various popular statistical packages like R, SAS, Minitab, MS Excel and PASW. The accuracy of generated random data is tested through Chi-Square goodness of fit test. This simulation study based on 8685000 random numbers and 27000 tests of significance shows that ability to simulate random data from Bernoulli distribution is best in SAS and is closely followed by R Language, while Minitab showed the worst performance among compared packages. Keywords: Bernoulli distribution, Goodness of Fit, Minitab, MS Excel, PASW, SAS, Simulation, R language 1. Introduction Use of Statistical software is increasing day by day in scientific research, market surveys and educational research. It is therefore necessary to compare the ability and accuracy of different statistical softwares. This study focuses a comparison of random data generation from Bernoulli distribution among five softwares R, SAS, Minitab, MS Excel and PASW (Formerly SPSS). The statistical packages are selected for comparison on the basis of their popularity and wide usage. Simulation is the statistical method to recreate situation, often repeatedly, so that likelihood of various outcomes can be more accurately estimated. -

Use of STATA in Pediatric Research -An Indian Perspective Who Is a Pediatrician ?

Use of STATA in Pediatric Research -An Indian Perspective Who is a Pediatrician ? Dr. Bhavneet Bharti, PGIMER- Chandigarh Background • Research is an important part of curriculum of pediatric medicine • Research Project is necessary for Postgraduates • In order to fulfill their MD/DM/MCH requirements thesis mandatory 3 Research Questions Pediatrics-Endless queries? • Which needle causes less pain in infants undergoing vaccination? • Which drug is better for the treatment of Pediatric HIV, sepsis and many other diseases Statistics and Pediatric Research • For answering these queries -Statistics plays increasingly important role • It is not possible, for example, to have a new drug treatment approved for use without solid, statistical evidence to support claims of efficacy and safety Statistics and research • Many new statistical methods have been developed with particular relevance for medical researchers • these methods can be applied routinely using statistical software packages Statistical softwares • Statistical knowledge of most physicians may be best described as “limited” Available Statistical Packages Proprietary Free Software Excel EpiInfo SPSS R STATA Revman MINITAB LibreOffice Calc SAS PSPP Comprehensive metanalysis Microsoft Excel Microsoft Excel COST PRO Nearly ubiquitous and is Individual License for Microsoft Office often pre-installed on new Professional $350 computers User friendly Volume Discounts available for large Very good for basic organizations and descriptive statistics, universities charts and plots -

Comparison of Three Common Statistical Programs Available to Washington State County Assessors: SAS, SPSS and NCSS

Washington State Department of Revenue Comparison of Three Common Statistical Programs Available to Washington State County Assessors: SAS, SPSS and NCSS February 2008 Abstract: This summary compares three common statistical software packages available to county assessors in Washington State. This includes SAS, SPSS and NCSS. The majority of the summary is formatted in tables which allow the reader to more easily make comparisons. Information was collected from various sources and in some cases includes opinions on software performance, features and their strengths and weaknesses. This summary was written for Department of Revenue employees and county assessors to compare statistical software packages used by county assessors and as a result should not be used as a general comparison of the software packages. Information not included in this summary includes the support infrastructure, some detailed features (that are not described in this summary) and types of users. Contents General software information.............................................................................................. 3 Statistics and Procedures Components................................................................................ 8 Cost Estimates ................................................................................................................... 10 Other Statistics Software ................................................................................................... 13 General software information Information in this section was