Definitions ,Ieed in Lr;Cle Program Management. Part 2 of The

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Open Inquiry Problem Finding 1 Running Head

Running head: OPEN INQUIRY PROBLEM FINDING. The Creative Process of Problem Finding Manifested in Open Inquiry. Frank LaBanca, EdD, Center for 21st Century Skills at EDUCATION CONNECTION, Litchfield, CT. Presented at: The Qualitative Report Third Annual Conference, Ft. Lauderdale, FL, January 2012. Web: http://www.labanca.net; blog: http://problemfinding.labanca.net; ph: 203.947.2850; mail: 355 Goshen Road, PO Box 909, Litchfield, CT 06759-0909. Abstract: Problem finding is a creative process where individuals develop original ideas for study. Secondary science students who successfully participate in authentic open inquiry studies must engage in problem finding to determine viable and suitable topics. A multicase study examined problem finding strategies employed by highly successful students who presented a project at a regional and international science fair. Behaviors are examined through lenses of inquiry, creativity, and situated cognition. Introduction: Secondary school teachers have long valued developing student problem solving skills. Students are often challenged to use a variety of strategies to identify problems and their implications, develop action plans, utilize a variety of relevant sources, information, and data to address the problems, and formulate solutions. Problem solving techniques can be highly idiosyncratic. However, in perhaps too many educational settings involving problem solving, teachers provide students with the problem or question, and sometimes even the methodology for determining the solution. This approach may be due to curricular requirements, time factors, the limited scope and goals of particular learning modules, or the inability of teachers to effectively employ inquiry-oriented instructional techniques.

3 Visual Analogies: Static and Dynamic

Developing and Evaluating Visual Analogies to Support Insight and Creative Problem Solving. Eric Luchian, BFA, MFA, School of Computing and Communications, Lancaster University. A thesis submitted for the degree of Doctor of Philosophy, 31st March 2019. Declaration: This written thesis, including the portfolio of created visual analogies in static and multimedia formats (i.e., www.luchian.info), results entirely from my own work except where jointly authored (see Appendix 14-18) in publications as follows: 1. Luchian, E., & Sas, C. (2019). Erroneous Features in Freehand Sketching: Opportunities to Generate Visual Analogies. Proceedings of the 23rd World Multi-Conference on Systemics, Cybernetics and Informatics: WMSCI 2019. 1, pp. 86-92. Orlando, USA: IIIS. 2. Luchian, E., & Sas, C. (2011). Image Schemata in Animated Metaphors for Insight Problem Solving. C&C ’11 Proceedings of the 8th ACM conference on Creativity and Cognition (pp. 369-370). Atlanta, USA: ACM. 3. Luchian, E. (2011). Develop and Evaluate Visual Analogies to Support Insight and Creative Problem Solving. Proceedings of the DESIRE'11 Conference on Creativity and Innovation in Design (pp. 433-434). Eindhoven, The Netherlands: ACM. 4. Sas, C., Luchian, E., & Ball, L. (2010). Investigating Visual Analogies for Visual Insight Problems. Proceedings of the 1st DESIRE Network Conference on Creativity and Innovation in Design (pp. 90-100). Aarhus, Denmark: ACM. I hereby declare that work from this thesis has not been offered previously for any other degree or diploma to this or any other university, and to the best of my knowledge and belief, contains no material written or published by another person, except where due reference has been made in the text.

Problem Discovery As a Collaborative, Creative, and Method-Guided Search for The

Working Paper Series No. 14-0003. Problem Discovery as a Collaborative, Creative, and Method-Guided Search for the “Real Problems” as Raw Diamonds of Innovation, by Gerald Steiner, Visiting Scholar, Program on Transatlantic Relations, Weatherhead Center for International Affairs, Harvard University; Associate Professor for Systemic and Sustainability Management, Institute of Systems Sciences, Innovation, and Sustainability Research, University of Graz, Austria. 1737 Cambridge Street, Cambridge, MA 02138; tel 617.495.4420; fax 617.495.8292; http://www.wcfia.harvard.edu. Acknowledgements: This work was conducted as part of Visiting Scholarship at the Weatherhead Center for International Affairs (WCFIA) at Harvard University. I would like to thank my colleagues from the WCFIA for their generous support and for helpful discussions and comments on various aspects of this research. Abstract: This paper poses that the creative search for frequently hidden “real” problems is critical if innovation aims at comprehensive system improvements and changes in thinking paradigms, rather than simple, incremental changes. These hidden real problems can perhaps best be symbolized by raw diamonds, which one strives to find in order to then grind them into sparkling diamonds, i.e. innovation. Currently, problem solving-related research focuses on the analysis and solution of predefined problems, with little emphasis on problem reframing and systemic discovery; moreover, inter- and transdisciplinary collaborations for problem finding and the application of convoluted methods receive little attention.

Problem Finding and Its Impact on Problem Solving. a Dissertation Su

UNIVERSITY OF CALIFORNIA, MERCED. What am I supposed to do? Problem Finding and its impact on Problem Solving. A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Cognitive and Information Sciences by Daniel Matthew Holman. Committee in charge: Professor Jeffrey Yoshimi, Chair; Professor David C. Noelle; Professor Michael Spivey. 2018. Copyright © Daniel Holman, 2018. All rights reserved. The Dissertation of Daniel Matthew Holman is approved, and it is acceptable in quality and form for publication on microfilm and electronically. University of California, Merced, 2018. Dedicated to my mom, dad and sister, without whom I would not have been able to reach this point, and whose love, support and belief in me have been invaluable. I also dedicate this work to the many friends, family members, colleagues and communities that have supported and encouraged me to succeed. I am immensely grateful to you all, and fully aware of how fortunate I am to have more names to list than I possibly could. Contents: Chapter 1: Problem Finding and Problem Solving; 1.1 Definition of Problems; 1.2 The Problem Cycle; 1.3 Prior Experimental Work; Chapter 2

Innovative Design Thinking Process with TRIZ Kyeongwon Lee

Innovative Design Thinking Process with TRIZ. Kyeongwon Lee. To cite this version: Kyeongwon Lee. Innovative Design Thinking Process with TRIZ. 18th TRIZ Future Conference (TFC), Oct 2018, Strasbourg, France. pp.241-252, 10.1007/978-3-030-02456-7_20. hal-02279754. HAL Id: hal-02279754, https://hal.inria.fr/hal-02279754. Submitted on 5 Sep 2019. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Distributed under a Creative Commons Attribution 4.0 International License. Kyeongwon Lee, Dept. of Mechanical Design Engineering, Korea Polytechnic University, Siheung City, Gyeonggi-Do, 429-793, Korea. Abstract: This paper describes an innovative design thinking process with simplified TRIZ that can be used to resolve contradictions in all domains with words such as “dilemma”, “conflict”, “contradiction” and “paradox”. The design thinking process that has been used at d.school at Stanford and Potsdam University is popular as a human-centered innovation process with “Empathy” and “Define” stages for human-centered problem finding. However, many results may not be innovative because it mostly uses “Group brainstorming” at the “Ideate” stage.

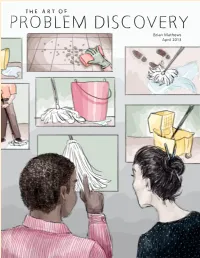

The Art of Problem Discovery, Brian Mathews, March 2013

THE ART OF PROBLEM DISCOVERY. Brian Mathews, Associate Dean for Learning & Outreach at Virginia Tech. Invited Paper, ACRL Conference 2013, Indianapolis. March 2013. Prologue: How can we make the floors cleaner? That’s the question that Procter & Gamble asked its chemists. Years of working on this problem, however, yielded no improved cleaning solution. So Procter & Gamble took a different approach and hired a design firm. Rather than focusing on chemical improvements, the designers watched people clean. Observations uncovered the real problem: mops. People spent more time cleaning their mops than they did cleaning their floors. The mop was an ineffective tool for the task at hand. This insight led to the development of the Swiffer, a billion-dollar product line for Procter & Gamble. The lesson learned is that innovation isn’t simply about asking the right questions; it also involves framing questions differently. Introduction: We’re all problem solvers. Every day we deal with unexpected issues commanding our attention. We fix things when they break and correct errors when we detect them. We answer tough questions and make difficult decisions. This paper isn’t about problem solving. Instead, I want to draw attention to how we think about problems: how we can discover and convert them into new strategic initiatives. Often we jump prematurely into problem solving mode before fully exploring the wide range of possibilities. This oversight can ultimately generate solutions that are good enough, but not as good as they could be. Just to be clear, the problems under consideration

Recent Trends in Problem Solving Through TRIZ: a Review

ISSN (Online): 2319-8753, ISSN (Print): 2347-6710. International Journal of Innovative Research in Science, Engineering and Technology (A High Impact Factor, Monthly, Peer Reviewed Journal). Visit: www.ijirset.com. Vol. 7, Issue 1, January 2018. Recent Trends in Problem Solving through TRIZ: A Review. Shiv Kumar (1), P. S. Bajwa (2), Kulbhushan (3). (1) P.G. Student, Department of Mechanical Engineering, L. R. Institute of Engg. & Technology, Solan (H. P.), India; (2) Asst. Professor, Department of Mechanical Engineering, L. R. Institute of Engg. & Technology, Solan (H. P.), India; (3) Asst. Professor, Department of Mechanical Engineering, L. R. Institute of Engg. & Technology, Solan (H. P.), India. ABSTRACT: With the latest advances in technology along with the human advancements, a hard-hitting contest exists between various organizations and the top management. At this stage, the Research and Development (R & D) and Marketing of products are more important. As a result, the multinational enterprises should rely on both the innovations and marketing strategies of products for higher competency. TRIZ is a premier disruptive technology for innovation that can be used all through numerous industries and sciences. Essentials of TRIZ can be successfully used by a wide range of people, from children to adults. The genesis of TRIZ is derived from empirical data, patents. This paper introduces the concept of ideality and an overview of the literature of TRIZ, aiming to benefit the production industries in India. KEYWORDS: TRIZ, Productivity, Innovation, inventive. I. INTRODUCTION: TRIZ is a Russian acronym that indicates "Theory of Inventive Problem Solving". In 1946, Genrich Altshuller, the creator of TRIZ, was a patent assessor at the Russian naval patent office at the young age of 20.

The Problems with Problem Solving: Reflections on the Rise, Current Status, and Possible Future of a Cognitive Research Paradigm

The Problems with Problem Solving: Reflections on the Rise, Current Status, and Possible Future of a Cognitive Research Paradigm. Stellan Ohlsson. Abstract: The research paradigm invented by Allen Newell and Herbert A. Simon in the late 1950s dominated the study of problem solving for more than three decades. But in the early 1990s, problem solving ceased to drive research on complex cognition. As part of this decline, Newell and Simon’s most innovative research practices, especially their method for inducing subjects’ strategies from verbal protocols, were abandoned. In this essay, I summarize Newell and Simon’s theoretical and methodological innovations and explain why their strategy identification method did not become a standard research tool. I argue that the method lacked a systematic way to aggregate data, and that Newell and Simon’s search for general problem solving strategies failed. Paradoxically, the theoretical vision that led them to search elsewhere for general principles led researchers away from studies of complex problem solving. Newell and Simon’s main enduring contribution is the theory that people solve problems via heuristic search through a problem space. This theory remains the centerpiece of our understanding of how people solve unfamiliar problems, but it is seriously incomplete. In the early 1970s, Newell and Simon suggested that the field should focus on the question where problem spaces and search strategies come from. I propose a breakdown of this overarching question into five specific research questions. Principled answers to those questions would expand the theory of heuristic search into a more complete theory of human problem solving.

Scaling up Analogical Innovation with Crowds and AI

Scaling up analogical innovation with crowds and AI. Aniket Kittur (a,1), Lixiu Yu (b), Tom Hope (c), Joel Chan (d), Hila Lifshitz-Assaf (e), Karni Gilon (c), Felicia Ng (a), Robert E. Kraut (a), and Dafna Shahaf (c). (a) Human–Computer Interaction Institute, Carnegie Mellon University, Pittsburgh, PA 15213; (b) Robert Bosch, Research and Technology Center, Division 3, Pittsburgh, PA 15222; (c) School of Computer Science and Engineering, The Hebrew University of Jerusalem, 9190401 Jerusalem, Israel; (d) College of Information Studies, University of Maryland, College Park, MD 20742; and (e) Department of Information, Operations and Management Sciences, Leonard N. Stern School of Business, New York University, New York, NY 10012. Edited by Ben Shneiderman, University of Maryland, College Park, MD, and accepted by Editorial Board Member Eva Tardos November 7, 2018 (received for review May 20, 2018). Abstract: Analogy, the ability to find and apply deep structural patterns across domains, has been fundamental to human innovation in science and technology. Today there is a growing opportunity to accelerate innovation by moving analogy out of a single person’s mind and distributing it across many information processors, both human and machine. Doing so has the potential to overcome cognitive fixation, scale to large idea repositories, and support complex problems with multiple constraints. From the main text: ... Wide Web. Here we describe initial steps toward a future where people, augmented by machines, can search through billions of sources based on deep structural similarity rather than simple keywords to solve important societal problems. For example, scientists or designers might find potential solutions in other fields to the problems they are trying to solve, and lawyers or legal scholars might find legal precedents sharing similar systems of

An Exploration of Developing Active Exploring and Problem Solving Skill Lego Robot Course by the Application of Anchored Instruction Theory

Vol. 8(17), pp. 1551-1558, 10 September, 2013. DOI: 10.5897/ERR012.153. Educational Research and Reviews, ISSN 1990-3839, © 2013 Academic Journals, http://www.academicjournals.org/ERR. Full Length Research Paper. An exploration of developing active exploring and problem solving skill Lego Robot Course by the application of anchored instruction theory. Chen-Yuan Chen, Global Earth Observation and Data Analysis Center (GEODAC), National Cheng Kung University, No 1, Ta-Hsueh Road, Tainan 701, Taiwan. Accepted 5 August, 2013. In recent years, researches had shown that the development of problem solving skill became important for education, and the educational robots are capable for promoting students not only understand the physical and mathematical concepts, but also have active and constructive learning. Meanwhile, the importance of situation in education is rising, since studies had declared that knowledge’s acquirement always occurs when people experience in a particular situation. The situation is indivisible from one’s learning activities. In this study we tried to integrate four theories: the anchored instruction which originated from the situated cognition; the web-based learning which is the main way of searching information today; the problem-based learning which focus on development of students’ problem solving skills; and the flow theory which is the best way to understand motivation. Key words: Lego mindstorms NXT robotic course, anchored instruction, active exploring, problem solving skill, flow theory. INTRODUCTION: Researchers had declared that it was increasingly important for teachers to help students develop skills to ... situation. Brown et al. (1989) had indicated that the situation is indivisible from people’s cognition.

'Putting Blinkers on a Blind Man'

‘Putting blinkers on a blind man’: Providing cognitive support for creative processes with environmental cues. Christensen, B. T. & Schunn, C. D. Abstract: Random cues may be both beneficial and harmful to creativity. Theories of analogical transfer and association assume that cues are helpful in generating new ideas. However, theories of path-of-least-resistance, fixation, and unconscious plagiarism say that cues can lead you into traps. Empirical research partly supports both theories. So what is a practitioner to do in selecting random cues for enhancing creativity? It is suggested that the answer is found in looking at the relationship between cues and the creative cognitive processes and their functions, and how this leads to creative outcome originality and usefulness. Two processes are examined: analogical transfer and mental simulation. It is recommended that random between-domain cues be used to increase between-domain analogizing, primarily with instruction to make connections, leading to product originality. Random within-domain cues should be used to increase within-domain analogizing. Due to property transfer, close analogies will have a negative impact on the originality of the outcome in problem solving instances, but will lead to increased usefulness in problem identifying instances. Random end-user cues will lead to greater amounts of end-user simulations of usability and user preferences, and thus to higher levels of product usefulness. 1. Introduction: Are you stuck on a creative problem, and don’t know where to go from here? Try this: In what ways might you use a stork to solve your problem? A key chain? A foreign country? Two friends? A pair of pliers? Have you solved the problem yet? Random or blind input into the ideational stages has long been thought to be potentially beneficial for solving creative problems.

The Influence of Teaching Methods on Creative Problem Finding

Thinking Skills and Creativity 24 (2017) 86–94. Journal homepage: http://www.elsevier.com/locate/tsc. The influence of teaching methods on creative problem finding. Xiaojuan Jia (a,b), Weiping Hu (a,b,*), Fengchun Cai (a,b), Haihong Wang (a,b), Jing Li (a,b), Mark A. Runco (c,**), Yinghe Chen (d). (a) MOE Key Laboratory of Modern Teaching Technology, Shaanxi Normal University, Xi’an, China; (b) National Innovation Center for Assessment of Basic Education Quality, China; (c) American Institute for Behavioral Research & Technology, La Jolla, CA, USA; (d) Beijing Normal University, Beijing, China. Article history: Received 24 May 2015; received in revised form 5 January 2017; accepted 2 February 2017; available online 13 February 2017. Keywords: Teaching methods; Inquiry-based teaching; Lecture-based teaching. Abstract: Problem finding is an important component of creativity, but research on it does not offer much guidance to teaching. The present research takes a step in that direction with two investigations. The first was a between-subjects evaluation of a short-term classroom teaching process, using creative Chinese problem finding (CCPF) to assess the impact. The second was a long-term, mixed-design study of creative scientific problem finding (CSPF) as it developed in response to teaching that emphasized problem finding. Results showed that there were improvements, but different teaching methods had varied impact on students’ creative problem finding (CPF) performance. A mixed teaching method that included both lecture- and inquiry-based teaching was superior to the lecture-based or inquiry-based methods when used separately.