Moderation / Interactions – Variation in slopes across groups

Inconsistency as a moderator – A review of moderator variables.

[DataSet1] G:\MdbR\0DataFiles\FOR_090823.sav

We’ve looked at inconsistency of self report in some of our recent research.

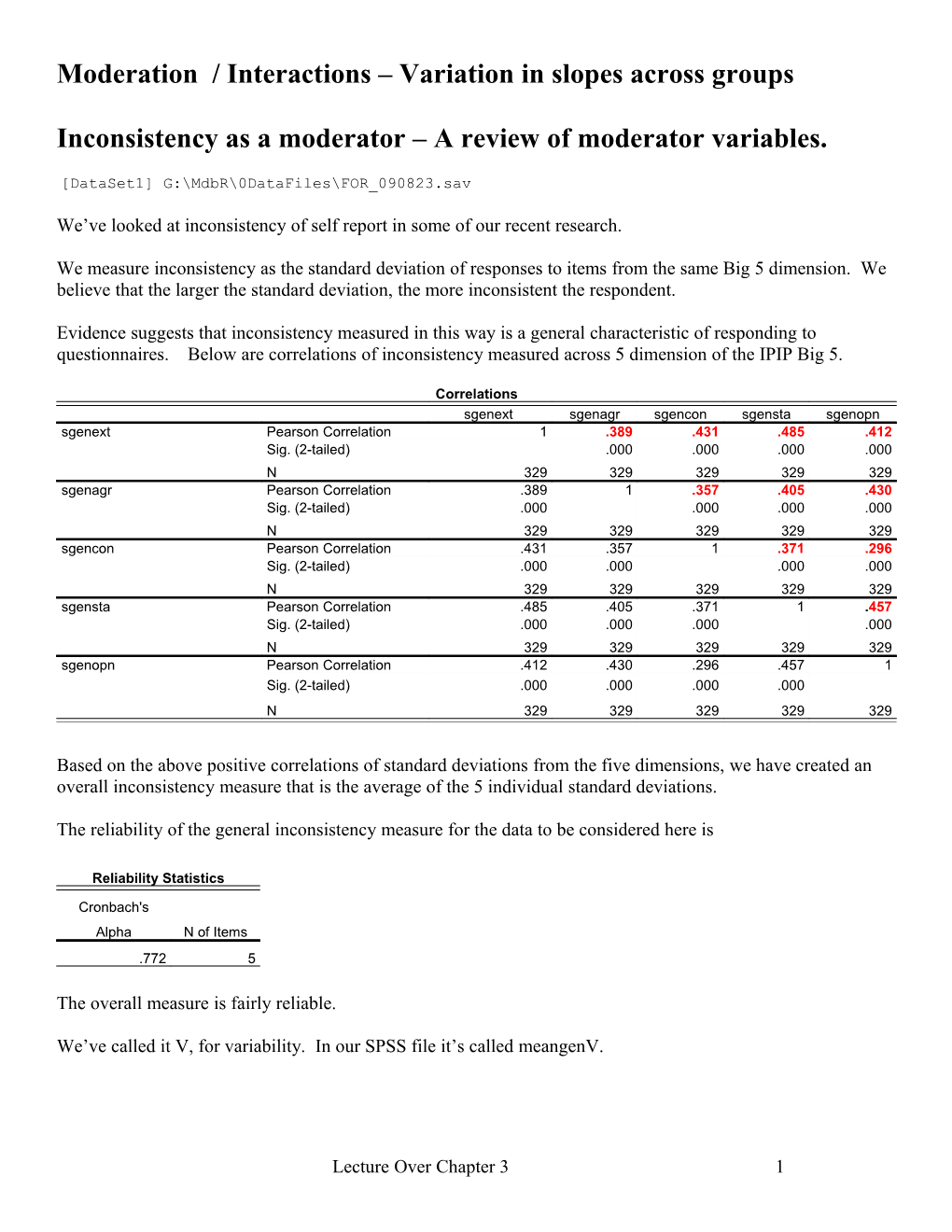

We measure inconsistency as the standard deviation of responses to items from the same Big 5 dimension. We believe that the larger the standard deviation, the more inconsistent the respondent.

Evidence suggests that inconsistency measured in this way is a general characteristic of responding to questionnaires. Below are correlations of inconsistency measured across 5 dimension of the IPIP Big 5.

Correlations sgenext sgenagr sgencon sgensta sgenopn sgenext Pearson Correlation 1 .389 .431 .485 .412 Sig. (2-tailed) .000 .000 .000 .000 N 329 329 329 329 329 sgenagr Pearson Correlation .389 1 .357 .405 .430 Sig. (2-tailed) .000 .000 .000 .000 N 329 329 329 329 329 sgencon Pearson Correlation .431 .357 1 .371 .296 Sig. (2-tailed) .000 .000 .000 .000 N 329 329 329 329 329 sgensta Pearson Correlation .485 .405 .371 1 .457 Sig. (2-tailed) .000 .000 .000 .000 N 329 329 329 329 329 sgenopn Pearson Correlation .412 .430 .296 .457 1 Sig. (2-tailed) .000 .000 .000 .000 N 329 329 329 329 329

Based on the above positive correlations of standard deviations from the five dimensions, we have created an overall inconsistency measure that is the average of the 5 individual standard deviations.

The reliability of the general inconsistency measure for the data to be considered here is

Reliability Statistics

Cronbach's Alpha N of Items .772 5

The overall measure is fairly reliable.

We’ve called it V, for variability. In our SPSS file it’s called meangenV.

Lecture Over Chapter 3 1 Now that we have a new hammer, the next thing to do is find out what kinds of nails it drives.

We correlated the overall inconsistency measure with a collection of variables. Here are the correlations . . .

Correlations

meanGenV Mean of Gen Condition B5 scale SDs

Pearson Correlatio n Sig. (2-tailed) N wpt Wonderlic Personnel Test score. -.239 .000 310 eosgpa Acc Records GPA at end of semester in which -.159 .004 329 participated genext General Instructions Extraversion Scale score -.107 .052 329 genagr -.016 .778 329 gencon .105 .056 329 gensta -.172 .002 329 genopn .067 .229 329 BIDR self deception scale scores .063 .256 329 BIDR impression management scale scores -.016 .773 329 Sbidrsd: SD of responses to BIDR self deception items .585 .000 329 Sbidrim: SD of responses to BIDR impr management items .396 .000 329

As you might expect, the largest correlations in the above table are of overall inconsistency measured from the Big 5 questionnaire with inconsistency measured in the BIDR questionnaire. The BIDR is a questionnaire designed to assess socially desirable responding. We could have included the BIDR SDs in the computation of meangenV but we wanted to base it on only Big 5 items, since the Big 5 is the questionnaire most likely to be found in selection settings.

The 2nd largest correlation is that of inconsistency with cognitive ability, as measured by the WPT.

Inconsistency is also correlated with eosgpa – end-of-semester grade point average.

Finally, it’s negatively correlated with overall emotional stability. What’s that about??

Our main interest was on the correlation with eosgpa. Since overall inconsistency is a “free” measure, obtained simply by putting the already existing Big 5 items into the standard deviation formula, if it were to increase the validity of prediction of criteria like gpa, that would make it very special indeed.

Of course, the correlation with wpt suggests that inconsistency might be redundant with cognitive ability as a predictor. We’ll only know that after the regression.

But since there are many instances in which a measure of cognitive ability would not be used in selection, the overall inconsistency measure might be quite useful regardless of whether or not it correlated with cognitive ability.

Lecture Over Chapter 3 2 So the first analysis was a three-predictor analysis with eosgpa as the criterion and gencon, wpt, and overall inconsistency as predictors. Here’s the result . . .

Model Summary Adjusted R Std. Error of the Model R R Square Square Estimate 1 .357a .128 .119 .565897 a. Predictors: (Constant), meanGenV Mean of Gen Condition B5 scale SDs, gencon, wpt Wonderlic Personnel Test score.

Coefficientsa Standardized Unstandardized Coefficients Coefficients Model B Std. Error Beta t Sig. 1 (Constant) 1.731 .278 6.227 .000 wpt Wonderlic Personnel .026 .006 .263 4.746 .000 Test score. gencon .177 .040 .241 4.458 .000 meanGenV Mean of Gen -.193 .103 -.103 -1.874 .062 Condition B5 scale SDs a. Dependent Variable: eosgpa Acc Records GPA at end of semester in which participated

Inconsistency does not officially add to multiple R when controlling for wpt and gencon (p=.062), although it is close enough to tantalize.

Does inconsistency add incremental validity to just conscientiousness, leaving cognitive ability out of the equation, as would be the case if an organization was concerned about the adverse impact associated with use of cognitive ability tests?

The correlation of eosgpa with gencon alone is .196 (p < .001). Here are the results when inconsistency is added to the mix.

Model Summary Adjusted R Std. Error of the Model R R Square Square Estimate 1 .267a .071 .065 .579975 a. Predictors: (Constant), meanGenV Mean of Gen Condition B5 scale SDs, gencon Coefficientsa Standardized Unstandardized Coefficients Coefficients Model B Std. Error Beta t Sig. 1 (Constant) 2.588 .212 12.185 .000 gencon .157 .039 .215 4.007 .000 meanGenV Mean of Gen -.338 .100 -.182 -3.390 .001 Condition B5 scale SDs a. Dependent Variable: eosgpa Acc Records GPA at end of semester in which participated

Oh, yeah!! The contribution of inconsistency to prediction is significant. Adding this free measure increases R from .196 to .267, an increase of 30% in R. Although the overall R is not terribly high, the cost to the test administrator is minimal.

Now that inconsistency has been established as a predictor of an academic criterion, it’s time to think more about what inconsistency means. It would seem that inconsistency of responding would mean that the Lecture Over Chapter 3 3 respondent’s picture of himself or herself is a little bit cloudy or fuzzy. This suggests that the score on the conscientiousness scale, for example, for an inconsistent responder may not be as accurate a representation of that person’s conscientiousness as would the same score obtained from a consistent responder.

This suggests that if conscientiousness is a predictor of eosgpa (which it is), the relationship of conscientiousness scores of inconsistent responders to gpa would be sloppier, fuzzier than the relationship of conscientiousness scores of consistent responders. This suggests that the strength of the eosgpa to conscientiousness relationship might depend on the consistency of the responders - that inconsistency might moderate the relationship of eosgpa to conscientiousness. Following is a moderated regression analysis testing this hypothesis.

Model Summary Adjusted R Std. Error of the Model R R Square Square Estimate 1 .296a .087 .079 .575769 a. Predictors: (Constant), genCXGenV, gencon, meanGenV Mean of Gen Condition B5 scale SDs

Coefficientsa Standardized Unstandardized Coefficients Coefficients Model B Std. Error Beta t Sig. 1 (Constant) 1.010 .689 1.465 .144 gencon .481 .140 .658 3.431 .001 meanGenV Mean of Gen 1.030 .578 .554 1.783 .076 Condition B5 scale SDs genCXGenV -.279 .116 -.907 -2.404 .017 a. Dependent Variable: eosgpa Acc Records GPA at end of semester in which participated

The product is significant, which indicates that moderation is occurring. But what does that mean?

Here’s the equation

Y = 1.010 + .481*C + 1.030*V - .279*V*C

Y = 1.010 + 1.030*V + (.481 - .279*V)*C The red’d term is the moderator.

Suppose we identify a group for which V = 0, so that the respondents are perfectly consistent: Y = 1.010 + .481*C

Suppose we identify a group for which V = 1.5, so that the respondents are quite inconsistent: Y = 2.555 + .062*C

This shows that the larger the amount of inconsistency, the shallower the slope relating Y to C. Conversely, the less the amount of inconsistency, the steeper the slope – the stronger the relationship.

The difference can be better appreciated by forming two inconsistency groups – those respondents with high inconsistency and those with low inconsistency. Using a median split, two such groups were formed. The relationship of eosgpa to C for each group is plotted in the following figure.

Lecture Over Chapter 3 4 Group 0 (Consistent): r= .369

Group 1 (Inconsistent): r=.095

Note from the SPSS output on the previous page that the R for the whole sample is now .296, up from .196 for conscientiousness alone and up from .267 based on conscientiousness and inconstancy. So adding the product has done two things . . .

First, a theoretical payoff. It has broadened our understanding of the role of inconsistency in personality questionnaires. We now know that while inconsistency is itself a predictor, we also know that inconsistency affects how other personality characteristics predict.

Secondly, a practical payoff. The product term has increased validity significantly, bringing the validity of this single Big 5 personality questionnaire nearer the respectable range, reserved for only job knowledge and cognitive ability tests and unstructured interviews (as shown in Frank Schmidt’s RCIO presentation.)

So, moderation occurs when one variable (inconsistency) affects the slope of the relationship of a criterion to some predictor (conscientiousness).

This can be investigated in multilevel contexts.

It may be that organizational characteristics affect the slope of the relationship of a level 1 criterion to some level 1 predictor.

Such a dependence is easily handled in the multilevel framework. We’ll first examine the effect of simple random variation in slopes from school to school.

Lecture Over Chapter 3 5 Step 4. (p 93) Level 1 Relationship with intercept related to Level 2 characteristics as in previous step and random Level 1 slope. (presented horribly on p93) (data are still ch3multilevel.sav)

Level 1 Model Yij = B0j + B1j(sesij) + eij

Level 2 Intercept Model B0j = g00 + g01(ses_mean)j + g02(per4yrc)j + g03(public)j + u0j

Level 2 Slope Model B1j = g10 + u1j This is new – the u1j is a random slope difference from the whole sample mean slope.

Subscript rules for g

1st subscript designates which Level 1 coeff is being modeled – 0 for intercept; 1, 2, etc for slopes 2nd subscript designates which coeff this is – 0 for intercept of the model; 1, 2, etc for a slope

This is a simple, but profound addition to the previous model of Step 3. It’s says that the average slope across all groups is g10, but that each individual group slope may vary about that average. The deviation of group j’s slope from the average is u1j. We won’t estimate the u1js, but we will estimate the variance of the u1js. If that variance is significantly greater than 0, it will cause us to look for reasons for that variation.

Combined Model B B 0j 1j

Yij = g00 + g01(ses_mean)j + g02(per4yrc)j + g03(public)j + u0j + (g10 + u1j )(sesij) + eij

Fixed Random F R R

Yij = g00 + g01(ses_mean)j + g02(per4yrc)j + g03(public)j + u0j + g10(ses) + u1j (ses) + eij

Intercept Slope

Note that I multiplied ses times g10 and u1j in the last equation. This highlights the moderation – the slope of ses may vary from group to group. Because u1j is a random quantity, the moderation is random.

Having two random components spawns a creature, the child of u0j and u1j, their covariance.

The addition of a 2nd random component, creates an emergent parameter that is the result of the inclusion of both in the model – the covariance of u0j and u1j. This covariance did not exist when there was only one random component (e.g., u0j) but it emerges whenever there are two or more random components in a model. We will have to account for it when specifying the model to SPSS.

Lecture Over Chapter 3 6 MIXED Specification

Since public is a dichotomy, it can be either a covariate or a factor. It was a factor in the previous analysis, I decided to make it a covariate in this analysis

Lecture Over Chapter 3 7 This tells MIXED that there is some random variation in the intercept from group to group.

This tells MIXED that there is some random variation in the slope from group to group.

The inclusion of ses in the Model: field is new – the slope of ses is now a random effect.

Lecture Over Chapter 3 8 Note that I have left out the “Covariances of random effects” specification for this example.

It should be checked. See the explanation below.

MIXED math WITH public ses_mean per4yrc ses /FIXED=ses_mean per4yrc ses | SSTYPE(3) /METHOD=REML /PRINT=G SOLUTION TESTCOV /RANDOM=INTERCEPT ses | SUBJECT(schcode) COVTYPE(VC).

I did not check the “Covariances of random effects” specification for this example because checking it caused the program to freak out. As the text mentions on page 98 in the paragraph just prior to “Step 5: . . .”, the problem was associated with inclusion of the public factor in the analysis.

I don’t know why I checked “Covariances of residuals” – it should not have been checked, since it’s not applicable here.

Lecture Over Chapter 3 9 Mixed Model Analysis

Model is Yij = g00 + g01(ses_mean)j + g02(per4yrc)j + g03(public)j + u0j + g10(ses) + u1j (ses) + eij

[DataSet1] g:\MdbT\P595C(Multilevel)\Multilevel and Longitudinal Modeling with IBM SPSS\Ch3Datasets&ModelSyntaxes\ch3multilevel.sav

Model Dimensionb

Number of Covariance Number of Subject Levels Structure Parameters Variables Fixed Effects Intercept 1 1 public 1 1 ses_mean 1 1 per4yrc 1 1 ses 1 1 Random Effects Intercept + sesa 2 Variance 2 schcode Components Residual 1 Total 7 8

a. As of version 11.5, the syntax rules for the RANDOM subcommand have changed. Your command syntax may yield results that differ from those produced by prior versions. If you are using version 11 syntax, please consult the current syntax reference guide for more information. b. Dependent Variable: math.

Information Criteriaa -2 Restricted Log Likelihood 48121.839 Akaike's Information Criterion 48127.839 (AIC) Hurvich and Tsai's Criterion 48127.842 (AICC) Bozdogan's Criterion (CAIC) 48151.342 Schwarz's Bayesian Criterion 48148.342 (BIC)

The information criteria are displayed in smaller- is-better forms. a. Dependent Variable: math.

Lecture Over Chapter 3 10 Fixed Effects

Model is Yij = g00 + g01(ses_mean)j + g02(per4yrc)j + g03(public)j + u0 j + g10(ses) + u1j (ses) + eij Type III Tests of Fixed Effectsa

Source Numerator df Denominator df F Sig. Intercept 1 419.501 14339.792 .000 g00 public 1 407.915 .191 .662 g03 ses_mean 1 698.064 71.910 .000 g01 per4yrc 1 410.212 8.449 .004 g02 ses 1 635.541 350.952 .000 g10 a. Dependent Variable: math. Estimates of Fixed Effectsa

95% Confidence Interval

Parameter Estimate Std. Error df t Sig. Lower Bound Upper Bound Intercept 56.469785 .471568 419.501 119.749 .000 55.542854 57.396716 g00 public -.119986 .274402 407.915 -.437 .662 -.659405 .419433 g03 ses_mean 2.659588 .313631 698.064 8.480 .000 2.043815 3.275361 g01 per4yrc 1.360179 .467933 410.212 2.907 .004 .440334 2.280025 g02 ses 3.163898 .168888 635.541 18.734 .000 2.832252 3.495544 g10 a. Dependent Variable: math. Covariance Parameters Estimates of Covariance Parametersa

95% Confidence Interval

Parameter Estimate Std. Error Wald Z Sig. Lower Bound Upper Bound

Residual 62.114614 1.111312 55.893 .000 59.974229 64.331386 Var eij

Intercept [subject = Variance 2.112261 .445499 4.741 .000 1.397075 3.193563 Var u0j schcode]

ses [subject = schcode] Variance 1.314246 .566455 2.320 .020 .564675 3.058824 Var u1j

a. Dependent Variable: math.

So there is significant variation in the slopes from school to school. This suggests that we should look for some reason for this variation – something that causes there to be a weak relationship of math to ses in some schools but a strong relationship in other schools.

Lecture Over Chapter 3 11 Step 4 redone, leaving out public. (as hinted at on p. 98)

Model is Yij = g00 + g01(ses_mean)j + g02(per4yrc)j + u0 j + g10(sesij) + u1j (ses) + eij

Lecture Over Chapter 3 12 Note that I requested Unstructured as the Covariance Type: for this analysis. Doing that causes the program to estimate the covariance of the random intercept and the random slope.

MIXED math WITH per4yrc ses ses_mean /CRITERIA=CIN(95) MXITER(100) MXSTEP(10) SCORING(1) SINGULAR(0.000000000001) HCONVERGE(0, ABSOLUTE) LCONVERGE(0, ABSOLUTE) PCONVERGE(0.000001, ABSOLUTE) /FIXED=per4yrc ses ses_mean | SSTYPE(3) /METHOD=REML /PRINT=G SOLUTION TESTCOV /RANDOM=INTERCEPT ses | SUBJECT(schcode) COVTYPE(UN).

Lecture Over Chapter 3 13 Mixed Model Analysis

Model is Yij = g00 + g01(ses_mean)j + g02(per4yrc)j + u0 j + g10(sesij) + u1j (ses) + eij

[DataSet1] G:\MDBO\html\myweb\PSY5950C\ch3multilevel.sav

Model Dimensiona Number of Levels Covariance Structure Number of Subject Variables Parameters Intercept 1 1

per4yrc 1 1 Fixed Effects ses 1 1

ses_mean 1 1 Random Effects Intercept + sesb 2 Unstructured 3 schcode Residual 1 Total 6 8 a. Dependent Variable: math. b. As of version 11.5, the syntax rules for the RANDOM subcommand have changed. Your command syntax may yield results that differ from those produced by prior versions. If you are using version 11 syntax, please consult the current syntax reference guide for more information.

Information Criteriaa -2 Restricted Log Likelihood 48095.742 Akaike's Information Criterion (AIC) 48103.742 Hurvich and Tsai's Criterion (AICC) 48103.747 Bozdogan's Criterion (CAIC) 48135.080 Schwarz's Bayesian Criterion (BIC) 48131.080 The information criteria are displayed in smaller-is-better forms. a. Dependent Variable: math.

Fixed Effects

Model is Yij = g00 + g01(ses_mean)j + g02(per4yrc)j + u0 j + g10(ses) + u1j (sesij) + eij

Type III Tests of Fixed Effectsa Source Numerator df Denominator df F Sig. Intercept 1 443.713 17653.626 .000 per4yrc 1 439.342 7.864 .005 ses 1 711.595 357.305 .000 ses_mean 1 382.531 76.531 .000 a. Dependent Variable: math.

Estimates of Fixed Effectsa Parameter Estimate Std. Error df t Sig. 95% Confidence Interval Lower Bound Upper Bound

Intercept 56.431147 .424719 443.713 132.867 .000 55.596435 57.265858 g00 per4yrc 1.318279 .470094 439.342 2.804 .005 .394367 2.242191 g02 ses 3.185864 .168542 711.595 18.903 .000 2.854965 3.516763 g10 ses_mean 2.457101 .280869 382.531 8.748 .000 1.904860 3.009342 g01 a. Dependent Variable: math.

Lecture Over Chapter 3 14 Covariance Parameters

Model is Yij = g00 + g01(ses_mean)j + g02(per4yrc)j + u0 j + g10(sesij) + u1j (ses) + eij

Estimates of Covariance Parametersa Parameter Estimate Std. Error Wald Z Sig. 95% Confidence Interval Lower Bound Upper Bound Residual 62.190380 1.105319 56.265 .000 60.061293 64.394939 Var eij UN (1,1) 2.036402 .440471 4.623 .000 1.332754 3.111553 Var u0j Intercept + ses [subject = UN (2,1) -1.594239 .330983 -4.817 .000 -2.242953 -.945525 schcode] Cov(u0j,u1j) UN (2,2) 1.329850 .532481 2.497 .013 .606702 2.914941 Var u1j a. Dependent Variable: math.

Random Effect Covariance Structure (G)a Intercept | ses | schcode schcode

Intercept | schcode 2.036402 -1.594239 Cov(u0j,u1j) ses | schcode -1.594239 1.329850 Var u1j Unstructured a. Dependent Variable: math.

The covariance of u0j and u1j is negative and significant. This means that in schools in which the intercept was large, the slope was small and in schools in which the intercept was small, the slope was large. The negative relationship between intercept and slope is often found.

Small intercept Large slope

Lecture Over Chapter 3 15 Step 5. Level 1 Relationship with intercept related to Level 2 characteristics and slope related to Level 2 characteristics (p98) START HERE ON 2/13/13 Level 1 Model Yij = B0j + B1j(sesij) + eij

Level 2 Intercept Model Eq 3.12 B0j = g00 + g01*ses_meanj + g02*per4yrcj + g03*publicj + u0j

Level 2 Slope Model (new to this Step; the text’s Eq 3.15 is incorrect) B1j = g10 + g11*ses_meanj + g12*per4yrcj + g13*publicj + u1j

Combined Model . . simply substituting for B0j and B1j

Yij = g00 + g01*ses_meanj + g02*per4yrcj + g03*publicj+ u0j + (g10 + g11*ses_meanj + g12*per4yrcj + g13*publicj + u1j)(ses) + eij

Level 2 Intercept Model for B0j Level 2 Slope Model for B1j

Rewriting the Combined Model propagating ses into the parentheses . . .

Yij = g00 + g01*ses_meanj + g02*per4yrcj + g03*publicj + u0j <---(Same as above)

+ g10*sesij + g11*ses_meanj*sesij + g12*per4yrcj*sesij + g13*publicj*sesij + u1j*sesij + eij

Note that this rewriting shows that having a Level 2 model of the Level 1 slope implies that the Level 2 variables moderate the relationship of Y to the Level 1 factor, ses.

g11*ses_meanj*sesij means that ses_mean moderates the relationship of Y to ses g12*Per4yrcj*sesij means that per4yrc moderates the relationship of Y to ses g13*Publicj*sesij means that public moderates the relationship of Y to ses

Rewritten to show the moderation of the Y~~ses relationship for each variable.

Yij = g00 + g01*ses_meanj + g02*per4yrcj + g03*publicj + u0j

+ g10*sesij + g11*ses_meanj*sesij + g12*per4yrcj*sesij + g13*publicj*sesij + u1j*sesij + eij

So, the slope of the relationship of Y to ses may depend on ses_mean; it may depend on per4yrc; and it may depend on public.

Showing the fixed and random effects

Fixed Random Fixed R R

Yij = g00 + g01*ses_meanj + g02*per4yrcj + g03*publicj + u0j + g10*sesij + g11*ses_meanj*sesij + g12*per4yrcj*sesij + g13*publicj*sesij + u1j*sesij + eij Lecture Over Chapter 3 16 Specifying the above model to MIXED

Specify all of the variables - Level 1, Level 2 intercept, and Level 2 slope variables.

In this case, the Level 2 intercept and Level 2 slope variables are identical. That won’t always be the case.

Lecture Over Chapter 3 17 Building the interaction terms . . . Done differently from the manner shown in the text.

First, put each variable into the model as a Main Effect. Click on each variable name and then click on Add.

Add the interaction terms using a method different from and easier than that used in the text.

Click on ses_mean, then CTRL-click on ses.

Make sure “Interaction” is showing on the button between the fields.

Click on “Add”

Repeat for each interaction . . .

Lecture Over Chapter 3 18 This tells MIXED that there is some random variation in the slope from group to group.

The above series of screen shots showed the specification of fixed variation from group to group depending on ses_mean, per4yrc, and public.

MIXED math WITH public ses_mean per4yrc ses /FIXED=public ses_mean per4yrc ses ses_mean*ses per4yrc*ses public*ses | SSTYPE(3) /METHOD=REML /PRINT=G SOLUTION TESTCOV /RANDOM=INTERCEPT ses | SUBJECT(schcode) COVTYPE(VC).

Lecture Over Chapter 3 19 Mixed Model Analysis

Yij = g00 + g01*ses_meanj + g02*per4yrcj + g03*publicj + u0j + g10*sesij + g11*ses_mean*sesij + g12*per4yrc*sesij + g13*public*sesij + u1j*sesij + eij

[DataSet1] g:\MdbT\P595C(Multilevel)\Multilevel and Longitudinal Modeling with IBM SPSS\Ch3Datasets&ModelSyntaxes\ch3multilevel.sav Model Dimensionb

Number of Covariance Number of Subject Levels Structure Parameters Variables Fixed Effects Intercept 1 1 public 1 1 ses_mean 1 1 per4yrc 1 1 ses 1 1 ses_mean * ses 1 1 per4yrc * ses 1 1 public * ses 1 1 Random Effects Intercept + sesa 2 Variance 2 schcode Components Residual 1 Total 10 11

a. As of version 11.5, the syntax rules for the RANDOM subcommand have changed. Your command syntax may yield results that differ from those produced by prior versions. If you are using version 11 syntax, please consult the current syntax reference guide for more information. b. Dependent Variable: math.

Information Criteriaa -2 Restricted Log Likelihood 48117.449 Akaike's Information Criterion 48123.449 (AIC) Hurvich and Tsai's Criterion 48123.452 (AICC) Bozdogan's Criterion (CAIC) 48146.951 Schwarz's Bayesian Criterion 48143.951 (BIC)

The information criteria are displayed in smaller- is-better forms. a. Dependent Variable: math.

Lecture Over Chapter 3 20 Fixed Effects

Yij = g00 + g01*ses_meanj + g02*per4yrcj + g03*publicj + u0j + g10*sesij + g11*ses_mean*sesij + g12*per4yrc*sesij + g13*public*sesij + u1j*sesij + eij

Type III Tests of Fixed Effectsa

Source Numerator df Denominator df F Sig.

Intercept 1 450.229 13555.159 .000 g00

public 1 405.467 .191 .662 g03

ses_mean 1 759.554 69.732 .000 g01

per4yrc 1 439.899 8.072 .005 g02

ses 1 518.400 38.483 .000 g10

ses_mean * ses 1 303.798 .209 .648 g11

per4yrc * ses 1 479.307 .048 .826 g12

public * ses 1 404.838 4.072 .044 g13

a. Dependent Variable: math.

Estimates of Fixed Effectsa

95% Confidence Interval

Parameter Estimate Std. Error df t Sig. Lower Bound Upper Bound

Intercept 56.505254 .485329 450.229 116.427 .000 55.551462 57.459046 g00 public -.119925 .274238 405.467 -.437 .662 -.659031 .419182 g03 ses_mean 2.706473 .324107 759.554 8.351 .000 2.070222 3.342724 g01 per4yrc 1.361887 .479336 439.899 2.841 .005 .419814 2.303960 g02 ses 3.757343 .605681 518.400 6.203 .000 2.567451 4.947235 g10 ses_mean * ses -.136539 .298571 303.798 -.457 .648 -.724069 .450990 g11 per4yrc * ses -.130132 .592163 479.307 -.220 .826 -1.293688 1.033423 g12 public * ses -.668237 .331145 404.838 -2.018 .044 -1.319216 -.017258 g13

a. Dependent Variable: math.

OK, so based on this, among kids equal on the other factors . . . Main effects Kids in schools with higher average ses (ses_mean) have generally higher math scores. Kids in schools with higher % of students going to 4-yr colleges (per4yrc) generally have higher math scores. Kids with higher individual ses have higher math scores. Moderation The slope of the relationship of math scores to ses depends on whether the kid goes to public or private school. Specifically, Y-hat = Other stuff + 3.757*ses - .120*public - 0 .668*public*ses Other stuff -.120*public + (3.757 - 0.668*public)*ses

So, the relationship of math to ses is weaker (shallower, flatter by 0.668) for kids in public schools.

Lecture Over Chapter 3 21 Covariance Parameters

Yij = g00 + g01*ses_meanj + g02*per4yrcj + g03*publicj + u0j + g10*sesij + g11*ses_mean*sesij + g12*per4yrc*sesij + g13*public*sesij + u1j*sesij + eij

Estimates of Covariance Parametersa

95% Confidence Interval

Parameter Estimate Std. Error Wald Z Sig. Lower Bound Upper Bound Residual 62.101323 1.111295 55.882 .000 59.960979 64.318068 Var eij

Intercept [subject = Variance 2.087257 .445340 4.687 .000 1.373924 3.170949 Var u0j schcode] ses [subject = schcode] Variance 1.345094 .566327 2.375 .018 .589346 3.069979 Var u1j a. Dependent Variable: math.

There is still some random variation in slopes from group to group not accounted for by the model.

The full equation is (this repeats the explication of the interaction shown above in excruciating detail)

Yij = g00 + g01*ses_meanj + g02*per4yrcj + g03*publicj + u0j + g10*sesij + g11*ses_mean*sesij + g12*per4yrc*sesij + g13*public*sesij + u1j*sesij + eij

The nonsignificant predictors have been struck through . .

Yij = 56.505254 + 2.706473*ses_meanj + 1.361887*per4yrcj - .119925*publicj + u0j

+ 3.757343*sesij - .136539*ses_meanj*sesij -.130132*per4yrcj*sesij - .668237*publicj*sesij + u1j*sesij + eij.

The value in red is the addition/subtraction to the slope of the relationship of Y to ses due to public.

For private schools, for which public=0, slope of relationship of Y to ses is slightly larger, since -.668237*0 = 0.

For public schools, for which public=1, slope is slightly smaller by a factor of -.668237*1.

The covariances of the random effects . . .

Alas, the text and this example did not address the issue of the covariance (or correlation) of the random intercept residuals with the random slope residuals. This is because the program was unable to achieve a solution when the covariance was estimated. That is a problem.

Lecture Over Chapter 3 22 Graphical description of the significant interaction of public and ses . . .

56.505254 +2.706473*ses_meanj + 1.361887*per4yrcj - .119925*publicj

3.747343*sesij = .668237*publicj*sesij

The equation with nonsignificant terms not involved in interactions deleted is

Predicted Y = 56.505254 + 2.706473*ses_meanj + 1.361887*per4yrcj - .119925*publicj <--- Group Intercept

+ 3.757343*sesij - .668237*publicj*sesij <----- Within-group relationship to ses

Lecture Over Chapter 3 23 The significant variance of u1j . . .

The fact that it is significant means that there is significant random variability in the slopes from school to school, as I’ve tried to illustrate in the above figure. That is variability in the tilts of the lines, not in the positions of the lines.

Note that variability in the tilts of the lines implies that there will also be variability in the intercepts of those lines. This leads to the topic of centering, which is covered next.

Lecture Over Chapter 3 24