DATA CLUSTERING

1. Introduction

Cluster analysis is the process of identifying physical or abstract groups in data. It is considered a branch of pattern recognition and artificial intelligence. Clustering is a technique by which objects are grouped together on the basis of common characteristics, attributes or general behavior. In modern science it is applied in fields ranging from engineering to medicine, astronomy to psychology, economics to sociology, geography to chemistry, and population studies to statistics, among others. There are different types of clustering techniques; which one to use depends on the type of data, the purpose of the analysis and the nature of the algorithms. It is also possible to apply different techniques to the same data set to assess the relevance of each technique. The more general objective of cluster analysis is to partition a set of objects into homogeneous subgroups or into hierarchical subgroups. Clustering techniques are therefore designed to:

a. Identify natural clusters within a mixture of entities.
b. Construct a useful conceptual scheme for classifying objects or entities.
c. Generate hypotheses from a body of raw data by discovering suspected clusters.
d. Test hypothesized classes believed to be present within certain groups of cases.
e. Identify homogeneous subgroups characterized by attribute patterns useful for prediction.

In earlier times, cluster analysis followed a subjective approach, relying on the perception and judgment of the researcher. However, the recent need to classify very large, high-dimensional data sets, together with increasingly strict standards of objectivity, has given rise to automatic classification procedures. A dedicated journal, the Journal of Classification, is now published, and the International Federation of Classification Societies was founded in 1985.

Data clustering is under active development. The research areas contributing to making the technique more efficient include data mining, statistics, machine learning, spatial database technology, biology and marketing.

2. Conceptual problems in cluster analysis

The novice user of cluster analysis soon finds that, even though the intuitive idea of clustering is clear enough, the details of actually carrying out such an analysis entail a host of problems. The foremost difficulty is that cluster analysis is not the name of a single integrated technique with well-defined rules of use; rather, it is an umbrella term for a loose collection of heuristic procedures and diverse elements of applied statistics (probability and mathematical statistics). The actual search for clusters in real data involves a series of intuitive decisions: which elements of cluster analysis to use, what criteria to apply when choosing among techniques, and how relevant those techniques are to the particular purpose for which the clustering is performed.

The role of the analyst is very important in clustering. The analyst should understand the context of the problem thoroughly, because the relevant variables differ from one context to another, and should be clear about the objective of the research. The same data can be analyzed from different angles, so the clustering should be designed to fulfill the goal of the research, and the clustering criteria therefore vary from one study to another. The decisive criteria, based on the variables associated with the data set, should be finalized beforehand. The following criteria should be taken into consideration before carrying out a cluster analysis.

2.1 Choice of data units

For any type of research, the type of data is very important. The data should be collected according to the objective, purpose and intended use of the research. In agricultural engineering research, for example, the objects of the analysis may be crops, types of nutrients or slopes, and the attributes may be yield, nitrogen content or soil type. Mathematically, the data can be arranged as a matrix, with the objects in rows and the attributes in columns, or vice versa.

Another important consideration during data collection is the choice of data units. Different types of data initially come in different units, which have to be converted to a common basis before analysis. Two situations arise in choosing data units. In the first, the data units are a complete representation of the set under analysis. It does not then matter whether the proposed data units would also serve other classifications; the principal consideration is to make sure that they represent the set without omitting important data units.

In the second situation, the data units are a sample taken from a population. Statistically, such a sample would have to represent the attributes of the whole population. In cluster analysis, however, this is not necessarily the case: a non-randomly selected sample may not represent the whole population. Rather, the sample is chosen so that extrapolation from it deviates as little as possible from reality.

The data units cannot be merely arrayed for study like pieces of statuary. They must be consistently described in terms of their characteristics, attributes, class memberships, traits, and other such properties. Collectively, these descriptors are the variables of the problem. It is probably the choice of variables that has the greatest influence on the ultimate results of the cluster analysis.

A randomization procedure is commonly followed when selecting a sample from a data set, meaning that all data units are equally likely to be selected. Under random selection, any groups that exist in the data tend to be represented in the sample in proportion to their relative size in the population. As a consequence, small or rare groups tend to be lost amid the larger clusters, and it is necessary to be very selective about the data units in order to obtain a sizeable sample from such groups. Random sampling may therefore lose important information associated with objects whose contribution to the whole population is small. For this reason, samples for clustering are sometimes taken in a non-random manner, so that small groups are adequately represented.

2.2 Choice of attributes

A careful definition of the attribute domain is of considerable importance in cluster analysis. In most cases, attributes of different kinds are not similar to each other, and objects that are similar in one attribute are not necessarily similar in others. For example, three persons of the same age may not all be of the same sex, and their interest in sports may differ. Therefore there is no single way to categorize entities on the basis of attribute types. The investigator must set up a procedure for selecting a broadly representative sample of attributes according to the interests of his or her research, and the choice of attributes depends on the goal of the research. Before entering into the analysis, one should be sure of the two extreme values (the minimum and the maximum) of each quantitative attribute. Besides the attributes, the objects taken into consideration should represent all the types of characteristics that are of interest to the research. The attributes may be binary, categorical (nominal) or ordinal, or a mixture of these data types.

2.3 Choice of variables and scales

The choice of variables is the most important factor in cluster analysis: if different variables are chosen, the result of the analysis can be entirely different. If the variables take largely the same values for all data units, there will be very little discrimination among them. If the variables differ very much, important features of the clusters may be masked, and the clusters may give misleading results. Thus a variable that discriminates strongly among the data units is not necessarily relevant to the purpose of the research at hand.

Many clustering criteria have been proposed and used. It is good practice to define several criteria and analyze the same data with each, because different criteria reveal different facets of the result, and one result may be more suitable than another for the purpose of the data and of the clustering. The selection of clustering criteria is discussed later.

Another important decision is the number of clusters in the data. Different clustering methods handle this in different ways. Hierarchical clustering methods, for example, give a configuration for every number of clusters from one up to the number of entities. Some methods find the best-fitting structure for a given number of clusters. Other algorithms begin with a chosen number of groups and then alter this number as indicated by certain criteria, with the objective of determining both the number of clusters and their configuration simultaneously. Prior knowledge also helps: when classifying an aerial image, for example, we should know enough about the ground to discriminate the different land uses present, and our choice of the number of clusters can be based on that knowledge. In this way the number of clusters can be chosen usefully.
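The statement that hierarchical methods give a configuration for every number of clusters can be illustrated with a minimal Python sketch. It assumes single linkage with Euclidean distance (one of many possible hierarchical criteria, chosen here only for illustration); the function name `single_linkage_partitions` and the sample points are hypothetical. The sketch records the partition after every merge, from t singleton clusters down to a single cluster.

```python
import math


def single_linkage_partitions(points):
    """Return partitions with len(points), len(points) - 1, ..., 1 clusters.

    Naive single linkage: at each step, merge the two clusters whose
    closest members are nearest in Euclidean distance.
    """
    clusters = [[i] for i in range(len(points))]  # every object starts alone
    partitions = [[list(c) for c in clusters]]

    def gap(c1, c2):
        # Single-linkage distance: smallest distance between any two members.
        return min(math.dist(points[i], points[j]) for i in c1 for j in c2)

    while len(clusters) > 1:
        best = None
        for p in range(len(clusters)):
            for q in range(p + 1, len(clusters)):
                d = gap(clusters[p], clusters[q])
                if best is None or d < best[0]:
                    best = (d, p, q)
        _, p, q = best
        clusters[p] = clusters[p] + clusters[q]  # merge cluster q into p
        del clusters[q]
        partitions.append([sorted(c) for c in clusters])
    return partitions


objects = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0), (10.0, 0.0)]
for parts in single_linkage_partitions(objects):
    print(len(parts), parts)
```

Reading the output from top to bottom gives the configurations for five, four, three, two and one clusters; prior knowledge, as in the aerial-image example, can then guide which level to keep.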

When clustering data units, all the variables must be combined into a single index of similarity. The contribution of each individual variable depends on its scale of variability and on its relationship to the other variables. For example, the choice between millimeters and meters is analogous to the choice between grams and tons, but if a variable measured in millimeters is combined with one measured in tons, their contributions to the index can differ drastically. One of the best ways to deal with this is to transform every variable to zero mean and unit variance, a procedure called normalization of the data values.
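A small Python sketch shows why units matter once variables are combined into a single index. The objects P, Q and R and their (length, mass) values are hypothetical, and the index used is the Euclidean distance. Merely re-expressing length in millimeters instead of meters changes which object is nearest to P.

```python
import math

# Hypothetical objects described by (length, mass); length in meters, mass in tons.
in_meters = {"P": (1.0, 2.0), "Q": (1.2, 2.0), "R": (1.0, 2.5)}
# The same objects with length recorded in millimeters instead.
in_millimeters = {name: (v[0] * 1000.0, v[1]) for name, v in in_meters.items()}


def nearest_to(name, data):
    """Return the object closest to `name` by Euclidean distance."""
    others = [k for k in data if k != name]
    return min(others, key=lambda k: math.dist(data[name], data[k]))


print(nearest_to("P", in_meters))       # Q: lengths differ by 0.2, masses by 0
print(nearest_to("P", in_millimeters))  # R: the length gap is now 200 units
```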

2.4 Scale of measurements for different Attributes

Attributes can be measured on a continuous quantitative scale, such as temperature, or on a discrete qualitative scale, such as sex or age group. No single universal scale can represent all the different types of attributes; researchers use different types of scales according to their interests and the mode of research. There are mainly four kinds of scales in use:

a. Nominal scale
b. Ordinal scale
c. Interval scale
d. Ratio scale

A nominal scale measures an unordered set of classes. The classes may be labeled with numbers, but the numbers merely identify a characteristic of the object. For example, to scale sex, "0" can denote male and "1" female; colors can be scaled in the same way, with 0 for blue, 1 for red, 2 for yellow and so on.

Ordinal scales represent an ordered set of attribute values. For example, suppose the marks of ten students in a particular subject vary from 90 to 50. On an ordinal scale they can be replaced by their ranks: 1 for 90 (first), 2 for 80 (second), 3 for 75 (third) and so on.

Interval and ratio scales are both continuous scales on which differences between values are meaningful. However, the zero point of an interval scale is fixed arbitrarily, whereas on a ratio scale it is inherent. Temperature in degrees Celsius, for example, is an interval scale: a given difference in value corresponds to a definite difference in the heat content of water, but zero degrees is simply the point set, by convention, at the freezing point of water, and water at that temperature still has some heat content. Temperature measured in kelvins, in contrast, starts from absolute zero, a natural rather than arbitrary zero point, and is therefore a ratio scale. In general, zero on a ratio scale has an inherent meaning: zero weight, for instance, means no weight at all.

The choice of scales depends on a trade-off between benefits and costs. The resemblance matrix is constructed using different scales in accordance with the nature of the problem and the suitability of the scales.

2.5 Data matrix, standardization and resemblance coefficients

When a large amount of data is first collected, it is not known what information the data can provide. How qualitative will that information be? Will it fulfill the research goal? How can more information be extracted from the data set? These and many other questions should be answered before the data set is processed further. To pre-process the data, it should be arranged in a particular format: depending on the objects and attributes, the data are arranged in rows or columns. A common convention, used in this text, is to place the objects in columns and the attributes in rows, although the reverse arrangement is also found in the literature.

The goal of arranging the data is to find the similarities among them. In land use classification, for example, the objective could be to group plots of land that are similar to each other with respect to color in the image, texture in the image, or other physical attributes. The objects are then the plots of land, and the attributes are their color and texture in the image.

For a very small data set, the data can be assembled into clusters simply by visual inspection. For a very large data set, however, formal analysis techniques must be applied. To begin with, the data should be standardized, which means that the attributes are converted into unitless quantities.

There are two main reasons for standardizing the data. First, the units chosen for the attributes can significantly affect the similarities among the objects. Second, standardized attributes represent the similarity among the objects more uniformly, because standardization removes the differences in relative weight among the attributes. For example, if the values of the first attribute span a much larger range than those of the second, the first attribute receives a much higher relative weight; after standardization this influence is reduced. To standardize the attributes, a standardizing function must first be chosen.

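As an example, suppose the data matrix contains attributes $i = 1, 2, \ldots, n$ measured on objects $j = 1, 2, \ldots, t$, and let $x_{ij}$ denote the value of attribute $i$ for object $j$. The standardized data matrix has entries $z_{ij}$, where

$$ z_{ij} = \frac{x_{ij} - \bar{x}_i}{s_i} \tag{2.1} $$

with

$$ \bar{x}_i = \frac{1}{t} \sum_{j=1}^{t} x_{ij}, \qquad s_i = \left[ \frac{\sum_{j=1}^{t} \left( x_{ij} - \bar{x}_i \right)^2}{t - 1} \right]^{1/2} \tag{2.1.1} $$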

Thus, to obtain the standardized data matrix, the mean $\bar{x}_i$ of attribute $i$ over all objects is subtracted from each value $x_{ij}$, and the difference is divided by the standard deviation $s_i$. The result is a unitless standardized data matrix. Note that each row of the standardized data matrix has mean $\bar{z}_i = 0$ and standard deviation $s'_i = 1$. Moreover, most values of $z_{ij}$ usually lie within the range −2 to +2.
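Equations 2.1 and 2.1.1 can be sketched in Python using only the standard `statistics` module. Following the text, rows are attributes and columns are objects; the function name `standardize_rows` and the small data matrix are hypothetical, and each row is assumed to be non-constant (otherwise $s_i = 0$ and the division fails).

```python
import statistics


def standardize_rows(x):
    """Standardize each attribute (row) of a data matrix x[i][j].

    Implements z_ij = (x_ij - mean_i) / s_i, where mean_i and s_i are the
    mean and sample standard deviation (divisor t - 1) of row i.
    """
    z = []
    for row in x:
        mean_i = statistics.mean(row)
        s_i = statistics.stdev(row)  # sample standard deviation, divisor t - 1
        z.append([(value - mean_i) / s_i for value in row])
    return z


# Hypothetical data matrix: 2 attributes (rows) measured on 4 objects (columns).
x = [
    [10.0, 20.0, 30.0, 40.0],      # e.g. a length
    [0.001, 0.002, 0.002, 0.003],  # e.g. a mass in very different units
]
z = standardize_rows(x)
for row in z:
    # Each standardized row has mean 0 and standard deviation 1, up to rounding.
    print([round(v, 3) for v in row], statistics.mean(row), statistics.stdev(row))
```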

Some researchers standardize with the mean absolute deviation instead of the standard deviation. Suppose, for example, that one of the $x_{ij}$ has been wrongly recorded, so that it is much too large. The standard deviation is then unduly inflated, because the deviation $x_{ij} - \bar{x}_i$ is squared. The mean absolute deviation does not square the deviations, so the dispersion is less inflated. It is computed as:

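$$ s_i = \frac{1}{t} \left( \left| x_{i1} - \bar{x}_i \right| + \left| x_{i2} - \bar{x}_i \right| + \cdots + \left| x_{it} - \bar{x}_i \right| \right) \tag{2.2} $$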
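The following Python sketch compares the two scale estimates on one hypothetical attribute containing a single wrongly recorded value. It assumes, as in Equation 2.2, that the mean absolute deviation is taken about the mean; the function names are hypothetical. Because the deviations are not squared, the erroneous value keeps a larger standardized score under the mean absolute deviation, so it remains easier to spot.

```python
import statistics


def mean_absolute_deviation(row):
    """Equation 2.2: average absolute deviation from the mean (divisor t)."""
    m = statistics.mean(row)
    return sum(abs(v - m) for v in row) / len(row)


def z_scores(row, scale):
    """Standardize one attribute with a chosen scale estimate."""
    m = statistics.mean(row)
    return [(v - m) / scale for v in row]


# Hypothetical attribute; the last value was wrongly recorded as 50 instead of about 5.
row = [4.0, 5.0, 6.0, 5.0, 50.0]

z_sd = z_scores(row, statistics.stdev(row))
z_mad = z_scores(row, mean_absolute_deviation(row))
print(round(z_sd[-1], 2), round(z_mad[-1], 2))  # the outlier's score under each scale
```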

Whichever equation is used, the main aim is to obtain a robust standardized data matrix. For any kind of objects and attributes, the data matrix can be converted into a standardized data matrix simply by calculating the mean and the standard deviation (or mean absolute deviation) of each attribute. Other methods of normalizing the data for standardization purposes are as follows:

Normalization means dividing by a norm of the vector, for example to make the Euclidean length of the vector equal to one. In the neural network (NN) literature, "normalizing" also often refers to rescaling by the minimum and range of the vector so that all the elements lie between 0 and 1. Normalization can also be done by subtracting a measure of location and dividing by a measure of scale; for example, if the vector contains random values with a Gaussian distribution, subtracting the mean and dividing by the standard deviation gives a "standard normal" variable with mean 0 and standard deviation 1. Note that this rescaling changes only location and scale; it does not make a non-Gaussian variable normally distributed. The equations are:

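$$ \text{Normalized}(X_i) = \frac{X_i - \text{mean of all } X}{\text{standard deviation of all } X} \tag{2.3} $$

$$ \text{Mean} = \frac{1}{N} \sum_{i=1}^{N} X_i \tag{2.3.1} $$

$$ \text{Std} = \left[ \frac{\sum_{i=1}^{N} \left( X_i - \text{Mean} \right)^2}{N - 1} \right]^{1/2} \tag{2.3.2} $$

where $N$ is the number of training cases and $X_i$ is the value of the raw input variable $X$ for the $i$th training case.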
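The two other normalizations mentioned above, rescaling by the minimum and range and dividing by the Euclidean norm, can be sketched in Python as follows (the z-score form of Equation 2.3 is the same computation as Equation 2.1). The function names are hypothetical, and `min_max` assumes the vector is not constant.

```python
import math


def min_max(values):
    """Rescale to [0, 1] using the minimum and the range (range must be > 0)."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def unit_length(values):
    """Divide by the Euclidean norm so that the vector has length one."""
    norm = math.sqrt(sum(v * v for v in values))
    return [v / norm for v in values]


print(min_max([3.0, 4.0, 8.0]))  # [0.0, 0.2, 1.0]
print(unit_length([3.0, 4.0]))   # [0.6, 0.8]
```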

When the data are volatile (as indicated by the standard deviation), intermediate functions such as ratios can reduce the volatility (Stein, 1993). An example of the use of a ratio for normalization is:

$$ \text{NormalizedStdForDataSeries}(X_i) = \frac{X_i - \text{mean(all)}}{\text{Std(all)}} \tag{2.4} $$

After the data have been standardized, the next step is to compute resemblance coefficients. A resemblance coefficient measures the degree of similarity between a pair of objects. It is a mathematical formula whose value is computed by entering, for a given pair of objects, the values from their columns in the data matrix or standardized data matrix; the resulting value shows the degree of similarity between the objects. There are different kinds of resemblance coefficients, which are described in the following sections.

2.6 Other problems associated with data clustering

Noise is common in real-world data: such data often contain outliers and missing, unknown or erroneous values. Because clustering algorithms are sensitive to such data, they can produce erroneous clusterings, so the noise in the data should be reduced as far as possible.

Some clustering algorithms are sensitive to the order of the data: if the data are rearranged, the clustering result can change significantly. It is therefore important to develop clustering algorithms that are insensitive to the order of the data. In addition, high-dimensional and constraint-based data require robust clustering approaches.
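Order sensitivity is easy to demonstrate with the single-pass "leader" algorithm, used here only as an illustrative example of an order-dependent method (it is not otherwise discussed in this text). In this hypothetical sketch, each point joins the first existing leader within a distance threshold and otherwise starts a new cluster; presenting the same three points in a different order changes the number of clusters.

```python
import math


def leader_clustering(points, threshold):
    """Single-pass leader clustering; returns (leaders, labels)."""
    leaders, labels = [], []
    for p in points:
        for k, lead in enumerate(leaders):
            if math.dist(p, lead) <= threshold:
                labels.append(k)  # join the first sufficiently close leader
                break
        else:
            leaders.append(p)  # no leader close enough: start a new cluster
            labels.append(len(leaders) - 1)
    return leaders, labels


order_1 = [(0.0,), (1.0,), (2.0,)]
order_2 = [(1.0,), (0.0,), (2.0,)]  # same points, different order
print(len(leader_clustering(order_1, 1.0)[0]))  # 2 clusters
print(len(leader_clustering(order_2, 1.0)[0]))  # 1 cluster
```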

3. Resemblance coefficient for quantitative attributes

Resemblance coefficients measure the degree of similarity among the data and are used to establish homogeneous clusters in the data set. The following resemblance coefficients are used for quantitative attributes measured on interval and/or ratio scales; they can also be applied to ordinal scales. A brief description of each coefficient is given below.

3.1 Euclidean and Average Euclidean Distance Coefficient, ejk and djk

These two distance coefficients are closely related. The Euclidean distance coefficient measures the nearness or farness of two objects in terms of their attributes, and it is mathematically simple: it is the square root of the sum of the squared differences between the attribute values. Suppose $x_{ij}$ is the value of the $i$th attribute measured on the $j$th object, and $x_{ik}$ the value of the $i$th attribute measured on the $k$th object. For two attributes, the Euclidean distance is:

$$ e_{jk} = \left[ \sum_{i=1}^{2} \left( x_{ij} - x_{ik} \right)^2 \right]^{1/2} \tag{3.1} $$

Equation 3.1 gives the Euclidean distance between objects $j$ and $k$ for two attributes ($n = 2$). The equation extends to three attributes and, in general, to $n$ attributes:

$$ e_{jk} = \left[ \sum_{i=1}^{n} \left( x_{ij} - x_{ik} \right)^2 \right]^{1/2} \tag{3.2} $$

Thus the square root of the sum of the squared differences over the $n$ attributes gives the Euclidean distance coefficient for objects $j$ and $k$. The range of $e_{jk}$ is from 0 to infinity. The average Euclidean distance $d_{jk}$ is like $e_{jk}$, except that the sum of squared differences is divided by $n$:

$$ d_{jk} = \left[ \frac{\sum_{i=1}^{n} \left( x_{ij} - x_{ik} \right)^2}{n} \right]^{1/2} \tag{3.3} $$

The range of $d_{jk}$ is also from 0 to infinity. Comparing the two definitions shows that

$$ d_{jk} = \frac{e_{jk}}{n^{1/2}} \tag{3.4} $$

When a cluster analysis is performed with the Euclidean and with the average Euclidean distance coefficient, the only numerical difference between the two is the constant factor $n^{1/2}$, which has no significant effect on the clustering. The average Euclidean distance has an advantage, however, when some data are missing: it can be computed over the attributes available for both objects, with $n$ taken as the number of such attributes.
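Equations 3.2 to 3.4 translate directly into Python. This sketch uses hypothetical four-attribute profiles and hypothetical function names, and checks numerically that $d_{jk} = e_{jk}/n^{1/2}$.

```python
import math


def euclidean(xj, xk):
    """e_jk (Equation 3.2): square root of the summed squared differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(xj, xk)))


def average_euclidean(xj, xk):
    """d_jk (Equation 3.3): the squared differences are averaged over n."""
    n = len(xj)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(xj, xk)) / n)


xj = [1.0, 2.0, 3.0, 4.0]
xk = [2.0, 4.0, 3.0, 0.0]
e = euclidean(xj, xk)          # sqrt(21)
d = average_euclidean(xj, xk)  # sqrt(21 / 4)
print(e, d, math.isclose(d, e / math.sqrt(len(xj))))  # Equation 3.4 holds
```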

3.2 The coefficient of shape difference, zjk

Another coefficient for quantitative attributes is the coefficient of shape difference, $z_{jk}$. It is computed as the square root of $n/(n-1)$ times the difference between the squares of the average Euclidean distance $d_{jk}$ and of $q_{jk}$, where

$$ q_{jk}^2 = \frac{1}{n^2} \left( \sum_{i=1}^{n} x_{ij} - \sum_{i=1}^{n} x_{ik} \right)^2 \tag{3.5} $$

Thus the coefficient of shape difference is:

$$ z_{jk} = \left[ \frac{n}{n-1} \left( d_{jk}^2 - q_{jk}^2 \right) \right]^{1/2} \tag{3.6} $$

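Since $q_{jk}$ is the absolute difference between the average attribute values of the two objects, the shape difference coefficient removes the difference in average level from the average Euclidean distance and so measures how the two profiles differ in shape; two profiles that differ only by a constant shift have $z_{jk} = 0$. The range of $z_{jk}$ is also from zero to infinity.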
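A Python sketch of Equations 3.5 and 3.6 follows, with hypothetical profiles and a hypothetical function name. Algebraically, $d_{jk}^2 - q_{jk}^2$ is the variance (divisor n) of the differences $x_{ij} - x_{ik}$, so $z_{jk}$ equals their sample standard deviation; the comparison with `statistics.stdev` relies on that identity. The `max(0.0, ...)` guard is an added assumption that only protects against tiny negative values caused by rounding.

```python
import math
import statistics


def shape_difference(xj, xk):
    """z_jk from Equations 3.5 and 3.6."""
    n = len(xj)
    d2 = sum((a - b) ** 2 for a, b in zip(xj, xk)) / n  # d_jk squared
    q2 = (sum(xj) - sum(xk)) ** 2 / n ** 2              # q_jk squared
    return math.sqrt(max(0.0, n / (n - 1) * (d2 - q2)))


xj = [1.0, 2.0, 3.0, 4.0]
shifted = [11.0, 12.0, 13.0, 14.0]  # same shape, shifted by 10
other = [2.0, 1.0, 5.0, 4.0]

print(shape_difference(xj, shifted))  # 0.0: a pure shift has no shape difference
diffs = [a - b for a, b in zip(xj, other)]
print(shape_difference(xj, other), statistics.stdev(diffs))  # agree up to rounding
```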

3.3 The cosine coefficient or Congruency coefficient cjk

This coefficient can be used as a similarity or a dissimilarity measure and is frequently used to assess the similarity between profiles. Its range is from −1 to +1: +1 means the objects are maximally similar and −1 means they are maximally dissimilar. It is written as:

$$ c_{jk} = \frac{\sum_{i=1}^{n} x_{ij} x_{ik}}{\left( \sum_{i=1}^{n} x_{ij}^2 \right)^{1/2} \left( \sum_{i=1}^{n} x_{ik}^2 \right)^{1/2}}, \qquad -1 \le c_{jk} \le 1 \tag{3.7} $$

Although it is difficult to visualize the attributes of two objects when there are more than three of them, Equation 3.7 holds for any number $n$ of attributes. The value of $c_{jk}$ indicates the nearness or farness of the two objects, and computing it for every pair among $m$ objects provides the basis for clustering them. The coefficient equalizes scatter because it normalizes both profile vectors, that is, it scales each of them to unit length.
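Equation 3.7 in Python, as a sketch with hypothetical profiles and a hypothetical function name: a profile that is a positive multiple of another gives $c_{jk} = 1$, its negation gives $c_{jk} = -1$, and an orthogonal profile gives 0, illustrating that only the direction of each profile vector matters once both are scaled to unit length.

```python
import math


def cosine(xj, xk):
    """c_jk (Equation 3.7): cross-product sum over the product of vector lengths."""
    num = sum(a * b for a, b in zip(xj, xk))
    den = math.sqrt(sum(a * a for a in xj)) * math.sqrt(sum(b * b for b in xk))
    return num / den


xj = [1.0, 2.0, 3.0]
print(cosine(xj, [2.0, 4.0, 6.0]))     # same direction, approximately +1
print(cosine(xj, [-1.0, -2.0, -3.0]))  # opposite direction, approximately -1
print(cosine(xj, [3.0, 0.0, -1.0]))    # orthogonal profiles, 0
```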

3.4 The correlation coefficient rjk

The correlation coefficient also ranges from −1 to +1, where +1 signifies maximum similarity between the objects and −1 maximum dissimilarity. It is also called the Pearson product-moment correlation coefficient. In some circumstances the same formula is used to compute the cophenetic correlation coefficient, but the two are applied to different quantities. The correlation coefficient $r_{jk}$ is calculated directly between two objects over their $n$ attributes. The cophenetic correlation coefficient, by contrast, is computed after a hierarchical clustering: it compares the resemblance coefficients between the objects with the values implied by the resulting tree. For example, suppose objects A, B and C have attributes X, Y and Z. The correlation coefficient is computed directly from the values of X, Y and Z for the pairs A and B, A and C, and B and C. For the cophenetic coefficient, we first compute, for instance, the Euclidean distance coefficient for the pairs A and B, A and C, and B and C, and then cluster the objects accordingly. If A and B are more similar to each other than either is to C, A and B are merged first, and the cophenetic value between B and C is then equal to that between A and C.

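The mathematical expression for the correlation coefficient is:

$$ r_{jk} = \frac{\sum_{i=1}^{n} x_{ij} x_{ik} - \frac{1}{n} \left( \sum_{i=1}^{n} x_{ij} \right) \left( \sum_{i=1}^{n} x_{ik} \right)}{\left\{ \left[ \sum_{i=1}^{n} x_{ij}^2 - \frac{1}{n} \left( \sum_{i=1}^{n} x_{ij} \right)^2 \right] \left[ \sum_{i=1}^{n} x_{ik}^2 - \frac{1}{n} \left( \sum_{i=1}^{n} x_{ik} \right)^2 \right] \right\}^{1/2}} \tag{3.8} $$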

Equation 3.8 compares objects $j$ and $k$ over their $n$ attributes; although the comparison is hard to visualize physically, the equation quantifies their similarity. The denominator measures the degree of scatter in the attribute values. Because the attributes may have different units, it is advisable to standardize them to zero mean and unit variance before comparing objects. A substantial amount of research has been conducted using this coefficient.
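The raw-sum form of Equation 3.8 can be checked against the equivalent mean-centered form in Python; the centered form is simply the cosine coefficient (Equation 3.7) applied to profiles from which their own means have been subtracted. Function names and data are hypothetical.

```python
import math


def pearson_eq_3_8(xj, xk):
    """r_jk computed with the raw sums of Equation 3.8."""
    n = len(xj)
    sj, sk = sum(xj), sum(xk)
    num = sum(a * b for a, b in zip(xj, xk)) - sj * sk / n
    ssj = sum(a * a for a in xj) - sj ** 2 / n
    ssk = sum(b * b for b in xk) - sk ** 2 / n
    return num / math.sqrt(ssj * ssk)


def pearson_centered(xj, xk):
    """Same coefficient: cosine of the mean-centered profiles."""
    mj, mk = sum(xj) / len(xj), sum(xk) / len(xk)
    dj = [a - mj for a in xj]
    dk = [b - mk for b in xk]
    num = sum(a * b for a, b in zip(dj, dk))
    return num / math.sqrt(sum(a * a for a in dj) * sum(b * b for b in dk))


xj = [1.0, 2.0, 3.0, 4.0]
xk = [2.0, 4.0, 5.0, 9.0]
print(pearson_eq_3_8(xj, xk), pearson_centered(xj, xk))
print(pearson_eq_3_8(xj, [3.0, 5.0, 7.0, 9.0]))  # exact linear relation: 1.0
```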

3.5 The Canberra metric coefficient ajk

The Canberra metric is another resemblance coefficient. For non-negative data its range is from 0 to 1, where 0 indicates maximum similarity and 1 maximum dissimilarity. It is based on the absolute differences between the attribute values of the two objects:

$$ a_{jk} = \frac{1}{n} \sum_{i=1}^{n} \frac{\left| x_{ij} - x_{ik} \right|}{x_{ij} + x_{ik}} \tag{3.9} $$

Thus the coefficient averages, over the $n$ attributes, the absolute differences, each divided by the corresponding sum.

3.6 Bray-Curtis Coefficient, bjk

This is another dissimilarity coefficient, whose value ranges from 0 to 1, with 0 meaning maximum similarity and 1 maximum dissimilarity. It is similar to the Canberra metric, except that the absolute differences and the sums are each added up over all attributes before the division, and there is no division by the number of attributes:

$$ b_{jk} = \frac{\sum_{i=1}^{n} \left| x_{ij} - x_{ik} \right|}{\sum_{i=1}^{n} \left( x_{ij} + x_{ik} \right)} \tag{3.10} $$

The Canberra and Bray-Curtis coefficients have a limitation regarding the values in the data set: they cannot be applied to negative values. For this reason the standardized data matrix, which contains negative values, cannot be used to calculate these coefficients. The difference between $a_{jk}$ and $b_{jk}$ is that $a_{jk}$ uses a sum of normalized terms, so that each attribute contributes equally to the overall resemblance, whereas $b_{jk}$ weights the attributes unequally.
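A Python sketch of Equations 3.9 and 3.10 with hypothetical non-negative profiles and hypothetical function names. Two choices here are assumptions not stated in the text: negative values raise an error, reflecting the restriction above, and in the Canberra metric an attribute for which both values are 0 contributes 0 (its term would otherwise be 0/0). The example shows the unequal weighting: the large-valued third attribute dominates $b_{jk}$ but not $a_{jk}$.

```python
def _check_non_negative(*profiles):
    for profile in profiles:
        if any(v < 0 for v in profile):
            raise ValueError("Canberra and Bray-Curtis need non-negative data")


def canberra(xj, xk):
    """a_jk (Equation 3.9): mean of |x_ij - x_ik| / (x_ij + x_ik)."""
    _check_non_negative(xj, xk)
    total = 0.0
    for a, b in zip(xj, xk):
        if a + b > 0:  # assumption: a 0/0 term (both values 0) contributes 0
            total += abs(a - b) / (a + b)
    return total / len(xj)


def bray_curtis(xj, xk):
    """b_jk (Equation 3.10): summed differences over summed totals."""
    _check_non_negative(xj, xk)
    num = sum(abs(a - b) for a, b in zip(xj, xk))
    den = sum(a + b for a, b in zip(xj, xk))
    return num / den


xj = [1.0, 10.0, 100.0]
xk = [3.0, 10.0, 90.0]
print(canberra(xj, xk))     # (2/4 + 0/20 + 10/190) / 3
print(bray_curtis(xj, xk))  # (2 + 0 + 10) / (4 + 20 + 190)
```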

3.7 Rank correlation coefficient Rjk

The rank correlation coefficient is sometimes also used in cluster analysis. It measures the similarity of objects on the basis of rankings of their attribute values from the highest to the lowest. Spearman was the first to develop such a rank correlation coefficient, which can be written as:

$$ R_{jk} = 1 - \frac{6 \sum_{i=1}^{n} \left( x_{ij} - x_{ik} \right)^2}{n \left( n^2 - 1 \right)} \tag{3.11} $$

where the $x$ values here are ranks.

Thus, for objects $j$ and $k$, the rank correlation is obtained by applying Equation 3.11 to the ranks of their $n$ attributes.
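Equation 3.11 presupposes that the values are ranks. This Python sketch (hypothetical function names and data) first converts each profile to ranks and then applies the formula; it assumes there are no tied values, since ties need an adjusted ranking that is not covered here.

```python
def ranks(values):
    """Rank values from 1 (smallest) to n; assumes no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0] * len(values)
    for rank, i in enumerate(order, start=1):
        r[i] = rank
    return r


def spearman(xj, xk):
    """R_jk (Equation 3.11) computed on the ranks of the two profiles."""
    rj, rk = ranks(xj), ranks(xk)
    n = len(rj)
    s = sum((a - b) ** 2 for a, b in zip(rj, rk))
    return 1 - 6 * s / (n * (n ** 2 - 1))


print(ranks([30.0, 10.0, 20.0]))                     # [3, 1, 2]
print(spearman([10, 20, 30, 40], [1, 3, 2, 4]))      # 1 - 6*2/60
print(spearman([10, 20, 30, 40], [40, 30, 20, 10]))  # completely reversed: -1.0
```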

4. Resemblance coefficients for qualitative attributes or measures of associations among binary variables

Binary variables have only two possible outcomes, for example yes/no, true/false or presence/absence. In the data matrix the two outcomes are denoted by the values "1" and "0". There is no fixed rule about which outcome is denoted by 1 and which by 0, but the coding must be consistent and must have the same meaning throughout. A two-way association table is central to measuring the similarity and dissimilarity among such data: when computing the similarity s(j, k) and dissimilarity d(j, k) between two objects j and k, a 2-by-2 contingency or association table can be constructed as shown in Table 1.

Table 1: Association table for objects j and k

| | Object k = 1 | Object k = 0 | Total |
|---|---|---|---|
| Object j = 1 | a | b | a+b |
| Object j = 0 | c | d | c+d |
| Total | a+c | b+d | p |

In Table 1, "a" is the number of variables for which both objects j and k have the value 1. Analogously, "b" is the number of variables f for which $x_{jf} = 1$ and $x_{kf} = 0$, and so on. Obviously a + b + c + d = p, the total number of variables. When values are missing, p is replaced by the number of variables available for both j and k. It is also possible to compute a weighted sum, in which case p is replaced by the sum of the weights. Different coefficients have been derived from different combinations of these counts; the most widely used are described below.
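Counting the four cells of Table 1 is straightforward. This Python sketch (hypothetical function name and profiles) also follows the text's rule for missing values by skipping any variable that is missing, represented here by `None`, for either object, so that a + b + c + d equals the number of variables available for both.

```python
def association_counts(xj, xk):
    """Return (a, b, c, d) of Table 1 for two 0/1 profiles.

    Variables missing (None) for either object are skipped, so the
    returned counts sum to the number of variables available for both.
    """
    a = b = c = d = 0
    for u, v in zip(xj, xk):
        if u is None or v is None:
            continue
        if (u, v) == (1, 1):
            a += 1
        elif (u, v) == (1, 0):
            b += 1
        elif (u, v) == (0, 1):
            c += 1
        elif (u, v) == (0, 0):
            d += 1
        else:
            raise ValueError("profiles must contain only 0, 1 or None")
    return a, b, c, d


print(association_counts([1, 1, 0, 1, 0, 0], [1, 0, 0, 1, 1, 0]))  # (2, 1, 1, 2)
print(association_counts([1, None, 0], [1, 1, 1]))                 # (1, 0, 1, 0)
```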

4.1 Jaccard Coefficient

According to Jaccard, the similarity coefficient is:

$$ c_{jk} = \frac{a}{a + b + c} \tag{4.1} $$

The Jaccard coefficient ranges from 0 to 1. It indicates maximum similarity, $c_{jk} = 1.0$, when the two objects have identical values, that is, when b = c = 0 (with a > 0). Conversely, $c_{jk} = 0$ indicates maximum dissimilarity. Because it ignores joint absences d, this coefficient is sensitive to the direction of coding. Instead of Equation 4.1, the simple matching coefficient, Equation 4.2, can be used when the weights are symmetric, that is, when joint absences are as meaningful as joint presences:

$$ c_{jk} = \frac{a + d}{a + b + c + d} \tag{4.2} $$

The Jaccard coefficient has been used mostly in taxonomic research.
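Given the counts of Table 1, Equations 4.1 and 4.2 are one-liners. The sketch below (hypothetical function names and counts) shows the difference in sensitivity to coding direction: reversing the 0/1 coding swaps a with d and b with c, which changes the Jaccard coefficient but leaves the simple matching coefficient unchanged. Equation 4.1 is undefined when a + b + c = 0.

```python
def jaccard(a, b, c, d):
    """Equation 4.1: joint absences d are ignored."""
    return a / (a + b + c)


def simple_matching(a, b, c, d):
    """Equation 4.2: joint absences d count as matches."""
    return (a + d) / (a + b + c + d)


counts = (1, 1, 1, 3)   # hypothetical (a, b, c, d)
flipped = (3, 1, 1, 1)  # the same data with 0 and 1 swapped
print(jaccard(*counts), jaccard(*flipped))                  # 1/3 versus 0.6
print(simple_matching(*counts), simple_matching(*flipped))  # identical
```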

4.2 Yule coefficient

The similarity Yule coefficient is simply written as

$$ c_{jk} = \frac{ad - bc}{ad + bc}, \qquad -1.0 \le c_{jk} \le 1.0 \tag{4.3} $$

Maximum similarity, $c_{jk} = 1.0$, occurs when either b = 0 or c = 0, and maximum dissimilarity, $c_{jk} = -1.0$, occurs when either a = 0 or d = 0. This coefficient has mostly been used in psychological research.

4.3 Hamann Coefficient

The similarity coefficient is expressed as

$$ c_{jk} = \frac{(a + d) - (b + c)}{(a + d) + (b + c)}, \qquad -1.0 \le c_{jk} \le 1.0 \tag{4.4} $$

When the value of cjk is equal to 1 it is called maximum similarity and when it is –1 it is called maximum dissimilarity. Maximum similarity occurs when b = c = 0. And maximum dissimilarity occurs when a = d = 0.
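Equations 4.3 and 4.4 in Python, as a sketch with hypothetical function names and counts. The first call illustrates a property stated above: the Yule coefficient reaches 1.0 as soon as b or c is zero, even though the two objects are not identical (here c = 2). The Hamann coefficient reaches 1.0 only when b = c = 0. Equation 4.3 is undefined when ad + bc = 0.

```python
def yule(a, b, c, d):
    """Equation 4.3: (ad - bc) / (ad + bc)."""
    return (a * d - b * c) / (a * d + b * c)


def hamann(a, b, c, d):
    """Equation 4.4: (matches - mismatches) / total."""
    return ((a + d) - (b + c)) / ((a + d) + (b + c))


print(yule(3, 0, 2, 1))    # 1.0, although c = 2
print(hamann(3, 0, 2, 1))  # 0.333..., the mismatches still count
print(hamann(3, 0, 0, 1))  # 1.0 when b = c = 0
print(hamann(0, 2, 2, 0))  # -1.0 when a = d = 0
```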

4.4 Sorenson Coefficient

The Sorenson similarity coefficient is written as

$$ c_{jk} = \frac{2a}{2a + b + c}, \qquad 0.0 \le c_{jk} \le 1.0 \tag{4.5} $$

Maximum similarity occurs when $c_{jk}$ equals 1 and maximum dissimilarity when $c_{jk}$ equals 0. Because it doubles a and ignores d, the Sorenson coefficient equals one minus the Bray-Curtis coefficient (Equation 3.10) when the data are restricted to 0 and 1. The Sorenson coefficient is also called the Czekanowski coefficient and the Dice coefficient, as a consequence of independent reinvention.

4.5 Rogers and Tanimoto coefficient

The similarity coefficient is written as

$$ c_{jk} = \frac{a + d}{a + 2(b + c) + d}, \qquad 0.0 \le c_{jk} \le 1.0 \tag{4.6} $$

Perfect similarity occurs when cjk equals 1 and maximum dissimilarity occurs when cjk equals 0.

4.6 Sokal and Sneath coefficient

This coefficient is written as

$$ c_{jk} = \frac{2(a + d)}{2(a + d) + b + c}, \qquad 0.0 \le c_{jk} \le 1.0 \tag{4.7} $$

Perfect similarity and perfect dissimilarity occur when $c_{jk}$ is 1 and 0 respectively. Perfect similarity requires b = c = 0, and perfect dissimilarity requires a = d = 0, which makes $c_{jk}$ equal to 0.0.
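Equations 4.5 to 4.7 can be sketched in Python as follows, with hypothetical profiles and function names. The last line checks the relation for 0/1 data between the Sorenson coefficient and the Bray-Curtis coefficient of Equation 3.10: the former equals one minus the latter.

```python
def sorenson(a, b, c, d):
    """Equation 4.5: joint presences counted twice, d ignored."""
    return 2 * a / (2 * a + b + c)


def rogers_tanimoto(a, b, c, d):
    """Equation 4.6: mismatches counted twice."""
    return (a + d) / (a + 2 * (b + c) + d)


def sokal_sneath(a, b, c, d):
    """Equation 4.7: matches counted twice."""
    return 2 * (a + d) / (2 * (a + d) + b + c)


j = [1, 1, 0, 1, 0, 0]
k = [1, 0, 0, 1, 1, 0]
pairs = list(zip(j, k))
a, b, c, d = (pairs.count(cell) for cell in [(1, 1), (1, 0), (0, 1), (0, 0)])

print(sorenson(a, b, c, d), rogers_tanimoto(a, b, c, d), sokal_sneath(a, b, c, d))

bray_curtis = sum(abs(u - v) for u, v in pairs) / sum(u + v for u, v in pairs)
print(sorenson(a, b, c, d), 1 - bray_curtis)  # agree up to rounding
```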

4.7 Russell and Rao coefficient

The Russell and Rao similarity coefficient can be written as

$$ c_{jk} = \frac{a}{a + b + c + d}, \qquad 0.0 \le c_{jk} \le 1.0 \tag{4.8} $$

Perfect similarity occurs when $c_{jk}$ equals 1 and perfect dissimilarity when $c_{jk}$ equals 0. Perfect similarity requires b = c = d = 0, and perfect dissimilarity requires a = 0. In other words, perfect similarity occurs only when both objects possess every attribute. The coefficient therefore resembles the Jaccard coefficient, except that the joint absences d are included in the denominator.

4.8 Baroni-Urbani and Buser coefficient

The similarity coefficient is expressed as

$$ c_{jk} = \frac{a + (ad)^{1/2}}{a + b + c + (ad)^{1/2}}, \qquad 0.0 \le c_{jk} \le 1.0 \tag{4.9} $$

Maximum similarity occurs when $c_{jk}$ equals 1.0 and maximum dissimilarity when $c_{jk}$ equals 0.0. The inventors of this coefficient claim that its distributional properties are superior.
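A Python sketch of Equations 4.8 and 4.9 with hypothetical function names and counts. For two identical profiles that share both presences and absences (a = d = 2, b = c = 0), Russell and Rao gives only 0.5 because it requires every attribute to be present, whereas Baroni-Urbani and Buser gives 1.0.

```python
import math


def russell_rao(a, b, c, d):
    """Equation 4.8: joint presences over all variables."""
    return a / (a + b + c + d)


def baroni_urbani_buser(a, b, c, d):
    """Equation 4.9: (a + sqrt(ad)) / (a + b + c + sqrt(ad))."""
    root = math.sqrt(a * d)
    return (a + root) / (a + b + c + root)


print(russell_rao(2, 0, 0, 2), baroni_urbani_buser(2, 0, 0, 2))  # 0.5 1.0
print(russell_rao(4, 0, 0, 0))                                    # 1.0
print(baroni_urbani_buser(0, 3, 3, 0))                            # 0.0 when a = 0
```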

4.9 Sokal Binary Distance coefficient

The dissimilarity coefficient developed by Sokal is expressed as

$$ c_{jk} = \left( \frac{b + c}{a + b + c + d} \right)^{1/2}, \qquad 0.0 \le c_{jk} \le 1.0 \tag{4.10} $$

The minimum value, $c_{jk} = 0.0$, indicates perfect similarity, and the maximum value, $c_{jk} = 1.0$, indicates perfect dissimilarity. Perfect similarity requires b = c = 0.0. This coefficient is equivalent to the average Euclidean distance coefficient when the data are coded 0 and 1.

4.10 Ochiai coefficient

The Ochiai similarity coefficient is written as

$$ c_{jk} = \frac{a}{\left[ (a + b)(a + c) \right]^{1/2}}, \qquad 0.0 \le c_{jk} \le 1.0 \tag{4.11} $$

The maximum value, $c_{jk} = 1.0$, indicates perfect similarity and occurs when b = c = 0.0; maximum dissimilarity occurs when a = 0.0. This coefficient is identical to the cosine coefficient when the data are coded 0 and 1.

4.11 Phi coefficient

Sometimes the similarity coefficient is denoted by Greek symbol phi, which is mathematically expressed as

$$ c_{jk} = \frac{ad - bc}{\left[ (a + b)(a + c)(b + d)(c + d) \right]^{1/2}}, \qquad -1.0 \le c_{jk} \le 1.0 \tag{4.12} $$

Maximum similarity occurs when b = c = 0.0 and maximum dissimilarity when a = d = 0.0. This coefficient is equivalent to the correlation coefficient $r_{jk}$ when the data are coded 0 and 1.
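The equivalences stated for Equations 4.10 to 4.12 can be checked numerically. This Python sketch, with hypothetical 0/1 profiles and function names, computes each binary coefficient from the counts of Table 1 and compares it with the corresponding coefficient for quantitative attributes applied directly to the 0/1 values: average Euclidean distance (Equation 3.3), cosine (Equation 3.7) and Pearson correlation (in its mean-centered form). It assumes the square-root form of the Sokal binary distance.

```python
import math


def sokal_binary_distance(a, b, c, d):
    """Equation 4.10, square-root form."""
    return math.sqrt((b + c) / (a + b + c + d))


def ochiai(a, b, c, d):
    """Equation 4.11."""
    return a / math.sqrt((a + b) * (a + c))


def phi(a, b, c, d):
    """Equation 4.12."""
    return (a * d - b * c) / math.sqrt((a + b) * (a + c) * (b + d) * (c + d))


j = [1, 1, 0, 1, 0, 0]
k = [1, 0, 0, 1, 1, 0]
pairs = list(zip(j, k))
a, b, c, d = (pairs.count(cell) for cell in [(1, 1), (1, 0), (0, 1), (0, 0)])
n = len(j)

avg_euclid = math.sqrt(sum((u - v) ** 2 for u, v in pairs) / n)
cosine = sum(u * v for u, v in pairs) / math.sqrt(sum(u * u for u in j) * sum(v * v for v in k))
mj, mk = sum(j) / n, sum(k) / n
pearson = sum((u - mj) * (v - mk) for u, v in pairs) / math.sqrt(
    sum((u - mj) ** 2 for u in j) * sum((v - mk) ** 2 for v in k)
)

print(sokal_binary_distance(a, b, c, d), avg_euclid)  # equal
print(ochiai(a, b, c, d), cosine)                     # equal
print(phi(a, b, c, d), pearson)                       # equal up to rounding
```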

4.12 Kendall’s tau coefficient

This coefficient is a special resemblance coefficient for ordinal-scaled attributes. Mathematically it is written as

$$ \tau_{jk} = \frac{s_{jk}}{(0.5)(p)(p - 1)} \qquad (4.13) $$

where $s_{jk}$ is the sum, over all pairs of attributes (rows), of +1 for each pair that objects j and k rank in the same order and -1 for each pair they rank in opposite order, and p is the number of rows (attributes) in the data matrix. Kendall’s tau is one of the rank correlation coefficients developed in statistics; it can be used as a resemblance coefficient when the attributes are ordinal-scaled. There are, however, some restrictions on the direction in which the ranks can run.
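A minimal sketch of equation (4.13), assuming the definition of $s_{jk}$ given above (concordant minus discordant attribute pairs, with ties contributing 0, i.e. the "tau-a" form). The function name is hypothetical; x and y hold the ordinal values of the p attributes for objects j and k.

```python
from itertools import combinations


def kendall_tau(x, y):
    """Kendall's tau (tau-a), eq. (4.13), between the ranks of two objects.

    s counts +1 for each attribute pair ranked in the same order by both
    objects, -1 for each pair in opposite order, and 0 for ties.
    """
    p = len(x)
    s = 0
    for i, h in combinations(range(p), 2):
        product = (x[i] - x[h]) * (y[i] - y[h])
        if product > 0:
            s += 1
        elif product < 0:
            s -= 1
    return s / (0.5 * p * (p - 1))


print(kendall_tau([1, 2, 3, 4], [1, 3, 2, 4]))  # 0.666... (s = 4 over 6 pairs)
```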

5. Comparative studies of qualitative resemblance coefficients

The different coefficients are compared in the following tables.

Table 2: Comparison among different resemblance coefficients

| Property | Jaccard coefficient | Simple matching coefficient | Sorenson coefficient |
|---|---|---|---|
| Usage | Very widely used in almost all research | Also widely used in almost all research | Also used for various research purposes |
| Formula | Proportion is meaningful | The only difference from the Jaccard coefficient is the inclusion of "d"; otherwise there is no significant difference from the Jaccard coefficient | The only difference from the Jaccard coefficient is that "a" is multiplied by 2, which gives it twice the normal weight; it seems less straightforward than the Jaccard coefficient |
| Remarks | Irrespective of the formula, the final result does not differ much from the other coefficients | Unlike the Jaccard coefficient, it is not sensitive to the direction of coding | It is sensitive to the direction of coding |
| Range | 0 to 1: "1" means maximum similarity and "0" maximum dissimilarity | 0 to 1: "1" means maximum similarity and "0" maximum dissimilarity | 0 to 1: "1" means maximum similarity and "0" maximum dissimilarity |
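The direction-of-coding entries in Table 2 can be checked by recoding every attribute (1 becomes 0 and 0 becomes 1), which swaps a with d and b with c, and recomputing. This sketch assumes the standard forms Jaccard = a/(a + b + c), simple matching = (a + d)/(a + b + c + d) and Sorenson = 2a/(2a + b + c); the function names are hypothetical.

```python
def match_counts(x, y):
    """(a, b, c, d) for two 0/1 vectors (a = both 1, d = both 0)."""
    pairs = list(zip(x, y))
    return (pairs.count((1, 1)), pairs.count((1, 0)),
            pairs.count((0, 1)), pairs.count((0, 0)))


def jaccard(x, y):
    a, b, c, _ = match_counts(x, y)
    return a / (a + b + c)


def simple_matching(x, y):
    a, b, c, d = match_counts(x, y)
    return (a + d) / (a + b + c + d)


def sorenson(x, y):
    a, b, c, _ = match_counts(x, y)
    return 2 * a / (2 * a + b + c)


def recode(v):
    """Reverse the direction of coding: 1 becomes 0 and 0 becomes 1."""
    return [1 - t for t in v]


x = [1, 1, 0, 0, 0, 0]
y = [1, 0, 0, 0, 1, 0]
for f in (jaccard, simple_matching, sorenson):
    print(f.__name__, f(x, y), f(recode(x), recode(y)))
# jaccard: 0.333... then 0.6 (changes with coding direction)
# simple_matching: 0.666... both times (unchanged)
# sorenson: 0.5 then 0.75 (changes with coding direction)
```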

Table 3: Comparison among different resemblance coefficients

| Property | Yule coefficient | Hamann coefficient | Rogers and Tanimoto coefficient |
|---|---|---|---|
| Range | The range between maximum and minimum similarity is wider than that of the Rogers and Tanimoto coefficient | The range of similarity is equivalent to that of the Yule coefficient | Similarity ranges between 0 and 1, so it is more dense in comparison to the Hamann coefficient |
| Perfect similarity and dissimilarity | Perfect similarity requires either b = 0 or c = 0; perfect dissimilarity requires either a = 0 or d = 0 | Both b = 0 and c = 0 are required for perfect similarity; likewise, both a = 0 and d = 0 are required for maximum dissimilarity | Same as the Hamann coefficient |
| Remarks | Irrespective of the formula, the final result does not differ much from the other coefficients | Unlike the Jaccard coefficient, it is not sensitive to the direction of coding | It is also not sensitive to the direction of coding |

Table 5: Comparison among different resemblance coefficients

| Property | Sokal and Sneath coefficient | Russell and Rao coefficient | Baroni-Urbani and Buser coefficient |
|---|---|---|---|
| Range | The range between maximum and minimum similarity is equivalent to that of the Russell and Rao and the Baroni-Urbani and Buser coefficients | Maximum similarity "1" and minimum similarity "0", as for the other two coefficients | Also similar to the other two coefficients |
| Direction of coding | Not sensitive to the direction of coding | Sensitive to the direction of coding | Not sensitive to the direction of coding |
| Remarks | Perfect similarity occurs when the coefficient is "1" and perfect dissimilarity when it is "0" | Equivalent to the Sokal and Sneath coefficient | The range between maximum and minimum similarity is similar to that of the Sokal and Sneath coefficient |

Table 6: Comparison among different resemblance coefficients

| Property | Sokal binary distance coefficient | Ochiai coefficient | Phi coefficient |
|---|---|---|---|
| Range | The range between the extreme values (0 and 1) is equivalent to that of the Ochiai coefficient | Maximum "1" and minimum "0", as for the Sokal binary distance coefficient | A maximum of "1" indicates perfect similarity and "-1" indicates perfect dissimilarity |
| Direction of coding | Not sensitive to the direction of coding | Sensitive to the direction of coding | Not sensitive to the direction of coding |
| Remarks | Being a distance, it equals "0" for perfect similarity and "1" for perfect dissimilarity | Equivalent to the Sokal binary distance coefficient | The range between maximum and minimum values is wider than that of the other two |

6. How to select the method of clustering

Once the investigator has decided that cluster analysis might reveal suspected subsets, a study plan is needed. What kind of sample should be collected? What kinds of attributes should be measured or recorded? What measure of similarity should be chosen to compare entities? Once the similarity measures have been computed, further questions arise: What cluster search technique should be chosen? Should the set of entities be partitioned into separate clusters, or should a hierarchical arrangement be sought? These are some of the problems encountered in conducting a cluster analysis. A well-designed study usually involves a sequence of steps such as the following:

1. Select a representative and adequately large sample of entities for study.
2. Select a representative set of attributes from a carefully specified domain of similarity.
3. Describe or measure each entity in terms of the attribute variables.
4. Conduct a dimensional analysis of the variables if they are very numerous.
5. Choose a suitable metric and convert the variables into comparable units.
6. Select an appropriate index and assess the similarity between pairs of entity profiles.
7. Choose a cluster model, then select and apply an appropriate clustering algorithm to the similarity matrix.
8. Compute the characteristic mean profiles of each cluster and interpret the findings.
9. Apply a second cluster analysis procedure to the data to check the accuracy of the results of the first method.
10. Conduct a replication study on a second sample if possible.

7. Different types of clustering techniques

Numerous clustering algorithms exist for determining the number of clusters and their partitions; they fall into one of two categories: hierarchical and non-hierarchical clustering.

A) Hierarchical Clustering:

The similarity between clusters is evaluated using a 'distance' measure. Starting from clusters of individual pixels, the two clusters at minimum distance are merged repeatedly until a final, limited number of clusters remains. The distance used to evaluate similarity is selected from the following methods:

1. Nearest Neighbor Method (the nearest neighbors, at minimum distance, form a new merged cluster)
2. Furthest Neighbor Method (the distance between the furthest neighbors, i.e. the maximum distance, is used to form a new merged cluster)
3. Centroid Method (the distance between the gravity centers of two clusters is evaluated for merging)
4. Group Average Method (the root mean square distance between all pairs of data in two different clusters is used for merging)
5. Ward Method (the root mean square distance between the gravity center and each member is minimized)
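The five inter-cluster distances can be sketched with NumPy as follows (all function names are hypothetical). The group average follows the root-mean-square wording above; many packages use the plain mean of the pairwise distances instead. For the Ward method the sketch uses the standard form of the criterion, the increase in within-cluster sum of squares caused by the merge, $n_A n_B/(n_A + n_B)\,\lVert m_A - m_B \rVert^2$, rather than the RMS wording.

```python
import numpy as np


def pairwise_distances(A, B):
    """Euclidean distance between every member of cluster A and of cluster B."""
    A, B = np.asarray(A, float), np.asarray(B, float)
    return np.linalg.norm(A[:, None, :] - B[None, :, :], axis=2)


def nearest_neighbor(A, B):
    return pairwise_distances(A, B).min()


def furthest_neighbor(A, B):
    return pairwise_distances(A, B).max()


def centroid_distance(A, B):
    return np.linalg.norm(np.mean(A, axis=0) - np.mean(B, axis=0))


def group_average_rms(A, B):
    return np.sqrt((pairwise_distances(A, B) ** 2).mean())


def ward_increase(A, B):
    """Increase in the within-cluster sum of squares if A and B are merged."""
    n_a, n_b = len(A), len(B)
    diff = np.mean(A, axis=0) - np.mean(B, axis=0)
    return n_a * n_b / (n_a + n_b) * float(diff @ diff)


A = [[0, 0], [0, 1]]
B = [[3, 0], [4, 0]]
for f in (nearest_neighbor, furthest_neighbor, centroid_distance,
          group_average_rms, ward_increase):
    print(f.__name__, round(float(f(A, B)), 3))
```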

Hierarchical clustering techniques fall into two major groups:

1. Top-down (splitting) techniques (hierarchical mixture models)
2. Bottom-up (merging) techniques (hierarchical agglomerative clustering)

B) Non-hierarchical Clustering:

At the initial stage, an arbitrary number of clusters is chosen tentatively. The members of each cluster are checked using selected parameters or distances and relocated into the most appropriate clusters, increasing the separability between them. These clustering methods are also known as 'flat' clustering techniques. A few examples of this group are:

1. K-Means clustering
2. Fuzzy C-Means clustering
3. Self-organizing Map classification procedure

C) Other clustering methods

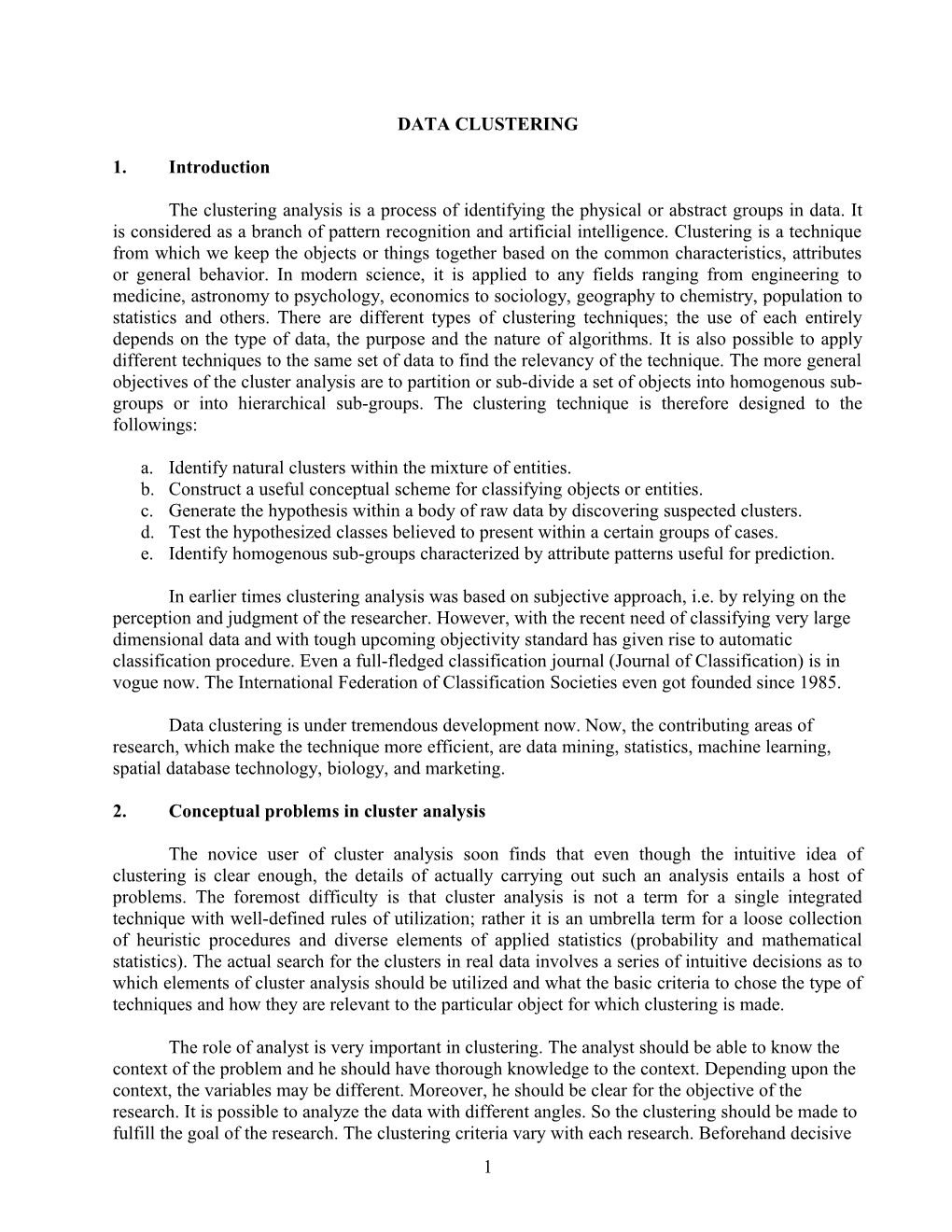

1. Single linkage clustering
2. Complete linkage clustering
3. Ward's minimum variance method
4. ISODATA clustering method

Depending on the process the user follows to find clusters in a data set, clustering can also be divided into two other major groups: the supervised and the unsupervised clustering approaches. Both are widely used to cluster images according to their spectral or textural characteristics in relation to field (ground-based) knowledge.

Supervised clustering approach:

In this approach, the user has external knowledge obtained from ground truthing. Training samples of the cluster groups are therefore created in the image (gray-value matrix), and distance calculation techniques such as 'maximum likelihood', 'minimum distance', or 'parallelepiped' are used to assign the other, unclassified gray values to the user-defined training classes.
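Of the three techniques named above, the 'minimum distance' rule is the simplest to sketch: each unclassified pixel goes to the training class whose mean gray value is nearest. The class names and training values below are hypothetical, and the function names are illustrative only.

```python
import numpy as np


def class_means(training):
    """Mean gray-value vector of each user-defined training class."""
    return {name: np.mean(np.asarray(samples, float), axis=0)
            for name, samples in training.items()}


def minimum_distance_classify(pixels, means):
    """Assign each pixel to the training class whose mean is nearest."""
    names = list(means)
    centers = np.array([means[n] for n in names])
    px = np.asarray(pixels, float)
    dist = np.linalg.norm(px[:, None, :] - centers[None, :, :], axis=2)
    return [names[i] for i in dist.argmin(axis=1)]


# Hypothetical single-band training samples taken from ground-truthed areas.
training = {"water": [[10], [12], [14]], "vegetation": [[100], [110], [120]]}
print(minimum_distance_classify([[5], [70], [200]], class_means(training)))
# ['water', 'vegetation', 'vegetation']
```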

Unsupervised clustering approach:

In this approach, little information is available about the area to be classified, and only the image characteristics are used. The technique is also followed when the analyst has very little external knowledge about the dataset (e.g., the gray-scale matrix of the image). Multiple groups from randomly sampled data are then divided automatically into homogeneous spectral classes using a clustering technique.

Table 7: Comparison between Supervised vs. Unsupervised Classification in Image processing

| Supervised | Unsupervised |
|---|---|
| Pre-defined classes | Unknown classes |
| Serious classification errors detectable | Comparatively fewer classification errors |
| Defined classes may not match natural classes | Natural classes may not match desired classes |
| Classes based on information categories | Classes based on spectral properties |
| Selected training data may be inadequate | Derived clusters may be unidentifiable |
| A priori class training is time-consuming and tedious | A posteriori cluster identification is time-consuming and tedious |
| Only pre-defined classes will be found | Unexpected categories may be revealed |

7.1 K-Means Clustering Algorithm

K-means clustering is an unsupervised, non-hierarchical technique for grouping objects into different clusters. Consider t objects, indexed j = 1, 2, ..., t, each with attributes indexed i = 1, 2, ..., n. Following Hartigan (1975), the sum of all the attributes of each object is

$$ x_j = \sum_{i=1}^{n} a_{ij}, \qquad j = 1, 2, \ldots, t \qquad (7.1) $$

where $a_{ij}$ is the ith attribute of object j.

Suppose c, with 2 < c < t, is the number of partitions based on the variation in $x_j$. The mean of each cluster can be computed by

$$ m_c = \frac{1}{k} \sum_{i=1}^{k} a_{ic} \qquad (7.2) $$

where k is the total number of variables (objects) within cluster c. The squared Euclidean distance of each variable from its cluster mean, summed over the cluster, is

$$ d_c = \sum_{i=1}^{k} \left( a_{ic} - m_c \right)^2 \qquad (7.3) $$

Summing these squared Euclidean distances over all clusters gives the discordance (error) between the data and the given partition. Starting from an initial partition, the error is reduced by searching through the set of partitions: at each step the algorithm moves from the current partition to the partition in its neighborhood for which the error is minimum, and the process is repeated. In step form, the K-means clustering technique is as follows:

1. Take the sum of the attributes of each object.
2. Partition the data based on the variation of the sums.
3. Calculate the mean of each attribute within every cluster.
4. Calculate the squared Euclidean distance of each attribute from its mean.
5. Sum the squared Euclidean distances over all clusters; this gives the total error at the first step of computation.
6. Compute the squared Euclidean distance of each object from the mean of every cluster to which the object does not belong.
7. Sum the distances from step 6; this gives the error when the object is moved to those clusters.
8. Compute the difference in error between steps 5 and 7.
9. If the difference computed in step 8 is positive (the error decreases), shift the object to that cluster (where the computed error is less) and re-compute the centroids.
10. Repeat the process for every object until the minimum error is obtained.
11. Repeat the process for the next step, and stop the computation when the calculated error is less than the desired error.

Many statistical packages, such as SAS and SPSS, include the K-means clustering technique. Free K-means code is also available on the Web and runs in environments such as MS-DOS, Windows, and Unix; a freely available MATLAB version is very easy to operate. The method is simple to calculate and easy to apply with a computer-driven algorithm.
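Steps 6 to 10 can be sketched as a single-object transfer loop in the style of Hartigan, using the same n/(n - 1) and n/(n + 1) weighting factors that appear in the worked example below. This is a sketch under stated assumptions, not the author's program: seeding (steps 1 and 2) is left to the caller, cluster means are recomputed after every transfer, a cluster is never emptied, and the function names are hypothetical. Because the result depends on the order in which objects are visited, the final partition need not coincide with the one reported in the worked example.

```python
import numpy as np


def within_cluster_error(X, labels, k):
    """Total squared Euclidean distance of every object from its cluster mean."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    total = 0.0
    for c in range(k):
        members = X[labels == c]
        if len(members):
            total += float(((members - members.mean(axis=0)) ** 2).sum())
    return total


def kmeans_transfer(X, labels, k, max_sweeps=100, tol=1e-12):
    """Move one object at a time to the cluster that most lowers the total error.

    Moving x from cluster src (n_s members) to cluster dst (n_d members) lowers
    the error by n_s/(n_s - 1) * |x - mean_src|^2 - n_d/(n_d + 1) * |x - mean_dst|^2.
    Every cluster in the initial labels must be non-empty.
    """
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels).copy()
    for _ in range(max_sweeps):
        moved = False
        for j in range(len(X)):
            src = labels[j]
            sizes = np.bincount(labels, minlength=k)
            if sizes[src] == 1:
                continue                          # never empty a cluster
            means = np.array([X[labels == c].mean(axis=0) for c in range(k)])
            dist2 = ((means - X[j]) ** 2).sum(axis=1)
            gains = (sizes[src] / (sizes[src] - 1) * dist2[src]
                     - sizes / (sizes + 1) * dist2)
            gains[src] = -np.inf                  # staying put is not a move
            dst = int(np.argmax(gains))
            if gains[dst] > tol:                  # the error decreases: transfer
                labels[j] = dst
                moved = True
        if not moved:                             # a full sweep without transfers
            break
    return labels


X = [[11, 29, 1], [8, 30, 1], [13, 21, 1], [12, 27, 1],
     [6, 31, 2], [4, 29, 1], [5, 36, 1], [5, 37, 2]]
initial = [0, 1, 2, 1, 0, 2, 0, 0]                # seeded from the total marks
final = kmeans_transfer(X, initial, 3)
print(within_cluster_error(X, initial, 3))        # 155.5
print(final, within_cluster_error(X, final, 3))
```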
This technique can be used extensively for feature extraction. The compactness of the resulting clusters depends on the order in which the data are selected, and an implicitly decided order of variables does not guarantee robust partitions.

A simple worked example illustrates how K-means clustering groups a dataset in practice. In general, the following steps are carried out.

i) Partition the objects into clusters after summing the features of each object. There are eight students in a class, and the marks they obtained in physics, chemistry and math are as follows.

Table 1: Students and the marks obtained in different subjects

| No. | Object | Physics | Chemistry | Math | Total |
|---|---|---|---|---|---|
| 1 | Ram | 11 | 29 | 1 | 41 |
| 2 | Shyam | 8 | 30 | 1 | 39 |
| 3 | Hari | 13 | 21 | 1 | 35 |
| 4 | Gopal | 12 | 27 | 1 | 40 |
| 5 | Sita | 6 | 31 | 2 | 41 |
| 6 | Rita | 4 | 29 | 1 | 34 |
| 7 | Umesh | 5 | 36 | 1 | 42 |
| 8 | Dinesh | 5 | 37 | 2 | 44 |

The total marks obtained by these students (the sum of features) can be grouped into three clusters:

Cluster one: total marks greater than or equal to 41
Cluster two: total marks between 38 and 40
Cluster three: total marks of 37 and below

The students can therefore be grouped as:

Cluster 1: (Ram, Sita, Umesh, Dinesh)
Cluster 2: (Shyam, Gopal)
Cluster 3: (Hari, Rita)

In this way the clusters can be seeded for any kind of object with corresponding attributes. The criterion normally chosen depends on the initial choice and on the differences between the numerical values of the features.

ii) In the next step, the mean of the Jth variable over the cases in the Lth cluster is computed; it is denoted by B(L, J). In the example, B(1, 1) is the mean of cluster one for the first variable (physics).
That is, for cluster one:

B(1, 1) = (11 + 6 + 5 + 5)/4 = 6.75
B(1, 2) = (29 + 31 + 36 + 37)/4 = 33.25
B(1, 3) = (1 + 2 + 1 + 2)/4 = 1.5

Similarly for cluster two:

B(2, 1) = (8 + 12)/2 = 10
B(2, 2) = (30 + 27)/2 = 28.5
B(2, 3) = (1 + 1)/2 = 1

Likewise for cluster three:

B(3, 1) = (13 + 4)/2 = 8.5
B(3, 2) = (21 + 29)/2 = 25
B(3, 3) = (1 + 1)/2 = 1

iii) The next step is the calculation of the error for the initial partition, obtained by summing the squared distances of each object from the mean of its cluster. For the above example, e[P(8, 3)] denotes the error of the partition of the data matrix with eight rows and three columns.

| Cluster | Object | Physics (cluster mean) | Squared difference | Chemistry (cluster mean) | Squared difference | Math (cluster mean) | Squared difference | Total |
|---|---|---|---|---|---|---|---|---|
| 1 | Ram | 11 (6.75) | 18.0625 | 29 (33.25) | 18.0625 | 1 (1.5) | 0.25 | 36.375 |
| 1 | Sita | 6 (6.75) | 0.5625 | 31 (33.25) | 5.0625 | 2 (1.5) | 0.25 | 5.875 |
| 1 | Umesh | 5 (6.75) | 3.0625 | 36 (33.25) | 7.5625 | 1 (1.5) | 0.25 | 10.875 |
| 1 | Dinesh | 5 (6.75) | 3.0625 | 37 (33.25) | 14.0625 | 2 (1.5) | 0.25 | 17.375 |
| 2 | Shyam | 8 (10) | 4 | 30 (28.5) | 2.25 | 1 (1) | 0 | 6.25 |
| 2 | Gopal | 12 (10) | 4 | 27 (28.5) | 2.25 | 1 (1) | 0 | 6.25 |
| 3 | Hari | 13 (8.5) | 20.25 | 21 (25) | 16 | 1 (1) | 0 | 36.25 |
| 3 | Rita | 4 (8.5) | 20.25 | 29 (25) | 16 | 1 (1) | 0 | 36.25 |

The total error is the sum of the Total column, which equals 155.5.

iv) Compute the distance of each object from all the clusters. For example, the distance of Ram's features from cluster one is:

D(1, 1) = (11 - 6.75)² + (29 - 33.25)² + (1 - 1.5)² = 36.375 (about 36.4)

Similarly, the distance of Ram's features from cluster two is:

D(1, 2) = (11 - 10)² + (29 - 28.5)² + (1 - 1)² = 1.25

Likewise, the distance of Ram's features from cluster three is:

D(1, 3) = (11 - 8.5)² + (29 - 25)² + (1 - 1)² = 22.25

The distances of all the other objects from each cluster are computed in the same way.

v) Compute the change in error when the objects (students, in the above example) shift from one cluster to another.
For example, when Ram is transferred from cluster one (four members) to cluster three (two members), the decrease in error is:

(36.375 × 4/3) - (22.25 × 2/3) ≈ 33.67

But when he is transferred from cluster one to cluster two (two members), the decrease in error is:

(36.375 × 4/3) - (1.25 × 2/3) ≈ 47.67

The factors are n/(n - 1) = 4/3 for the cluster the object leaves (n = 4) and n/(n + 1) = 2/3 for the cluster it joins (n = 2). Shifting Ram from cluster one to cluster two therefore decreases the error more than shifting him to cluster three. After this shift, the new value of the error is 155.5 - 47.67 ≈ 107.83. In this way, each time an object is shifted from one cluster to another, the error decreases.

vi) Update the cluster means. After Ram is shifted from cluster one to cluster two, the means of both clusters change. The updated means of cluster two are:

B(2, 1) = (11 + 2 × 10)/3 = 10.33
B(2, 2) = (29 + 2 × 28.5)/3 = 28.67
B(2, 3) = (1 + 2 × 1)/3 = 1.0

Similarly for cluster one:

B(1, 1) = (6 + 5 + 5)/3 = 5.33
B(1, 2) = (31 + 36 + 37)/3 = 34.67
B(1, 3) = (2 + 1 + 2)/3 = 1.67

The new clusters are therefore:

Cluster one: (Sita, Umesh, Dinesh)
Cluster two: (Ram, Shyam, Gopal)
Cluster three: (Hari, Rita)

The same procedure is repeated for all the objects (students). The algorithm stops when the error is least, or when the difference in error between two consecutive runs is negligible. Continuing the example in this way, the final clusters are:

Cluster one: (Hari)
Cluster two: (Shyam, Gopal, Ram)
Cluster three: (Sita, Rita, Umesh, Dinesh)

The three clusters can be characterized as follows:

Cluster one: the physics marks are high in comparison to chemistry, and the chemistry marks are low.
Cluster two: the marks in both physics and chemistry are high.
Cluster three: the chemistry marks are high but the physics marks are low.

The marks obtained in mathematics are insignificant for all the clusters.
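The arithmetic of steps ii) to vi) can be re-checked with a few lines of NumPy. The marks are those of Table 1. The function names are hypothetical, and the transfer gains use the n/(n - 1) and n/(n + 1) factors explained above.

```python
import numpy as np

# Table 1: physics, chemistry, math marks of each student.
marks = {
    "Ram": [11, 29, 1], "Shyam": [8, 30, 1], "Hari": [13, 21, 1],
    "Gopal": [12, 27, 1], "Sita": [6, 31, 2], "Rita": [4, 29, 1],
    "Umesh": [5, 36, 1], "Dinesh": [5, 37, 2],
}


def cluster_means(clusters):
    """B(L, J): mean of each subject over the students in each cluster."""
    return [np.mean([marks[s] for s in members], axis=0) for members in clusters]


def total_error(clusters):
    """Sum of squared distances of every student from its cluster mean."""
    means = cluster_means(clusters)
    return sum(float(((np.array(marks[s]) - m) ** 2).sum())
               for members, m in zip(clusters, means) for s in members)


initial = [["Ram", "Sita", "Umesh", "Dinesh"], ["Shyam", "Gopal"], ["Hari", "Rita"]]
means = cluster_means(initial)
ram = np.array(marks["Ram"])
D = [float(((ram - m) ** 2).sum()) for m in means]  # D(1, 1), D(1, 2), D(1, 3)
gain_to_3 = 4 / 3 * D[0] - 2 / 3 * D[2]             # Ram: cluster one -> three
gain_to_2 = 4 / 3 * D[0] - 2 / 3 * D[1]             # Ram: cluster one -> two
after = [["Sita", "Umesh", "Dinesh"], ["Ram", "Shyam", "Gopal"], ["Hari", "Rita"]]

print(total_error(initial))                        # 155.5
print(D)                                           # [36.375, 1.25, 22.25]
print(round(gain_to_3, 2), round(gain_to_2, 2))    # 33.67 47.67
print(round(total_error(after), 2))                # 107.83
print(np.round(cluster_means(after)[1], 2))        # updated B(2, 1..3)
```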
In this way K-means clustering partitions the objects according to the features they represent. Different weights can also be given to different types of attributes; the choice of weights depends on the research interest in particular features.

7.2 Fuzzy clustering

This technique arises from the fact that data contain some uncertainty, so that a crisp partition cannot represent the real distribution of the entities among the clusters. The method allows a smooth transition of features from one cluster to another, and the contribution of each entity is represented by membership values. The objective is to minimize the membership-weighted distance between the entities and the cluster centers, which can be expressed as (Liew, 2000)

$$ J_f(C, m) = \sum_{j=1}^{t} \sum_{i=1}^{C} (u_{j,i})^m D_{j,i}^2 \qquad (7.4) $$

where the weights $u_{j,i}$ are the elements of the fuzzy membership matrix U, defined as

$$ U = \left[ u_{j,i} \right], \quad j = 1, \ldots, t, \quad i = 1, \ldots, C, \quad u_{j,i} \in [0, 1], \quad U \in \mathbb{R}^{t \times C} \qquad (7.5) $$

The exponent $m \in (1, \infty)$ appearing in equation (7.4) determines the influence of the fuzzy memberships $u_{j,i}$ on the partition, and $D_{j,i}^2$ is the squared Euclidean distance between point $X_j$ and cluster center $V_i$. The cluster centers $V_i$ are defined as the fuzzy-weighted mean of the points $X_j$:

$$ V_i = \frac{\sum_{j=1}^{t} (u_{j,i})^m X_j}{\sum_{j=1}^{t} (u_{j,i})^m}, \qquad i = 1, \ldots, C \qquad (7.6) $$

Fuzziness thus enters the determination of the cluster centers $V_i$, since the points $X_j$ are weighted by their fuzzy memberships: points with a high membership attract a centroid more than points with a low membership. Further, since each membership value is bounded by 1, increasing m results in a fuzzier, less discriminating partition.
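A compact fuzzy c-means sketch alternates the center formula (7.6) with the membership update that follows from minimizing (7.8) under the constraint (7.7): $u_{j,i} = (D_{j,i}^2)^{-1/(m-1)} / \sum_{k=1}^{C} (D_{j,k}^2)^{-1/(m-1)}$. This is the standard Bezdek form, not necessarily the exact variant of Liew (2000). As assumptions, it starts from random memberships rather than from the C mutually farthest points, and the function name, tolerance and sample data are hypothetical.

```python
import numpy as np


def fuzzy_c_means(X, C, m=2.0, tol=1e-6, max_iter=300, seed=0):
    """Fuzzy c-means: alternate the center update (7.6) and membership update."""
    if m <= 1:
        raise ValueError("the fuzzy exponent m must be greater than 1")
    X = np.asarray(X, float)
    rng = np.random.default_rng(seed)
    U = rng.random((len(X), C))
    U /= U.sum(axis=1, keepdims=True)            # memberships sum to 1, eq. (7.7)
    for _ in range(max_iter):
        W = U ** m
        V = (W.T @ X) / W.sum(axis=0)[:, None]   # cluster centers, eq. (7.6)
        D2 = ((X[:, None, :] - V[None, :, :]) ** 2).sum(axis=2)  # squared distances
        D2 = np.fmax(D2, 1e-12)                  # guard against a point on a center
        inv = D2 ** (-1.0 / (m - 1))
        U_new = inv / inv.sum(axis=1, keepdims=True)
        converged = np.abs(U_new - U).max() < tol
        U = U_new
        if converged:                            # successive partitions agree
            break
    return U, V


X = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
U, V = fuzzy_c_means(X, C=2)
print(np.round(U, 3))  # each row: memberships of one point in the two clusters
print(np.round(V, 2))  # two centers, one near each group of points
```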
$J_f(C, m)$ is minimized by choosing suitable values for the fuzzy matrix U of equation (7.5), subject to the constraint that the memberships of each point $X_j$ in all the clusters sum to one:

$$ \sum_{i=1}^{C} u_{j,i} = 1, \qquad j = 1, \ldots, t \qquad (7.7) $$

Combining equations (7.4) and (7.7) gives, for each point j, the composite function to be minimized:

$$ F(U_j, \lambda) = \sum_{i=1}^{C} (u_{j,i})^m D_{j,i}^2 - \lambda \left( \sum_{i=1}^{C} u_{j,i} - 1 \right) \qquad (7.8) $$

where λ acts as a Lagrange multiplier. In practice, a tentative set of cluster centers is determined from the C mutually farthest points, and an initial fuzzy partition $U^{(0)}$ satisfying the constraint (7.7) is formed. At each iteration s, a new fuzzy partition $U^{(s)}$ is determined from the minimization of (7.8), and the cluster centers $V^{(s)}$ are recomputed from $U^{(s)}$ using (7.6). When two successive fuzzy partitions $U^{(s-1)}$ and $U^{(s)}$ differ by less than a given amount, the iteration is terminated and $U^{(s)}$ is taken as the final partition.

This technique can represent the actual homogeneity within the dataset. In nature, each variable is associated to some degree with all the clusters, even though a crisp partition assigns it completely to only one cluster; fuzzy clustering is therefore able to accommodate some of the uncertainty in the data. However, as the number of objects increases, the output grows very rapidly, and the technique is mathematically complicated and takes considerable computation time.

7.3 Nearest and furthest neighborhood clustering techniques

The nearest neighborhood clustering technique is also called the single linkage clustering technique. Consider entities j = 1, 2, ..., t. According to Romesburg (1984), the technique can be summarized as follows:

1. Consider all entities as c clusters.
2. Calculate the centroid of each cluster.
3. Compute the Euclidean distance between the centroids of each pair of clusters.
4. Compare the Euclidean distances calculated in step 3 and find the minimum.
5. Merge the clusters whose Euclidean distance is minimum, and denote the new cluster as k.
6. Find the centroid of the new cluster k and its distance to the other clusters (say h and m).
7. Merge clusters k and h if $d_{kh} < d_{km}$; otherwise merge k and m.
8. Repeat the process for all the clusters.

In the farthest neighborhood technique, all entities are likewise considered as c clusters, each with one member. The two clusters in the set that are separated by the largest distance are found and merged; suppose the new cluster is denoted k. The distance between the new cluster k and another cluster h is defined as the distance between their farthest members. The procedure is as follows (Romesburg, 1984):

1. Consider all entities as c clusters.
2. Calculate the centroid of each cluster.
3. Compute the Euclidean distance between the centroids of each pair of clusters.
4. Find the maximum Euclidean distance between two clusters.
5. Merge the two clusters found in step 4 (denote the result as cluster k).
6. Find the centroid of cluster k and its distance to the other clusters (say h and m).
7. Merge clusters k and h if $d_{kh} > d_{km}$; otherwise merge k and m.
8. Repeat the process for all the clusters.

Both techniques are widely used for hierarchical datasets. The nearest neighborhood technique can effectively detect elongated clusters but is less effective for compact, equal-sized clusters, which can be detected by the farthest neighborhood technique. However, at every step the distances between the members of every cluster must be evaluated to find the minimum or maximum distance, which consumes considerable computation time.

7.4 ISODATA method

The ISODATA clustering technique is an example of an unsupervised clustering technique. ISODATA stands for "Iterative Self-Organizing Data Analysis Technique". It is iterative in the sense that it repeatedly performs an entire classification (outputting a thematic raster layer) and recalculates statistics. "Self-Organizing" refers to the way in which it locates the clusters that are inherent in the data.
The ISODATA clustering method uses the minimum spectral distance formula to form clusters. It begins with either arbitrary cluster means or the means of an existing signature set, and each time the clustering repeats, the means of these clusters are shifted; the new cluster means are used for the next iteration. The ISODATA utility repeats the clustering of the image until either:

a. a maximum number of iterations has been performed, or
b. a maximum percentage of unchanged pixels has been reached between two iterations.

More precisely, according to Anderberg (1973), ISODATA clustering works as follows:

1. Let there be entities j = 1, 2, ..., t.
2. Consider each entity as a cluster (c clusters).
3. Randomly select points (seeding the clusters) and compute the distance from each selected point to the other clusters.
4. Assign each cluster to the seed point at the nearest distance.
5. Keep those assignments fixed until all the clusters have been assigned to the pre-specified seed points for that cycle of computation.
6. Re-compute the cluster centroids and assign each entity to the nearest centroid, repeating until convergence is achieved.
7. If a cluster has fewer members than a threshold, discard it.
8. Stop the iteration when the number of clusters is the same in successive iterations.

This technique is not geographically biased toward any particular portion of the data, and it is highly successful at finding the spectral clusters inherent in the data. It has some disadvantages, however: the analyst does not know the number of seed points a priori, and the iterations take time to settle on the number of clusters.

An example of the ISODATA clustering technique applied to image clustering is as follows. We have an aerial image of 125 x 125 pixels, that is, 15,625 gray values. Following the technique above, arbitrary cluster centers (seeds) were generated by the program (ISOCLUST of Idrisi 32).
These seeds are in fact derived by analyzing the peaks and valleys of the image's gray-value histogram, which indicate the cluster groups present in the image. The iterations then continued until all the gray values were covered and grouped into the intended (user-defined) number of clusters. If the user does not specify the number of clusters required, the technique can instead create the finest possible clusters.

The image in Figure 1 was clustered using the ISODATA clustering technique. We intended to find six clusters based on our field knowledge, representing six land use classes: water bodies, swamp area, bare land, sparse vegetation, medium density vegetation, and dense vegetation.

Figure 1: Image classification using the ISODATA clustering approach (left: original image; right: ISODATA clustered image).

7.5 Ward’s method of clustering technique

According to Anderberg (1973), Ward’s method of clustering begins with c clusters, each containing one object, and ends with one cluster containing all objects. At each step it makes whichever merger of two clusters results in the smallest increase in the value of an index E, called the sum-of-squares index, or variance. Suppose we have entities j = 1, 2, ..., t.

1. Consider each entity as a cluster (c clusters).
2. Calculate the mean of each cluster.
3. Find the Euclidean distance of each attribute from the mean for all the clusters.
4. Square the Euclidean distances and add them over all the objects; this gives the sum-of-squares index, E.
5. Repeat step 4 for all the candidate clusters.
6. Merge the clusters for which the sum-of-squares index in step 5 is minimum.
7. Repeat the process for every cluster in the next step.

It follows that E increases nonlinearly as successive steps are processed, because E is a function of the squares of the attribute differences, and these differences increase as more objects are forced into clusters as the method progresses.
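The merging rule of steps 1 to 7 can be sketched as a naive agglomerative loop that tries every pair of clusters and merges the pair whose union raises the sum-of-squares index E the least. This is an illustrative sketch (the function names and the four one-band "cluster mean" values are hypothetical) and checks every pair at every step, which is fine only for small inputs. The returned list of increases shows E growing with each merge, as stated above.

```python
import numpy as np


def sum_of_squares(points):
    """Sum-of-squares index E of one cluster: squared distances from its mean."""
    p = np.asarray(points, float)
    return float(((p - p.mean(axis=0)) ** 2).sum())


def ward(X, n_clusters=1):
    """Agglomerative Ward clustering; returns clusters and the E increase per merge."""
    X = np.asarray(X, float)
    clusters = [[j] for j in range(len(X))]   # start: every entity is a cluster
    increases = []
    while len(clusters) > n_clusters:
        best = None
        for p in range(len(clusters)):
            for q in range(p + 1, len(clusters)):
                merged = clusters[p] + clusters[q]
                delta = (sum_of_squares(X[merged]) - sum_of_squares(X[clusters[p]])
                         - sum_of_squares(X[clusters[q]]))
                if best is None or delta < best[0]:
                    best = (delta, p, q)
        delta, p, q = best
        increases.append(delta)
        clusters[p] = clusters[p] + clusters[q]
        del clusters[q]                       # q > p, so index p stays valid
    return clusters, increases


gray_means = [[0], [1], [10], [12]]           # hypothetical one-band cluster means
print(ward(gray_means, n_clusters=1))
# ([[0, 1, 2, 3]], [0.5, 2.0, 110.25]): E grows with every merge
```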
The number of computations is greatly reduced in this technique. However, the implicitly specified dissimilarity coefficient E is very sensitive to additive and proportional translations of the data. Moreover, the method does not guarantee an optimal partitioning of objects into clusters.

Nevertheless, Ward’s minimum variance method can be used as a tool for merging clusters. Suppose that an unsupervised clustering method (say ISODATA) has produced the finest possible clusters (possibly more than 50). Ward’s minimum variance technique can then determine which cluster means are close and should be merged at each iterative step. Most statistical software, such as SAS and SPSS, includes the Ward clustering technique.

An example of this cluster-merging procedure is as follows. We have an aerial image of 125 x 125 pixels, that is, 15,625 gray values. The image was clustered using the unsupervised ISODATA clustering technique without specifying the intended number of clusters, which yielded 56 clusters (Figure 2). The mean gray value of each of the 56 clusters was then determined (in ASCII format), and these means were fed to the Ward clustering method of SAS to produce the cluster-merging dendrogram (Figure 3), which suggests how, and which, clusters can be merged at each successive step.

Figure 2: Image clustered into 56 fine clusters using the ISODATA clustering technique (left: original image, 125 x 125 pixels; right: fine-clustered image with 56 clusters).

Figure 3: Ward clustering technique dendrogram showing merging clusters.

7.6 Self-organizing Map (SOM) clustering technique

The SOM clustering technique is an unsupervised method that reduces dimensionality while preserving topological features. It can also be used in a supervised way in its Learning Vector Quantization (LVQ) form. It is a very good method for clustering large sets of homogeneous, high-dimensional data.
Teuvo Kohonen is the inventor of this neural-network-based classification/clustering technique. Kohonen’s SOM architecture consists of two layers: an input layer and a competitive layer known as the Kohonen layer, or SOM layer, as shown in Figure 4. The two layers are fully connected: each input layer neuron has a feed-forward connection to each neuron in the Kohonen layer, and a weight is associated with each connection from the input layer to a neural unit of the Kohonen layer. The input to Kohonen neuron j is calculated as

$$ I_j = \sum_{i=1}^{n} w_{ij} x_i = \mathbf{w}_j \cdot \mathbf{x} \qquad (7.9) $$

where $\mathbf{w}_j$ is the weight vector of neuron j, $\mathbf{x}$ is the input vector, and · denotes the dot product. The SOM works on a winner-takes-all approach, so the winning output neuron is the one with the largest $I_j$. Alternatively, the winning neuron is chosen as the neuron whose weight vector has the minimum Euclidean distance d from the input vector (the two criteria agree when all weight vectors have the same length):

$$ d = \lVert \mathbf{w}_j - \mathbf{x} \rVert = \left( \sum_{i=1}^{n} (w_{ij} - x_i)^2 \right)^{1/2} \qquad (7.10) $$

When the Euclidean distance is used, the weight vector w and the input vector x are not normalized. The Kohonen net is trained by competitive learning: the neurons in the Kohonen layer compete with each other to be the winner when an input is presented to the layer, and the winning neuron is trained each time with

$$ W_{ij}^{new} = W_{ij}^{old} + \eta \left( x_i - W_{ij}^{old} \right) \qquad (7.11) $$

where η is the learning parameter. The weight vectors of the winner and of the neural units in its topological neighborhood are updated to align them toward the input vector. The learning rate is updated from time to time, and the neighborhood size is reduced during the course of learning. The SOM then determines the output cluster (expected) values of the input vector. When an image is clustered, the output cluster values are converted into binary form with any programmable software, using their topographic output features, which are preserved.
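The competitive training described above can be sketched for a one-dimensional chain of Kohonen units: the winner is the unit at minimum Euclidean distance (7.10), and the winner and its chain neighbors are moved toward the input with (7.11). The chain topology, the linear decay of η and of the neighborhood radius, and the initialization of the weights evenly along the data range are all assumptions of this sketch, not the configuration used for Figure 5; the function names and pixel values are hypothetical.

```python
import numpy as np


def train_som(X, n_units, epochs=20, eta0=0.5):
    """Train a one-dimensional chain of Kohonen units on the rows of X."""
    X = np.asarray(X, float)
    # Assumption: start the weight vectors evenly spaced along the data range.
    W = np.linspace(X.min(axis=0), X.max(axis=0), n_units)
    radius0 = n_units / 2
    for epoch in range(epochs):
        frac = epoch / epochs
        eta = eta0 * (1 - frac)                  # learning rate decreases
        radius = int(radius0 * (1 - frac))       # neighborhood shrinks to the winner
        for x in X:
            # Winner: minimum Euclidean distance to the input, eq. (7.10).
            winner = int(np.argmin(np.linalg.norm(W - x, axis=1)))
            lo, hi = max(0, winner - radius), min(n_units, winner + radius + 1)
            # Move the winner and its chain neighbors toward x, eq. (7.11).
            W[lo:hi] += eta * (x - W[lo:hi])
    return W


def som_labels(X, W):
    """Cluster label of each input = index of its winning unit."""
    X = np.asarray(X, float)
    return np.linalg.norm(X[:, None, :] - W[None, :, :], axis=2).argmin(axis=1)


gray = [[10], [12], [11], [200], [205], [198]]   # hypothetical pixel values
W = train_som(gray, n_units=2)
print(som_labels(gray, W))   # two groups: the three dark and the three bright pixels
```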
The clusters are then represented visually in the image. Figure 5 shows an example of the SOM and ISODATA clustering techniques applied to an aerial image; seven clusters were obtained in each case, and the figure also allows the visual veracity of each clustering technique to be compared.

Figure 4: SOM neural architecture for data classification (input pattern, input layer, and Kohonen layer with weights W1 to W7).

Figure 5: Original (left), SOM clustered (7 clusters) (middle), and ISODATA clustered (7 clusters) (right) R-band image.

7.7 Unsupervised classification using Bayesian approach

The Bayesian clustering approach is an unsupervised classification technique that is very useful for classifying or clustering multidimensional data. It uses Bayesian estimation, which regards the number of classes, the data partition, and the parameter vectors that describe the density of the classes as unknowns, and computes their maximum a posteriori (MAP) estimates simultaneously by maximizing their joint posterior probability density given the data. In this approach, intensity image segmentation is posed as an unsupervised classification problem and solved using the Bayesian formulation in a multiscale setup. The method has also been applied to data sets from the statistical literature and to target tracking, and the results obtained demonstrate the power of the Bayesian approach for unsupervised classification.

Acknowledgement: I appreciate the help I received from my friend Mr. Ramesh Kumar Gautam in developing the paper.

References:

Anderberg, M. R. 1973. Cluster Analysis for Applications. Academic Press, 359.
Anonymous. 2000. "Reference Guide of NeuralWare". NeuralWare, Carnegie, PA.
Benjamin, S. D., and P. L. Odell. 1974. Cluster Analysis: A Survey. Springer-Verlag, Berlin, New York, 137.
Dondik, E. M., and V. P. Tikhonov. 1995. Automatic searching for cluster centers in the problems of grey image clustering. Earth Observation and Remote Sensing, 12: 698-706.
Eastman, J. R. 1999. "Guide to GIS and Image Processing". Volumes 1 and 2. Idrisi Publications, Clark Labs, Clark University, 950 Main Street, Worcester, MA 01610-1477, USA.
Everitt, B. 1980. Cluster Analysis. Published on behalf of the Social Science Research Council by Heinemann Educational Books; New York: Halsted Press, 136.
Gautam, R., and S. Panigrahi. 2001. "An Overview of Different Clustering Techniques and Their Potential for Nutrient Zone Mapping". Presented at the 2001 ASAE/CSAE North Central Sections Conference, Brookings, South Dakota, September 28-29, 2001.
Han, J., and M. Kamber. 2001. "Data Mining: Concepts and Techniques". Morgan Kaufmann Publishers, A Harcourt Science and Technology Company, San Francisco, CA.
Hartigan, J. A. 1975. Clustering Algorithms. John Wiley & Sons, 351.
Haykin, S. 1999. "Neural Networks: A Comprehensive Foundation". Second Edition. Prentice Hall Inc., NJ, USA.
Jaakkola, T. 2001. "Clustering and Markov Models". Lecture note on Machine Learning and Neural Networks, MIT, MA.
Kaufman, L., and P. Rousseeuw. 1990. Finding Groups in Data: An Introduction to Cluster Analysis. A Wiley-Interscience Publication, 342.
Kohonen, T. 2001. "SOM-Tool Box Home". http://www.cis.hut.fi/projects/somtoolbox/
Liew, A. W. C., S. H. Leung, and W. H. Lau. 2000. Fuzzy image clustering incorporating spatial continuity. IEE Proceedings - Vision, Image and Signal Processing, 147 (2): 185-192.
Marsili-Libelli, S. 1989. Fuzzy clustering of ecological data. Department of Systems and Computer Science, University of Florence, via S. Marta 3, 50139 Florence, Italy. Geonoses 4 (2): 95-106.
MATLAB 5.1, the Language of Technical Computing. The MathWorks Inc., USA, 1994-2001.
Maurice, L. 1983. Cluster Analysis for Social Scientists. San Francisco: Jossey-Bass, 233.
Pandya, A. S., and R. B. Macy. 1996. "Pattern Recognition with Neural Networks in C++". CRC Press Inc., Boca Raton, FL.
Romesburg, H. C. 1984. Cluster Analysis for Researchers. Lifetime Learning Publications, 334.
Ryzin, V. J. 1977. Classification and Clustering: Proceedings of an Advanced Seminar Conducted by the Mathematics Research. New York: Academic Press, 467.
Sodha, J. 1997. "P21H Neural Networks", Lecture 4. Manchester University, UK. http://scitec.uwichill.edu.bb/cmp/online/p21h/lecture4/lect4.htm
Spath, H. 1985. Cluster Dissection and Analysis: Theory, Fortran Programs, Examples. Ellis Horwood Limited, Chichester, 226.
Tryon, R. C., and D. E. Bailey. 1970. Cluster Analysis. New York: McGraw-Hill, 347.