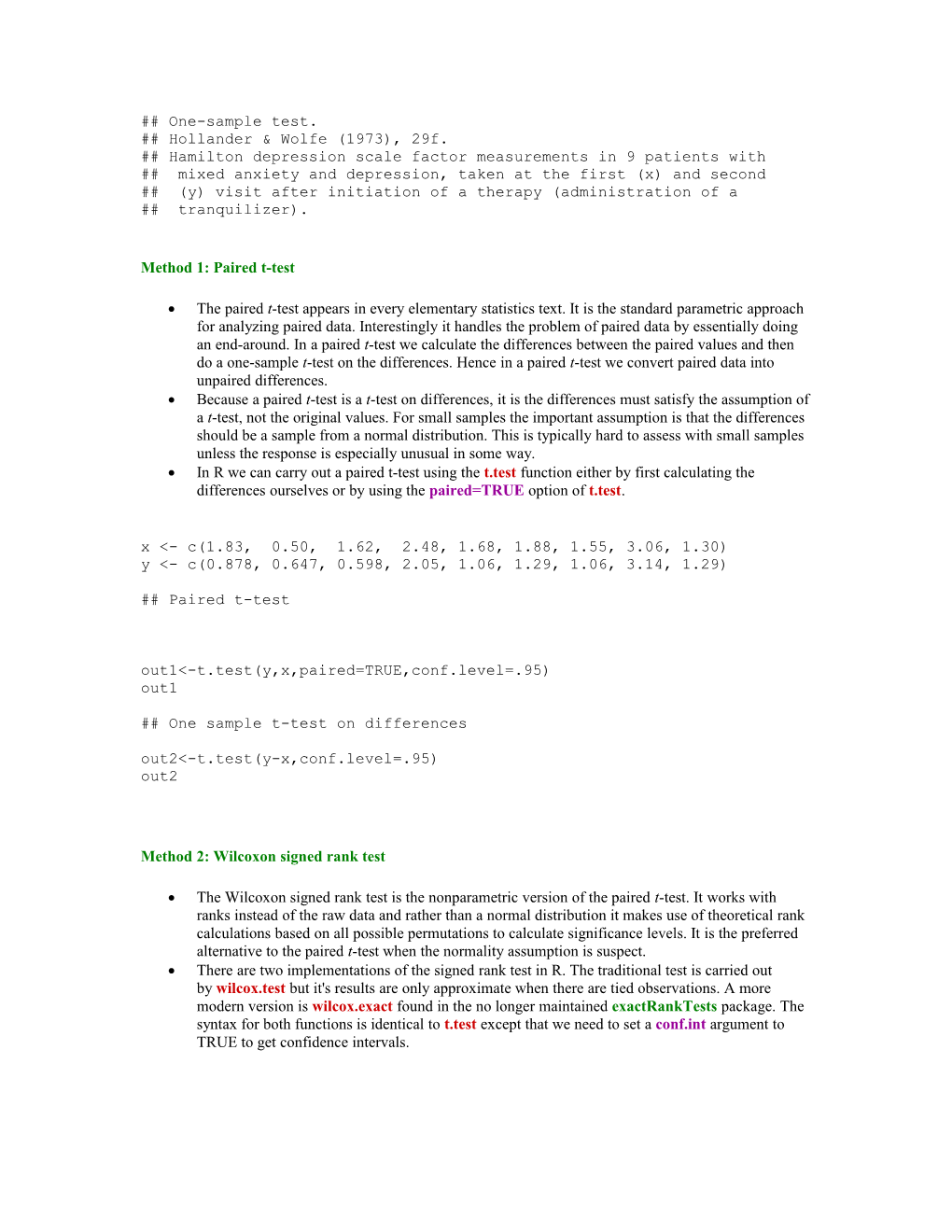

## One-sample test. ## Hollander & Wolfe (1973), 29f. ## Hamilton depression scale factor measurements in 9 patients with ## mixed anxiety and depression, taken at the first (x) and second ## (y) visit after initiation of a therapy (administration of a ## tranquilizer).

Method 1: Paired t-test

The paired t-test appears in every elementary statistics text. It is the standard parametric approach for analyzing paired data. Interestingly it handles the problem of paired data by essentially doing an end-around. In a paired t-test we calculate the differences between the paired values and then do a one-sample t-test on the differences. Hence in a paired t-test we convert paired data into unpaired differences. Because a paired t-test is a t-test on differences, it is the differences must satisfy the assumption of a t-test, not the original values. For small samples the important assumption is that the differences should be a sample from a normal distribution. This is typically hard to assess with small samples unless the response is especially unusual in some way. In R we can carry out a paired t-test using the t.test function either by first calculating the differences ourselves or by using the paired=TRUE option of t.test. x <- c(1.83, 0.50, 1.62, 2.48, 1.68, 1.88, 1.55, 3.06, 1.30) y <- c(0.878, 0.647, 0.598, 2.05, 1.06, 1.29, 1.06, 3.14, 1.29)

## Paired t-test

out1<-t.test(y,x,paired=TRUE,conf.level=.95) out1

## One sample t-test on differences out2<-t.test(y-x,conf.level=.95) out2

Method 2: Wilcoxon signed rank test

The Wilcoxon signed rank test is the nonparametric version of the paired t-test. It works with ranks instead of the raw data and rather than a normal distribution it makes use of theoretical rank calculations based on all possible permutations to calculate significance levels. It is the preferred alternative to the paired t-test when the normality assumption is suspect. There are two implementations of the signed rank test in R. The traditional test is carried out by wilcox.test but it's results are only approximate when there are tied observations. A more modern version is wilcox.exact found in the no longer maintained exactRankTests package. The syntax for both functions is identical to t.test except that we need to set a conf.int argument to TRUE to get confidence intervals. out3 <- wilcox.test(x, y, paired = TRUE, alternative = "greater") out3 out4 <- wilcox.test(y, x, paired = TRUE, alternative = "less") out4 out5 <- wilcox.test(y - x, alternative = "less") # The same. Out5

## large sample approximation out5 <- wilcox.test(y - x, alternative = "less",exact = FALSE, correct = TRUE)

## To compute the Hodges-Lehmann Estimator associated with WSR statistic:

The set of Walsh averages can be easily calculated in R using the outer function to produce a matrix of Walsh averages and then the lower.tri and diag function to extract the ones we need. Here's how the Hodges-Lehman estimate of the median is calculated for the Hamilton dataset. ham.diff<-y-x #Walsh averages Wmat<-outer(ham.diff,ham.diff,'+')/2 Walsh<-c(Wmat[lower.tri(Wmat)],diag(Wmat)) ham.median<-median(Walsh) print(ham.median)

To obtain a confidence interval for the Hodges-Lehman estimate of the median we sort the Walsh averages and then extract the values that lie at the appropriate positions of the empirical distribution. The locations are obtained from the quantiles of the signed rank distribution which can be obtained with the qsignrank function in R.

Walsh.sort<-sort(Walsh) # number of transects ham <- cbind(x,y) n.ham<-dim(ham)[1] # obtain the positions q1<-n.ham*(n.ham+1)/2+1-qsignrank(.985,n.ham) q2<-qsignrank(.985,n.ham) ham.lower95<-Walsh.sort[q1] ham.upper95<-Walsh.sort[q2] c(ham.median,ham.lower95,ham.upper95)

## This also works…. print(z <- sort(y - x)) walsh <- outer(z, z, "+") / 2 print(walsh <- sort(walsh[!lower.tri(walsh)])) median(walsh) ## Code for the Confidence Interval, this code might be better conf.level <- 0.95 print(z <- sort(y - x)) print(n <- length(z)) walsh <- outer(z, z, "+") / 2 print(walsh <- sort(walsh[!lower.tri(walsh)])) print(m <- length(walsh)) alpha <- 1 - conf.level k <- qsignrank(alpha / 2, n) if (k == 0) k <- k + 1 ## print(k) cat("achieved confidence level:", 1 - 2 * psignrank(k - 1, n), "\n") c(walsh[k], walsh[m + 1 - k])

## The data on annual salaries, Example 3.2 x <- c(11750, 20900, 14800, 29900, 21500, 18400, 14500, 17900, 21400, 43200,15200,14200) y <- c(12500,22300,14500,32300,20800,19200,15800,17500,23300,42100,16800, 14500)

## Remember, there is ties in the data. Let’s run wilcox.test(y - x, alternative = "greater", correct = F)

## Download library exactRankTests”, now type wilcox.exact(y - x, alternative = "greater")

## Now, let’s use the large sample results and see. Exact = FALSE mean you are using approximate test ## correct = FALSE imply you are NOT using continuity corrections. wilcox.test(y - x, alternative = "greater",exact = FALSE, correct = FALSE) wilcox.test(y - x, alternative = "greater",exact = FALSE, correct = TRUE)

## Let’s check the Comment 11 in book, Page 46-48. ## Check that Z is -12, -10, 10 and 12 ## So, we just type wilcox.exact(c(-12,-10,10,12), alternative = "greater") ## or wilcox.exact(c(-12,-10,10,12), alternative = "g")

## Type the data here….. x <- c() y <- c() mu <- 0 # hypothesised value of median print(z <- sort(y - x)) print(n <- length(z)) print(b <- sum(z > mu)) pbinom(b - 1, n, 1 / 2, lower.tail=FALSE)