1215 Patterson Office Tower Lexington, KY, USA, 40506-0027 [email protected] http://www.uky.edu/~tmclay/

NEWSLETTER, 28 March 2008

Dear Colleagues:

It is time once again to review our teaching evaluations and to see what we can learn from them.

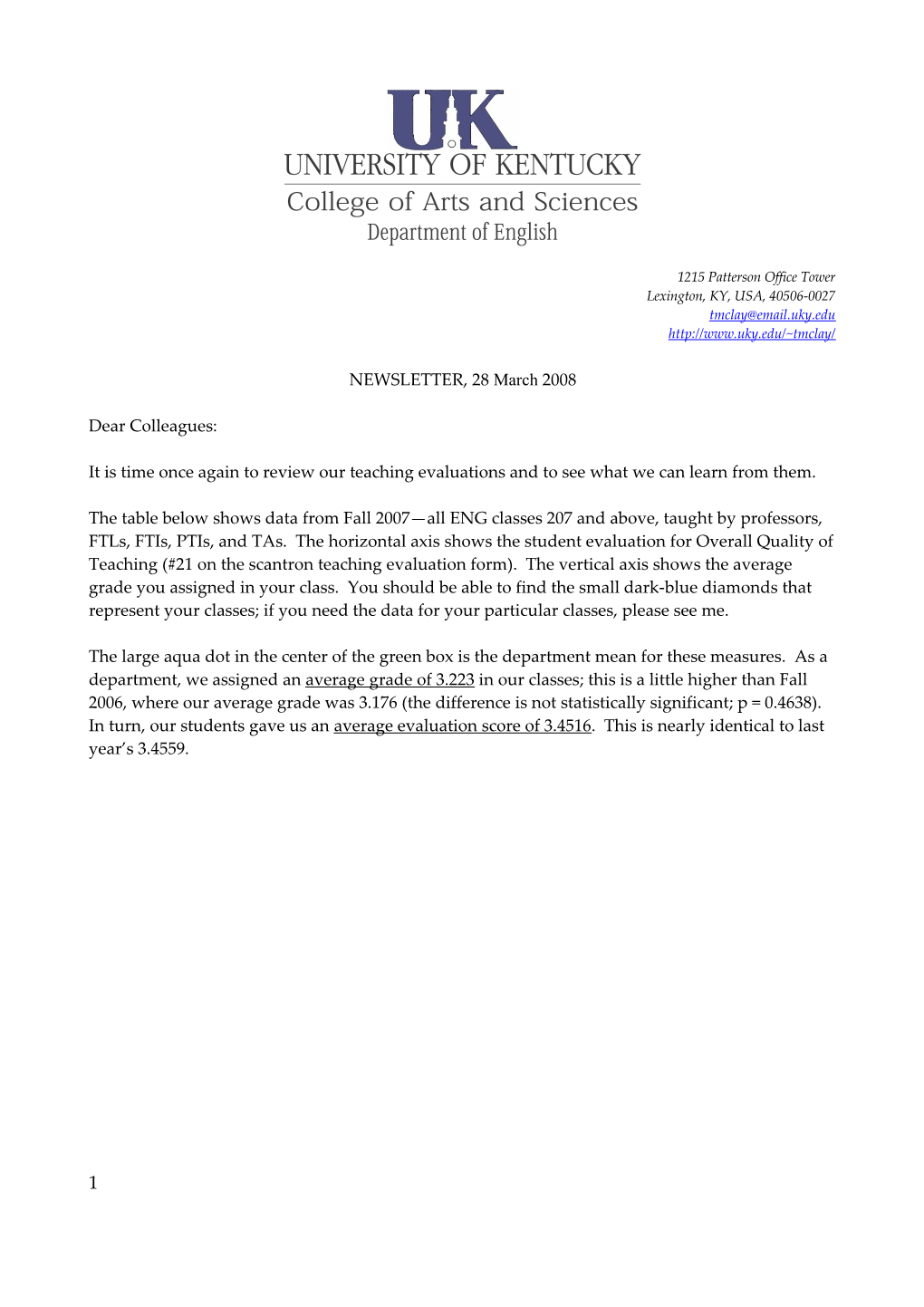

The chart below shows data from Fall 2007 for all ENG classes 207 and above, taught by professors, FTLs, FTIs, PTIs, and TAs. The horizontal axis shows the student evaluation for Overall Quality of Teaching (#21 on the scantron teaching evaluation form). The vertical axis shows the average grade you assigned in your class. You should be able to find the small dark-blue diamonds that represent your classes; if you need the data for your particular classes, please see me.

The large aqua dot in the center of the green box is the department mean for these measures. As a department, we assigned an average grade of 3.223 in our classes; this is a little higher than Fall 2006, where our average grade was 3.176 (the difference is not statistically significant; p = 0.4638). In turn, our students gave us an average evaluation score of 3.4516. This is nearly identical to last year’s 3.4559.

The ascending blue line shows a weak correlation between GPA and student evaluations. We saw this last year, too: when students get higher grades, they perceive their teachers to have provided a higher quality of teaching, and vice versa.
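For readers who want to see how a trendline like this is computed, here is a minimal Python sketch. The evaluation scores and GPAs in it are invented for illustration (they are not the department's data), and the function names are hypothetical. It assumes the line is an ordinary least-squares fit of GPA on evaluation score, which the chart itself does not confirm.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def trendline(xs, ys):
    """Least-squares slope and intercept for predicting ys from xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept

# Hypothetical classes: evaluation score (horizontal axis), average GPA (vertical axis).
evals = [2.8, 3.1, 3.4, 3.5, 3.7, 3.9]
gpas = [3.0, 3.4, 2.9, 3.3, 3.2, 3.6]

r = pearson_r(evals, gpas)
slope, intercept = trendline(evals, gpas)
print(f"r = {r:.2f}, GPA = {slope:.2f} * eval + {intercept:.2f}")
```

Values of r near 0 indicate a weak linear relationship; values near 1 indicate a strong one.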

The green box encloses course data that fall within one standard deviation of the mean on both measures (standard deviation, or SD, is roughly the typical distance of the data points from the mean). In a perfectly normal distribution, about 68 percent of data fall within one SD of the mean. We are a little low, with about 58 percent of classes within one SD of each mean; apparently, we aren’t perfect. In any event, as last year, I will consider these classes “normal”: they are not far from the means, and thus they do not invite any particular scrutiny.
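As an illustration of how the share of classes inside the box can be counted, here is a short Python sketch. The six classes are invented, the function name is hypothetical, and the sketch assumes the population standard deviation (`pstdev`); the newsletter does not say whether the population or sample formula was used.

```python
from statistics import mean, pstdev

def share_inside_box(evals, gpas):
    """Fraction of classes within one SD of BOTH the evaluation mean and the GPA mean."""
    e_mean, e_sd = mean(evals), pstdev(evals)
    g_mean, g_sd = mean(gpas), pstdev(gpas)
    inside = [
        abs(e - e_mean) <= e_sd and abs(g - g_mean) <= g_sd
        for e, g in zip(evals, gpas)
    ]
    return sum(inside) / len(inside)

# Hypothetical data: one (evaluation, GPA) pair per class.
evals = [2.0, 3.0, 3.4, 3.5, 3.6, 3.9]
gpas = [3.5, 3.1, 3.2, 3.3, 2.4, 3.9]

share = share_inside_box(evals, gpas)
print(f"{share:.0%} of classes fall inside the box")
```

A class counts as inside only when it is within one SD on both axes, so a class with a typical evaluation but an unusual GPA still lands outside the box.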

If your classes are outside the box, however, they do invite scrutiny. Of course I realize there may be good reasons for this. In one class, for example (data point 2.0 eval, 3.5 GPA), only one student evaluation was turned in; this data is not reliable. In another class (data point 3.5 eval, 2.365 GPA), five students failed, and this pulled the GPA down about half a point.
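The half-point figure can be checked with simple arithmetic. The sketch below assumes a failing grade counts as 0.0 grade points and uses a hypothetical enrollment of 29, since the class size is not given here; only the 2.365 GPA and the five failures come from the paragraph above.

```python
def gpa_without_failures(class_gpa, n_students, n_failed):
    """Average GPA of the remaining students, assuming each F counts as 0.0 points."""
    total_points = class_gpa * n_students  # the Fs contribute nothing to this total
    return total_points / (n_students - n_failed)

class_gpa = 2.365  # from the newsletter
n_failed = 5       # from the newsletter
n_students = 29    # hypothetical enrollment, not stated in the newsletter

passing_gpa = gpa_without_failures(class_gpa, n_students, n_failed)
drop = passing_gpa - class_gpa
print(f"GPA without the failures: {passing_gpa:.3f} (a difference of {drop:.3f})")
```

With a smaller class, the same five failures would pull the average down further; with a larger class, less.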

In general, though, I encourage your pedagogical reflection and attention if your classes are falling very far outside the green box. I will discuss each of the four Quadrants outside the box in a moment.

Before moving on to the Quadrants, however, let me introduce one further data set. The table below shows the actual GPA you assigned in your classes versus the GPA your students expected in your class (from the “expected grade” section on the first page of the scantron summary form), as well as the spread between the two. The final column gives a p value from t-tests of the respective data sets. T-tests compare the means of two sets of data; two data sets are considered statistically significantly different when the p value is less than 0.05, that is, when a difference in means this large would arise by chance less than 5 percent of the time if the two sets really had the same mean.
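To make the comparison concrete, here is a minimal sketch of a two-sample test on invented grade data. It is not necessarily the test used for the table: it assumes Welch's unequal-variance t statistic and approximates the p value with a normal distribution, which is reasonable only for large samples. The function name `welch_t` and both data lists are hypothetical.

```python
import math
from statistics import NormalDist, mean, variance

def welch_t(a, b):
    """Return (t, approximate two-sided p) for two independent samples."""
    # Standard error of the difference in means, allowing unequal variances.
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    t = (mean(a) - mean(b)) / se
    # Normal approximation to the t distribution (an assumption of this sketch).
    p = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, p

# Hypothetical per-class GPAs: what was assigned vs. what students expected.
actual = [3.0, 3.2, 3.1, 3.4, 2.9, 3.3, 3.0, 3.2]
expected = [3.4, 3.5, 3.3, 3.6, 3.4, 3.5, 3.3, 3.6]

t, p = welch_t(actual, expected)
verdict = "significant" if p < 0.05 else "not significant"
print(f"t = {t:.2f}, p = {p:.5f} ({verdict} at the 0.05 level)")
```

A negative t here simply means the first sample (actual grades) has the lower mean.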

Table 1: Actual v. Expected Grades

| | Actual GPA | Expected GPA | Spread | p = |
|---|---|---|---|---|
| All Classes | 3.2226 | 3.4331 | 0.2105 | 0.00037998 |
| Quadrant 1 | 2.7354 | 3.1349 | 0.3995 | 0.00930374 |
| Quadrant 2 | 3.5648 | 3.6174 | 0.0526 | 0.77654871 |
| Quadrant 3 | 3.9251 | 3.8023 | -0.1228 | 0.04706142 |
| Quadrant 4 | 2.5723 | 3.1423 | 0.5700 | 0.00000151 |

As you can see, students aggregated from all our classes expected to receive higher grades than you actually gave them, and the difference is statistically significant (p = 0.00037998). A gap this large would turn up by chance only about 0.04 percent of the time if expected and actual grades truly matched, so something other than chance almost certainly explains it. Perhaps students misjudged the quality of their final paper, for instance, or forgot just how many classes they missed.
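The spread column and the significance calls can be reproduced directly from the figures in Table 1. The sketch assumes that spread means expected GPA minus actual GPA (which matches the printed values) and applies the 0.05 threshold described earlier; the names `TABLE_1`, `ALPHA`, and `recompute` are hypothetical.

```python
# Rows of Table 1: (actual GPA, expected GPA, printed spread, printed p value).
TABLE_1 = {
    "All Classes": (3.2226, 3.4331, 0.2105, 0.00037998),
    "Quadrant 1": (2.7354, 3.1349, 0.3995, 0.00930374),
    "Quadrant 2": (3.5648, 3.6174, 0.0526, 0.77654871),
    "Quadrant 3": (3.9251, 3.8023, -0.1228, 0.04706142),
    "Quadrant 4": (2.5723, 3.1423, 0.5700, 0.00000151),
}
ALPHA = 0.05  # significance threshold named in the newsletter

def recompute(table, alpha=ALPHA):
    """Return {row: (spread, significant)} with spread = expected - actual."""
    return {
        name: (round(expected - actual, 4), p < alpha)
        for name, (actual, expected, _printed_spread, p) in table.items()
    }

results = recompute(TABLE_1)
for name, (spread, significant) in results.items():
    label = "significant" if significant else "not significant"
    print(f"{name:<12} spread {spread:+.4f}  {label}")
```

Only Quadrant 2 fails to clear the threshold; Quadrant 3 is the one row where the spread is negative, meaning students received more than they expected.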

Let’s keep these data on grade expectations in mind as we consider classes that fell more than one standard deviation from the means.

Some of you in Quadrant 1 are new to UK and may be operating with a different grading paradigm in mind; that is, our grading conventions may differ from those where you taught previously. In Table 1 (above), note the relatively large spread between actual and expected grades in Quadrant 1. In at least some of these classes, students’ grade expectations might be more accurate for our institution than the grades you actually gave. This semester, you might experiment by adjusting your grades up a bit; the trendline suggests that your student evaluations may follow.

Most of you in Quadrant 3 are teaching graduate seminars or creative writing classes, and I know there are good reasons for giving A’s in these classes. I would point out, however, that in these classes students received significantly higher grades than they expected (p = 0.04706). In other words, some of you are giving grades that even students don’t think they deserve. Check out that “expected grade” section on the back page of the scantron summary sheet, and think about it next time you assign your grades.

Those of you in Quadrant 4 received very high evaluations from your students while at the same time giving fairly low grades. On one hand, you deserve our respect for inspiring your students without bribing them. Do note, however, the huge spread between expected and actual grades for this quadrant in Table 1. Why did students expect their grades to be so much higher? It may have been their midterm grades, which in several Quadrant 4 classes were notably higher than the final grades. For those of you in this quadrant, be sure that you are not raising students’ grade expectations unfairly with midterm marks that are more generous than the grades you will ultimately give.

Finally, you do not want to be in Quadrant 2, where students returned low evaluations despite receiving high grades. The department will be working with teachers in Quadrant 2 independently on improving pedagogical practice. That’s all for now. Please write or stop by with questions.

Tom Clayton
