OEM Ready Software Qualification Tool

Instruction Manual

1. Machine Setup

1.1. Perform a clean install of Microsoft Windows Vista SP1 Ultimate (32-bit edition).

1.2. Install the Microsoft OEM Ready Qualification Tool.

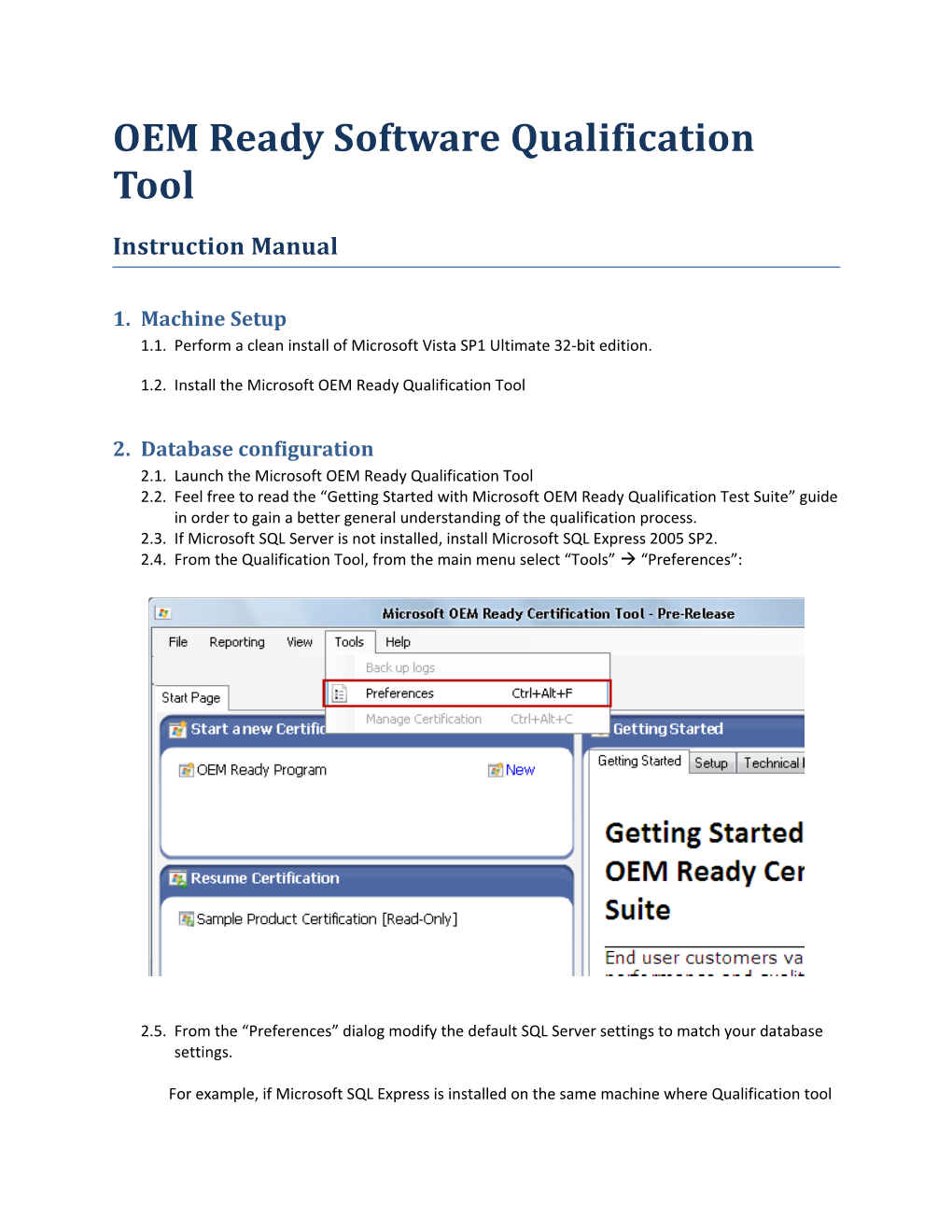

2. Database configuration

2.1. Launch the Microsoft OEM Ready Qualification Tool.

2.2. Optionally, read the “Getting Started with Microsoft OEM Ready Qualification Test Suite” guide for a general overview of the qualification process.

2.3. If Microsoft SQL Server is not installed, install Microsoft SQL Server 2005 Express Edition SP2.

2.4. From the Qualification Tool main menu, select “Tools” > “Preferences”.

2.5. From the “Preferences” dialog modify the default SQL Server settings to match your database settings.

For example, if Microsoft SQL Express is installed on the same machine as the Qualification Tool, specify the following settings:

Server Name: (local)\sqlexpress

Authentication: select “Windows Authentication” from the drop-down list.

2.6. Click the Connect button. A confirmation dialog should appear, reporting that the test connection succeeded.

If you did not receive this confirmation, verify your connection string and your SQL Server installation.

2.7. Close the dialog by clicking OK.

2.8. If this is the first time you are using the Qualification Tool on this machine, select the “Create Database” option.

2.9. Type a name for the new database.

2.10. Click the “Create” link to create the database.

2.11. A message should confirm that the database was created successfully.

2.12. Close the confirmation dialog by clicking the OK button.

2.13. If you have already used the Qualification Tool and would like to add data to an existing database, choose the “Select Database” option and then select the database you would like to work with from the drop-down list.

2.14. Once the database is configured, the Preferences dialog should look similar to the image below.

Figure 1: Preferences dialog after establishing connection to the CleanRun database (in this case, a local database of Microsoft SQL Express).
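If the connection test in step 2.6 fails, it can help to write out the settings from step 2.5 as a single connection string and compare it with your SQL Server setup. The sketch below is a minimal illustration that assumes a standard ADO.NET SqlClient-style connection string (keywords `Server`, `Database`, `Integrated Security`, `User ID`, `Password`). How the Qualification Tool stores these settings internally is not documented here, and `build_connection_string` is a hypothetical helper.

```python
from typing import Optional


def build_connection_string(server: str,
                            database: Optional[str] = None,
                            windows_auth: bool = True,
                            user: Optional[str] = None,
                            password: Optional[str] = None) -> str:
    """Compose an ADO.NET-style SQL Server connection string (illustrative only)."""
    parts = [f"Server={server}"]
    if database:
        parts.append(f"Database={database}")
    if windows_auth:
        # Corresponds to choosing "Windows Authentication" in Preferences.
        parts.append("Integrated Security=SSPI")
    else:
        # SQL Server Authentication needs explicit credentials.
        if not user or password is None:
            raise ValueError("SQL Server authentication requires a user and password")
        parts.append(f"User ID={user}")
        parts.append(f"Password={password}")
    return ";".join(parts) + ";"


if __name__ == "__main__":
    # Settings from step 2.5 plus the CleanRun database shown in Figure 1.
    print(build_connection_string(r"(local)\sqlexpress", "CleanRun"))
```

With these example settings the script prints `Server=(local)\sqlexpress;Database=CleanRun;Integrated Security=SSPI;`.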

3. Log and report location configuration

3.1. In the Preferences dialog, enter the path to the folder where the report and log files will be stored.

It is recommended that the name of this folder include the name of the application being tested.

For instance, if foo.exe is being tested, the target folder could be named c:\FooCertification.

3.2. You can either type the folder path in the edit box or navigate to the target folder using the Browse button. With the Browse approach, you can also create a new folder: navigate to the directory where the new folder should be located and click the “Make New Folder” button.

Figure 2: Everything should be configured in the Preferences dialog: SQL Server and Database settings, as well as the folder path where all logs and reports for the Foo app will be placed.

3.3. Click on OK to close the Preferences dialog.

4. Test tool configuration

4.1. In the Getting Started pane (on the right), click the Setup tab.

4.2. Click the “Preparing the test environment” button and follow the instructions to download and install all required tools.

4.3. In the right pane of the Getting Started page, click the Tool Box tab.

4.4. The path must be set for every test tool in the Qualification Tool. To do so, follow these steps:

4.4.1. Click a tool in the “Name” column (in the picture below, Orca is selected as an example).

4.4.2. In the Getting Started pane, the Misc section lets you modify the tool's default settings. To do so, click the Path row on the right.

4.4.3. Click the Browse button in the Path row.

4.4.4. Navigate to the folder where the binaries for the specific tool are located.

4.4.5. Select the executable and click Open.

4.4.6. The path for that test tool is now defined.

4.4.7. Repeat steps 4.4.1 to 4.4.6 for each tool listed in Table 1 below.
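Before browsing to each binary, you may want to check which tools are actually present at the typical locations listed in Table 1 below. The following Python sketch assumes the 32-bit paths from that table (edit them for 64-bit systems or custom installs). `missing_tools` is a hypothetical helper, and Driver Verifier, for which Table 1 gives only `verifier.exe`, is looked up on the `PATH`.

```python
import os
import shutil
from pathlib import Path, PureWindowsPath

# Typical 32-bit locations from Table 1; adjust them to match your machine.
TYPICAL_TOOL_PATHS = {
    "Orca": r"C:\Program Files\Orca\Orca.exe",
    "Log Parser": r"C:\Program Files\Log Parser 2.2\LogParser.exe",
    "Application Verifier": r"C:\WINDOWS\system32\appverif.exe",
    "Signtool": r"C:\tools\signtool.exe",
    "SigCheck": r"C:\tools\sigcheck.exe",
    "Driver Verifier": "verifier.exe",  # no folder given, so it is looked up on PATH
    "Windows Debugger": r"C:\Program Files\Debugging Tools for Windows (x86)\windbg.exe",
    "Appverifier Logs Parser": r"C:\Program Files\Microsoft\Certification Tool\bin\Scripts\AppVerifierLogsParser.exe",
    "Restart Manager Test": r"C:\Program Files\Microsoft Corporation\Logo Testing Tools for Windows\Restart Manager\x86\RMTool.exe",
}


def is_absolute(raw: str) -> bool:
    # Treat both native absolute paths and Windows "C:\..." paths as absolute.
    return os.path.isabs(raw) or PureWindowsPath(raw).is_absolute()


def missing_tools(tool_paths: dict) -> list:
    """Return the names of tools whose binary cannot be found."""
    missing = []
    for name, raw in tool_paths.items():
        if is_absolute(raw):
            found = Path(raw).is_file()
        else:
            found = shutil.which(raw) is not None
        if not found:
            missing.append(name)
    return missing


if __name__ == "__main__":
    for name in missing_tools(TYPICAL_TOOL_PATHS):
        print(f"Not at typical location: {name} ({TYPICAL_TOOL_PATHS[name]})")
```

Any tool the script reports still needs its real location set through steps 4.4.1 to 4.4.6.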

Table 1 lists the typical file path for each binary. Note that these paths will differ slightly on 64-bit operating systems.

| Application Name | File Path |
|---|---|
| Orca | C:\Program Files\Orca\Orca.exe |
| Log Parser | C:\Program Files\Log Parser 2.2\LogParser.exe |
| Application Verifier | C:\WINDOWS\system32\appverif.exe |
| Signtool | C:\tools\signtool.exe |
| SigCheck | C:\tools\sigcheck.exe |
| Driver Verifier | verifier.exe |
| Windows Debugger | C:\Program Files\Debugging Tools for Windows (x86)\windbg.exe |
| Appverifier Logs Parser | C:\Program Files\Microsoft\Certification Tool\bin\Scripts\AppVerifierLogsParser.exe |
| Restart Manager Test | C:\Program Files\Microsoft Corporation\Logo Testing Tools for Windows\Restart Manager\x86\RMTool.exe |

Table 1: Paths to binaries for required test tools.

5. Creating the New Qualification Project

5.1. In the left pane, in the “Start a new Certification” window, click the New button.

5.2. The New Qualification dialog should open.

5.3. In the Certification Name field, type a name for the project. It is recommended that the project name relate to the name of the application being tested.

Figure 4: The “New Certification” dialog. In this case, the certification name is set to “Foo App”.

5.4. The logs for this Qualification project will be located in:

For example, if the folder name was defined as “c:\FooCertification” and the Certification project name was defined as “Foo App” then all logs and reports which are related to the tested application Foo will be found under:

c:\FooCertification\Foo App_Logs
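The log folder location follows directly from the folder path set in section 3 and the certification name. A minimal sketch, assuming the `<folder path>\<certification name>_Logs` pattern shown in the example above and the `BackupLogs` subfolder described in section 8.4. The helper names are hypothetical.

```python
from pathlib import PureWindowsPath


def project_log_dir(folder_path: str, certification_name: str) -> PureWindowsPath:
    """Log folder for a project: folder path + '<certification name>_Logs'."""
    return PureWindowsPath(folder_path) / f"{certification_name}_Logs"


def backup_log_dir(folder_path: str, certification_name: str) -> PureWindowsPath:
    """Archive folder for logs from earlier runs (see section 8.4)."""
    return project_log_dir(folder_path, certification_name) / "BackupLogs"


if __name__ == "__main__":
    print(project_log_dir(r"c:\FooCertification", "Foo App"))
    print(backup_log_dir(r"c:\FooCertification", "Foo App"))
```

`PureWindowsPath` is used so the Windows-style paths are built the same way regardless of the machine the sketch runs on.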

6. Product details configuration

6.1. The Product details section offers three options:

6.1.1. Automatically detect files installed during installation

Select this option if you’d like to run automated test cases to verify the tested application. The application must already be installed on the machine when carrying out this type of testing.

Specify the full path to the manifest file, including the file name. By default, the manifest file is created in the same folder where the logs and reports are placed. The default manifest file name is “submission.xml”.

To change the default location of the manifest file, click the Browse button and navigate to the desired location.

Note that the manifest file name cannot be changed; it is always submission.xml.

The manifest file will include all logs, test results, and other information gathered about the installed applications during the collection phase.

It is recommended that you select the same folder that was previously selected to store logs and reports to keep all application/qualification related information in the same place.

6.1.2. Provide an existing manifest

If you have already run a test that generated the manifest file and need to run the tool again, you can reuse the existing manifest. In this case, provide the full path to the manifest file. It must be the same manifest file path that was specified in step 6.1.1.

6.1.3. Run all test cases manually

Select this option if you plan to run all test cases manually and do not wish to take advantage of any of the automated tests.

Note: if you select this option, none of the automated test cases can be run for this Qualification Project. If you later decide to run automated tests, you must create a new Qualification Project.

6.2. Once you have selected the option that best suits your needs, click the OK button.

6.3. If option 6.1.1 (Automatically detect files installed during installation) was selected, follow these steps:

6.3.1. The Application Selection Tool wizard should now open. Click the Next button.

6.3.2. The wizard will collect information about the applications installed on your machine. This process may take a few minutes to complete.

6.3.3. Once the collection process has finished, a list of the applications installed on this machine will be displayed.

6.3.4. Select the application to be tested from the list, then click the Next button.

6.3.5. The list of files that belong to the selected application will be shown. If any related files are missing from the list, they must be added manually.

KNOWN LIMITATION: if an application contains drivers, each .sys and .cat file must be added manually.

6.3.6. To add files manually, click the “Add a file” button.

6.3.7. Navigate to the file to add, select it, and click the Open button to add it to the list.

6.3.8. If a file was added by mistake, select it in the Application Selection Tool dialog and click the “Remove file” button. Note that only manually added files can be removed.

6.3.9. Once all binaries belonging to the application are listed, click the “Export XML” button. The Application Selection Tool dialog will close.
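Because of the known limitation in step 6.3.5, every .sys and .cat file must be added by hand, so it helps to list them before clicking “Add a file”. This is a sketch that only scans the folder you give it. Drivers are often installed outside the application folder (for example under System32\drivers), so you may need to run it against more than one location. `find_driver_files` is a hypothetical helper.

```python
from pathlib import Path

# Driver-related extensions that, per the known limitation in step 6.3.5,
# must be added to the file list by hand.
DRIVER_SUFFIXES = {".sys", ".cat"}


def find_driver_files(install_dir: str) -> list:
    """Return every .sys/.cat file under install_dir (searched recursively)."""
    root = Path(install_dir)
    return sorted(
        p for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in DRIVER_SUFFIXES
    )
```

For example, `find_driver_files(r"C:\Program Files\Foo")` would list candidate files for a hypothetical Foo install folder; each result can then be added through the “Add a file” button.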

6.4. Once the file collection process is complete (if option 6.1.1 was selected), or if option 6.1.2 or 6.1.3 was selected, the left pane will list the test cases to run.

6.5. If option 6.1.1 or 6.1.2 was selected, test cases 1.1.1, 1.2.1, 1.2.3, and 3.1.1 can be executed automatically. If option 6.1.3 was selected, all test cases must be executed manually.
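The rule in step 6.5 can be summarized as a small predicate: a test case runs automatically only if it is one of the four automated cases and the project was not created with option 6.1.3. The sketch below also records the mapping to the OEM Ready Program Documentation tests given in the note in section 7. The function and parameter names (`can_run_automatically`, `manual_only`, `program_test`) are illustrative, not part of the Qualification Tool.

```python
from typing import Optional

# Automated Qualification Tool test cases mapped to the OEM Ready Program
# Documentation tests they cover (from the note in section 7).
AUTOMATED_TEST_CASES = {
    "1.1.1": "1",
    "1.2.1": "2.1",
    "1.2.3": "2.3",
    "3.1.1": "7",
}


def can_run_automatically(test_case: str, manual_only: bool) -> bool:
    """True if the test case can be automated for this project.

    manual_only reflects option 6.1.3 (Run all test cases manually).
    """
    return not manual_only and test_case in AUTOMATED_TEST_CASES


def program_test(test_case: str) -> Optional[str]:
    """OEM Ready Program test number for an automated case, else None."""
    return AUTOMATED_TEST_CASES.get(test_case)
```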

7. Running the Automation

7.1. Once the XML file is generated, the Qualification Tool will display the OEM Ready tests as shown below.

7.2. In the left pane, select the test case to run.

NOTE: currently, only 1.1.1, 1.2.1, 1.2.3, 3.1.1 are automated. These test cases correspond to tests 1, 2.1, 2.3, and 7 from the OEM Ready Program Documentation.

7.3. In the right pane, click the Test Steps tab.

7.4. The right pane contains the manual steps required to perform the test case, as shown in the figure below:

Figure 5: Test case 1.1.1 was selected to run

7.5. To run the test case automatically, click the green arrow button shown in Figure 6.

Figure 6: The green button to run automated test case 1.1.1 is located in the upper right corner.

7.6. Some automated test cases launch tools that display interactive dialogs. It is imperative that you do NOT interact with the machine until the test case is complete.

7.7. At the end of the test case, a message box will appear indicating whether the test case passed or failed.

7.8. Results are automatically uploaded to the database of the Qualification tool.

7.9. The path to the latest log file is also automatically uploaded to the Qualification tool’s database.

7.10. To display the results in the Qualification Tool, click the Test Result tab in the left pane.

7.11. To see the path to the log file in the Qualification Tool, click the Test Result tab in the left pane. The path is listed in the Logs/Other files section at the bottom of the window.

Figure 7: In this example, test case 1.1.1 failed. Path to the log file is at the bottom. (c:\FooCertification\Foo App_Logs\Foo App_Test Case_1.1.1_Log.xml)

7.12. Paths to additional logs or files, such as supporting documents, can be added to the Logs/Other files section as needed. Every file listed in this section will be included in the Submission package.

7.13. When another test case is selected from the right pane, the title of the previously executed test case is displayed in green if it passed or in red if it failed.
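Figure 7 shows one automated log file name, c:\FooCertification\Foo App_Logs\Foo App_Test Case_1.1.1_Log.xml. Assuming other automated test cases follow the same pattern (an inference from that single example, not a documented rule), you can predict where a log should appear, for instance when checking the Logs/Other files section before building the Submission package. `expected_log_path` is a hypothetical helper.

```python
from pathlib import PureWindowsPath


def expected_log_path(folder_path: str, certification_name: str,
                      test_case: str) -> PureWindowsPath:
    """Expected log path for an automated test case.

    The file-name pattern is inferred from the single example in Figure 7
    and is an assumption, not a documented rule.
    """
    log_dir = PureWindowsPath(folder_path) / f"{certification_name}_Logs"
    return log_dir / f"{certification_name}_Test Case_{test_case}_Log.xml"


if __name__ == "__main__":
    print(expected_log_path(r"c:\FooCertification", "Foo App", "1.1.1"))
```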

8. Verifying and Analyzing Logs

8.1. To view the logs in a more user-friendly format, use SvcTraceViewer.exe, which is included with the Windows SDK.

8.2. All logs can be found under

8.3. Each automated test case checks every executable and reports the results to the log file. If any of the executables or drivers that belong to the application fails the test, the entire test case fails.

8.4. If the automation is run multiple times, logs from previous runs are archived in the directory

c:\FooCertification\Foo App_Logs\BackupLogs
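Step 8.3 describes an all-or-nothing rule: a test case passes only if every executable and driver checked for the application passes. A minimal sketch of that aggregation, assuming per-file results are available as a mapping from file name to a pass/fail boolean (the Qualification Tool's actual log format is not described here, and the function names are hypothetical):

```python
def case_passed(results: dict) -> bool:
    """A test case passes only if every checked file passed.

    results maps a file name (executable or driver) to its pass/fail result.
    An empty dict counts as a failure here, since nothing was checked;
    that choice is an assumption of this sketch.
    """
    return bool(results) and all(results.values())


def failing_files(results: dict) -> list:
    """Names of the files that caused the test case to fail."""
    return sorted(name for name, passed in results.items() if not passed)


if __name__ == "__main__":
    run = {"foo.exe": True, "foohelper.exe": True, "foo.sys": False}
    print(case_passed(run))    # False: one driver failed
    print(failing_files(run))  # ['foo.sys']
```

Listing the failing files separately mirrors how you would read the log: the overall verdict first, then the individual binaries to investigate.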

9. Running the Automation Multiple Times

9.1. The automation can be run multiple times for the same application as needed.

9.2. Results for the same test case may differ between runs if the list of application files has changed since the last run or if the manifest file (submission.xml) was changed. In such cases, the previous results in the database are overwritten.

9.3. If the Qualification Tool was closed and you need to continue with an existing project, launch the Qualification Tool and click the project name in the right pane. The test case tree will open, and any previously completed test cases will appear with their results.

10. Generating the Report and Submission Package

10.1. To generate the report for the qualification project, select Reporting > Generate Report from the main menu.

10.2. A save dialog will open, pointing by default to the folder that was previously defined. It is recommended to keep the report in the same folder as the log files that belong to the qualification project, i.e.

10.3. The default file name for a report is OEMReadyProgram-

10.4. Click the Save button.

10.5. The report will be generated and placed in the previously defined folder.

10.6. To generate a Submission package, select Reporting > Generate Submission Package from the main menu.

10.7. A save dialog will open, pointing by default to the folder that was previously defined. It is recommended to keep the submission file in the same folder as the log files that belong to the qualification project, i.e.

10.8. The default file name for a submission package is OEMReadyProgram-

10.9. Click the Save button.

10.10. The Submission package will be generated and placed in the previously defined folder.

10.11. The Submission package will include the final report and all logs that were uploaded to the Qualification Tool for both automatic and manual results.

NOTE: paths to log files for automatically run test cases are added to the Qualification Tool database as soon as each test case completes. However, paths to log files created during manual execution must be added manually. It is recommended to store these logs in the folder previously defined for the project.

10.12. NOTE: The submission package will include only those files and logs that are listed in the Logs/Other files section of the Qualification Tool.

10.13. NOTE: To access the Logs/Other files section:

Select any test case

In the right pane, click on Test Results

Scroll to the bottom; the last section is Logs/Other files.

To add files, click on the Browse button and add files.

11. FAQ

11.1. Q: How can I find out where the log files are saved?

A: From the Qualification Tool menu, click File > Close to close the current project. Then click Tools > Preferences. The Preferences dialog displays all settings; the root folder for all logs and reports is shown under “folder path”.

11.2. Q: While reviewing results and logs, I found that some of the tested executables do not belong to my application. How can I remove them from the results?

A: Open the manifest file (in our example, c:\FooCertification\Foo App\submission.xml; see Figure 4). This file lists the executables, drivers, catalogs, and shortcuts that belong to the tested application. “Noise” during the collection process may have added some redundant entries. Remove these entries carefully without modifying the XML tags. Once the file has been updated, you must re-run the automated test cases.

If files are missing, add their paths to the appropriate sections:

.exe files should be added to the Executables section

.sys files should be added to the Drivers section

.cat files should be added to the Catalogs section

.lnk files should be added to the Shortcuts section

It is recommended that you avoid direct modification of the manifest file.
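The extension rules above amount to a simple lookup. This sketch uses the section names as they appear in this manual; the exact XML element names in submission.xml are not documented here and may differ, so treat `manifest_section` as a hypothetical helper for deciding where a missing file belongs, not as a manifest editor.

```python
from pathlib import PureWindowsPath
from typing import Optional

# Extension -> manifest section, following the FAQ above. These are the
# section names used in this manual; the actual XML element names in
# submission.xml may differ.
SECTION_BY_EXTENSION = {
    ".exe": "Executables",
    ".sys": "Drivers",
    ".cat": "Catalogs",
    ".lnk": "Shortcuts",
}


def manifest_section(file_path: str) -> Optional[str]:
    """Section a missing file belongs in, or None if it has no section."""
    suffix = PureWindowsPath(file_path).suffix.lower()
    return SECTION_BY_EXTENSION.get(suffix)


if __name__ == "__main__":
    for f in [r"C:\Program Files\Foo\Foo.EXE",
              r"C:\Windows\System32\drivers\foo.sys",
              "readme.txt"]:
        print(f, "->", manifest_section(f))
```

The extension comparison is case-insensitive, matching how Windows treats file names.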

11.3. Q: It seems like the Qualification tool hangs during the test case execution. What should I do?

A: The test execution should not take longer than

11.4. Q: Many message dialogs open and close during automated testing. Is this normal?

A: Yes, this is normal. For some test cases, each executable that belongs to the application is launched and closed, and a command prompt (cmd) window is opened for each executable during the test. Do NOT interact with the machine while any tests are running, as this may adversely affect the test results.

11.5. Q: After running some test cases, some dialogs may be left open on the machine. Is this normal?

A: Yes, this is normal. Some test cases launch executables that belong to the application, and not all of them are closed at the end of the test. Once testing is complete (that is, once the result notification dialog is displayed), these dialogs can be closed.