POL 681 STATA NOTES: ORDINAL LOGIT and ORDINAL PROBIT

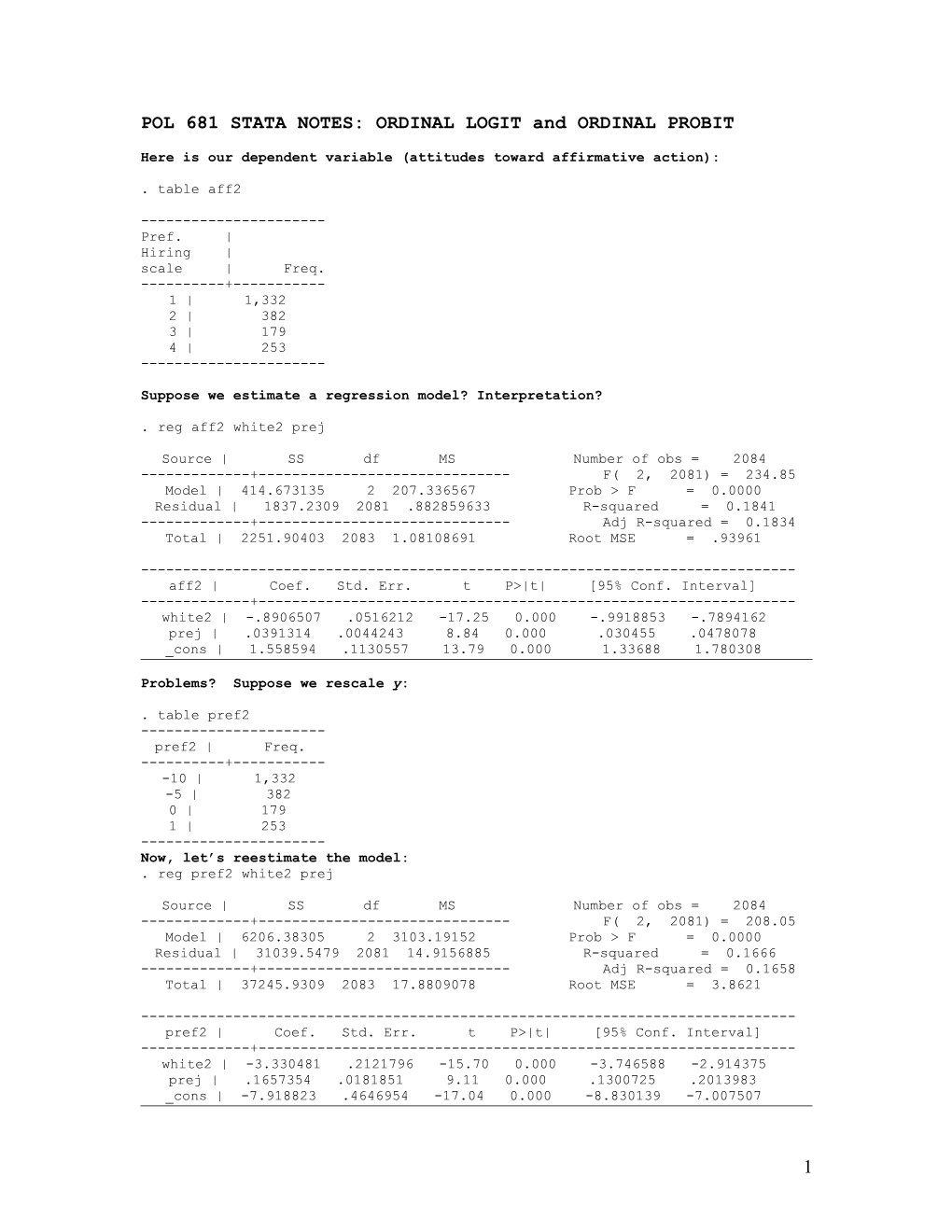

Here is our dependent variable (attitudes toward affirmative action):

. table aff2

------Pref. | Hiring | scale | Freq. ------+------1 | 1,332 2 | 382 3 | 179 4 | 253 ------

Suppose we estimate a regression model? Interpretation?

. reg aff2 white2 prej

Source | SS df MS Number of obs = 2084 ------+------F( 2, 2081) = 234.85 Model | 414.673135 2 207.336567 Prob > F = 0.0000 Residual | 1837.2309 2081 .882859633 R-squared = 0.1841 ------+------Adj R-squared = 0.1834 Total | 2251.90403 2083 1.08108691 Root MSE = .93961

------aff2 | Coef. Std. Err. t P>|t| [95% Conf. Interval] ------+------white2 | -.8906507 .0516212 -17.25 0.000 -.9918853 -.7894162 prej | .0391314 .0044243 8.84 0.000 .030455 .0478078 _cons | 1.558594 .1130557 13.79 0.000 1.33688 1.780308

Problems? Suppose we rescale y:

. table pref2 ------pref2 | Freq. ------+------10 | 1,332 -5 | 382 0 | 179 1 | 253 ------Now, let’s reestimate the model: . reg pref2 white2 prej

Source | SS df MS Number of obs = 2084 ------+------F( 2, 2081) = 208.05 Model | 6206.38305 2 3103.19152 Prob > F = 0.0000 Residual | 31039.5479 2081 14.9156885 R-squared = 0.1666 ------+------Adj R-squared = 0.1658 Total | 37245.9309 2083 17.8809078 Root MSE = 3.8621

------pref2 | Coef. Std. Err. t P>|t| [95% Conf. Interval] ------+------white2 | -3.330481 .2121796 -15.70 0.000 -3.746588 -2.914375 prej | .1657354 .0181851 9.11 0.000 .1300725 .2013983 _cons | -7.918823 .4646954 -17.04 0.000 -8.830139 -7.007507

1 Uh oh.

Just for a sneak peek, suppose I apply a model for ordinal categorical data (like ordinal logit or ordinal probit) to this newly created variable? First, let’s take the original variable:

. ologit aff2 white2 prej

Iteration 0: log likelihood = -2208.5474 Iteration 1: log likelihood = -2046.6793 Iteration 2: log likelihood = -2041.0539 Iteration 3: log likelihood = -2041.0492

Ordered logit estimates Number of obs = 2084 LR chi2(2) = 335.00 Prob > chi2 = 0.0000 Log likelihood = -2041.0492 Pseudo R2 = 0.0758

------aff2 | Coef. Std. Err. z P>|z| [95% Conf. Interval] ------+------white2 | -1.527657 .1085576 -14.07 0.000 -1.740426 -1.314888 prej | .0886513 .0101943 8.70 0.000 .0686709 .1086318 ------+------_cut1 | 1.200597 .2541018 (Ancillary parameters) _cut2 | 2.215088 .2574794 _cut3 | 2.950627 .2613229 ------

Now, let’s take the rescaled version: ologit pref2 white2 prej

Iteration 0: log likelihood = -2208.5474 Iteration 1: log likelihood = -2046.6793 Iteration 2: log likelihood = -2041.0539 Iteration 3: log likelihood = -2041.0492

Ordered logit estimates Number of obs = 2084 LR chi2(2) = 335.00 Prob > chi2 = 0.0000 Log likelihood = -2041.0492 Pseudo R2 = 0.0758

------pref2 | Coef. Std. Err. z P>|z| [95% Conf. Interval] ------+------white2 | -1.527657 .1085576 -14.07 0.000 -1.740426 -1.314888 prej | .0886513 .0101943 8.70 0.000 .0686709 .1086318 ------+------_cut1 | 1.200597 .2541018 (Ancillary parameters) _cut2 | 2.215088 .2574794 _cut3 | 2.950627 .2613229 ------

Moving on to probabilities. To keep things simple, I’m only going to use a single binary covariate. Let’s run the following model:

. ologit aff2 white2

Iteration 0: log likelihood = -2256.8146 Iteration 1: log likelihood = -2131.0014 Iteration 2: log likelihood = -2126.6825 Iteration 3: log likelihood = -2126.6804

2 Ordered logit estimates Number of obs = 2128 LR chi2(1) = 260.27 Prob > chi2 = 0.0000 Log likelihood = -2126.6804 Pseudo R2 = 0.0577

------aff2 | Coef. Std. Err. z P>|z| [95% Conf. Interval] ------+------white2 | -1.688567 .1051639 -16.06 0.000 -1.894684 -1.482449 ------+------_cut1 | -.839396 .0938652 (Ancillary parameters) _cut2 | .1540845 .0918702 _cut3 | .8629134 .0954182

As with many Stata commands, we could use predict to generate predicted probabilities. Note that I have to define the probabilities (why?): . predict p1 p2 p3 p4 (option p assumed; predicted probabilities) (26 missing values generated)

For illustrative purposes, let’s tabulate them by the value of the race indicator: . table p1 white2

------Pr(aff2== | white2 1) | 0 1 ------+------.301662 | 563 .7003931 | 1,896 ------

. table p2 white2

------Pr(aff2== | white2 2) | 0 1 ------+------.1628688 | 1,896 .2367831 | 563 ------

. table p3 white2

------Pr(aff2== | white2 3) | 0 1 ------+------.0644109 | 1,896 .1648239 | 563 ------

. table p4 white2

------Pr(aff2== | white2 4) | 0 1 ------+------.0723271 | 1,896 .296731 | 563 ------

3 Of course I could have computed these by hand using the following functions (for the case when white2=1):

. display 1/(1+exp(_b[white2]*1-_b[_cut1])) .70039314

. display 1/(1+exp(_b[white2]*1-_b[_cut2]))-1/(1+exp(_b[white2]*1-_b[_cut1])) .16286881

. display 1/(1+exp(_b[white2]*1-_b[_cut3]))-1/(1+exp(_b[white2]*1-_b[_cut2])) .06441093

. display 1-1/(1+exp(_b[white2]*1-_b[_cut3])) .07232712 Verify these are identical to the above calculations. Now let’s look at the cumulative probabilities (for the case of white2=0). The first cumulative probability is Pr(y<=1), which is:

. display p1 .301662

The second is Pr(y<=2): . display p1+p2 .53844507

The third is Pr(y<=3) . display p1+p2+p3 .70326896

The fourth is Pr(y<=4) (trivial case) . display p1+p2+p3+p4 .99999999

[Why must this one be 1?] Now look at the following. What is the difference between the second cumulative probability and the first?

.54-.30=.24

What is the difference between the third cumulative probability and the first and second?

.70-.54=.16

What is the difference between the fourth cumulative probability and the first three?

1.0-.70=.30.

What do these numbers correspond to?

<------> y* | | | 1 2 3

Pr=0 | | | Pr=1 Pr(y<=1) Pr(y<=2) Pr(y<=3) =.30 =.54 =.70 ------| | | .24 .16 .30

4 These numbers should look familiar. Return to the table of probabilities. The differences across the cumulative probabilities are equal to conditional probabilities. This is obvious. However, the important thing to note is that in this example, since white2=0, the only component that shifts the cumulative probabilities are the cutpoints. This raises a more general point: since the regression coefficients, x’β, are the same for all J cutpoints, changes to the cumulative probability curves will be solely due to the cutpoints. This is what is known as the “parallel slopes” assumption.

Let’s turn now to ordinal probit. Here I estimate a model with the same covariate as before. The coefficients are now expressed in terms of inverses of the cumulative normal distribution (i.e. 1/Φ).

. oprobit aff2 white2

Iteration 0: log likelihood = -2256.8146 Iteration 1: log likelihood = -2118.8459 Iteration 2: log likelihood = -2118.7591

Ordered probit estimates Number of obs = 2128 LR chi2(1) = 276.11 Prob > chi2 = 0.0000 Log likelihood = -2118.7591 Pseudo R2 = 0.0612

------aff2 | Coef. Std. Err. z P>|z| [95% Conf. Interval] ------+------white2 | -1.019296 .0612999 -16.63 0.000 -1.139441 -.8991502 ------+------_cut1 | -.4874818 .0552574 (Ancillary parameters) _cut2 | .1004991 .0548217 _cut3 | .4979683 .0557226 ------

I can use Stata to compute the probabilities (note again I must identify the probabilities):

. predict pr1 pr2 pr3 pr4 (option p assumed; predicted probabilities) (26 missing values generated)

For illustrative purposes, let’s tabulate them by the value of the race indicator:

. table pr1 white2 ------Pr(aff2== | white2 1) | 0 1 ------+------.3129585 | 563 .7025726 | 1,896 ------

. table pr2 white2

------Pr(aff2== | white2 2) | 0 1 ------+------.1660268 | 1,896

5 .2270675 | 563 ------

. table pr3 white2

------Pr(aff2== | white2 3) | 0 1 ------+------.0668006 | 1,896 .1507208 | 563 ------

. table pr4 white2

------Pr(aff2== | white2 4) | 0 1 ------+------.0646 | 1,896 .3092532 | 563 ------

I could also compute these probabilities using Stata as a calculator. For the case when white2=1, we obtain: . display norm(_b[_cut1]-_b[white2]*1) .70257259

. display norm(_b[_cut2]-_b[white2]*1)-norm(_b[_cut1]-_b[white2]*1) .16602683

. display norm(_b[_cut3]-_b[white2]*1)-norm(_b[_cut2]-_b[white2]*1) .06680057

. display 1-norm(_b[_cut3]-_b[white2]*1) .06460001

Verify that these agree with Stata’s computations. (They do).

One last little thing. There is an equivalency between the binary version of these models and the ordinal version. To “prove” this, return to logit. Let’s apply ologit to a binary dependent variable:

. ologit hihi white2

Iteration 0: log likelihood = -673.904 Iteration 1: log likelihood = -660.43261 Iteration 2: log likelihood = -659.29047 Iteration 3: log likelihood = -659.28661

Ordered logit estimates Number of obs = 2459 LR chi2(1) = 29.23 Prob > chi2 = 0.0000 Log likelihood = -659.28661 Pseudo R2 = 0.0217

------hihi | Coef. Std. Err. z P>|z| [95% Conf. Interval] ------+------white2 | -.8732477 .1561835 -5.59 0.000 -1.179362 -.5671336 ------+------_cut1 | 1.857531 .1233321 (Ancillary parameter)

Now let’s apply logit:

6 . logit hihi white2

Iteration 0: log likelihood = -673.904 Iteration 1: log likelihood = -660.43261 Iteration 2: log likelihood = -659.29047 Iteration 3: log likelihood = -659.28661

Logit estimates Number of obs = 2459 LR chi2(1) = 29.23 Prob > chi2 = 0.0000 Log likelihood = -659.28661 Pseudo R2 = 0.0217

------hihi | Coef. Std. Err. z P>|z| [95% Conf. Interval] ------+------white2 | -.8732477 .1561835 -5.59 0.000 -1.179362 -.5671336 _cons | -1.857531 .1233321 -15.06 0.000 -2.099257 -1.615804

What is the difference? The coefficient is identical; however, in the ordered logit model, _cut1=-b0. Why?

The answer is straightforward. Now figure it out.

7