Trinity College, Dublin Generic Skills Programme Statistics for Research Students

Laboratory 5: Feedback

1.1 Calculating the power to detect an unacceptably high nonconformance rate

Calculating nonconformance rates

| Process Mean | 70 | 75 | 80 | 85 | 90 |
|---|---|---|---|---|---|
| Non-conformance Rate, % | 0.6 | 2.0 | 8.5 | 25 | 50 |

Comment on the acceptability of nonconformance rates such as these in modern industry.

In an era when hi-tech industries strive for "parts per million" non-conformance, a rate of 0.6% may be acceptable, 2% is not, and 8.5% is unrealistic.

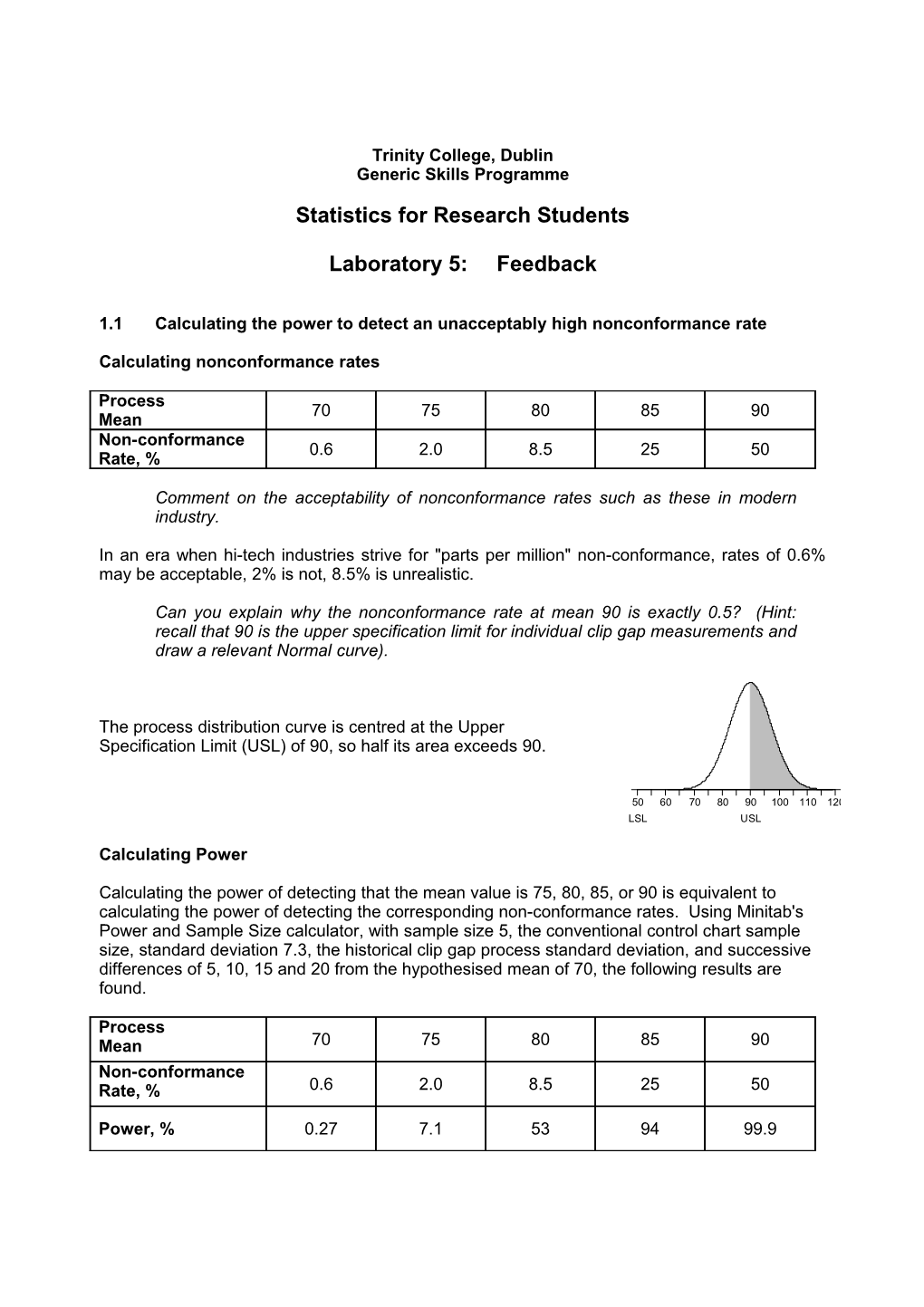

Can you explain why the nonconformance rate at mean 90 is exactly 0.5? (Hint: recall that 90 is the upper specification limit for individual clip gap measurements and draw a relevant Normal curve).

The process distribution curve is centred at the Upper Specification Limit (USL) of 90, so half its area exceeds 90.

[Figure: Normal curve of the process distribution on an axis from 50 to 120, with LSL and USL marked]
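The tabulated non-conformance rates can be reproduced, approximately, from Normal tail areas. The sketch below assumes a Normal process with standard deviation 7.3 and an upper specification limit of 90, both taken from the text; the lower specification limit of 50 is an assumption read from the plot axis, and the function name is illustrative only.

```python
from statistics import NormalDist

SIGMA = 7.3        # historical clip gap process standard deviation (from the text)
USL = 90.0         # upper specification limit (from the text)
LSL = 50.0         # ASSUMPTION: lower specification limit, read from the plot axis


def nonconformance_rate(process_mean, sigma=SIGMA, lsl=LSL, usl=USL):
    """Proportion of individual measurements falling outside [lsl, usl]."""
    dist = NormalDist(mu=process_mean, sigma=sigma)
    below = dist.cdf(lsl)          # area below the lower specification limit
    above = 1.0 - dist.cdf(usl)    # area above the upper specification limit
    return below + above


rates = {mean: nonconformance_rate(mean) for mean in (70, 75, 80, 85, 90)}
for mean, rate in rates.items():
    print(f"process mean {mean}: non-conformance rate {100 * rate:.1f}%")
```

At mean 90 the upper tail is exactly half the distribution, and the lower tail is negligible, which is why the rate is 50%.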

Calculating Power

Calculating the power of detecting that the mean value is 75, 80, 85, or 90 is equivalent to calculating the power of detecting the corresponding non-conformance rates. Using Minitab's Power and Sample Size calculator, with sample size 5, the conventional control chart sample size, standard deviation 7.3, the historical clip gap process standard deviation, and successive differences of 5, 10, 15 and 20 from the hypothesised mean of 70, the following results are found.

| Process Mean | 70 | 75 | 80 | 85 | 90 |
|---|---|---|---|---|---|
| Non-conformance Rate, % | 0.6 | 2.0 | 8.5 | 25 | 50 |
| Power, % | 0.27 | 7.1 | 53 | 94 | 99.9 |

Comment on the acceptability of power values such as these for detecting the corresponding nonconformance rates.

A 7% chance of detecting a 2% non-conformance rate is unacceptably low, as is a 53% chance of detecting an 8.5% non-conformance rate. Very high chances of detecting very high non-conformance rates such as 25% and 50% are irrelevant.

Note that when the process mean is 70, the null hypothesis value, the power equals the significance level (0.27%).

Can you explain why the power at mean 80 is almost exactly 0.5? (Hint: recall that the upper control limit on the clip gaps Xbar control chart was almost exactly 80 and draw a relevant Normal curve).

The sampling distribution of Xbar is centred at 80, just above the Upper Control Limit (UCL) of 79.8, so just over half its area exceeds 79.8.

[Figure: Normal curve of the sampling distribution of Xbar on an axis from 50 to 120, with LCL and UCL marked]
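The power values above can be checked with a short Normal calculation. This is a sketch, not Minitab's own routine: it assumes a two-sided Z-test with "3 standard error" limits around the hypothesised mean of 70, sample size 5 and standard deviation 7.3, as described in the text. The function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist

Z = NormalDist()  # standard Normal distribution


def xbar_chart_power(true_mean, n=5, sigma=7.3, target=70.0, k=3.0):
    """Chance that a sample mean falls outside target +/- k standard errors."""
    se = sigma / sqrt(n)                  # standard error of the sample mean
    shift = (true_mean - target) / se     # shift of the mean in standard-error units
    above_ucl = 1.0 - Z.cdf(k - shift)    # area above the upper control limit
    below_lcl = Z.cdf(-k - shift)         # area below the lower control limit
    return above_ucl + below_lcl


ucl = 70.0 + 3.0 * 7.3 / sqrt(5)          # upper control limit, close to 79.8
powers = {mean: xbar_chart_power(mean) for mean in (70, 75, 80, 85, 90)}
for mean, p in powers.items():
    print(f"process mean {mean}: power {100 * p:.2f}%")
```

With the true mean at 70 the shift is zero, so the result is just the two tail areas beyond 3 standard errors, that is, the significance level. With the true mean at 80, the upper limit sits almost at the centre of the sampling distribution, so the power is just over one half.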

1.2 Calculating the sample size required to achieve acceptable power

[Figure: Scatterplot of Power vs Sample Size; horizontal axis: Sample Size (10 to 80), vertical axis: Power (0.0 to 1.0)]

What power is achieved by doubling the sample size (to 10)? Is this satisfactory?

20% power for detecting a 2% non-conformance rate is not satisfactory.

What power is achieved by doubling the sample size again? Is this satisfactory?

53% power for detecting a 2% non-conformance rate is not satisfactory.

What sample size is required to achieve a power of 90%? Is this economical?

To achieve 90% power, a sample size of almost 40 is required. (The power at sample size 40 is just over 90%). This may be an impractical requirement every 2 hours in a busy plant.

Note that Minitab's Power and Sample Size calculator may be used to calculate the sample size exactly. It turns out to be 40.
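A simple search over sample sizes illustrates how such a figure can be found. The sketch below is not Minitab's algorithm; it assumes the same two-sided "3 standard error" Z-test as before, a shift of 5 (from 70 to 75) and standard deviation 7.3. The function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

Z = NormalDist()  # standard Normal distribution


def power(n, shift=5.0, sigma=7.3, k=3.0):
    """Power of a two-sided test with +/- k standard error limits."""
    d = shift * sqrt(n) / sigma           # shift in standard-error units
    return (1.0 - Z.cdf(k - d)) + Z.cdf(-k - d)


def smallest_n(target_power, max_n=1000):
    """Smallest sample size whose power reaches target_power, or None."""
    for n in range(2, max_n + 1):
        if power(n) >= target_power:
            return n
    return None


power_10 = power(10)
power_20 = power(20)
n_required = smallest_n(0.90)
print(f"n = 10: power {100 * power_10:.0f}%")
print(f"n = 20: power {100 * power_20:.0f}%")
print(f"smallest n for 90% power: {n_required}")
```

Because power increases steadily with sample size here, a linear search is adequate; a bisection search would be faster but is unnecessary at this scale.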

Calculating the power of a one sample t-test

What power did your t-tests have for detecting a nonconformance rate of 2%?

With sample size 40, the power is 98.8%; with sample size 85, the power is 100%.

Note that, with sample size 40, the t-test is calculated to have much greater power than that of the Z-test, see above. This is because the significance level of the former was 5% while that of the latter was 0.27%, that is, a "2-sigma" test rather than a "3-sigma" test. The choice between "2-sigma" and "3-sigma" is explained in Stuart (2003, p. 168) as follows.

Shewhart proposed his '3 standard error' control limits on the basis that the relatively low false alarm rate of less than 3 in 1,000 would be tolerable in the context of the frequent sampling typically involved in parts manufacturing. By contrast, a false alarm rate of approximately 1 in 20 (5%) would quickly lead to lack of confidence in using a procedure which led to frequent shut downs, investigations etc. which later proved unnecessary. For example, sampling every two hours, as in the clip gap example, and using '2 standard error' control limits would mean getting a false alarm roughly once a week in the case of a 40 hour work week or three or more times a week with shift working. This would quickly kill the use of control charts.

On the other hand, in a process capability analysis based on historical data, the data on which significance tests are based involve combining several samples over a longer period of time. Thus, a combined sample of 80 clip gap measurements which involves combining sixteen two-hourly samples covers a period of 160 hours. Assuming a 40 hour week, the Fisherian prescription of 5%, or 1 in 20, would lead to a test error roughly every 80 weeks, almost 2 years, in that case. Such an error rate is unlikely to cause upset.

It may be worth noting that Shewhart set up the control chart for use on a regular and frequent basis whereas Fisher initiated the systematic use of significance tests in the context of scientific, specifically agricultural, experimentation, where the perspective was over a much longer term. The chosen error rates make sense in these contexts.
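The false alarm arithmetic in the passage above can be sketched as follows. The calculation assumes Normal sample means, one sample every two hours and a 40 hour week, as in the quoted example; the names are illustrative.

```python
from statistics import NormalDist

Z = NormalDist()  # standard Normal distribution


def false_alarm_rate(k):
    """Chance that an in-control sample mean falls outside +/- k standard errors."""
    return 2.0 * (1.0 - Z.cdf(k))


SAMPLES_PER_WEEK = 40 / 2   # one sample every 2 hours in a 40 hour week

alarms_per_week = {}
for k in (2, 3):
    alpha = false_alarm_rate(k)
    alarms_per_week[k] = alpha * SAMPLES_PER_WEEK
    print(f"{k} standard error limits: false alarm rate {100 * alpha:.2f}%, "
          f"about {alarms_per_week[k]:.2f} false alarms per week")
```

Under these assumptions, "2 standard error" limits give close to one false alarm a week, while "3 standard error" limits give roughly one every 18 to 19 weeks.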

This discussion pays no attention to power. In a later section (p. 202), it is noted that:

There is a benefit in using the narrower limits. Narrower limits lead to more "out-of-control" signals. While this means more false alarms when the process is "in control", it means more correct signals when the process is not "in control", which is clearly desirable.

What power did they have for detecting shifts equal to those actually observed? Note that the mean of the clip gap measurements up to Subgroup 17 was 73.88 and that the mean of the clip gap measurements after Subgroup 17 was 66.75.

The first test, with sample size 85, had power of 99.8% for detecting a mean of 73.88, as observed.

The second test, with sample size 40, had power of 78% for detecting a mean of 66.75, as observed.
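These t-test power values can be approximated by simulation. The sketch below is a Monte Carlo check, not the exact calculation Minitab performs: it assumes Normal data with standard deviation 7.3, a two-sided 5% test of a hypothesised mean of 70, and a critical value of 2.023 for 39 degrees of freedom taken from t tables. The function names, repetition count and seed are arbitrary choices.

```python
import random
from math import sqrt

T_CRIT_39 = 2.023   # two-sided 5% critical value for 39 df (table value)


def t_statistic(sample, mu0):
    """One-sample t statistic for testing the mean against mu0."""
    n = len(sample)
    mean = sum(sample) / n
    var = sum((x - mean) ** 2 for x in sample) / (n - 1)
    return (mean - mu0) / sqrt(var / n)


def simulated_power(true_mean, n, t_crit, mu0=70.0, sigma=7.3,
                    reps=10000, seed=1):
    """Proportion of simulated samples in which the t-test rejects mu0."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(reps):
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        if abs(t_statistic(sample, mu0)) > t_crit:
            rejections += 1
    return rejections / reps


power_75 = simulated_power(75.0, n=40, t_crit=T_CRIT_39)
power_observed = simulated_power(66.75, n=40, t_crit=T_CRIT_39)
print(f"n = 40, true mean 75:    power about {100 * power_75:.1f}%")
print(f"n = 40, true mean 66.75: power about {100 * power_observed:.1f}%")
```

The simulated proportions vary slightly with the seed and the number of repetitions, so they should agree with the quoted values only to within about a percentage point.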

Calculating the power of a two sample t-test

The calculated power is 39%.

Comment

This means that there is a high chance that a mean difference as large as 5 will not be detected by samples of this size. Recall that the power is even smaller when the sample sizes are not equal, as was the case in the IQ example.

There are two possible explanations for the actual result found. One is that the sample size was not big enough and that a further study should be conducted to confirm the result apparently found (a mean difference of 5). The second is that the result of the test was correct; there was no mean difference of the order of 5 and a repeat of the study would have produced another mean difference value which would be equally likely to be negative as positive and, in all likelihood, not as big as 5.

The resolution of this dilemma is to carry out a power study in advance of experimentation or observation. This involves determining what size of deviation from the null hypothesis is of substantive importance and what power a test should have for detecting such deviations. Making such determinations is not easy and may be highly subjective.

Sample size calculation

Report the details of the result.

The required sample size is 144 in each of the two groups (144 x 2).

Comment on the resource implications.

This is almost 4 times the sample size used in the actual study. Assuming that a case had to be made to get approval for the expense involved in the actual study, the case for a statistically realistic study may be more difficult to make. On the other hand, the inconclusive result from the actual study makes the expense involved appear wasted.

If a statistically realistic study is not economically realistic, it may be best to abandon the idea. More often than not, a compromise is reached which, however, faces the danger of being inconclusive.

In a medical context, for example, drug trials, there is the additional danger of unnecessarily exposing patients to risk, for example, of not getting the standard treatment and therefore not getting its benefits, or of getting a new treatment that may have unanticipated side effects. Such ethical issues add a further dimension to the problem of choosing sample sizes.

Computer maintenance charges in a Building Society

Management Report

Following concern being expressed regarding monthly computer maintenance charges in this branch, a small study was undertaken. Figures for this branch were compared with figures from another branch. A summary follows.

| Branch | Mean | StDev |
|---|---|---|
| Own | 645.58 | 84.25 |
| Other | 613.50 | 152.65 |

It appears that the charges in this branch are somewhat higher than those in the other branch, the mean difference being €32.08. However, a test of significance showed that this difference was not statistically significant. The details of the test are reported in a separate appendix.

Although the difference appears to be not statistically significant, there is still concern about its magnitude. Also, there appears to be a substantial difference between the standard deviations in the two branches. Further investigation is being undertaken.

Appendix

Two-Sample T-Test and CI

Estimate for difference: 32.1
95% CI for difference: (-84.8, 149.0)
T-Test of difference = 0 (vs not =): T-Value = 0.58  P-Value = 0.569  DF = 16
Both use Pooled StDev = 110.2806

End of report
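The pooled test in the appendix can be reproduced from the summary statistics alone. The sketch below assumes sample sizes of 12 (Own) and 6 (Other), as reported in the descriptive statistics later in this feedback, and uses a critical value of 2.120 for 16 degrees of freedom taken from t tables. The function name is hypothetical.

```python
from math import sqrt


def pooled_t(n1, mean1, sd1, n2, mean2, sd2):
    """Pooled two-sample t-test computed from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))   # standard error of the difference
    diff = mean1 - mean2
    return {"diff": diff, "pooled_sd": sqrt(pooled_var),
            "se": se, "t": diff / se, "df": df}


T_CRIT_16 = 2.120   # two-sided 5% critical value for 16 df (table value)

result = pooled_t(12, 645.58, 84.25, 6, 613.50, 152.65)
half_width = T_CRIT_16 * result["se"]
ci = (result["diff"] - half_width, result["diff"] + half_width)

print(f"pooled StDev = {result['pooled_sd']:.4f}")
print(f"t = {result['t']:.2f} on {result['df']} df")
print(f"95% CI for difference: ({ci[0]:.1f}, {ci[1]:.1f})")
```

The p-value is not computed here because it requires the t distribution function, which is not in the Python standard library.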

Unequal variances?

Two-Sample T-Test and CI

Estimate for difference: 32.1
95% CI for difference: (-131.6, 195.8)
T-Test of difference = 0 (vs not =): T-Value = 0.48  P-Value = 0.649  DF = 6

Compare and contrast the two sets of results.

Explain any discrepancies found.

The second test is even less statistically significant: the p-value increases from 0.569 to 0.649.

The standard error, calculated from a different formula, is bigger. This results in a smaller t-value. This also results in a wider confidence interval.

The sampling distribution of the test statistic is approximated by a t distribution with far fewer degrees of freedom (6 rather than 16). This results in a bigger critical value, which also contributes to the reduction in statistical significance.
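The unequal-variances calculation can also be sketched from the summary statistics. The code below uses the usual Welch standard error and the Welch-Satterthwaite approximation for the degrees of freedom, which is an assumption about what the software does; the reported DF of 6 appears to be the approximate value rounded down. The critical value 2.447 for 6 degrees of freedom is taken from t tables, and the function name is hypothetical.

```python
from math import sqrt


def welch_t(n1, mean1, sd1, n2, mean2, sd2):
    """Two-sample t-test without assuming equal variances (summary data)."""
    v1 = sd1 ** 2 / n1                    # variance of the first sample mean
    v2 = sd2 ** 2 / n2                    # variance of the second sample mean
    se = sqrt(v1 + v2)                    # standard error of the difference
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    diff = mean1 - mean2
    return {"diff": diff, "se": se, "t": diff / se, "df": df}


T_CRIT_6 = 2.447    # two-sided 5% critical value for 6 df (table value)

welch = welch_t(12, 645.58, 84.25, 6, 613.50, 152.65)
half_width = T_CRIT_6 * welch["se"]
ci = (welch["diff"] - half_width, welch["diff"] + half_width)

print(f"standard error = {welch['se']:.1f}")
print(f"t = {welch['t']:.2f}, approximate df = {welch['df']:.2f}")
print(f"95% CI for difference: ({ci[0]:.1f}, {ci[1]:.1f})")
```

The larger standard error (about 66.9 against about 55.1 for the pooled test) and the larger critical value (2.447 against 2.120) both widen the interval.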

Test for Equal Variances

F-Test (Normal Distribution) Test statistic = 0.55, p-value = 0.382

Management Report 2

In the first report, it was noted that the other branch had a much bigger standard deviation. To take this into account, an alternative significance test was used; results appended. However, this made no great difference to the conclusions.

Furthermore, a formal check of the difference between the standard deviations resulted in no significant difference being found; results are also appended.

These results are not readily explained. Further investigation is being undertaken.

Appendix

Two-Sample T-Test and CI

Estimate for difference: 32.1
95% CI for difference: (-131.6, 195.8)
T-Test of difference = 0 (vs not =): T-Value = 0.48  P-Value = 0.649  DF = 6

Test for Equal Variances

F-Test (Normal Distribution) Test statistic = 0.55, p-value = 0.382

End of report

Informal exploratory analysis and revised formal analysis

[Figure: Dotplot of Own Branch, Other Branch; axis: Computer Maintenance Costs (450 to 900)]

[Figure: Boxplot of Own Branch, Other Branch; axis: Data (400 to 900)]

[Figure: Histograms, Version 1; panels: Own Branch, Other Branch; axis 500 to 900]

[Figure: Histograms, Version 2; panels: Own Branch, Other Branch; axis 500 to 900]

Descriptive Statistics: Own Branch, Other Branch

| Variable | N | Mean | StDev | Minimum | Maximum |
|---|---|---|---|---|---|
| Own Branch | 12 | 645.6 | 84.3 | 494.0 | 769.0 |
| Other Branch | 6 | 613.5 | 152.7 | 464.0 | 894.0 |

Provide a short management report of the results, including reference to and making allowances for exceptional aspects.

Management Report 3

From the accompanying dotplots, it may be seen that the costs reported by the other branch appear generally lower than those in this branch, apart from a single (most recent) measurement in the other branch, which appears exceptionally high. This is reflected in the numerical summary, where the mean cost in this branch exceeds that in the other branch, but standard deviation and range are higher in the other branch.

End of report

Compare and contrast the various graph forms as used in this case.

The dotplots provide the best graphical view in this case, where both data sets are very small.

The boxplots, based on the 5-number summary (Min, Q1, Median, Q3, Max), are not suited to small data sets, particularly one with just 6 values. In the case of the Other branch, the exceptional case is not picked up, similar to the numerical summary.

The histograms are unsuitable and uninformative. In Version 2, the bins for the second histogram were adjusted to match those of the first, giving one bin per value.

Formal statistical tests revisited

Two-sample T for Own Branch vs Other Branch

| | N | Mean | StDev | SE Mean |
|---|---|---|---|---|
| Own Branch | 12 | 645.6 | 84.3 | 24 |
| Other Branch | 5 | 557.4 | 74.3 | 33 |

Difference = mu (Own Branch) - mu (Other Branch)
Estimate for difference: 88.2
95% CI for difference: (-4.5, 180.9)
T-Test of difference = 0 (vs not =): T-Value = 2.03  P-Value = 0.061  DF = 15
Both use Pooled StDev = 81.7223

Compare the result of the t test with the corresponding earlier result.

The Other Branch mean is lower than before (as is the standard deviation). The mean difference is now 88.2, compared to 32.1. The result is now close to being statistically significant at the 5% level, with a p-value of 6%.
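The revised summary statistics can be recovered from the earlier ones by removing a single value. The sketch below assumes that the value dropped from the Other Branch is its maximum, 894.0, which is an inference from the descriptive statistics rather than something stated explicitly. It then repeats the pooled t calculation; the critical value 2.131 for 15 degrees of freedom is taken from t tables, and the function names are hypothetical.

```python
from math import sqrt


def drop_one(n, mean, sd, value):
    """Sample size, mean and StDev after removing one value (summary data)."""
    total = n * mean
    sum_sq = (n - 1) * sd ** 2 + n * mean ** 2   # sum of squared observations
    new_n = n - 1
    new_mean = (total - value) / new_n
    new_ss = sum_sq - value ** 2 - new_n * new_mean ** 2
    return new_n, new_mean, sqrt(new_ss / (new_n - 1))


def pooled_t(n1, mean1, sd1, n2, mean2, sd2):
    """Pooled two-sample t statistic and degrees of freedom."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# ASSUMPTION: the exceptional value removed is the Other Branch maximum, 894.0
new_n, new_mean, new_sd = drop_one(6, 613.50, 152.65, 894.0)
t_value, df = pooled_t(12, 645.58, 84.25, new_n, new_mean, new_sd)

T_CRIT_15_TWO_SIDED = 2.131   # two-sided 5% critical value, 15 df (table value)
print(f"Other Branch without 894.0: N = {new_n}, "
      f"Mean = {new_mean:.1f}, StDev = {new_sd:.1f}")
print(f"t = {t_value:.2f} on {df} df; "
      f"two-sided 5% critical value = {T_CRIT_15_TWO_SIDED}")
```

Small differences from the reported figures are to be expected, because the inputs here are the rounded summary values rather than the raw data.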

Test for Equal Variances: Own Branch, Other Branch

95% Bonferroni confidence intervals for standard deviations

| | N | Lower | StDev | Upper |
|---|---|---|---|---|
| Own Branch | 12 | 56.9729 | 84.2523 | 155.741 |
| Other Branch | 5 | 41.6095 | 74.3223 | 257.121 |

F-Test (Normal Distribution) Test statistic = 1.29, p-value = 0.876

Levene's Test Test statistic = 0.42, p-value = 0.528

[Figure: Test for Equal Variances for Own Branch, Other Branch; upper panel: 95% Bonferroni Confidence Intervals for StDevs (50 to 250); lower panel: Data for Own Branch and Other Branch (450 to 750)]

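Given the similarity of the variances and the result of the 2-Variances test, explain the difference between the confidence intervals for the two standard deviations.

The sample size in the Other Branch (5) is less than half that in the Own Branch (12), so its standard deviation is estimated less precisely and its confidence interval is much wider.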

One-sided test

Two-sample T for Own Branch vs Other Branch

Difference = mu (Own Branch) - mu (Other Branch)
Estimate for difference: 88.2
95% lower bound for difference: 11.9
T-Test of difference = 0 (vs >): T-Value = 2.03  P-Value = 0.030

Note that the alternative hypothesis is "difference > 0", that is, Own Branch greater than Other Branch.

Prepare a final management report on comparison of the maintenance charges in the two branches.

Management Report 4

Our original suspicions that this branch's costs were too high are borne out by the one-sided t-test comparing this branch's costs with those of the other branch. The t-value of 2.03, with 15 degrees of freedom, exceeds the one-sided critical value of 1.75. The p-value is 3%.

Checking Normality

[Figure: Normal plot of Residuals (-200 to 200) against Score (-2 to 2); Mean -2.00624E-14, StDev 79.13, N 17, AD 0.280, P-Value 0.599]

Report on your investigation.

There is no evidence of departure from Normality. (Reference Normal plots not needed here).

Given the dotplot of the Other Branch data, use reference dotplots to check its validity:

What is your conclusion?

Repeated simulation produced a high proportion of dotplots that showed patterns similar to that in the Other Branch dotplot. However, in no case did the simulated standard deviation exceed 1.8, the ratio of Other Branch to Own Branch standard deviation observed in the actual data. Thus, while cases that appear exceptional within 6 simulated numbers occurred frequently, their standard deviation was not such as to make them exceptional in the context of the comparison of the two branches.
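A simulation of this kind might be sketched as follows. This is one reading of the exercise, not a record of what was actually run: it assumes each reference sample consists of 6 values drawn from a standard Normal distribution, so that a standard deviation of 1 plays the role of the Own Branch value and 1.8 is the threshold of interest. The function name, number of repetitions and seed are arbitrary.

```python
import random
from statistics import stdev


def simulate_sds(n=6, reps=2000, seed=1):
    """Standard deviations of repeated standard Normal samples of size n."""
    rng = random.Random(seed)
    return [stdev([rng.gauss(0.0, 1.0) for _ in range(n)])
            for _ in range(reps)]


THRESHOLD = 1.8   # observed ratio of Other Branch to Own Branch StDev

sds = simulate_sds()
proportion_above = sum(s > THRESHOLD for s in sds) / len(sds)
print(f"largest simulated StDev: {max(sds):.2f}")
print(f"proportion of samples with StDev above {THRESHOLD}: "
      f"{100 * proportion_above:.1f}%")
```

With many repetitions a few samples can be expected to exceed the threshold, but under these assumptions they should be rare, in line with the conclusion above.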

Lessons to be learned

What can be learned from tests based on summary data? What cannot?

Assuming that the standard assumptions apply, assessment of statistical significance and calculation of confidence intervals may be implemented. However, assessment of the assumptions referred to is not possible, so that, without other evidence, the test results must be suspect.

Is the variance test appropriate in this case? Explain.

No. The sample sizes are too small.

The sample sizes required for testing hypotheses concerning variances and standard deviations are much bigger than those required for testing hypotheses concerning means.

Which is more appropriate in this case, a one-sided or two-sided alternative to the equal means hypothesis? Explain.

If it is clear from the start (and not just a matter of opinion) that the alternative to the null hypothesis is one-sided, then it is appropriate to use a one-sided test. If the suggestion of a one-sided alternative arises from the data under consideration, then a one-sided test is not appropriate. It may be appropriate if a further study of the original question is being undertaken, using new data, but only if there is firm evidence in favour of the one-sided alternative. In practice, this is unlikely in a situation where a further study is needed.

How effective are Normal plots in these circumstances? Explain.

As with variance tests, Normal plots also need large sample sizes to detect departures from a null hypothesis, in this case the Normal model. In practice, even with small sample sizes, if departures from the Normal model are not evident in the Normal plot, then the use of t-tests will be satisfactory.
