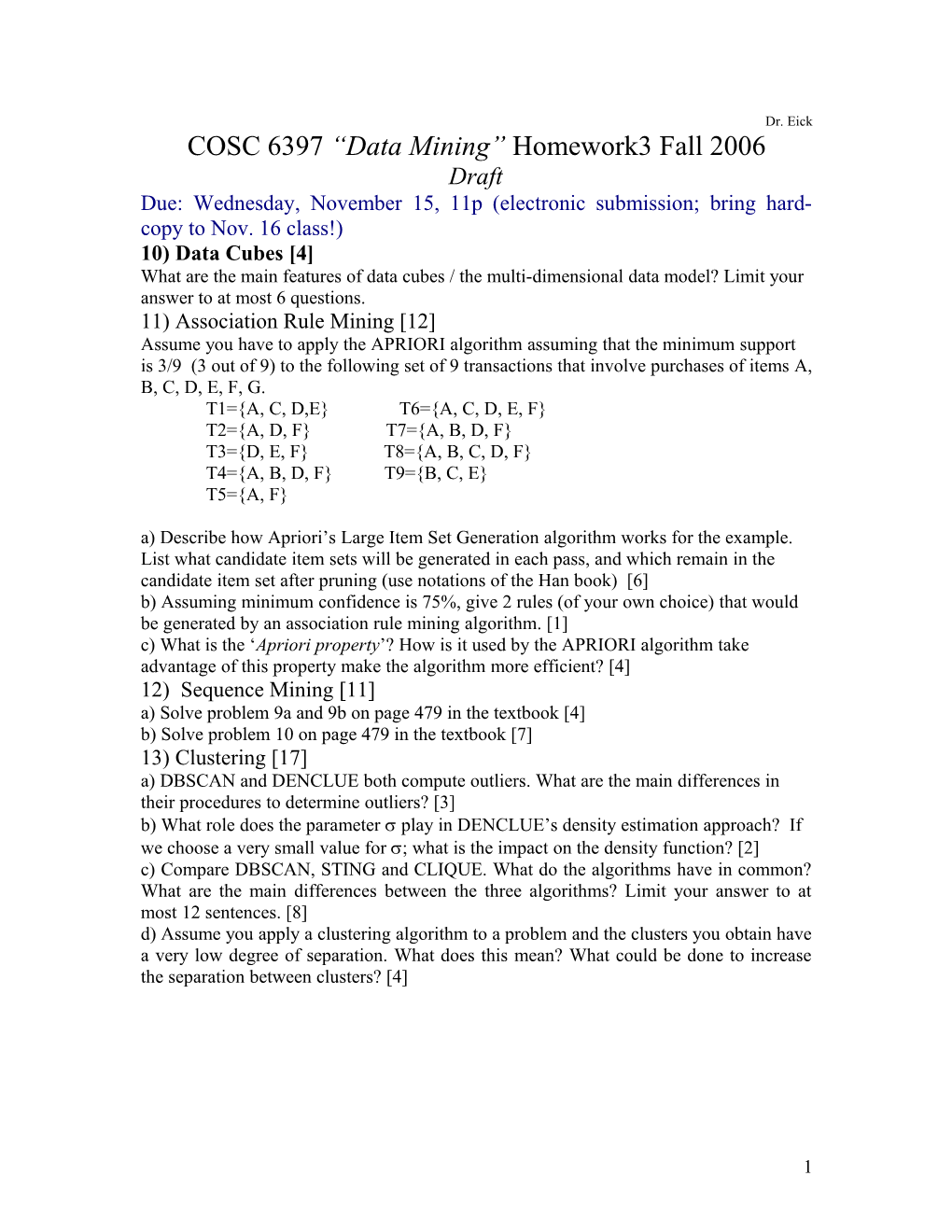

Dr. Eick
COSC 6397 “Data Mining”
Homework 3, Fall 2006 (Draft)

Due: Wednesday, November 15, 11 p.m. (electronic submission; bring a hard copy to the Nov. 16 class!)

10) Data Cubes [4]

What are the main features of data cubes / the multi-dimensional data model? Limit your answer to at most 6 sentences.

11) Association Rule Mining [12]

Assume you have to apply the APRIORI algorithm, with a minimum support of 3/9 (3 out of 9), to the following set of 9 transactions that involve purchases of items A, B, C, D, E, F, G.

T1={A, C, D, E}
T2={A, D, F}
T3={D, E, F}
T4={A, B, D, F}
T5={A, F}
T6={A, C, D, E, F}
T7={A, B, D, F}
T8={A, B, C, D, F}
T9={B, C, E}

a) Describe how Apriori’s Large Item Set Generation algorithm works for the example. List which candidate item sets are generated in each pass, and which remain in the candidate item set after pruning (use the notation of the Han book). [6]

b) Assuming the minimum confidence is 75%, give 2 rules (of your own choice) that would be generated by an association rule mining algorithm. [1]

c) What is the ‘Apriori property’? How does the APRIORI algorithm take advantage of this property to become more efficient? [4]

12) Sequence Mining [11]

a) Solve problems 9a and 9b on page 479 in the textbook. [4]

b) Solve problem 10 on page 479 in the textbook. [7]

13) Clustering [17]

a) DBSCAN and DENCLUE both compute outliers. What are the main differences in their procedures to determine outliers? [3]

b) What role does the parameter σ play in DENCLUE’s density estimation approach? If we choose a very small value for σ, what is the impact on the density function? [2]

c) Compare DBSCAN, STING and CLIQUE. What do the algorithms have in common? What are the main differences between the three algorithms? Limit your answer to at most 12 sentences. [8]

d) Assume you apply a clustering algorithm to a problem and the clusters you obtain have a very low degree of separation. What does this mean? What could be done to increase the separation between clusters? [4]

Problem 10:

Points students forgot to mention include:

- Support for class hierarchies when defining dimensions
- Cube operations
- Cubes allow measures to be viewed with respect to multiple dimensions
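The three points above can be made concrete with a small sketch. It is only an illustration under stated assumptions: the fact table, the city-to-country hierarchy, and the helper `aggregate` are all hypothetical and are not taken from the homework or the textbook.

```python
from collections import defaultdict

# Hypothetical fact table: (city, quarter, product, units sold).
facts = [
    ("Houston", "Q1", "laptop", 10),
    ("Houston", "Q2", "laptop", 7),
    ("Dallas", "Q1", "phone", 5),
    ("Berlin", "Q1", "laptop", 4),
]

# Hypothetical class hierarchy on the location dimension: city -> country.
city_to_country = {"Houston": "USA", "Dallas": "USA", "Berlin": "Germany"}


def aggregate(rows, key):
    """Sum the measure (units) over all rows that share the same key."""
    totals = defaultdict(int)
    for city, quarter, product, units in rows:
        totals[key(city, quarter, product)] += units
    return dict(totals)


# View the measure with respect to two dimensions at once (location x product).
by_city_product = aggregate(facts, lambda c, q, p: (c, p))

# Roll-up: climb the location hierarchy from city to country.
by_country = aggregate(facts, lambda c, q, p: city_to_country[c])

# Slice: fix one dimension value (quarter Q1), then aggregate by product.
q1_rows = [row for row in facts if row[1] == "Q1"]
q1_by_product = aggregate(q1_rows, lambda c, q, p: p)

print(by_country)       # {'USA': 22, 'Germany': 4}
print(q1_by_product)    # {'laptop': 14, 'phone': 5}
```

Roll-up and slice are two of the usual cube operations; drill-down would be the reverse of the roll-up shown here, going from country back to city.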

Problem 11:

Some students made errors when creating the candidate item sets. For example, if abc, abd, acd, and ade are frequent, only abcd is created as a candidate item set, and not acde (item sets are only combined if they agree in their (n-1)-prefix).
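The prefix rule in the example above can be checked with a minimal sketch of the candidate generation step. The function names `apriori_join` and `apriori_prune` are illustrative choices, and item sets are assumed to be kept in sorted order.

```python
from itertools import combinations


def apriori_join(frequent_k):
    """Join step: merge two sorted k-item sets only if their first k-1 items agree."""
    items = sorted(tuple(sorted(s)) for s in frequent_k)
    candidates = []
    for i, left in enumerate(items):
        for right in items[i + 1:]:
            if left[:-1] == right[:-1]:
                # Same (k-1)-prefix: extend left by the last item of right.
                candidates.append(left + (right[-1],))
    return candidates


def apriori_prune(candidates, frequent_k):
    """Prune step: keep a candidate only if every k-item subset is frequent."""
    frequent = {tuple(sorted(s)) for s in frequent_k}
    return [
        c for c in candidates
        if all(sub in frequent for sub in combinations(c, len(c) - 1))
    ]


frequent_3 = ["abc", "abd", "acd", "ade"]
joined = apriori_join(frequent_3)
print(["".join(c) for c in joined])   # ['abcd']
```

Here acd and ade are not joined because their prefixes (ac and ad) differ, so acde never appears. Note that with only these four item sets listed as frequent, the separate prune step would then discard abcd as well, because its subset bcd is not among them; the example above concerns the join step only.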

Problem 12:

See solution

Problem 13c:

Observations that many students failed to mention include:

- CLIQUE finds clusters in subspaces, and its clusters are not necessarily disjoint.
- DBSCAN forms clusters based on connectivity in graphs that are formed with respect to the points to be clustered.
- CLIQUE and STING form clusters by merging grid cells, and outliers are identified as grid cells that do not contain a sufficient number of points.
- STING is more general in that objects can be preselected based on a query, and it provides an efficient data structure to speed up clustering and query processing.
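The grid-based idea shared by CLIQUE and STING (count points per cell, treat sparsely populated cells as outliers) can be sketched as follows. This is not an implementation of either algorithm: the function `dense_cells`, its parameters, and the sample points are assumptions made for illustration, and the merging of adjacent dense cells into clusters is left out.

```python
from collections import Counter


def dense_cells(points, cell_size, min_points):
    """Bucket 2-D points into square grid cells and split the cells by a count threshold."""
    counts = Counter(
        (int(x // cell_size), int(y // cell_size)) for x, y in points
    )
    dense = {cell for cell, n in counts.items() if n >= min_points}
    sparse = set(counts) - dense   # occupied cells with too few points
    return dense, sparse


# Hypothetical data: three nearby points and one isolated point.
points = [(0.1, 0.2), (0.4, 0.3), (0.8, 0.9), (5.5, 5.1)]
dense, sparse = dense_cells(points, cell_size=1.0, min_points=3)
print(dense)    # {(0, 0)}
print(sparse)   # {(5, 5)}
```

DBSCAN, by contrast, works on the points themselves rather than on cells, so it has no counterpart to the `cell_size` parameter used here.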

Problem 13d:

Remedies for the problem proposed by students include:

- Add new attributes
- Cluster in a subspace
- Use another algorithm
- Postprocess clusters agglomeratively
- Use noise removal prior to clustering

Most proposed solutions were not particularly specific; therefore, most students received 2 points for this problem.

Other solutions, not proposed in the homework solutions, include: reducing k (the number of clusters), either directly, or indirectly by changing algorithm parameters.
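The effect of reducing k can be illustrated with a small sketch. It assumes one particular notion of separation, the smallest distance between any two cluster centroids, which is only one of several possible measures and is not prescribed by the homework. The helper names and the sample clusters are hypothetical.

```python
from itertools import combinations
from math import dist


def centroid(cluster):
    """Mean point of a cluster given as a list of equal-length tuples."""
    return tuple(sum(coord) / len(cluster) for coord in zip(*cluster))


def separation(clusters):
    """Assumed measure: smallest distance between any two cluster centroids."""
    cents = [centroid(c) for c in clusters]
    return min(dist(a, b) for a, b in combinations(cents, 2))


def merge_closest(clusters):
    """One agglomerative step: merge the two clusters with the closest centroids."""
    i, j = min(
        combinations(range(len(clusters)), 2),
        key=lambda ij: dist(centroid(clusters[ij[0]]), centroid(clusters[ij[1]])),
    )
    merged = clusters[i] + clusters[j]
    return [c for k, c in enumerate(clusters) if k not in (i, j)] + [merged]


# Hypothetical clusters: two close together, one far away.
clusters = [
    [(0.0, 0.0), (0.0, 1.0)],
    [(1.0, 0.0), (1.0, 1.0)],
    [(10.0, 0.0), (10.0, 1.0)],
]
before = separation(clusters)                 # k = 3
after = separation(merge_closest(clusters))   # k = 2
print(before, after)   # 1.0 9.5
```

In this example, merging the two neighboring clusters raises the separation from 1.0 to 9.5. A merge of this kind is also what agglomerative postprocessing of the clusters would perform.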
