ANNEX I – TECHNICAL ANNEX

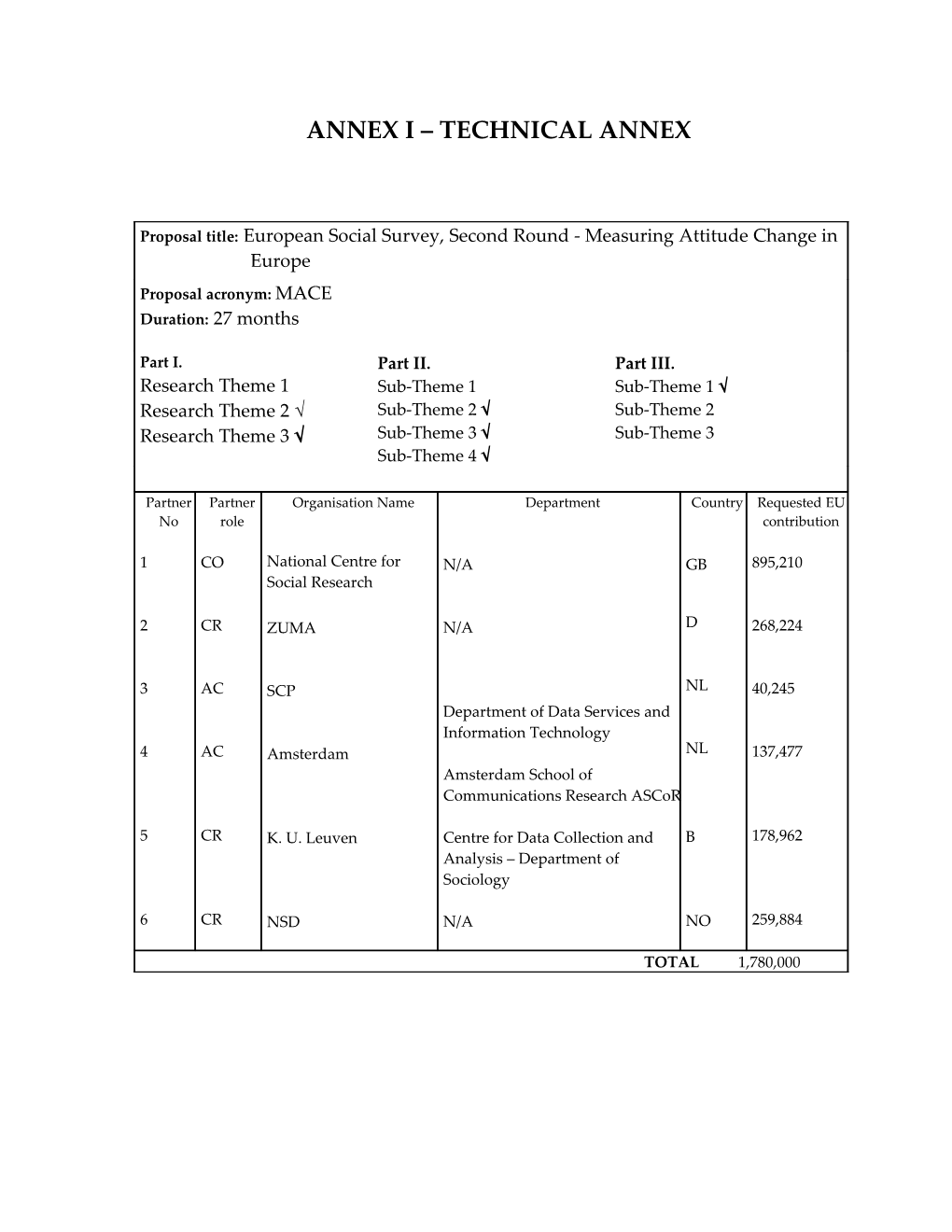

Proposal title: European Social Survey, Second Round - Measuring Attitude Change in Europe

Proposal acronym: MACE

Duration: 27 months

Part I. Part II. Part III.
Research Theme 1; Sub-Theme 1; Sub-Theme 1 √
Research Theme 2 √; Sub-Theme 2 √; Sub-Theme 2
Research Theme 3 √; Sub-Theme 3 √; Sub-Theme 3
Sub-Theme 4 √

| Partner No | Partner role | Organisation Name | Department | Country | Requested EU contribution |
|---|---|---|---|---|---|
| 1 | CO | National Centre for Social Research | N/A | GB | 895,210 |
| 2 | CR | ZUMA | N/A | D | 268,224 |
| 3 | AC | SCP | Department of Data Services and Information Technology | NL | 40,245 |
| 4 | AC | Amsterdam | Amsterdam School of Communications Research ASCoR | NL | 137,477 |
| 5 | CR | K. U. Leuven | Centre for Data Collection and Analysis – Department of Sociology | B | 178,962 |
| 6 | CR | NSD | N/A | NO | 259,884 |
| TOTAL | | | | | 1,780,000 |

| Partner No | Scientist Responsible | Gender | E-mail |
|---|---|---|---|
| 1 | Prof. Roger Jowell | m | [email protected] |
| 2 | Prof. Dr. Peter Ph. Mohler | m | [email protected] |
| 3 | Ineke Stoop | f | [email protected] |
| 4 | Prof. Willem Saris | m | [email protected] |
| 5 | Prof. Jaak Billiet | m | [email protected] |
| 6 | Bjørn Henrichsen | m | [email protected] |

1. Title and Partnership

“European Social Survey, Round 2 – Monitoring Attitude Change in Europe”

| Partner No. | Organisation | Principal Scientist | Country |
|---|---|---|---|
| 1 | National Centre for Social Research | Prof. Roger Jowell | GB |
| 2 | Zentrum fuer Umfragen, Methoden und Analysen | Prof. Dr. Peter Ph. Mohler | D |
| 3 | Sociaal en Cultureel Planbureau | Dr. Ineke Stoop | NL |
| 4 | University of Amsterdam | Prof. Willem Saris | NL |
| 5 | Catholic University of Leuven | Prof. Jaak Billiet | B |
| 6 | Norwegian Social Science Data Services | Mr Bjorn Henrichsen | NO |

2. Objectives

The principal objective of the project is to consolidate and improve the infrastructure of organisations, individuals and data gathering facilities involved in the European Social Survey (the ESS), that have been set up to undertake the systematic and rigorous monitoring of changing social attitudes in 24 European nations.

The project is the second round of the biennial ESS, set up to chart and explain the interaction between Europe’s changing institutions, its political and economic structures, and the attitudes, beliefs and behaviour patterns of its diverse populations. As with Round 1, its data and outputs will be freely available to the social science and policy communities throughout Europe and beyond.

The ESS will thus provide an invaluable source of data for academics from a very broad range of social science disciplines (notably political science, sociology, public administration, social policy, economics, social psychology, statistics, mass communication, modern social history and social anthropology), as well as for politicians and civil servants (at a national and European level), think tanks, journalists and the public at large. Round 2 is the start of the time series element of the ESS, allowing comparisons between attitudes in 2002 and 2004. As survey builds upon survey, the ESS will provide a unique long-term account of change and development in the social fabric of modern Europe.

Above all, the project seeks to provide reliable data about the speed and direction of change in people’s underlying attitudes and values which, even in this increasingly well-documented age, have thus far been deficient. To the extent that this deficiency exists at a national level in many countries, it is even larger, more persistent and more serious at a European level, since most multinational surveys are still conducted to standards that fall well below what would be commonplace at a national level. So, while the social sciences in Europe have a long tradition in empirical analysis of national and multinational data, many important comparative data are either missing altogether or are available in such different forms in different countries that the basis for comparison is extremely fragile. This is overwhelmingly true of data in respect of a range of individual orientations (attitudes, values, beliefs) that are central to an understanding of modern societies and of change within them [1].

Therefore the ESS aims to pioneer and ‘prove’ a standard of methodology for cross-national attitude surveys that has hitherto not been attempted anywhere in the world. It is in particular a pioneering project in respect of the difficult methodological problems posed by cross-national attitude surveys. The European Union is the natural laboratory for such work, not only because it possesses the ideal combination of diversity and homogeneity, but also because it has a strong vested interest in the existence of reliable cross-national data.

[1] Studies such as the Eurobarometer, the European Values Surveys and the International Social Survey Programme all have quite different aims and priorities.

In Round 2 of the study, we will continue to tackle and mitigate a number of longstanding problems faced by cross-national, cross-cultural studies, such as:

- Achieving comparable national samples using strict probability methods
- Attaining high response rates across nations
- Designing and translating rigorously-tested and functionally equivalent questionnaires
- Imposing consistent methods of fieldwork and coding
- Ensuring easy and speedy access to well-documented datasets

As a result of the solid social science infrastructure that has already been created during Round 1, the stage is thus set for an early working example of the European Research Area in practice. While the rules of FP5 permit the application for only one round of the time series at a time, FP5 3rd Call provides a bridge to FP6, whose rules could permit the ESS to be consolidated into a longer-term European infrastructure arrangement.

The project fits the following parts of the 5th Framework’s Key Action: “Improving the socio-economic knowledge base”.

In relation to Part I, Theme 2, a central aim of the project is to measure social trends related to societal and individual well-being. Indeed, its twin aims are on the one hand to create better tools for measuring societal change and on the other to produce relevant and rigorous data about such changes. This emphasis on change requires repeat measures; hence this application for a second round. The project should also be able to assess the impact of structural change and technological development on social values through cross-national comparisons not only of the survey data, but also of a wide range of contextual macrodata we will compile that will allow us to interpret and explain variations between nations.

In relation to Part I, Theme 3, the project is intrinsically concerned with issues of citizenship and governance in a multinational context. Indeed, one of the specific modules in Round 1 is devoted to this broad theme. By collecting systematic and consistent comparative data about political, social and economic aspects of national and European ‘citizenship’, the study will seek to monitor and interpret progress towards European integration from the public’s perspective, as well as the factors and cross-pressures that inhibit or promote it. An important asset of the project is the participation of a substantial number of candidate and associate nations, which anticipates the enlargement of the EU and obtains valuable prior measures in those countries.

In relation to Part II, the project has been explicitly set up to develop a lasting Europe-wide infrastructure for rigorous quantitative social science research into key academic and policy concerns. This infrastructure will incorporate not only an outstanding facility for high quality data collection across Europe, but also a central data archiving and dissemination facility to ensure that the data produced will be speedily and conveniently distributed to researchers and analysts around the world.

In relation to Part III, the project’s explicit purpose is to generate knowledge that can be used by society and which actively promotes policy-related social science in Europe. For instance, issues covered in the core survey include public perceptions of and attitudes towards immigration, citizenship and multilevel governance. The findings themselves and interpretations of them will be made available not only through exemplary archiving practices, but also via presentations, articles in scientific and policy journals and newspapers, and through the project’s well-documented website. One of the anticipated Work Packages in the second round will be devoted to the training (primarily via the Internet) of young researchers in analysis methods, making use of the project’s dataset to do so.

3. Work Content

In total, there are 12 work packages. The following table provides an overview of these Work Packages, showing the title of each Package, the lead Partner and the other Partners involved [2]:

| Work package No | Work package title | Person months | Lead Partner | Other Partners involved |
|---|---|---|---|---|
| 1 | Co-ordination and implementation of a multi-nation survey | 20 | National Centre for Social Research, UK (NatCen) | _ |
| 2 | Design, development and process quality control | 76 | National Centre for Social Research, UK (NatCen) | All others |
| 3 | Sampling co-ordination | 11 | Zentrum fuer Umfragen, Methoden und Analysen, Germany (ZUMA) | NatCen |
| 4 | Equivalence of instruments | 9 | Zentrum fuer Umfragen, Methoden und Analysen, Germany (ZUMA) | NatCen; Amsterdam; Leuven |
| 5 | Fieldwork commissioning | 3 | Sociaal en Cultureel Planbureau, Netherlands (SCP) | NatCen; ZUMA |
| 6 | Contract monitoring | 10 | ZUMA | NatCen; SCP |
| 7 | Piloting and quality control | 8 | Catholic University of Leuven, Belgium (Leuven) | Amsterdam; NatCen; ZUMA |
| 8 | Design and analysis of pilot studies | 3.5 | University of Amsterdam, Netherlands (Amsterdam) | ZUMA; NatCen; Leuven |
| 9 | Analysis of reliability & validity of mainstage questions | 13 | Amsterdam | NatCen; ZUMA; Leuven |
| 10 | Data archiving and dissemination | 48 | Norwegian Social Science Data Services, Norway (NSD) | All others |
| 11 | Creation of internet-based training resource | 9.3 | Norwegian Social Science Data Services, Norway (NSD) | All others |
| 12 | Collection of contextual data | 2.3 | Sociaal en Cultureel Planbureau, Netherlands (SCP) | NatCen |

[2] Detailed information about the partners is provided in Section 4.1 below.

The following 12 boxes contain the objectives, methodology, timing, resource allocation, personnel and responsibilities within each of the work packages.

Work package 1

Objectives: Ensure delivery of the second round of a 24-nation Europe-wide social survey carried out to exacting standards and according to timetable.

Methodology: Leadership of project, responsible for all deliverables to timetable and for overall budget and contract. Assembling and co-ordinating 24 national project teams and two questionnaire design teams. Arranging and accounting for plenary sessions, board meetings and specialist meetings throughout the project.

Timing and duration: T0 [3] – T26 (27 months)

Resource allocation: 71% - personnel; 12% - travel/subsistence; 17% - overheads.

People responsible for carrying out the work: Co-ordinator, research and administrative assistant.

[3] T0 refers to the commencement month of the project.

Work package 2

Objectives: Design and implement consistent survey methods, instruments and procedures in 24 nations and ensure compliance throughout.

Methodology: Oversee the specified tasks allocated to all Partners on the one hand, and the national teams on the other. Assess equivalence of procedures and standards and remedy deviations, giving practical assistance where necessary. Embed methodological experiments, analyse them and document in detail the procedures and outcomes in methodological reports and papers to aid future cross-national research.

Timing and duration: T0 – T26 (27 months)

Resource allocation: 69% - personnel; 8% - travel/subsistence; 6% - other specific project costs; 17% - overheads.

People responsible for carrying out the work: Co-ordinator, senior researcher, researcher and research and administrative assistant.

Work package 3

Objectives: Design and implementation of workable and equivalent sampling strategies in all participating countries (with the aid of a panel of experts). Assessment and continued consultation with participating countries regarding the sampling strategy.

Methodology: Implementing appropriate sampling frames and methods in all countries, assessing and ensuring meticulous application.

Timing and duration: T3 – T14 (12 months)

Resource allocation: 46% - personnel; 15% - travel; 2% - other specific project costs; 37% - overheads.

People responsible for carrying out the work: Programme Director, Senior Scientist, Senior researcher.

Work package 4

Objectives: Coordinating the translation of questionnaires for multi-national, multi-cultural implementation, applying existing guidelines and methods for the translation of the source questionnaire. Reviewing, and adapting where necessary, guidelines and assessment procedures, based on findings from Round 1.

Methodology: Developing an annotated source questionnaire in the light of tests and pilots. Providing guidance via an expert panel to national teams on translation and documentation procedures. Reviewing procedures based on findings from Round 1.

Timing and duration: T9 – T16 (8 months)

Resource allocation: 46% - personnel; 15% - travel; 2% - other specific project costs; 37% - overheads.

People responsible for carrying out the work: Programme Director, Senior Scientist, Senior researcher.

Work package 5

Objectives: Oversee commissioning of fieldwork institutes in all countries in accordance with consistent best practice guidelines and checklists.

Methodology: Design of a pro forma set of procedures and a fieldwork specification for participating countries; advice and support for funders and national coordinators in the selection process of survey organisations and in the contracting process. Consult with work package 6 on contract adherence.

Timing and duration: T3 – T8 (6 months)

Resource allocation: 51% - personnel; 9% - travel; 40% - overheads.

People responsible for carrying out the work: Senior scientist.

Work package 6

Objectives: Ensure adherence to contractual conditions of national fielding agencies.

Methodology: Apply developed guidelines and questionnaires to monitor contract adherence and implementation; propose remedial action where necessary. Adapt guidelines and questionnaires according to results from Round 1.

Timing and duration: T3 – T26 (24 months)

Resource allocation: 46% - personnel; 15% - travel; 2% - other specific project costs; 37% - overheads.

People responsible for carrying out the work: Programme Director, Senior Scientist, Senior researcher.

Work package 7

Objectives: Overseeing successful two-nation quantitative pilot study; assessment of the quality of questionnaire constructs in the pilot study; detection of problems in interviewer-response interaction in the pilot study; subsequently setting up and implementing evaluation procedures that help to assess and, wherever possible, improve data quality in the main survey.

Methodology: Ensuring the uniform fielding of the two-nation quantitative diagnostic pilot studies. Analysis of within- and across-construct relationships with structural equation models. Analysis of taped interviews. Determining and applying in the main survey a series of measures for evaluating data quality. Preparing reports for potential data users on country-specific and more widespread problems with the data and, where possible, how to correct for them.

Timing and duration: T9 – T26 (18 months)

Resource allocation: 25% - personnel; 3% - travel; 56% - other specific project costs; 17% - overheads.

People responsible for carrying out the work: Scientific researcher.

Work package 8

Objectives: Quality control of the questionnaire design process and designing experiments to evaluate new measures. Analysing the pilot survey results with a view to maximising the reliability and validity of the final questionnaire.

Methodology: Checking content validity of measures. Using SQP for prediction of reliability and validity. Use of split ballot MTMM approach for experimental designs. Analysis and interpretation of measures within the two-nation quantitative pilot surveys to assess question reliability and validity and recommend ways of mitigating problems in final source questionnaire.

Timing and duration: T3 – T14 (12 months)

Resource allocation: 78% - Personnel; 5% - travel; 1% - other; 17% - overheads.

People responsible for carrying out the work: Senior researcher.

Work package 9

Objectives: Designing experiments to evaluate new measures. Analysing the main stage survey results to measure the validity and reliability of the measures within it, in order to inform subsequent analysts in ways of mitigating residual problems in the data.

Methodology: Report to the coordinating team (destined for all data users) at the time of data release.

Timing and duration: T9 – T26 (18 months)

Resource allocation: 78% - Personnel; 5% - travel; 1% - other; 17% - overheads.

People responsible for carrying out the work: Senior researcher.

Work package 10

Objectives: Merging, documenting, archiving, distributing and disseminating the Round 2 dataset.

Methodology: Developing database design, robust systems for merging, harmonising, archiving, distributing and enabling retrieval via internet and other means.

Timing and duration: T16 – T26 (11 months)

Resource allocation: 51% - personnel; 2% - consumables; 6% - travel; 41% - overheads.

People responsible for carrying out the work: Senior researcher, researcher, research personnel and academic experts.

Work package 11

Objectives: Setting up an internet-based training resource for students of comparative research using the data made available through the project.

Methodology: Development of two or more internet learning packages, each based on a specific research topic, using data from the survey. Development of complementary on-line study material. Development of web-site, integrating study-material, examples of analysis, on-line descriptive analysis and graphics, and data resources for download and off-line advanced analysis.

Timing and duration: T3 – T26 (24 months)

Resource allocation: 51% - personnel; 2% - consumables; 6% - travel; 41% - overheads.

People responsible for carrying out the work: Senior researcher, researcher, research personnel and academic experts.

Work package 12

Objectives: Assess, review and set guidelines for the collection of contextual and event data; collect contextual data from existing sources or refer to existing databases; instruct and advise national co-ordinators in collecting additional contextual and event data.

Methodology: Design of a set of procedures and guidelines for participating countries; advice and support for national coordinators in collecting event data and contextual data. Search and refer to existing databases of contextual data. Consult with data archive.

Timing and duration: T3 – T26 (24 months)

Resource allocation: 51% - personnel; 9% - travel; 40% - overheads.

People responsible for carrying out the work: Senior scientist.

The following flow chart shows the timing of the work packages in relation to one another, together with their starting and finishing dates. Month 0 refers to the commencement date of the project.

[Figure: Timing of the Work Packages. Chart of Work Packages 1 to 12 plotted against months 0 to 26, spanning Year 0 to Year 2.]
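As a cross-check on the timings given in the work package boxes, the following is a minimal Python sketch that recomputes each duration as an inclusive month count and prints a rough text version of the timing chart. The start and end months are transcribed from the boxes above; Work Package 1 is assumed to start at T0, which is what its stated 27-month duration implies. The function and variable names are illustrative only and are not part of the project.

```python
# Start and end months (T-numbers) for each work package, transcribed from
# the work package boxes. WP1's start at T0 is an assumption inferred from
# its stated duration of 27 months.
WORK_PACKAGES = {
    1: (0, 26), 2: (0, 26), 3: (3, 14), 4: (9, 16),
    5: (3, 8), 6: (3, 26), 7: (9, 26), 8: (3, 14),
    9: (9, 26), 10: (16, 26), 11: (3, 26), 12: (3, 26),
}


def duration_months(start, end):
    """Inclusive number of months from T<start> to T<end>."""
    return end - start + 1


def gantt_row(wp, start, end, total=27):
    """One text row: '#' for active months, '.' for inactive months."""
    bar = "".join("#" if start <= m <= end else "." for m in range(total))
    return f"WP{wp:>2} {bar} ({duration_months(start, end)} months)"


def render_chart():
    """Text chart covering months 0 to 26 for all twelve work packages."""
    rows = [gantt_row(wp, s, e) for wp, (s, e) in sorted(WORK_PACKAGES.items())]
    return "\n".join(rows)


if __name__ == "__main__":
    print(render_chart())
```

Counting months inclusively reproduces the durations quoted in the boxes, for example 12 months for T3 – T14 and 11 months for T16 – T26.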

4. Project management

4.1 Overall project co-ordination

As in the first round of the ESS, the overall direction of the project will be carried out by the Principal Investigator’s team within the National Centre for Social Research (NatCen), London (Partner 1). The team will consist of a full-time Principal Investigator (PI), Professor Roger Jowell, together with three full-time colleagues (a senior researcher – Caroline Bryson, a researcher (to be appointed) and a research and administrative assistant – Mary Keane), all to be based within NatCen. As in Round 1, NatCen will provide all necessary infrastructure support. This team will, in effect, constitute the engine room of the project, with responsibility for implementing it, making it work, maintaining consistently high methodological standards in all participating countries and taking responsibility for its finances and deliverables.

The five other partner institutions and their senior figures (shown below) are the same team as in Round 1. They will all report to the PI’s team, and form the Central Coordinating Team (the CCT) of the ESS.

| Partner No. | Organisation | Principal Scientist | Country |
|---|---|---|---|
| 1 | National Centre for Social Research | Prof. Roger Jowell | GB |
| 2 | Zentrum fuer Umfragen, Methoden und Analysen | Prof. Dr. Peter Ph. Mohler | D |
| 3 | Sociaal en Cultureel Planbureau | Dr. Ineke Stoop | NL |
| 4 | University of Amsterdam | Prof. Willem Saris | NL |
| 5 | Catholic University of Leuven | Prof. Jaak Billiet | B |
| 6 | Norwegian Social Science Data Services | Mr Bjorn Henrichsen | NO |

The six Partners each have specified and self-contained responsibilities, some of which will continue throughout the study period, and others time-limited. Partner 2 will work closely with the PI’s team and take responsibility for deploying and controlling the work of expert (mobile) task forces. They will also take on the role of contract adherence more generally. Partner 3 will be responsible during the early part of the study for devising and implementing the rules of engagement for the competitive selection of fieldwork subcontractors. They will also be responsible for the new workpackage on contextual data. Partner 4 will be responsible for maximising the reliability and validity of questions within the core survey instrument. Partner 5 will take special responsibility for data quality control. Partner 6 will act as the official Archive for the survey.

As the details of the Work Packages show, the six partners have very unequal divisions of labour. This is because some of their responsibilities are confined to certain time periods, while others relate to certain intellectual or functional tasks. Above all, however, a project of this kind requires a great deal of central control in order to achieve consistent (and consistently high) standards. Thus we felt it important for the PI’s role, together with NatCen’s accompanying institutional role, to be performed by full-time people who will be present throughout the two-year period. Their role is, as we have shown in Round 1, central to the project’s successful co-ordination. They will therefore not only fulfil the ‘engine room’ function but also carry considerable intellectual and managerial responsibilities, together with the overall task of animating and orchestrating the whole operation.

The CCT will work closely together to ensure the achievement of uniformly high standards according to the specified timetable within all participating nations. They will also collaborate in the production of a series of accessible papers, reports, tables and analyses on which analysts of the data will be able to draw. These reports will also be designed to inform and influence future rounds of the ESS and future multinational studies in general. The CCT will ensure continuous adherence to contractual objectives, scientific methods and timetables.

As in Round 1, the budget sought from the Commission covers only around a third to a quarter of the total costs of a multi-nation ESS. The participating nations, via their respective NSFs, will cover the costs of the fieldwork, coding and keying of data in their own countries, plus the costs of at least a half-time National Co-ordinator (NC) in each country. Meanwhile, we anticipate that the European Science Foundation will cover the costs of travel, subsistence and organisation of the various meetings of scientific advisory boards, methodological committees, NC and Questionnaire Design Teams (QDTs) which the project requires to ensure its success.

The project will draw on expertise from a variety of specialist groups, all of which were set up for Round 1 and will be re-constituted as necessary for Round 2. The main advisory group is the Scientific Advisory Board (SAB), comprising one senior social scientist per participating country – each appointed by the respective national funding agency – plus two representatives each from the Commission and the ESF. The Board is chaired by Max Kaase, from the International University of Bremen. It meets once every six months or so to scrutinise the programme of work and advise on all matters of substance.

A second, smaller specialist board, the Methods Group, comprises five senior survey methodologists, each from a different country. They advise on new approaches to persistent methodological difficulties, such as maximising response rates, attaining equivalence and the possible future use of mixed modes of data collection. The Group is chaired by Denise Lievesley, from UNESCO Statistical Office, Canada.

A multinational Sampling Panel of five sampling specialists exists to advise on, guide and sign off all national sample designs to ensure that they adhere to the detailed guidelines they have produced in an attempt to ensure optimal comparability between nations in sample quality and coverage. Each member of the Panel is allocated responsibility for approving the detailed sample designs of a group of countries, with whom they work closely until an optimum strategy has been achieved. Where necessary or helpful, the relevant sampling expert might pay a short visit to a particular country to deal with problems and give practical support. Our insistence on rigorous random sampling everywhere, with no substitution, is particularly important, marking the project out as one that seeks to raise rather than bow to prevailing standards of quantitative methodology in some parts of Europe. The Sampling Panel is chaired by Dr Sabine Haeder, from ZUMA.

A Translation Taskforce has been set up to provide and guide the protocols and training materials in respect of the all-important task of translating the questionnaire from its source language, English. Too many cross-national studies have been hijacked by sub-optimal translation procedures which in turn lead to non-comparable research instruments and artefactual differences between nations. The Translation Taskforce, chaired by Dr Janet Harkness, from ZUMA, draws up meticulous guidelines about the procedures to be followed on the ground, trains national co-ordinators in methods of approaching the task, and offers advice and practical help to individual nations as necessary, based on the most up-to-date theories and procedures for achieving equivalence. Once again, the project is breaking new ground in translation methodology and is hoping to export it throughout Europe.

As in Round 1, two specialist multinational Questionnaire Design Teams (QDTs) will be appointed in Round 2 following a Europe-wide competition. Potential applicants will be invited to argue why their particular topic and team is suitable for one of two 60-item ‘rotating’ modules of the questionnaire. The proposals will be assessed and judged by the SAB, who will collectively appoint the two teams of subject specialists. Once appointed, these teams will then work closely with the Central Co-ordinating Team to construct the two modules for piloting alongside the 120-item core questionnaire.

Within each of the participating countries in Round 1, a half-time National Co-ordinator (NC) plus a survey organisation were appointed by the national funding agency (in almost all cases via an open competitive process), but according to criteria contained in a specification produced by the CCT. In order both to achieve continuity and to maximise returns on the investments they have already made, many national funding agencies will wish to re-appoint the same teams for Round 2 (and possible future rounds).

Thus for the most part, the teams will be in a position to build on their working relationships with the CCT, the Sampling Panel and the Translation Taskforce and, based on their joint evaluations of Round 1 fieldwork, to work for still higher and more consistent quality in Round 2. By the same token, the national teams will be in a better position in Round 2 to contribute critically to important aspects of the project, based not only on their local knowledge of past research in their country, but also on their detailed experience of the first round of this project.

The following chart summarises the organisational structure we envisage:

ORGANISATIONAL STRUCTURE OF ESS ROUND 2

- Advisory bodies: Scientific Advisory Board; 2 Questionnaire Design Teams; Methods Group
- Partner 1: Overall design & coordination
- Partner 2: Sampling; Translation; Contract adherence
- Partner 3: Field commissioning; Context & event data
- Partner 4: Question reliability & validity
- Partner 5: Pilots; Data quality
- Partner 6: Archiving; Analysis training
- 22 National Co-ordinators & Survey Institutes (Country 1, Country 2, Country 3, etc.)

4.2 Schedule of meetings

As we have found during Round 1, a project of this scale and complexity needs to be held together by a sense of collective purpose and ‘ownership’, accompanied by strong mutual obligations. Otherwise, national interests will always tend to triumph over multinational interests. To achieve this sort of atmosphere, and above all to sustain it over the life of the project and beyond, regular face-to-face contact and discussion among key participants at all levels is invaluable. Individual voices need to be heard and difficult decisions arrived at by consent.

The table below shows the planned schedule of meetings for Round 2. Our experience from Round 1 confirms that such a schedule works well.

| Who | Frequency |
|---|---|
| Partners | 12 times – every two months |
| Scientific Advisory Board | 4 times – every 6 months |
| Methodological Committee | 3 times – on demand |
| National Coordinators (NCs) | 3 times before the start of fieldwork |
| Questionnaire design teams (QDTs) | 4 times during the questionnaire design process |

Representatives from the CCT will be present at all these meetings. In addition, the Commission’s Scientific Officer is given details of the schedule and is welcome to attend any scheduled meeting or to request ad hoc meetings with the PI or the Partners when the need arises.

4.3 Reporting procedure

The PI’s team will submit a Progress Report to the European Commission at six-monthly intervals. The first and third of these will contain a short description of the work done in the previous six months, including the scientific progress, management and timetable considerations, and the policy relevance where applicable. These reports will be no more than 5 pages long and will be submitted to the Commission’s Scientific Officer via e-mail.

The Progress Report at the end of the first year will be fuller – around 20 pages long – referring in more detail to the whole of the period and assessing progress towards all the Deliverables. It will also include an executive summary which will be submitted in electronic format. It will be submitted to the Commission in conjunction with cost statements.

The PI’s team will also submit a final report within two months of the termination of the contract. It will be suitable for a scientific assessment of the work without the need for referees to refer to any previous reports or papers. An edited version of this report will also be submitted to the Commission for publication.

5. Methodology

5.1 Participating countries

The following 24 countries are currently participating in the first round of the project, each taking responsibility for its own national co-ordination and data collection costs:

EU-countries: Austria, Belgium, Denmark, Finland, France, Germany, Greece, Ireland, Italy, Netherlands, Portugal, Spain, Sweden, United Kingdom

Candidate countries: Czech Republic, Hungary, Poland, Slovakia, Slovenia

Other countries: Israel, Liechtenstein, Norway, Switzerland, Turkey

In Round 2, we anticipate around 22 participating countries, allowing for the possibility of, say, two or three Round 1 nations dropping out of Round 2 and, say, one or two new nations joining in. As was the case at Round 1, until we get new or renewed commitments from each country, we cannot be certain of the precise number or identity of countries that will participate in Round 2. At a recent meeting of senior representatives of the Round 1 national funding agencies, unanimous support was expressed for a second round. Once again, we anticipate that national contributions should amount in total to around four times the Commission’s contribution.

5.2 Questionnaire structure and development

After the substantial development phase during Round 1, the content of the core questionnaire (around 30 minutes of an hour-long interview) is more or less fixed for Round 2 (and any future rounds) of the ESS. In determining the content of the core questionnaire, we kept in mind the primary purpose of the project, which is to measure and explain continuity and change in three broad domains:

People’s value orientations (their world views, including their religiosity, their socio- political values and their moral standpoints)

People’s cultural/national orientations (their sense of national and cultural attachment and their – related - feelings towards outgroups and cross-national governance)

The underlying social structure of society (people’s social positions, including class, education, degree of social exclusion, plus standard background characteristics such as age, household structure and gender)

Questions on people’s value orientations cover the following aspects:

Left-right orientations (egalitarianism and interventionism)

Libertarian-authoritarian orientations

Environmentalism

Basic human and moral values

Trust in institutions and confidence in the economy

Interest in politics and voting turnout

Personal and system efficacy

Religious orientation, present and past

Questions on the following aspects of cultural and national orientation will be part of the regular core:

Citizenship and national identity

Prejudice towards ‘outgroups’

Attitudes to the EU and other forms of multi-level governance

Attitudes to migration

The following socio-economic and socio-demographic items will form part of the regular core:

Respondent and household demographic characteristics

Education of respondent, children and partner

Racial/ethnic origin

Work status and unemployment experience

Occupation and SES of respondent and partner

Economic standing/income of household

Subjective health status of respondent

Social trust and networks

Objective and subjective indicators of poverty

Experience and fear of crime

Access to and use of mass media

The final part of the core is a supplementary questionnaire reserved for two purposes. First, it is the vehicle for a well-established 21-item scale developed by the Israeli psychologist Professor Shalom Schwartz, designed to classify respondents according to their basic value orientations. The second purpose of the supplement is methodological. It carries question wording experiments and other methodological tests designed to inform future rounds or generally to advance the state of the art.

Within the confines of a 30-minute core questionnaire, no topic can of course be tackled exhaustively. The two 15-minute rotating modules offer much greater scope for that.

In Round 1, we advertised Europe-wide for proposals from potential multinational Questionnaire Design Teams. The SAB then selected two teams from the applications. A similar Europe-wide competition will be held for the Round 2 QDTs. We hope that by being able to conduct the tendering procedure under a more relaxed time schedule than in Round 1 and within an academic environment that is more aware of the opportunity involved, we will receive a larger number of applications.

The development of the Round 2 questionnaire will follow the same stages as in Round 1, although in Round 2 more emphasis will of course be given to work on the rotating modules (with a relatively fixed core questionnaire). The development stages are as follows, and are all designed to ensure not only that all questions pass both a relevance and a quality threshold, but also that they can plausibly be asked within all participating nations.

Stage 1 The first stage - based on the teams’ proposals for the rotating modules (and specialist papers on the core) - tries to ensure that the various concepts to be included are represented as precisely as possible by the candidate questions and scales. Subsequent data users require source material that makes these links transparent, so the whole process is documented in detail (see below).

Stage 2 To achieve the appropriate quality standard, the proposed questions and scales undergo an evaluation using standard quality criteria such as reliability and validity. Where possible, the evaluations are based on the proven properties of similar questions in other surveys, but in the case of new questions, they are based on ‘predictions’ that take into account their respective properties. But validity and reliability are not the only criteria that matter. Attention is also given to other considerations such as comparability of items over time and place, expected item non-response, social desirability and other potential biases, and the avoidance of ambiguity, vagueness and double-barrelled items.

Stage 3 The next step constitutes the first translation from the source language (English) into one other language for the purpose of two large-scale national pilots. A translation panel of international experts guides this process to ensure optimal comparability between the two versions (see Stage 6).

Stage 4 The fourth step is a two-nation large-scale pilot (500 cases per country), which also contains a number of split-run experiments on question-wording alternatives. Some of these interviews are tape-recorded for subsequent analysis of problems and unsatisfactory interactions.

Stage 5 The pilot is then analysed in detail to assess both the quality of the questions and the distribution of the substantive answers. Problematical questions, whether on grounds of weak reliability or validity, or because they turned out to produce deviant distributions or weak scales, are sent back to the drawing board.

Stage 6 The final step is the production of a fully-fledged ‘source questionnaire’, incorporating the core and new rotating modules, ready for translation from English into the languages of all participating countries. The Translation Panel carries out or commissions a small number of cognitive interviews with translators who think aloud as they attempt to translate the source questionnaire, helping to identify recurring problems and to enable relevant annotations to be added to the source questionnaire. The various question authors also provide material for annotations. The aim of these annotations is to provide guidance to the translators on the intention behind, and proposed measurement properties of, each question. This is especially useful where the English words have no direct equivalents in certain other languages. Each participating country carries out a small-scale pre-test to iron out any remaining translation or substantive issues, a process which may in turn lead to further minor adjustments to the source questionnaire.

As noted, every stage of the above process is documented in detail and the resulting report is distributed to all national co-ordinators. It is also made available on the web both for subsequent users of the data and for other researchers planning similar cross-cultural studies.

5.3 Contextual and event data

All survey responses can be affected by timing and context. But certain types of attitudinal data are particularly prone to such effects. So we wish to integrate national and European-level contextual data into the datasets in order to increase their analytic power – in particular to help identify those national variations that owe more to exogenous factors than to underlying attitudinal differences. Country-based demographic and socio-economic macro statistics are only part of this story. Equally important are contextual socio-political factors in a particular country such as the proximity of an election, industrial or political unrest, or even a natural disaster. Sometimes a major event may occur in the middle of fieldwork, in which case responses before and after the event can be compared. We need to monitor circumstances or events in the period running up to fieldwork as well as during fieldwork itself.

In order to enable cross-national comparisons and historical micro analyses based on our data to take account of these sorts of factors, we are creating what might be referred to as an ‘event data bank’ which will briefly document all major political, social and economic factors that are likely to have a substantial bearing on a particular country’s (or a group of countries’) response patterns in a particular time period. Compiled largely by the NCs in a format provided by the CCT, the data are added to the national datasets before their release.

Routine country-specific background information is also important to an appreciation of subtle differences of context within participating countries. Many statistics collected and published by Eurostat already provide this information in an appropriate form, country by country. Examples are GDP, unemployment rates, measures of inflation, birth rates, longevity statistics, and so on. But by no means all participating countries are members of the EU, so some of these data may not be available in similar form for all participants. We nonetheless hope to be able to compile a parsimonious list of comparable background statistics for all participating countries (allowing for a few gaps) to accompany their datasets. The list will also include descriptive features of each society, such as its electoral system, the nature of its incumbent government, its religious profile, and the size, composition and distribution of its major minority groups.

5.4 The sample

The survey aims to be representative of all people in the residential population of each participating nation aged 15 years old and above (with no upper age limit), resident within private households, regardless of their nationality, citizenship or language. A ‘resident’ is defined as anyone who has been living in the country concerned for at least one year and who has no immediate concrete plans to return to his or her country of origin.

Although we recognise that the population of a country also includes the ‘homeless’ and people living in certain institutions (such as old age homes, hospitals and prisons), for reasons of economy and consistency, we exclude the homeless and parts of the institutional population from our universe.

In any country where a minority language is spoken as a first language by at least 5% of the resident population, the questionnaire is translated into that language too and appropriate interviewers will be deployed to administer the interviews.

In some countries, registers of persons or voters exist, from which national samples are routinely drawn, and they are supplemented where necessary to satisfy the ESS’s more inclusive definition of the universe.

Each national sample is selected by strict probability methods (random sampling) at every stage so that the relative selection probabilities of every sample member are known and recorded in the data set. Quota sampling will not be allowed at any stage. All interviews will be conducted face-to-face in people’s homes.

A key function in the study’s co-ordination is to ensure that each national sample is designed to give every member of the relevant population in that country a known, non-zero probability of selection. We have allowed in the budget for sampling specialists to visit a number of countries, as necessary, to help ensure that this aim is properly achieved.
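Recording a known, non-zero selection probability for every sample member is what allows design weights to be computed later. A minimal sketch, assuming a hypothetical multi-stage design in which the overall probability is the product of the stage probabilities; the function name and the figures are illustrative and are not taken from the ESS specification:

```python
from math import prod


def design_weight(stage_probabilities):
    """Return the inverse of the overall selection probability.

    stage_probabilities: the selection probability at each sampling stage
    (hypothetical values; each must be known and non-zero).
    """
    if any(p <= 0 or p > 1 for p in stage_probabilities):
        raise ValueError("each stage probability must lie in (0, 1]")
    # Overall probability is the product of the per-stage probabilities.
    return 1.0 / prod(stage_probabilities)


# Illustrative selection: area, then household within area, then person within household.
weight = design_weight([0.01, 0.05, 0.5])
```

A sample member with a lower overall selection probability receives a proportionally higher weight, which is only possible if every stage probability has been documented.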

Rather more important than each country’s sample size per se is its ‘effective sample size’, since different sample designs can generate very different standard errors, even when they are of the same size. In particular, the clustering of a sample into a number of discrete geographical areas (highly desirable on budgetary grounds in most surveys, including the ESS) produces statistical ‘design effects’ which reduce its ‘effective’ size. Thus, in combination, different national response rates and different ‘design effects’ often lead to large discrepancies in ‘effective’ sample sizes. We therefore specify the required sample in ‘effective’ terms, that is, as the size of the simple random sample that would produce the same standard errors as the design actually used. To achieve such symmetry, actual sample sizes will all be larger than this and will vary between countries. We also ensure through tight co-ordination that the actual sample design in all countries minimises the effects of different selection probabilities for different households - which would otherwise contribute further to design effects.

The minimum specified ‘effective’ sample size is 1500 in all countries, amounting to numerical sample sizes of at least around 2000 per country and more in some cases.
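The relationship between actual and ‘effective’ sample size can be sketched as follows. This is an illustration only: it assumes the widely used Kish approximation for the design effect of a clustered sample, deff = 1 + (b - 1) * rho, which the text above does not itself specify, and the cluster size and intraclass correlation used are made-up figures.

```python
import math


def kish_design_effect(mean_cluster_size, intraclass_correlation):
    """Approximate design effect due to clustering (Kish approximation, assumed here)."""
    return 1.0 + (mean_cluster_size - 1.0) * intraclass_correlation


def effective_sample_size(n_interviews, deff):
    """Size of the simple random sample giving the same standard errors."""
    return n_interviews / deff


def required_interviews(target_effective_n, deff):
    """Number of achieved interviews needed to reach a target effective size."""
    return math.ceil(target_effective_n * deff)


# Hypothetical design: 11 interviews per cluster, intraclass correlation of 0.05.
deff = kish_design_effect(11, 0.05)
needed = required_interviews(1500, deff)
```

Under these assumed figures the design effect is 1.5, so a country would need noticeably more than 1500 achieved interviews to reach the minimum effective size; a less clustered design would need fewer.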

Every step of the selection process has to be documented and ‘signed off’ in advance by a member of the expert task force on sampling that we have set up. At the same time, consideration is given to the procedures proposed for achieving higher than normal response rates in most countries and lower than normal variation between them. A minimum target response rate of 70 per cent is specified, recognising that this target is high (or very high) for certain countries but wishing nonetheless to raise rather than accept current norms. Although in the end response rates cannot be legislated for, they can be heavily influenced by insisting - as the specification will do - on certain fieldwork procedures that maximise the chances of recruiting elusive sample members.

5.5 Fieldwork and data preparation

For Round 2 of the ESS, as in Round 1, the mode of data collection is face-to-face interviewing in all countries, almost universally considered to be the most effective form of data collection for social surveys, both in respect of response rates and data quality.

In most countries, the fieldwork is sub-contracted to a high quality special fieldwork agency (whether governmental or commercial), rather than carried out in-house by the NC. We have developed a detailed central specification for all participating nations, containing details of what is required, by when and in what form. It specifies, for instance:

details of the sampling principles and methods

the target minimum response rate (70%) and the procedures needed to maximise it

outcome codes required for each address so that response rates and non-response are defined, documented and consistently calculated

the target maximum non-contact rate (3%) and procedures for minimising it

detailed procedures for randomising selected individuals at each primary unit

permitted assignment sizes for interviewers: no assignment should exceed 24 issued sampling units (i.e. 24 named individuals, households or addresses) and no interviewer should carry out more than two assignments

procedures for identifying ‘difficult-to-contact’ cases

the requirement to translate questionnaires into minority languages (‘the 5% rule’) and procedures for doing so

the requirement to conduct and document approved quality control back-checks (by telephone, by post or in person) on at least 5% of respondents, 10% of refusals and 10% of non-contacts

the ethical guidelines to be followed (International Statistical Institute norms)

the deadlines to be met and the forms of data delivery
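To illustrate how standard outcome codes allow the response and non-contact targets to be checked consistently, here is a minimal sketch. The outcome labels and the choice of denominator (all issued units minus ineligible ones) are assumptions made for illustration; the actual codes and formulas are those laid down in the central specification.

```python
from collections import Counter

TARGET_RESPONSE_RATE = 0.70   # minimum target stated in the specification
MAX_NON_CONTACT_RATE = 0.03   # maximum target stated in the specification


def fieldwork_rates(outcomes):
    """Compute response and non-contact rates from a list of outcome labels.

    The labels "interview", "non_contact" and "ineligible" are hypothetical.
    """
    counts = Counter(outcomes)
    eligible = sum(counts.values()) - counts["ineligible"]
    if eligible == 0:
        raise ValueError("no eligible sample units")
    return {
        "response_rate": counts["interview"] / eligible,
        "non_contact_rate": counts["non_contact"] / eligible,
    }


def meets_targets(rates):
    """Check both rates against the stated targets."""
    return (rates["response_rate"] >= TARGET_RESPONSE_RATE
            and rates["non_contact_rate"] <= MAX_NON_CONTACT_RATE)


# Made-up fieldwork outcome for 110 issued units, 10 of them ineligible.
outcomes = (["interview"] * 72 + ["refusal"] * 24
            + ["non_contact"] * 4 + ["ineligible"] * 10)
rates = fieldwork_rates(outcomes)
on_target = meets_targets(rates)
```

In this made-up case the response rate clears the 70% target but the non-contact rate does not stay within 3%, so the fieldwork would not meet both targets.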

Potential field agencies also have to supply details of, for instance:

their spread of interviewers, level of training and experience

their proposed arrangements for survey-specific briefing and training

their calling strategies to maximise response rates, e.g. the minimum number and spread of calls per address across times of day and days of week

their procedures and arrangements for translation

their arrangements for documentation and reporting

their existing and proposed supervisory and back-checking arrangements

Since it may later be necessary to post-stratify or weight the survey data to correct for non-response bias, a small number of extra data items (such as observable dwelling and area characteristics) are recorded by interviewers about every selected primary unit, whether or not they are successfully interviewed.
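As an illustration of what post-stratification involves, the sketch below computes one weight per cell as the ratio of the cell's known population share to its share among respondents. The cells and shares are hypothetical, and this is only one simple form of weighting, not necessarily the procedure that would be adopted.

```python
from collections import Counter


def post_stratification_weights(respondent_cells, population_shares):
    """Return a weight per cell: population share divided by sample share.

    respondent_cells: the cell label of each respondent (hypothetical labels).
    population_shares: known population proportion for each cell.
    """
    counts = Counter(respondent_cells)
    n = len(respondent_cells)
    weights = {}
    for cell, share in population_shares.items():
        if counts[cell] == 0:
            raise ValueError(f"no respondents in cell {cell!r}")
        weights[cell] = share / (counts[cell] / n)
    return weights


# Made-up example: one dwelling type is under-represented among respondents.
cells = ["flat"] * 20 + ["house"] * 80
weights = post_stratification_weights(cells, {"flat": 0.4, "house": 0.6})
```

Respondents in the under-represented cell receive a weight above 1 and those in the over-represented cell a weight below 1, so that the weighted sample matches the known distribution.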

We insist on the implementation of quality control procedures, involving meticulous, consistent and continuous checks within every participating country. Each nation, for instance, has to keep records of:

date, time and outcome of each call at each address

interviewer characteristics (including gender, age, education and certain value orientations)

reasons for non-response, coded into various detailed and pre-specified categories

detailed data about non-respondents (including, where possible, details of their gender, age band and housing conditions)

how representative each national sample is (relative to known population distributions)

full details of item non-response

details of interviewer variance in response rates and response sets

To maximise potential response rates, the overall duration of fieldwork should be between 30 and 90 days from a specified starting point.

The ‘official’ questionnaire is accompanied by an ‘official’ code-book that specifies the standard codes and data formats to be adopted by every participating country. Almost all questions are pre-coded to standard formats. But each country’s team carries out a series of pre-specified range and logic checks, except where these have already been done, as in countries that employ computer-assisted personal interviewing (CAPI).
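A range check confirms that a value is one of the codes permitted for an item; a logic check confirms that answers to related items are consistent with each other. A minimal sketch follows, in which all variable names, codes and rules are hypothetical rather than taken from the ESS code-book (only the lower age bound of 15 comes from the sample definition):

```python
def check_record(record):
    """Return a list of range and logic problems found in one interview record.

    All variable names and codes are hypothetical illustrations.
    """
    problems = []

    # Range check: the target population is aged 15 and over, with no upper limit.
    age = record.get("age")
    if age is None or age < 15:
        problems.append("age missing or below 15")

    # Range check: a pre-coded item must use one of its permitted codes (0 to 10 here).
    if record.get("trust") not in range(0, 11):
        problems.append("trust outside 0-10")

    # Logic check: weekly hours should be recorded only for those in paid work.
    in_paid_work = record.get("in_paid_work")
    hours = record.get("hours_worked")
    if in_paid_work is False and hours is not None:
        problems.append("hours recorded for respondent not in paid work")
    if in_paid_work is True and hours is None:
        problems.append("hours missing for respondent in paid work")

    return problems


clean = check_record({"age": 34, "trust": 7, "in_paid_work": True, "hours_worked": 38})
flagged = check_record({"age": 14, "trust": 11, "in_paid_work": False, "hours_worked": 20})
```

With CAPI, checks of this kind are typically built into the interviewing software, which is why they need not be repeated afterwards.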

We ensure overall contract adherence in each country via a series of established measures.

5.6 Archiving and dissemination

As in Round 1, all participating nations will undertake to submit their data to the Archive (Partner 6) in a pre-specified format and according to the specified timetable. In fact, there will be a choice of format, but each option will be readily convertible into widely used statistical package formats, or ASCII, without losing information. The available formats will be made known to all NCs well in advance of the fieldwork period so that all documents and procedures are prepared with the deposit stage in mind.

Once at the Archive, all the datasets will be assembled, merged, documented and disseminated in a user-friendly format within 6 months. A key function of the CCT during the post-fieldwork phase will be to avoid timetable slippages and ensure that these ambitious plans are faithfully implemented.

The Archive’s roles include the following tasks:

evaluating and assessing the content, structure and format of datasets and documentation

error checking, correction and validation, in consultation with the NCs, the CCT and where appropriate the QDTs

standardising or harmonising national variables and merging contextual data

merging all national data into an integrated multinational dataset

documenting the datasets according to the ‘DDI meta data standard’

converting the datasets, meta data and accompanying technical report into the DDI standard

generating portable and system files for all common statistical packages (SPSS, SAS, SYSTAT, etc), based on the generic DDI format

generating a single comprehensive codebook for integration into the technical report

incorporating the questionnaires into a universal document exchange format, such as PDF

producing a full range of indexes and associated meta data to enable searches and retrieval functions to be employed, including a multilingual thesaurus that enables users to search for key words in different languages

To ensure that the datasets and documentation are readily and speedily available, a meta database will be developed containing all textual elements (such as questionnaires, technical report, data definitions etc). All of the following measures will thus be employed in both the short and longer term:

the use of lasting media for data storage

doubling-up in backup systems

a state-of-the-art storage environment

storage of meta data in a generic format

regular control and migration of data to more timely storage media

regular migration of data files to appropriate formats

regular adjustments of conversion software to developments in statistical package formats

In accordance with data protection regulations in various countries, only anonymised data will be made available to users. As an extra precaution, each national team will check their own data with confidentiality in mind before deposit.

Distribution of data will be offered at no charge to the scientific community via several media and will, where possible, be tailored to the preferences of individual users. Data users will have access to data either directly from Partner 6, or via CESSDA/IFDO archives. Within the same general conditions of access, other Archives will be free to develop and implement their own technologies for access and distribution.

The availability of both online download (via the Internet) and offline distribution (via disks) will give maximum flexibility to users, who will receive the data ready for analysis in all common statistical packages, together with the technical report, codebook and questionnaires in a universal document exchange format.

The CCT will determine the content and structure of the meta data documents that accompany the dataset, but they too will be available within six months of the deposit of national datasets to the Archive. Until then, trial datasets will be made available in confidence to the NCs, the QDTs and the CCT so that preliminary analyses may be undertaken as a means of ‘proving’ the data in advance of their widespread distribution.

The website will contain full details of the data content, access arrangements, codebooks and other documentation. In addition, news about the project will be distributed to mail groups of users. Over time, all articles, books and papers based on the project – whether substantive or methodological - will be documented and catalogued by the Archive.

A publicly-available and well-documented dataset of this quality and range will clearly be in wide demand among data analysts of all sorts, whether in mainstream academia, policy research institutes, government or the private sector. As noted above, the NCs, QDTs and the CCT will have a small lead among potential users arising out of their involvement with the trial dataset. Although they will be free to exploit that lead and to be among the earliest to publish findings after the dataset has been released, we will on no account delay publication to exaggerate that natural advantage. As soon as the dataset is ‘proved’, it will be released to the social science community at large with as much fanfare as we can muster.

As far as possible we will present the dataset in a way that encourages its full potential to be realised. So we will use what influence we have via the release or accompanying documentation to discourage its use as just a ‘league table’ of nations in relation to whatever variable comes to mind. Too many comparative datasets are used predominantly in that way and therefore tend to raise as many questions as they answer, notably about the source of such differences (whether historical, cultural, political or circumstantial). Part of the reason for including event and contextual data in the dataset, plus such a wide range of background variables for each country, is that it will help encourage secondary analysts to take due account of variations in each country’s circumstances and arrangements as possible explanations for differences in individual attitudes and behaviour patterns. This should add an important dimension of explanatory power to the dataset and to the analyses that emerge from it.

5.7 Methodological experiments and developments

A new feature of Round 2 of the ESS will be a comparison of the quality of survey measures in the different countries. Such a comparison is necessary because it is hazardous to compare results from different countries without a correction for measurement error. Otherwise, no distinction is possible between substantive differences and variations in data quality. We therefore propose to carry out a modest test of differences in the quality of measures in all participating countries within the self-completion questionnaire supplement. These experiments will be designed during the early stages of Round 2 and carried out during the main fieldwork period. Partner 4 will be responsible for the design and analysis of the experiments, which would employ a split ballot Multitrait-Multimethod design to establish the validity and reliability of the measures. The results will be written up in time for them to inform subsequent analysis and to provide ways of mitigating residual problems in the data.
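The hazard can be illustrated with the classical correction for attenuation, under which an observed correlation equals the underlying correlation multiplied by the square root of the product of the two measures' reliabilities. The sketch below is a simplification that ignores method effects (which a Multitrait-Multimethod design would also estimate), and the reliability figures are made up rather than ESS results.

```python
import math


def observed_correlation(true_corr, reliability_x, reliability_y):
    """Correlation expected in the data given random measurement error."""
    return true_corr * math.sqrt(reliability_x * reliability_y)


def corrected_correlation(observed_corr, reliability_x, reliability_y):
    """Classical correction for attenuation (inverse of the function above)."""
    return observed_corr / math.sqrt(reliability_x * reliability_y)


# Two hypothetical countries with the same underlying correlation (0.5)
# but different measurement quality for both items.
country_a = observed_correlation(0.5, 0.81, 0.81)
country_b = observed_correlation(0.5, 0.49, 0.49)
```

In this made-up case the two countries would show clearly different observed correlations even though the underlying relationship is identical, and applying `corrected_correlation` with each country's reliabilities would recover the common value.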

A second new feature of Round 2 will be its contribution to training in survey measurement and analysis in Europe. We have already referred to the fact that the ESS is intended as a standard-bearer for methodological rigour, making all its procedures as accessible and as transparent as possible - from mundane accounts of various stages of questionnaire construction to sophisticated statistical tests based on embedded experimental designs. Indeed, to publicise and disseminate this work, several of the national grant applications are attempting to make provision for seminars and conferences on both substantive and methodological aspects of the project.

But we also intend at Round 2 to provide an internet-based training resource for students of comparative research, based on the data collected at each round of the project – an excellent extra way of announcing and promoting the results to the social science community at large. The new training programme will be accessible via standard web-browsers connected to the Internet.

It will consist of two or more learning packages, each containing a carefully-compiled and thoroughly-documented dataset. Each dataset will consist of variables from one or more of the topics in the survey, as well as background variables and relevant contextual data. Examples of possible topics are attitudes towards immigrants, political participation and political interest, and moral values.

For each learning package, on-line study material will be available that has been developed by leading European scholars in the field, plus examples and guidelines on the limits of survey data. Students will thus be guided gently through the process of empirical analysis. The intention of these packages is to inspire and stimulate both the students' curiosity and the collaborative spirit of comparative research. The learning packages will be designed with an increasing degree of complexity, from basic descriptive statistics to advanced multivariate and multilevel analysis. But while descriptive analysis and graphics will be fully integrated into the web site, more advanced statistical analysis will require datasets to be downloaded into all standard statistical packages – an available option within the package which might well attract more sophisticated users too.

The main users of the training resource are likely to be European postgraduate students with limited training in social science methodology, an important community to introduce both to the dataset itself and to quantitative data more generally. The packages may, however, also appeal to students in secondary education, for instance in their empirical coursework.

A third methodological refinement in the second round will be to make better use of the national pre-tests than we did in Round 1, where they were designed only to be ‘dress rehearsals’ of the translated questionnaire in all countries. Important though this function is, we missed the opportunity presented by the fact that 30 trial interviews in each of some 20 countries adds up to a sizeable database that would sustain a measure of generic analysis. Had a sample of these pre-test interviews been tape-recorded, transcribed and translated, we might well have been able to spot and rectify certain generic weaknesses in questions that were likely to affect their reliability and validity at the main stage. Discovering and attempting to rectify these problems in retrospect is clearly a less good option.

So we will seriously consider using part of the methodology budget in Round 2 for enhanced use of the multi-nation pre-tests at the final stage of questionnaire design.


6. Deliverables and milestones

6.1 Deliverables

The following table lists the project’s principal deliverables:

| Deliverable No | Deliverable Title | Delivery Date (note 15) | Nature (note 16) | Dissemination Level (note 17) |
| --- | --- | --- | --- | --- |
| 1 | Report on fieldwork contracts | 10 | R | CO |
| 2 | Report on pilot question reliability | 15 | R | RE |
| 3 | Sampling Report | 16 | R | RE |
| 4 | Report on questionnaire equivalence | 18 | R | RE |
| 5 | ESS Final Report | 26 | R | PU |
| 6 | Quality Assessment Report | 26 | R | PU |
| 7 | Report on contract adherence | 26 | R | CO |
| 8 | Report on process quality | 26 | R | RE |
| 9 | Report on main stage question reliability | 26 | R | PU |
| 10 | Data Set and Code Book | 26 | Other | PU |
| 11 | Fully archived dataset | 26 | Other | PU |
| 12 | Data distribution mechanism | 26 | Other | PU |
| 13 | Information and support system for ESS data users | 26 | Other | PU |
| 14 | Internet-based learning resource | 26 | Other | PU |
| 15 | Database of contextual and event data for all participating countries | 26 | Other | PU |

15 Month in which the deliverables will be available, with Month 0 marking the start of the project and all delivery dates being relative to this start date.

16 R = Report; W = Workshop involving people external to the project; C = Conference.

17 PU = Public; RE = Restricted to a group specified by the consortium (including the Commission Services); CO = Confidential, only for members of the consortium (including the Commission Services).

6.2 Milestones

The major milestones of the project are as follows:

| Milestone | Date of Milestone |
| --- | --- |
| Questionnaire Design team appointments | T4 |
| National Coordinators and field agency appointments | T8 |
| Questionnaire development | T9 |
| Field quantitative pilots | T12 |
| Sample design and selection | T15 |
| Translation | T17 |
| Database design | T19 |
| Main fieldwork | T21 |
| Data coding/cleaning | T23 |
| Data deposit to NSD Archive | T23 |
| Data checking/weighting | T25 |
| Data proving | T26 |
| Data release | T26 |
| Technical reporting | T26 |

7. Exploitation plan

The central aim of this project is to produce an exemplary multinational dataset and to make it freely and speedily available to the academic social science and social policy communities in Europe and beyond.

We will nonetheless strongly encourage researchers within the Partner institutions, as well as the NCs and QDTs, to make early use of the dataset as a rich source for primary analyses and reporting. But no funds are to be made available for such work and under no circumstances will we delay release of the dataset to accommodate it.

So the dissemination and exploitation activity within the project is of three kinds.

First, we will create and document a rigorous new dataset and distribute it widely via the most up-to-date electronic and other means available.

Secondly, we will make available via the web and other means all the working papers, background papers and methodological reports that we produce in the course of our work. These will act as a vital ‘record’ not only for the benefit of data analysts, but also for future rounds of ESS and for comparative research more widely. We will also produce and encourage journal articles on sampling, translation, question equivalence, data quality and so on, based on the outcomes of experiments and tests embedded into the survey design. And we will continue to give presentations at national and international meetings on important aspects of the project design and implementation.

Thirdly, we will be developing an internet-based training resource for students of comparative research, based on ESS data – an excellent extra way of announcing and promoting the results to the social science community at large (see Section 5.7).

Outside the timetable of the project itself, we intend to produce an edited volume outlining the survey’s methodology - its strengths and weaknesses, and what we can learn from the undertaking.
