Theme: Effects of non-sampling errors on the total survey error

0 General information

0.1 Module code

Theme – Effects of non-sampling errors on the total survey error

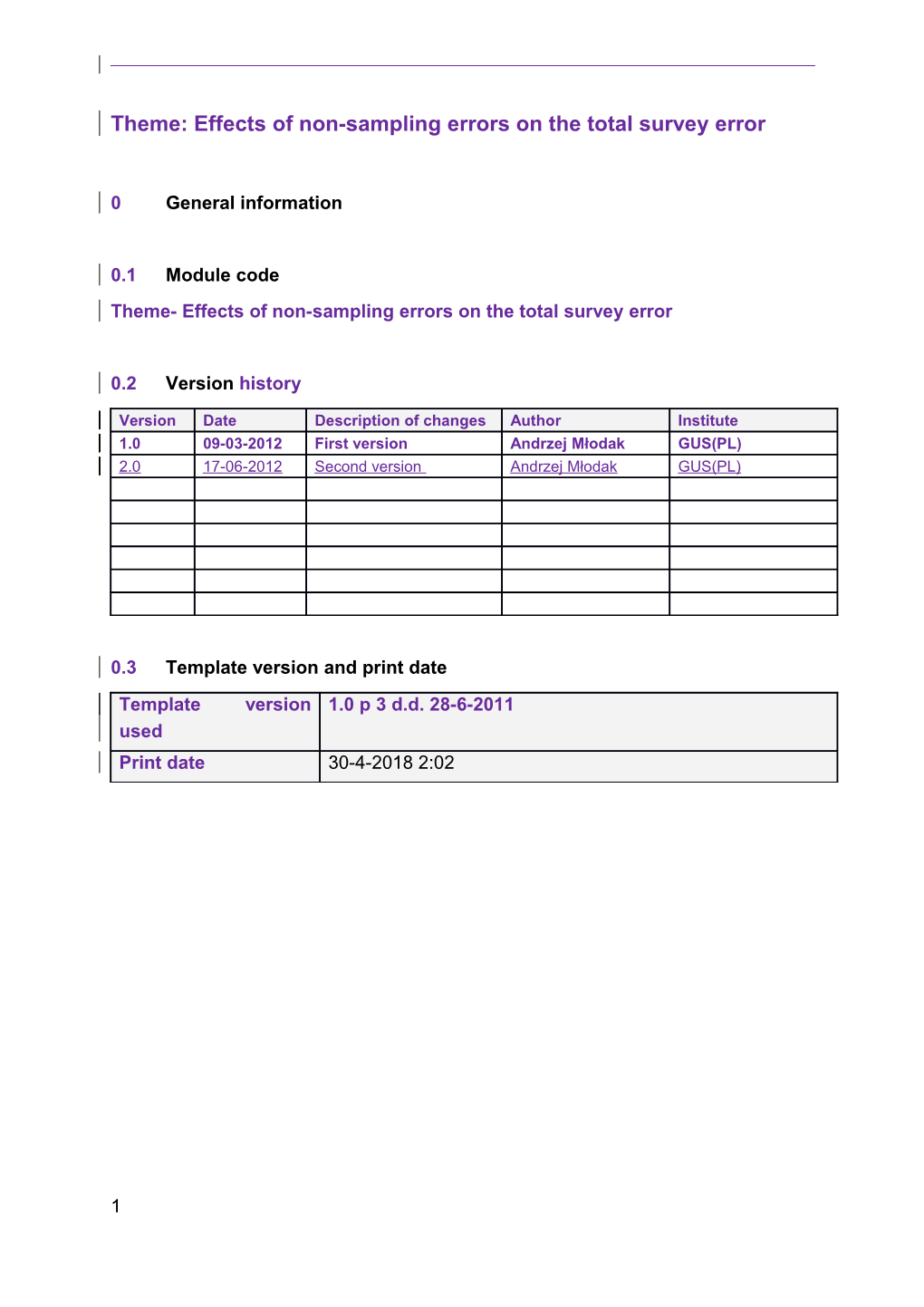

0.2 Version history

Version | Date | Description of changes | Author | Institute
1.0 | 09-03-2012 | First version | Andrzej Młodak | GUS (PL)
2.0 | 17-06-2012 | Second version | Andrzej Młodak | GUS (PL)

0.3 Template version and print date

Template version used: 1.0 p 3 d.d. 28-6-2011
Print date: 30-4-2018 2:02


General section – Theme: Effects of non-sampling errors on the total survey error¹

1. Summary

In this module we present the main problems connected with the impact of non-sampling errors on the total survey error, and their connection and interaction with sampling (random) errors. We will show that non-sampling errors can affect sampling errors (e.g. because the efficiency of the selected sampling scheme depends on the quality and properties of the sampling frame and on the type of estimators used). The module classifies the effects of frame errors, measurement errors, processing errors and non-response errors. This is followed by a deeper analysis of the different impacts of unit non-response and item non-response. The importance of models in survey sampling is also discussed in this context.

2. General description

2.1. Frame errors

As stated in the main theme module and in the module “Populations, frames, and units of business surveys” (both contained in the chapter “Statistical Registers and Frames”), the survey frame identifies the statistical units of the population under study that are observed, i.e. measured, by a survey. It not only enumerates the units of the population but also provides the information required to identify and contact them. The set of these units is called the frame population. Recall, on the basis of the aforementioned sources, that the quality of the survey frame affects the quality of the survey’s final results. In this context, the coverage of the target population by the survey frame (frame population), the accuracy of the contact and stratification attributes of the units, the up-to-dateness and timeliness of the information about units, and the quality of administrative registers in terms of their basic characteristics are important factors affecting the quality of the sampling frame and, consequently, of sampling, data collection, processing and analysis. The proper construction of the frame constitutes the basis of efficiently planned statistical surveys. It should take into account the final goal of the survey as well as the resulting statistics to be collected. Data should also refer to a given time interval. Therefore, the preparation of the frame has to be based on available administrative registers which are permanently updated and controlled.

¹ I’m grateful to Mrs. Judit Vigh (Hungarian Central Statistical Office) for valuable comments and suggestions.

Despite careful treatment of the databases used for frame definition, some errors are unavoidable. The problem is how to identify them and how to minimize their impact on the final results of the survey. One of the main problems related to frame errors is under- or over-coverage of the population, both of which denote deviations between the frame population and the target population. Namely, under-coverage occurs if some units belonging to the target population are not included in the frame population, while over-coverage is observed if some units included in the frame population do not belong to the target population. As a result of under-coverage, no observations are collected for a part (sometimes a large one) of the target population, and therefore the statistics compiled for the population may be seriously biased. Under-coverage can also make variance estimates inadequate. For example, if the omitted part of the population includes some outliers which have no ‘equivalents’ in the actual frame, the variance will be underestimated. On the other hand, if all outliers (which are relatively few) are included in the frame and the omitted units are ‘typical’, then the variance tends to be overestimated. Over-coverage occurs when the frame contains units that are of no interest. M. Bergdahl et al. (2001) state that over-coverage may be regarded as an ‘extra’ domain of estimation and that one of its consequences (in comparison with no over-coverage) is an increase in uncertainty when estimating the ‘regular’ domains. If the unit’s membership in the target population is not checked, the estimates may also be biased. The number of ‘defective’ units is the main factor determining the level of inaccuracy of survey results.
Reducing this problem can therefore be a major concern for the researcher. M. Bergdahl et al. (2001) correctly observe that a unit outside the target population which receives a questionnaire may be either more or less inclined to return it than a unit belonging to the target population: on the one hand, it is easy to return; on the other hand, there seems to be no reason to fill it in. Over-covered units may similarly be unwilling to respond, feeling that their participation in the survey is redundant. Such problems vary in character and source. The most important ones are presented below.
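The definitions of under- and over-coverage above can be made concrete with a small, purely illustrative computation. The sketch below is not part of the cited methodology: it assumes that identifiers in the frame and in the target population can be compared directly (e.g. after linkage with an administrative source), which in practice is only approximately possible, and all unit identifiers and turnover values are hypothetical.

```python
# Minimal sketch (hypothetical data): under- and over-coverage of a frame.
# Assumes unit identifiers in the frame and in the target population are
# directly comparable, e.g. after linkage with an administrative source;
# in practice the target population is known only approximately.


def coverage_rates(frame_ids, target_ids):
    """Return (under-coverage rate, over-coverage rate).

    Under-coverage: share of target units missing from the frame.
    Over-coverage: share of frame units outside the target population.
    """
    frame, target = set(frame_ids), set(target_ids)
    under = len(target - frame) / len(target)
    over = len(frame - target) / len(frame)
    return under, over


def frame_total(values, frame_ids, target_ids, check_membership=True):
    """Total of a variable over the frame units.

    With check_membership=False, out-of-scope (over-covered) units are
    counted as well, illustrating the bias of unchecked over-coverage.
    """
    target = set(target_ids)
    return sum(values[u] for u in set(frame_ids)
               if not check_membership or u in target)


target = ["A", "B", "C", "D", "E"]   # target population
frame = ["A", "B", "C", "X", "Y"]    # D, E missing; X, Y out of scope
turnover = {"A": 10, "B": 20, "C": 30, "D": 40, "E": 50, "X": 5, "Y": 15}

print(coverage_rates(frame, target))                # (0.4, 0.4)
print(sum(turnover[u] for u in target))             # 150 (true total)
print(frame_total(turnover, frame, target, False))  # 80
print(frame_total(turnover, frame, target, True))   # 60
```

In this toy example, under-coverage lowers the total from 150 to 60; counting the out-of-scope units X and Y without a membership check raises it to 80, which only masks part of the under-coverage bias rather than correcting it.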


One significant factor contributing to over- or under-coverage is the existence of differences within the population. Of course, such differences for the whole population can also be reflected in any subpopulation. Moreover, under-coverage in one domain can simultaneously be over-coverage in another. For example, if we are going to conduct simultaneous sub-surveys of enterprises employing up to 10 persons and more than 10 persons, then a unit correctly belonging to the first group but erroneously included in the latter will be under-covered in the subpopulation of smaller businesses and over-covered in the subpopulation of larger units. However, such differences between units may sometimes be balanced, i.e. if some units are erroneously included in the higher subpopulation while others are incorrectly classified in the lower one. So, some differences may ‘cancel out’ and hence the bias and variance of statistics for the whole population may not be significantly distorted. But in business statistics such situations seem to be quite rare and may concern only some features. Many differences (e.g. in terms of the number of employees) are very hard to ‘balance out’ in this way. In general, problems with the sampling frame result from the quality of data for the statistical features characterizing the population and chosen as classifiers for possible subdivisions. These data usually come from administrative registers, from the same survey conducted in previous periods or from a pilot survey, if applicable. There are different types of such errors, e.g.:

not registering an active enterprise or not removing an inactive enterprise from the register (despite relevant legal obligations), the ‘shadow economy’ syndrome,

improper classification codes (e.g. an erroneous SIC code, NACE code or territorial code, i.e. the code of a unit of territorial division, where the enterprise is located),

poor identification of local units or internal kind-of-activity units (KAUs) within a given enterprise (e.g. no information about the subsidiaries of a parent company),

incorrect or outdated basic data submitted during the registration process (e.g. in Poland every economic entity is obliged to submit information about every change in its legal status, ownership or organizational structure and the number of employees to the National Official Register of Entities of National Economy (REGON), but there are many cases, especially among smaller businesses, where this obligation is not fulfilled),

problems with the quality of data from previous editions of a survey (e.g. uncorrected errors in the register, non-sampling errors, non-response, imputation inaccuracy, etc.).

How to cope with these problems? Some of them are hard to identify and can remain undetected (for example erroneous SIC or NACE codes). Others can be detected for sampled units (e.g. the number of employees or data about local units) as well as at the population level (for example through a general update of SIC and NACE codes between sampling and estimation). One can also improve the imputation methods used in previous surveys and recompute the relevant results; the quality of the current frame should then improve as well. But the most efficient way may be to combine data from various existing administrative sources. Economic entities have reporting obligations (under current regulations) towards other institutions that are better empowered to enforce reporting, e.g. tax offices or social insurance agencies, so their databases may be an essential support for official statistics. A comparison of the Business Register with such sources can help to reveal and correct many errors in sampling frames. However, one should take into account possible differences in the concepts used in such sources. The main differences usually concern the definitions of units of interest adopted by the authorities which administer these registers. For instance, the definition of the taxpayer in a tax register may differ from that of the respondent unit in a statistical frame (an enterprise usually pays taxes where it is based, regardless of where its LAU/LKAU units are actually located). See also Eurostat (2009a) for many examples of such problems. M. Bergdahl et al.
(2001) list many advantages and drawbacks of using such registers in the UK and Sweden. In Poland, for example, one can rely on the so-called Base of Statistical Units, a set of data based on the state business register (REGON) which is permanently updated using data from statistical surveys and some administrative sources (e.g. the Social Insurance Institution or the National Court Register). It usually constitutes the main sampling frame for most business surveys. The tax register can be another valuable source of information. Because practically every legally active enterprise transfers advance tax payments (such as Personal Income Tax, PIT) on the wages and salaries of its employees and most enterprises are VAT (Value Added Tax) payers, a lot of their financial data are collected by tax offices in the central tax register. These are data about e.g. costs, revenue, investments and employment, and they can therefore be used to verify current statistical information. Of course, there are sometimes difficulties resulting from the fact that such data are often stored in various, differently structured sub-databases (e.g. different databases for PIT and VAT data). Therefore, combining these sets may not be as easy as one might expect, but it can be done. This task can be facilitated by using the tax identification number (for economic entities), which contains information about KAUs or local units. Of course, there may be situations where verification of some data is impossible, e.g. in some countries large units may report VAT by type of activity with respect to their local units; such cases should then be analyzed individually (possibly by applying some kind of estimation). The scale of differences which can occur between various administrative registers and data collected directly from respondents during surveys is discussed by A. Młodak and J. Kubacki (2010), who prepared the methodology and suggestions about the typology of individual farms for the National Agricultural Census 2010 and compared two main databases created for experimental purposes: the ‘master record’, combining data from administrative sources such as the Tax Register of Real Estate or a database maintained by the Agency for the Restructuring and Modernization of Agriculture, and the ‘golden record’, containing relevant data about the same group of farms but collected during the test census conducted several months before the main one. Due to significant differences between the original sources in terms of timeliness and scope of information, many discrepancies within the data could be observed.
For example, in many cases the area of agricultural land under cultivation was larger than the total area of agricultural land; for some records, the area of meadows and pastures was larger than the total area of agricultural land; for many others, the area of arable land was larger than the total area of agricultural land, or the area of meadows and pastures was positive while the area of agricultural land under cultivation amounted to 0. In total, over 37% of records were defective. Differences between the ‘master record’ and the ‘golden record’ were also very clear. Similar problems may well occur in business surveys in the strict sense. In general, final inaccuracies depend on the extent of coverage deficiencies, the possibility of and effort made to detect them, and the efficiency of the relevant actions, such as imputation or estimation. The most important elements of such strategies which should be taken into account are presented below (cf. M. Bergdahl et al. (2001)):

to eliminate duplicates present due to the use of different sources, it is desirable to perform record identification,

business registers can strongly depend on current legal regulations in a given country,

questionnaires may be returned by post offices because the address is no longer valid,

the term ‘frame error’ is not always an accurate description; ‘coverage deficiency’ is often more adequate, as it shows the consequence rather than just blaming the frame, for example for not having included mergers of January 2012 in a frame constructed at the end of 2011,

the frame should not just include enterprises that are active at the time of the frame construction but all enterprises that have operated in a given year, whether active the whole year or only part of the year,

accuracy can be improved by stratification according to SIC or NACE codes (corresponding to domains: each stratum is equal to – or more detailed than – a domain), where strata with largest size have selection probabilities close to one,

it is worth using additional information from outside the frame database, if possible; this can help to reduce bias and variance through relevant corrections and updates,

if we update the sample with data from different sources, the bias will be significantly reduced; however, since such information refers only to the sample, its inclusion – especially when it is a rare characteristic – can increase variance,

post-stratification based on auxiliary information can be useful.

Inaccuracy caused by coverage deficiencies can be measured in three different ways (cf. also M. Bergdahl et al. (2001)):

by reviewing updating procedures of the Business Register to look for time delays,

by comparing units in the Business Register before and after the update using special distance measures (e.g. the sum or average of Gower’s distances between particular observations),

by approximately computing the level of inaccuracy of the estimated population statistics and comparing them (e.g. using Student’s t-test).
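A minimal sketch of the second and third ways listed above, assuming that records before and after the update can be matched by a common identifier. Gower’s distance is computed in its usual form (range-scaled absolute differences for numeric variables, simple matching for categorical ones, averaged over variables), and a paired Student t statistic is used for the mean change of one numeric variable. The variable names (`emp`, `nace`) and all data are hypothetical, and only the Python standard library is used, so the resulting statistic still has to be compared with quantiles of the t distribution with n-1 degrees of freedom.

```python
import math
import statistics

# Minimal sketch (hypothetical data): comparing Business Register records
# before and after an update with Gower's distance, and testing the mean
# change of a numeric variable with a paired Student t statistic.


def numeric_ranges(records, numeric_vars):
    """Range of each numeric variable over all records (used by Gower)."""
    return {v: max(r[v] for r in records) - min(r[v] for r in records)
            for v in numeric_vars}


def gower_distance(rec_a, rec_b, ranges):
    """Gower's distance between two records with the same keys.

    Numeric variables (those in `ranges`) contribute |a - b| / range,
    categorical ones 0 if equal and 1 otherwise; the result is the mean.
    """
    parts = []
    for var in rec_a:
        a, b = rec_a[var], rec_b[var]
        if var in ranges:
            r = ranges[var]
            parts.append(abs(a - b) / r if r > 0 else 0.0)
        else:
            parts.append(0.0 if a == b else 1.0)
    return sum(parts) / len(parts)


def paired_t(before, after):
    """Paired t statistic for the mean change; compare with t(n-1) quantiles."""
    diffs = [a - b for a, b in zip(after, before)]
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.mean(diffs) / se


before = {"u1": {"emp": 8, "nace": "C10"}, "u2": {"emp": 50, "nace": "G47"},
          "u3": {"emp": 120, "nace": "C25"}}
after = {"u1": {"emp": 12, "nace": "C10"}, "u2": {"emp": 50, "nace": "G46"},
         "u3": {"emp": 118, "nace": "C25"}}

common = sorted(before.keys() & after.keys())   # units present in both versions
ranges = numeric_ranges(list(before.values()) + list(after.values()), ["emp"])
dists = [gower_distance(before[u], after[u], ranges) for u in common]
print(sum(dists), sum(dists) / len(dists))      # sum and average distance
print(paired_t([before[u]["emp"] for u in common],
               [after[u]["emp"] for u in common]))
```

The paired test fits the case where the same units are observed before and after the update; comparing estimates based on different sets of units would call for a two-sample version instead.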


Statistics Canada² (2012) recommends, where possible, using the same frame for surveys with the same target population, in order to improve coherence, avoid inconsistencies, facilitate combining estimates from consecutive surveys and reduce the costs of frame maintenance and evaluation, and using multiple frames (if they exist) to assess the completeness of one of them. On the other hand, in a ‘typical’ situation, i.e. if a single existing frame is adequate, the authors of the cited document recommend avoiding the use of multiple frames. They also suggest ensuring that the frame is as up-to-date as possible with respect to the reference period of the survey, and retaining and storing all information on the actions performed within the sampling design (i.e. rotation and data collection), so that coordination between surveys can be achieved and respondent relations and response burden can be better managed. Another recommendation is to determine and monitor coverage and to negotiate required changes with the source manager (for statistical activities based on administrative sources or for derived statistical activities, where coverage changes may be outside the control of the immediate manager), and to adjust the data or use supplementary data from other sources to offset the coverage error of the frame. According to Statistics Canada (2012), other recommended measures include: training and procedures for data collection and data processing staff aimed at minimizing coverage error (e.g. procedures to ensure accurate confirmation of lists of dwellings for sampled area frame units); procedures aimed at eliminating duplicates and updating for births, deaths, out-of-scope units and changes in characteristics; frame updates in the timeliest manner possible; reviewing and improving the identification of target units that were missed or wrongly coded, and putting in place procedures to minimize this problem; detecting and minimizing errors of omission and misclassification that can result in under-coverage; and detecting and correcting errors of erroneous inclusion and duplication resulting in over-coverage. According to the cited document, the survey documentation should contain definitions of the target and survey populations, any differences between them, a description of the frame and its coverage errors, and a report on the known gaps between key user needs and survey coverage. These postulates, together with other important issues, are some of the most important elements of the quality framework initiative (cf. M. Colledge (2006)).

² http://www.statcan.gc.ca/pub/12-539-x/2009001/coverage-couverture-eng.htm

In this context it is also important to use GIS (Geographic Information System) to verify the spatial location of units and their delineation, or the limits of industrial (or other business activity) areas. It is also necessary to improve the qualifications of staff involved in these surveys through permanent training aimed at minimizing costs and maximizing the efficiency of statistical research.
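The elimination of duplicates and the updating for births, deaths and out-of-scope units recommended by Statistics Canada (2012) can be sketched as follows. This is an illustration under stated assumptions, not a procedure taken from Statistics Canada (2012) or M. Bergdahl et al. (2001): identifiers from different sources are assumed to match once non-digit characters are removed, the first source is assumed to take precedence, and the scope rule, identifiers and NACE codes are hypothetical.

```python
import re

# Minimal sketch (hypothetical data and rules): building a frame from two
# sources with duplicate elimination, then applying births, deaths and an
# out-of-scope rule. The ID format and the scope rule are assumptions.


def normalize_id(raw):
    """Keep digits only, so '111-222-33-44' and '1112223344' match."""
    return re.sub(r"\D", "", raw)


def merge_sources(*sources):
    """One record per normalized ID; earlier sources take precedence and
    later ones only fill in fields that are still missing (None)."""
    frame = {}
    for source in sources:
        for rec in source:
            key = normalize_id(rec["id"])
            merged = frame.setdefault(key, {"id": key})
            for field, value in rec.items():
                if field != "id" and merged.get(field) is None:
                    merged[field] = value
    return frame


def apply_update(frame, births, deaths, in_scope):
    """Add births (existing IDs are kept), remove deaths, then drop
    units that fail the scope rule."""
    updated = dict(frame)
    for rec in births:
        key = normalize_id(rec["id"])
        updated.setdefault(key, {**rec, "id": key})
    for raw in deaths:
        updated.pop(normalize_id(raw), None)
    return {k: r for k, r in updated.items() if in_scope(r)}


register = [{"id": "111-222-33-44", "nace": "C10", "emp": None},
            {"id": "5556667788", "nace": "G47", "emp": 4}]
tax = [{"id": "1112223344", "nace": None, "emp": 12},
       {"id": "999-000-11-22", "nace": "K64", "emp": 3}]

frame = merge_sources(register, tax)   # 3 units, duplicate merged
frame = apply_update(
    frame,
    births=[{"id": "444-555-66-77", "nace": "F41", "emp": 20}],
    deaths=["555-666-77-88"],
    # hypothetical scope rule: exclude NACE section K (financial activities)
    in_scope=lambda r: not (r.get("nace") or "").startswith("K"),
)
print(sorted(frame))   # ['1112223344', '4445556677']
```

The merged record for the duplicated enterprise combines the activity code from the register with the employment figure from the tax source; in real applications the precedence rule would have to reflect the relative quality of each source for each variable.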

2.2. Measurement errors

Measurement errors are connected with the value of a given variable reported by the respondent or interviewer or by survey instruments. Measurement errors occur if the registered value differs from the true one (which, in this case, is assumed to exist without ambiguity). The absolute value of this difference may be regarded as the size of the measurement error. Of course, in most cases the true values are not known, which is why more specialized methods of detecting such errors and estimating their magnitude are necessary. The responsibility for measurement errors may lie with the respondent, the interviewer or any other person involved in entering data into the relevant computer systems. Errors of the first kind can also be described as ‘response errors’. Problems on the part of the people conducting or supervising the survey are closely connected with processing errors and will therefore be analyzed together with them in the following sections. M. Bergdahl et al. (2001) argue that response errors may arise from three sources. The first one is the fact that true values can be unknown or difficult to obtain. This is because of differing reference periods of reporting: for example, those used for financial purposes may differ from those used in statistics. The financial year may differ from the calendar year (e.g. in Poland the financial year can be any 12 consecutive calendar months, which need not correspond to a calendar year; on the other hand, the tax year is shorter if a unit started its economic activity in the middle of the calendar year), and sometimes in accounting it is assumed for simplicity that each month has 30 days, etc. If the survey is conducted after the legally required period of storing transaction documents (e.g. ad hoc purchases, claims or obligations) has expired, the respondent may no longer have access to the information, because the relevant documents have been destroyed. Such problems negatively affect the final aggregated results for typical periods, generating bias. Measurement errors can also result from misunderstood questions or other slips. They can be caused by imprecise instructions in questionnaires or by respondents’ failure to read them. Respondents may find it difficult to recall information or may lack the relevant knowledge and therefore give only rounded figures. All these problems may result in e.g. data being reported in the wrong units, data being entered in the wrong cell, or strong over- or underestimation of the aggregates. Such measurement errors can be reduced by careful control of current data, clarification of doubts and comparisons with data from previous survey editions. On the other hand, errors may also be present in the information used by the respondent, who may be unaware of this fact or unable to correct them. If the respondent uses information from various computer databases, it may have been entered by staff with errors that are hard to identify. Comparisons with previous periods and available administrative databases (e.g. taxes) can help to detect at least some of them. J. Banda (2003) states that non-sampling errors occur because procedures of observation or data collection are not perfect, and that their contribution to the total error of the survey may be substantial, thereby adversely affecting survey results. In general, errors can occur for the following reasons:

failure to understand the question,

careless and incorrect answers,

not enough time for the respondent to carefully analyse the questionnaire,

respondents answering questions even when they do not know the correct answer,

inclination to hide true answers (e.g. in surveys dealing with sensitive issues, such as income, financial results, details on technology, etc.),

gaps in memory, e.g. in questions about commodities that no longer exist or information that is no longer valid.

Taking the above into account, we can distinguish some major occasional errors which can occur in continuous variables: entries expressed in the wrong units, entered in the wrong cell (they can sometimes be recognized as outliers) or recorded with inappropriate precision. There are probabilistic models of such errors based on their probabilities of occurrence (in relation to the true value). Using previously collected data (e.g. from the past several years), one can estimate the probability that such data will deviate from their true values. Continuous variables misreported as zeros may result either from the respondent filling in the table carelessly or from their inability to provide the relevant information (e.g. by analogy with the common practice of tax offices of replacing fields left empty by the taxpayer with zeros). Errors in continuous variables can also include zero-centred random errors, which leave total estimates approximately unbiased, although their variance can be high (cf. M. Bergdahl et al. (2001)). Measurement errors may also result from the misclassification of relevant categorical variables.

The errors caused by survey instruments are usually due to 1) erroneous data characterizing units in the sampling frame used for sampling, 2) imprecise tools and equipment used by interviewers, and 3) estimates of quality measures. They can occur during questionnaire administration (see the chapter “Questionnaire Design”), in software programming, or as a result of inconveniences and defects of the tools used by interviewers. Thus, optimizing survey instruments is a matter of balancing between increasing the response rate by using a different mode and taking into account the measurement errors due to this change. Such optimization is also motivated by the fact that most modern statistical surveys are mixed-mode surveys, i.e. one questionnaire can be constructed and used in different modes (see the chapter “Data Collection”). Hence switching between modes should not be difficult. The use of mixed modes enables the response rate to be improved by providing other ways of contacting a respondent and by using the appropriate mode for different groups. On the other hand, G. Brancato et al. (2006) note that the use of multiple modes may result in measurement errors even if the same questionnaire is used. Thus, estimating the differences in response rates and measurement errors obtained with various survey instruments or alternative interviewing modes is recommended.
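Returning to the occasional errors in continuous variables described above (entries in the wrong units and values misreported as zeros), a common practical device is to compare each reported value with the same unit’s value from the previous edition of the survey. The sketch below assumes a thousand-fold unit error and a tolerance of half an order of magnitude; both are illustrative choices rather than recommendations from the cited sources, and the data are hypothetical.

```python
# Minimal sketch: flagging suspected unit errors (e.g. a value reported in
# units instead of thousands) and suspicious zeros by comparing each reported
# value with the same unit's value from the previous survey edition.
# The factor and tolerance are assumptions to be tuned on past data.


def classify(current, previous, factor=1000, tolerance=0.5):
    """Label each unit 'ok', 'unit_error', 'suspicious_zero' or 'no_reference'.

    A unit error is suspected when current / previous lies within
    [factor * 10**-tolerance, factor * 10**tolerance] (about 316 to 3162
    with the defaults), i.e. within half an order of magnitude of factor.
    """
    lo, hi = factor * 10 ** -tolerance, factor * 10 ** tolerance
    labels = {}
    for unit, value in current.items():
        prev = previous.get(unit)
        if prev is None or prev <= 0:
            labels[unit] = "no_reference"     # nothing to compare with
        elif value == 0:
            labels[unit] = "suspicious_zero"  # positive before, zero now
        elif lo <= value / prev <= hi:
            labels[unit] = "unit_error"
        else:
            labels[unit] = "ok"
    return labels


previous = {"A": 120.0, "B": 45.0, "C": 300.0, "D": 80.0}
current = {"A": 125.0, "B": 46000.0, "C": 0.0, "D": 85.0, "E": 10.0}
labels = classify(current, previous)
print(labels)
# {'A': 'ok', 'B': 'unit_error', 'C': 'suspicious_zero', 'D': 'ok', 'E': 'no_reference'}

# Suspected unit errors can be rescaled automatically; suspicious zeros
# should rather be checked with the respondent or imputed.
corrected = {u: v / 1000 if labels[u] == "unit_error" else v
             for u, v in current.items()}
```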
In general, the total survey error due to measurement error can be written as $\sum_{k \in s} w_k \left(y_k^{*} - y_k\right)$, where $s$ is the sample, $y_k$ is the true value for the $k$-th unit, $y_k^{*}$ is its observed value and $w_k$ denotes its weight. If the true value is unknown, the distribution of errors should be modelled (e.g. using old or administrative data and relevant coherence tests). Then $E\left(\sum_{k \in s} w_k \left(y_k^{*} - y_k\right)\right)$ and $V\left(\sum_{k \in s} w_k \left(y_k^{*} - y_k\right)\right)$, taken with respect to the error model, will be good measures of the bias and variance of such errors, respectively. More details and practical issues in business statistics can be found in the handbook by M. Bergdahl et al. (2001).
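When the true values are unknown and the errors are modelled, the bias and variance of the total measurement error $\sum_{k \in s} w_k (y_k^{*} - y_k)$ (with $y_k$ the true value, $y_k^{*}$ the observed value and $w_k$ the weight of unit $k$ in the sample $s$) can be approximated by simulation. The sketch below assumes a particular error model (zero-centred relative Gaussian errors plus rare thousand-fold unit errors) purely for illustration; in practice the model would be fitted to old or administrative data, as described above, and the values and weights used here are hypothetical.

```python
import random
import statistics

# Minimal sketch under an ASSUMED error model: each observed value is
# y*_k = y_k * (1 + e_k) with zero-centred Gaussian e_k (relative s.d.
# rel_sd); with probability p_unit_error it is additionally reported in
# the wrong units, i.e. multiplied by `factor`.


def measurement_error_moments(true_values, weights, rel_sd=0.05,
                              p_unit_error=0.0, factor=1000,
                              n_rep=2000, seed=1):
    """Monte Carlo estimates of E and V of sum_k w_k (y*_k - y_k)."""
    rng = random.Random(seed)
    totals = []
    for _ in range(n_rep):
        total_error = 0.0
        for y, w in zip(true_values, weights):
            observed = y * (1.0 + rng.gauss(0.0, rel_sd))
            if rng.random() < p_unit_error:
                observed *= factor
            total_error += w * (observed - y)
        totals.append(total_error)
    return statistics.mean(totals), statistics.variance(totals)


y = [100.0, 200.0, 300.0]   # hypothetical true values in the sample s
w = [10.0, 10.0, 10.0]      # hypothetical weights

bias0, var0 = measurement_error_moments(y, w)                     # random errors only
bias1, var1 = measurement_error_moments(y, w, p_unit_error=0.05)  # plus unit errors
print(bias0, var0 ** 0.5)
print(bias1, var1 ** 0.5)
```

Under these assumptions, random errors alone give an estimated bias close to zero with a clearly non-zero standard deviation, whereas adding unit errors with probability 0.05 yields a large positive bias (its expectation here is 10 × 600 × 0.05 × 999 = 299,700).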

2.3. Processing errors

To produce the final output, data collected from respondents are processed using many different algorithms and procedures. At each stage of such processing the quality aspect is crucial, and any errors arising there are called processing errors. One can distinguish between system errors and data handling errors (cf. M. Bergdahl et al. (2001)). System errors occur in the specification or implementation of the systems used to conduct surveys and process collected data. They may be of various kinds: the operating system can be faulty or not efficient enough, and mistakes can also occur in special programs written in programming languages such as Turbo Pascal, C++, Java, Visual Basic, SAS/IML, etc. System errors affect the quality of individual data and, as a result, estimates of population statistics. Errors in programming can be discovered by control algorithms, e.g. using the horizontal approach (checking whether the sum of the values of target variables equals their total, e.g. whether the sum of employees in particular age groups in an enterprise is equal to the total number of employees) or the vertical approach (checking whether the sum of lower-level aggregates is equal to the higher-level aggregate, e.g. whether the sum of people employed in industry in the NUTS 5 units is equal to the total number of employees in industry in the corresponding NUTS 4 unit). The cost of correcting errors (staff and equipment costs, possible delays, the necessity of publishing corrections, etc.) should also be taken into account. It is very important to detect as many errors as possible before starting the survey. Error correction is then quicker and significantly reduces the subsequent (and total) costs of the survey. Testing procedures and simulation studies during programming are also recommended. In simulation studies, data are generated from various theoretical distributions and are then used to test the performance of algorithms. Artificial datasets are usually very large, also in order to assess the capacity of the software. Given the same simulated data, it is possible to compare the performance of various programs and systems.
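The horizontal and vertical checks and the use of simulated data described above can be combined in a small test harness. This is a minimal sketch: employment is drawn from a lognormal distribution chosen only for illustration, the region codes are hypothetical stand-ins for NUTS 5 and NUTS 4 units (the first letter of a code identifies the higher-level unit), and errors are injected deliberately to confirm that both checks detect them.

```python
import random
from collections import defaultdict

# Minimal sketch of a simulation study for testing processing programs.
# Synthetic enterprises are generated from an assumed distribution and the
# horizontal and vertical checks are run on them. Region codes are
# hypothetical: the first letter plays the role of the NUTS 4 unit.


def simulate_enterprises(n, nuts5_codes, seed=0):
    """Synthetic units with lognormal total employment split by age group."""
    rng = random.Random(seed)
    units = []
    for i in range(n):
        total = max(1, round(rng.lognormvariate(2.0, 1.0)))
        a = rng.randint(0, total)          # employees under 30
        b = rng.randint(0, total - a)      # employees aged 30-49
        units.append({"id": i, "nuts5": rng.choice(nuts5_codes), "total": total,
                      "by_age": {"under_30": a, "30_49": b,
                                 "50_plus": total - a - b}})
    return units


def horizontal_check(units):
    """IDs of units whose age breakdown does not add up to total employment."""
    return [u["id"] for u in units if sum(u["by_age"].values()) != u["total"]]


def aggregate(units, key):
    """Total employment by the region code returned by key(unit)."""
    totals = defaultdict(int)
    for u in units:
        totals[key(u)] += u["total"]
    return dict(totals)


def vertical_check(lower, higher, parent):
    """Higher-level codes whose total differs from the sum of their parts."""
    sums = defaultdict(int)
    for code, value in lower.items():
        sums[parent(code)] += value
    return sorted(c for c in set(sums) | set(higher)
                  if sums.get(c, 0) != higher.get(c, 0))


def parent(code):
    return code[0]   # hypothetical mapping from NUTS 5 to NUTS 4 code


units = simulate_enterprises(500, ["A01", "A02", "B01", "B02"])
nuts5 = aggregate(units, key=lambda u: u["nuts5"])
nuts4 = aggregate(units, key=lambda u: parent(u["nuts5"]))
print(horizontal_check(units), vertical_check(nuts5, nuts4, parent))  # [] []

# Inject errors to confirm that both checks detect them.
units[0]["by_age"]["50_plus"] += 1
nuts4["A"] += 1
print(horizontal_check(units), vertical_check(nuts5, nuts4, parent))  # [0] ['A']
```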
It should be pointed out, however, that simulation studies, even complex ones, need not fully reflect the empirical structures and distributions which occur in reality. The second type of processing errors are data handling errors. They are connected with the processes and techniques used to capture and clean the data used for the final production of estimates and data analysis. They can result from data transmission, i.e. errors arising while information is transmitted from the place where data are collected to the office where they are processed further. For example, if the survey is conducted by interviewers, errors may be caused by disturbances or interference in transmission or location tools, such as GPS, mobile phones, hand-held terminals or laptops accessing the internet. The necessary signal is not available everywhere (there are still many ‘white spots’ in the coverage of telecommunication networks, especially wireless ones) or may not be of sufficient quality. Similar difficulties can occur if respondents send data via e-mail. If data are collected using traditional paper questionnaires (which is increasingly rare), errors in data transmission may be connected with the use of fax (postal deliveries are usually sent in sealed envelopes). M. Bergdahl et al. (2001) point out that faxed information may be illegible and information given over the telephone may be misunderstood or misrecorded. In both cases, if there is any doubt, the recorded value should be checked with the respondent before it is retained. Network-related problems are normally repaired by the external firms managing these networks. Thus, the statistician should be in permanent contact with them and report problems which are especially harmful from the point of view of statistical needs. Another problematic area is data capture. Namely, data can be badly converted into a suitable numeric format and therefore not correctly recognized by the computer (character recognition systems like Bar Code Recognition (BCR), Optical Mark Recognition (OMR), Optical Character Recognition (OCR) or Intelligent Character Recognition (ICR) are, of course, imperfect). Such errors can quite easily be corrected at the stage of designing software for processing the collected data. Another problem to cope with is coding distortion (i.e. some units can be wrongly classified into predefined categories). One should take into account, however, that some errors of this type can only be detected after finishing the survey, because preliminary experiments cannot foresee all real situations. In this case, the only reasonable solution is to consider changing category definitions and repeating the classification procedure, or dropping some less important sub-criteria characterizing the ‘bad’ unit which have distorted its classification.
Problems can also occur in the automatic data editing procedures that enable error detection. Such procedures are applied if their cost is much smaller than that of a manual item-by-item analysis. Errors in them should be treated in the same way as errors in computer programs. Errors may also be caused by any process involving the detection of outliers in the data, especially in seasonal adjustment procedures. These errors are connected with the recognition of occasional outliers (i.e. outliers observed in a very small number of periods) or incorrect estimation of the trend function. The assistance of highly specialized experts is recommended in the construction of tolerance intervals or in time series analysis. M. Bergdahl et al. (2001) consider a number of data keying methods (keying responses from pencil and paper questionnaires, using scanning to capture images followed by automated data recognition to translate those images into data records, keying of responses by interviewers during computer-assisted interviews, etc.) and other errors, their measurement and ways of minimizing their impact on the final output. These methods involve technical improvements in data processing. However, nowadays more and more data are collected electronically and paper forms are progressively being eliminated. Therefore, we face many new challenges connected with these modes. Some of them have been presented above (managing wireless or cable data transmission); others concern terminal devices (such as servers used by respondents and statistical offices, hand-held terminals, etc.). One can only hope that error correction in this area becomes much easier and faster, improving ways of getting in touch with respondents and guaranteeing almost immediate data transmission from the respondent to the survey taker (or the respondent’s assistant). Such forms of statistical activity require highly qualified staff, experienced in the use of new information technologies and in the efficient correction of errors. If they regularly check the delivered reports, they can react very quickly to any problems. One should also guarantee efficient IT tools which prevent the loss of entered data (e.g. UPS devices in the event of an unexpected power interruption) or running out of disk space. Moreover, it should be mentioned that in business statistics modeling also plays an important role, and hence processing errors also include modeling-related error sources. For example, processing errors may be connected with algorithms of estimation (especially for the non-sampled part in cut-off sampling), imputation or time series analysis (e.g. seasonal adjustment). But these aspects have specific characteristics derived from the properties of particular mathematical models.
These aspects are therefore described in the relevant chapters of this handbook (“Imputation”, “Weighting and estimation”, “Seasonal adjustment”), as well as in the module “Survey errors under non-probability sampling” of the current chapter. The main data processing operations, their error structures and the measures that can be taken to control and reduce these errors are described in detail by P. P. Biemer and L. E. Lyberg (2003). Finally, the impact of processing errors on the final survey results can be measured using two main sources of approximate information:
a) experience from previous periods, when the same survey was conducted,
b) simulation studies.
In the first case, we can assess what percentage of items may be improperly coded or recognized, indicate the areas where most errors occur and, therefore, assess their impact on the quality of the current survey edition. In the second case, we can, for example, modernize the software and conduct a simulation study under assumptions similar to those of the ‘real’ survey to determine how far the modernization reduces the number of errors. Taking these results into account, we can estimate the errors of the final estimates for the population.
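
The two sources of information above can be combined in a small simulation. The sketch below is a hypothetical illustration, not a procedure prescribed by the cited sources: it assumes that a miscoding rate has been estimated from a previous edition, that each unit is miscoded independently with that probability, and that a wrong code is drawn uniformly from the other categories (so at least two categories are needed). It reports the average total absolute error of the category totals, which allows, for example, the rates observed before and after a software upgrade to be compared. The function name `miscoding_error` and the synthetic data are assumptions made for illustration.

```python
import random


def miscoding_error(values, codes, error_rate, n_reps=200, seed=1):
    """Average total absolute error of category totals when each unit is,
    independently with probability `error_rate`, recorded under a wrong code
    drawn uniformly from the other categories (needs at least two categories).
    Hypothetical model of coding errors, for illustration only."""
    rng = random.Random(seed)
    categories = sorted(set(codes))
    true_totals = dict.fromkeys(categories, 0.0)
    for v, c in zip(values, codes):
        true_totals[c] += v

    total_error = 0.0
    for _ in range(n_reps):
        estimated = dict.fromkeys(categories, 0.0)
        for v, c in zip(values, codes):
            recorded = c
            if rng.random() < error_rate:
                # Miscoding: the unit lands in one of the other categories.
                recorded = rng.choice([k for k in categories if k != c])
            estimated[recorded] += v
        total_error += sum(abs(estimated[c] - true_totals[c]) for c in categories)
    return total_error / n_reps


if __name__ == "__main__":
    # Synthetic data: 500 units with a size variable and one of four activity codes.
    values = [float(i % 97 + 1) for i in range(500)]
    codes = ["ABCD"[i % 4] for i in range(500)]
    # Hypothetical miscoding rates, e.g. before and after a software upgrade.
    for rate in (0.05, 0.01):
        print(rate, round(miscoding_error(values, codes, rate), 1))
```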

2.4. Non-response errors

Sometimes respondents cannot, do not want to or do not have enough time to fill in all questionnaire items. This problem differs from frame errors, because non-response errors concern only units sampled in the survey. Since the issue of non-response was broadly discussed in the modules devoted to the response process and response burden, what follows is only a brief review of its most important aspects. There are three basic causes of the lack of response from a unit:

non-contact – the questionnaire form or the interviewer may not have reached an appropriate respondent for various reasons, for example owing to a change of address, a failure of the postal system, or the closure or interruption of economic activity without deregistration,

refusal – the respondent has received the form but has not returned it, or has returned it with a note refusing to answer some items because they concern sensitive business information or because there was not enough time to collect the necessary data,

lack of necessary knowledge – the respondent has not completed some important items owing to a lack of the knowledge or experience needed to do so correctly.

As we can see, the scope of missing information can vary. If only some items are missing, one can use imputation methods, conduct training and consultations with respondents, simplify the questionnaire or provide additional explanations for questions that are hard to understand (depending on whether the reason for non-response was refusal, lack of time or lack of necessary knowledge). In the case of a complete lack of information, we can distinguish two types of non-respondents (cf. M. Bergdahl et al. (2001)):
1) units which have never previously responded (these are mostly smaller units, which are sampled afresh in each survey edition, or those newly sampled in rotating schemes); for such units the only information available may be that recorded on the frame;
2) units which have previously responded (wave non-response); these usually include either completely enumerated units, which are sampled on every occasion, or larger units, which are sampled over several occasions in a rotation design. One can construct a pattern of non-response for these units and use this information to decide on a suitable course of action. If non-response is occasional or rare, one can use previously collected data and administrative sources to estimate the actual missing information; otherwise, one can consider dropping the unit from the survey or treating it as a unit which has never previously responded.
It is also very important to determine whether an item is empty due to non-response or because it is implicitly filled with zero. The latter case should, however, follow from the construction of the form. For example, if one of several items of the structure of financial assets expressed in EUR is missing, but the sum of the remaining ones is equal to the total entered by the respondent on the questionnaire, we can be sure that this item should be zero. But ambiguous situations can occur as well. To avoid them, the questionnaire should explicitly ask the respondent to enter zero values where applicable. The assessment of the non-response effect is based on an indicator defined to be 1 if a value is missing and 0 otherwise. Thus, each non-response unit may be described by a vector of such indicators. These values can be assigned either arbitrarily (in the case of ex post treatment) or randomly (especially in a simulation study carried out before the survey). In the latter, stochastic, case, the indicators form a series of 0s and 1s whose probability distribution is determined empirically.
That is, if a unit has taken part in $b$ previous editions (where $b$ is a natural number) and has provided no response for a given item $a$ times (where $a$ is an integer, $0 \le a \le b$), then the empirical probability that the indicator for this item will be equal to 1 can be calculated as $a/b$, and the probability that it will be equal to 0 as $(b-a)/b$. Using this modelling, we can anticipate the inclination of a unit to non-response in the current survey edition. M. Bergdahl et al. (2001) consider that non-response indicators can be completely random (if the indicator is stochastically independent of the relevant survey variables, e.g. if the probability of non-response for smaller businesses is the same as for larger ones) or random given an auxiliary variable $x$ or variables (if the indicator is conditionally independent of the relevant survey variables given the values of $x$; e.g. if $x$ is the size of a unit, then the indicator will be random within each size class of businesses determined by $x$). They state that “A missing data mechanism which does not occur at random given available auxiliary variables is said to be informative or non-ignorable in relation to the relevant survey variables. Consider, for example, item nonresponse on a complex variable, for which the higher the value of the variable, the more work will tend to be required of a business of a given size to retrieve the information. In such circumstances, it may be that even after controlling for measurable factors, such as size of the business, the rate of item nonresponse tends to increase as the value of the variable increases. Item nonresponse on this variable would therefore be informative in relation to this variable.” (M. Bergdahl et al. (2001)). The authors then review various methods of assessing the bias and variance caused by non-response, as well as imputation methods.
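
The empirical probability described in this section is simply the share of previous editions in which the item was missing: if the unit took part in b editions and the item was missing in a of them, the probability of a missing value is a/b. The sketch below computes it from a unit's response history and draws simulated missing-value indicators, as could be done in a test run before the survey. It assumes, as a simplification, that past editions can be treated as exchangeable, which ignores any trend in a unit's behaviour; the item names and function names are hypothetical.

```python
import random


def empirical_nonresponse_prob(history):
    """Share of previous editions in which the item was missing.

    history: 0/1 indicators for one item over the b previous editions in which
    the unit took part (1 = value missing, 0 = value reported), so the result
    is a / b, with a = number of editions without a response for the item.
    """
    b = len(history)
    if b == 0:
        raise ValueError("unit has not taken part in any previous edition")
    return sum(history) / b


def simulate_indicators(histories, seed=None):
    """Draw one missing-value indicator per item for the current edition,
    each equal to 1 with the empirical probability a / b of that item."""
    rng = random.Random(seed)
    return {item: int(rng.random() < empirical_nonresponse_prob(h))
            for item, h in histories.items()}


if __name__ == "__main__":
    # Hypothetical unit that took part in four previous editions.
    histories = {"turnover": [0, 0, 0, 0], "investment": [1, 0, 1, 1]}
    print({k: empirical_nonresponse_prob(h) for k, h in histories.items()})
    print(simulate_indicators(histories, seed=42))
```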

2.5. Unit non-response

Now let us look at the problem of non-response units, i.e. units for which no information is available. This lack of information may concern only the current survey round or also its previous editions. If the unit has not taken part in previous editions, the problem is especially clear and harmful. M. Bergdahl et al. (2001) consider a simple business survey with stratified simple random sampling, defining the expansion estimator of the population total $Y$ of a survey variable $y$ as:

$$\hat{Y} = \sum_{h=1}^{H} N_h \bar{y}_h \tag{1}$$

and the response expansion estimator of the form:

$$\hat{Y}_R = \sum_{h=1}^{H} N_h \bar{y}_{hr} \tag{2}$$

where $\bar{y}_h$ is the sample mean in stratum $h$, $N_h$ is the number of businesses on the frame in stratum $h$, $H$ is the number of strata and $\bar{y}_{hr}$ denotes the sample mean for stratum $h$ based only on response units. The expectations of these estimators are, respectively, of the forms:

$$E(\hat{Y}) = \sum_{h=1}^{H} N_h \bar{Y}_h, \qquad E(\hat{Y}_R) = \sum_{h=1}^{H} N_h \bar{Y}_{hr},$$

where $\bar{Y}_h$ is the mean of the survey variable in stratum $h$ and $\bar{Y}_{hr}$ is its mean in the same stratum for response units. Hence the bias is equal to

$$B(\hat{Y}_R) = E(\hat{Y}_R) - Y = \sum_{h=1}^{H} N_h \left(\bar{Y}_{hr} - \bar{Y}_h\right) \tag{3}$$
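
A minimal numerical illustration of the two estimators, assuming stratified simple random sampling with known frame counts N_h: the expansion estimator weights each stratum's full-sample mean by N_h, while the response expansion estimator uses only the mean of the response units. The data are invented, and the values of non-respondents are known only because this is a simulation; the difference between the two results is therefore a sample-level illustration of the non-response bias, not something one could compute in a real survey. Function names are hypothetical.

```python
from statistics import mean


def expansion_estimate(strata):
    """Sum over strata of N_h times the full-sample mean (all sampled units)."""
    return sum(s["N"] * mean(s["y"]) for s in strata)


def response_expansion_estimate(strata):
    """Sum over strata of N_h times the mean of the response units only.
    Raises statistics.StatisticsError if a stratum has no respondents."""
    return sum(s["N"] * mean(y for y, r in zip(s["y"], s["r"]) if r)
               for s in strata)


if __name__ == "__main__":
    # Simulation setting: y of non-respondents (r = 0) is known here,
    # which is never the case in a real survey.
    strata = [
        {"N": 100, "y": [10, 20, 30, 40], "r": [1, 1, 1, 0]},
        {"N": 50, "y": [5, 5, 15, 15], "r": [1, 1, 0, 1]},
    ]
    full = expansion_estimate(strata)
    resp = response_expansion_estimate(strata)
    print(full, round(resp, 2), round(resp - full, 2))
```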

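The most serious problem occurs when all non-response units are included in the survey sample for the first time. This may happen, e.g., if the survey is conducted for the first time or if all the other units have filled in all the necessary items of the questionnaire. In such cases, the stratum means of the survey variable are unknown and therefore the value of the bias is also unknown. The only reasonable solution is to use administrative registers to derive information on size and other aspects that may be useful from the point of view of the survey subject, and to try to fill the gaps by a relevant regression whose function is constructed using the available data (regression imputation, see the chapter “Imputation”). Let $r(i)$ be the response indicator for the $i$-th unit (equal to 1 if the unit has responded and 0 otherwise) and let $x_1, \dots, x_k$ be auxiliary variables. Let $F$ be the regression function of $y$ on $x_1, \dots, x_k$ determined using data for response units. Define

$$\tilde{y}_i = r(i)\, y_i + \left(1 - r(i)\right) F(x_{1i}, \dots, x_{ki})$$

for every unit $i$ in the subsample $s_h$ drawn from the $h$-th stratum. Hence the total estimate (1) can be rewritten as:

$$\hat{\tilde{Y}} = \sum_{h=1}^{H} N_h \bar{\tilde{y}}_h,$$

where $N_h$ is the number of businesses on the frame in stratum $h$, $H$ is the number of strata and $\bar{\tilde{y}}_h$ is the mean of the survey variable in stratum $h$ (i.e. where the data for non-response units are filled with the relevant regression estimates). Hence the estimate of bias can be presented as:

$$\hat{B}(\hat{Y}_R) = \hat{Y}_R - \hat{\tilde{Y}} = \sum_{h=1}^{H} N_h \left(\bar{y}_{hr} - \bar{\tilde{y}}_h\right) \tag{4}$$

(with $\hat{Y}_R = \sum_{h=1}^{H} N_h \bar{y}_{hr}$ the response expansion estimator, $\bar{y}_{hr}$ being the sample mean of response units in stratum $h$), and the variance as:

$$\hat{V}(\hat{\tilde{Y}}) = \sum_{h=1}^{H} N_h^2 \left(1 - \frac{n_h}{N_h}\right) \frac{\tilde{s}_h^2}{n_h} \tag{5}$$

where $\tilde{s}_h^2 = \frac{1}{n_h - 1} \sum_{i \in s_h} \left(\tilde{y}_i - \bar{\tilde{y}}_h\right)^2$ and $n_h$ is the number of sampled units in the $h$-th stratum. The variance of the response expansion estimator is estimated analogously by:

$$\hat{V}(\hat{Y}_R) = \sum_{h=1}^{H} N_h^2 \left(1 - \frac{m_h}{N_h}\right) \frac{s_{hr}^2}{m_h} \tag{6}$$

where $s_{hr}^2 = \frac{1}{m_h - 1} \sum_{i \in s_h:\, r(i) = 1} \left(y_i - \bar{y}_{hr}\right)^2$ and $m_h$ is the number of sampled response units in the $h$-th stratum. The variance inflation caused by non-response units can therefore be measured by the absolute difference between (5) and (6):

$$\Delta\hat{V} = \left|\hat{V}(\hat{\tilde{Y}}) - \hat{V}(\hat{Y}_R)\right| \tag{7}$$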
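
The regression-imputation procedure described in this section can be sketched as follows. This is one possible reading under explicit assumptions, not the author's implementation: a single auxiliary variable, an ordinary least squares line fitted on the pooled response units as the regression function F, and the usual stratified simple random sampling variance formula with the sample variance (denominator n_h − 1) within each stratum. The bias estimate is the response expansion estimate minus the expansion estimate computed on the regression-completed data, and the variance inflation is the absolute difference between their variance estimates. Each stratum needs at least two respondents; function names and data are hypothetical.

```python
from statistics import mean, variance


def fit_line(xs, ys):
    """Ordinary least squares fit y = a + b * x with one auxiliary variable."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my - slope * mx, slope


def total_and_variance(values_by_stratum, N):
    """Expansion estimate sum_h N_h * mean_h and the stratified SRSWOR
    variance estimate sum_h N_h^2 (1 - n_h / N_h) s_h^2 / n_h."""
    total = sum(N_h * mean(v) for N_h, v in zip(N, values_by_stratum))
    var = sum(N_h ** 2 * (1 - len(v) / N_h) * variance(v) / len(v)
              for N_h, v in zip(N, values_by_stratum))
    return total, var


def nonresponse_diagnostics(strata, N):
    """strata: one list per stratum of (y, x, responded) tuples, with y = None
    for non-respondents; N: frame counts N_h per stratum."""
    # Regression function fitted on response units only (pooled over strata).
    resp = [(x, y) for s in strata for (y, x, r) in s if r]
    a, b = fit_line([x for x, _ in resp], [y for _, y in resp])
    # Completed data: observed y for respondents, regression prediction otherwise.
    completed = [[y if r else a + b * x for (y, x, r) in s] for s in strata]
    respondents = [[y for (y, _, r) in s if r] for s in strata]
    t_imp, v_imp = total_and_variance(completed, N)
    t_resp, v_resp = total_and_variance(respondents, N)
    return {
        "imputed_total": t_imp,
        "response_total": t_resp,
        "bias_estimate": t_resp - t_imp,
        "variance_inflation": abs(v_imp - v_resp),
    }


if __name__ == "__main__":
    strata = [
        [(2, 1, True), (4, 2, True), (None, 3, False), (8, 4, True)],
        [(10, 5, True), (12, 6, True), (None, 7, False), (16, 8, True)],
    ]
    print(nonresponse_diagnostics(strata, N=[100, 50]))
```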

Consider now the case when unit $i$ has taken part in $b$ previous rounds of the survey and responded $c_i$ times (see section 2.4). We can then model its probability of response, $p_i = c_i / b$, and take it into account in the aforementioned models; that is, this unit is sampled with probability $p_i$. Hence the stratum means $\bar{y}_h$ (all sampled units), $\bar{y}_{hr}$ (response units) and $\bar{\tilde{y}}_h$ (sample completed by regression imputation) in (1)–(5) can be replaced with their weighted versions, i.e.

$$\bar{y}_h^{(p)} = \frac{\sum_{i \in s_h} p_i y_i}{\sum_{i \in s_h} p_i}, \qquad \bar{y}_{hr}^{(p)} = \frac{\sum_{i \in s_h:\, r(i)=1} p_i y_i}{\sum_{i \in s_h:\, r(i)=1} p_i}, \qquad \bar{\tilde{y}}_h^{(p)} = \frac{\sum_{i \in s_h} p_i \tilde{y}_i}{\sum_{i \in s_h} p_i},$$

respectively. Therefore, the modified variance inflation takes into account both the predicted propensity of a unit to respond and its actual response status. Of course, in either case coherence can be violated (i.e. some sums can deviate from the relevant totals), and then some small corrections may be necessary. It is very important to distinguish between a non-respondent unit and a unit that is outside the target population. However, this is sometimes difficult, and both removing units that should be included in the sample and including units from outside the target population can increase bias and variance, which will, in turn, distort these quality measures.

2.6. Item non-response

In general, the treatment of item non-response coincides with imputation. Details can be found in the chapter “Imputation” and in the module “Variance estimation methods” of the current chapter. The extra variability caused by imputation can thus be estimated using the variance decompositions presented there. It should be noted that the models described in section 2.5 can also be applied in this case. There we analyzed the values of a single variable for the $i$-th unit; a missing value of this variable is equivalent to item non-response for this unit if the unit's non-response is only partial. To assess the bias caused by imputation applied to non-response items, one can observe that this component can be extracted from (4) as

$$\hat{B}_I = \sum_{h=1}^{H} N_h \left(\bar{y}_{hr} - \bar{\hat{y}}_h\right),$$

where, in the notation of section 2.5, $\bar{\hat{y}}_h = \frac{1}{n_h} \sum_{i \in s_h} \hat{y}_i$ with $\hat{y}_i = r(i)\, y_i + \left(1 - r(i)\right) y_i^{*}$, where $y_i^{*}$ is the imputed value for the $i$-th unit in the $h$-th stratum. Imputation can be performed by means of any arbitrarily selected method. Correspondingly, the total variance caused by imputation is estimated by:

$$\hat{V}_I = \sum_{h=1}^{H} N_h^2 \left(1 - \frac{n_h}{N_h}\right) \frac{1}{n_h (n_h - 1)} \sum_{i \in s_h} \left(\hat{y}_i - \bar{\hat{y}}_h\right)^2.$$

According to Eurostat (2010), item non-response can be seen as an intermediate category between measurement errors and estimation errors. The impact of non-response on final results may depend on the respondent's characteristics and various circumstances. Information is often missing because the respondent is unable to provide it, or the respondent may be unwilling to provide information that is considered too sensitive or personal. The authors of the Eurostat publication suggest that in surveys, when microdata are provided to researchers and other users, some information may be deliberately suppressed, presumably for the sake of confidentiality and similar considerations. However, statistical offices use the data of the highest quality available to produce composite and aggregate statistics; information suppressed for confidentiality purposes is still used for this purpose before publication. When providing microdata to researchers, statistical offices generally apply disclosure control to make sure that confidential information is not disclosed. Although this does not affect the aggregates published by the statistical office (as they are derived from the original, unmodified data), it may very strongly affect the quality of results for composite items obtained by researchers on the basis of such ‘truncated’ data (e.g. a target revenue variable is composed of many detailed items, and if some of them are suppressed, serious errors can occur).

2.7. Models in survey sampling

Modelling in survey sampling comprises the definition of the sampling frame, the sampling scheme and the choice of estimation formulas. Since problems connected with the sampling frame were discussed earlier in this module, we will focus on the two remaining aspects. The sampling scheme should account for the character of the data which were the basis for the construction of the frame and which are expected to be its output. This scheme should be designed to optimize the quality of estimation for a given set of statistics. However, for various reasons, the sampling scheme can be inadequate because of under- or over-representation of some groups of units in the sample (the problem of drawing a sample which is “not strong enough”, cf. C. E. Sårndal (2010 a, 2010 b)). For example, in systematic sampling the selected units are evenly spaced in the frame order, which may conflict with the actual distribution of units in the frame (e.g. when the ordering follows a periodic pattern). Therefore, we must apply two main principles to avoid such situations as much as possible and optimize the choice of sampling methods. The first one is connected with precision, i.e. we should ensure that the difference between our estimate of the parameter of interest and its true value is caused only by random variation, assessed by the standard error, and that the standard error is minimized. The second rule is to reduce systematic errors. In other words, the sampling scheme should make it possible to separate the effects of different factors (or of different values of explanatory variables). For example, suppose that, to compare two estimators of the value of fixed assets, we use the following scheme: first we draw one unit and apply the first estimator, then we switch to the second estimator and draw a second unit. In this situation the effect of the estimator cannot be separated from the effect of the unit, so the two estimators cannot be compared. Systematic errors can be reduced by applying stratification and randomization. Stratification can decrease variability within correctly defined strata.
Randomization is the process in which the various treatment combinations are assigned at random in order to avoid systematic errors caused by evident or hidden orderings of units. More details on these issues can be found in the chapter “Sample selection”.
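
The risk mentioned for systematic sampling can be shown with a deliberately periodic frame. In the hypothetical frame below, every tenth unit is large and the sampling interval is also 10, so each systematic sample contains either only large or only small units. The random start keeps the design unbiased on average over all possible starts, but any single sample is badly off; randomizing the frame order, or stratifying by size, removes this effect. The frame and the function name are assumptions for illustration.

```python
from statistics import mean


def systematic_sample(frame, k, start):
    """Every k-th unit of the ordered frame, starting at position `start` (0 <= start < k)."""
    return frame[start::k]


# Hypothetical ordered frame of 1000 units: within every block of 10 units the
# last one is large, so the ordering has period 10, equal to the sampling interval.
frame = [100.0 if i % 10 == 9 else 10.0 for i in range(1000)]

if __name__ == "__main__":
    print("population mean:", mean(frame))
    for start in range(10):
        print("start", start, "sample mean:", mean(systematic_sample(frame, 10, start)))
    # Averaging over all possible random starts recovers the population mean.
    print("mean over starts:", mean(mean(systematic_sample(frame, 10, s)) for s in range(10)))
```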

Other problems are connected with the choice of estimation formulas. The precision of any estimate usually depends on such factors as the variability of the process under study, the measurement error, the number of independent replications (sample size) and the efficiency of the sampling scheme. Thus, the quality of the estimator used is, to a large extent, a consequence of the properties of the adopted sampling scheme. R. M. Royall (1970) demonstrates that, with a squared error loss function, the strategy of combining a probability proportional to size sampling plan with the Horvitz–Thompson estimator is inadmissible in many models for which the strategy seems ‘reasonable’, and even for those where it is optimal. Instead, he proposes estimating the required parameters of finite populations, when auxiliary information on variate values is available, using linear regression superpopulation models. More specific problems concerning various types of estimation and the assessment of their variance can be found in the module “Variance estimation methods” of this chapter, as well as in the chapter “Weighting and estimation”. C. E. Sårndal (2010 a, 2010 b) discusses the usefulness of the model-based method for descriptive estimation and concludes that design-based estimation can be effective when it is supported by artificial devices (models). He suggests a compromise between the pure concepts of design-based unbiasedness and design-based variance, which can resolve many problems, such as the question of what value there is in computing the design-based variance of survey estimates when the unknown squared bias (arising, for example, when missing data are imputed) is likely to be the dominating component of the mean squared error. In this context, he discusses various design-based practices from the perspectives of small area estimation and calibration.
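
To make the contrast concrete, the sketch below compares the design-based expansion estimator under simple random sampling with a prediction (model-based) estimator under the ratio model y_i = beta x_i + e_i, with Var(e_i) proportional to x_i, which is one linear regression superpopulation model of the kind Royall considers. Under this model the predictor adds to the observed sample total the predicted values for the unsampled units, and it reduces to the classical ratio estimator. The toy population follows the model exactly, so the example only shows what happens when the model holds and says nothing about robustness to model failure. Function names are hypothetical.

```python
def expansion_estimator(y_sample, N):
    """Design-based expansion estimator under simple random sampling: N times the sample mean."""
    return N * sum(y_sample) / len(y_sample)


def ratio_model_predictor(y_sample, x_sample, x_total):
    """Prediction estimator of the population total of y under the ratio model
    y_i = beta * x_i + e_i with Var(e_i) proportional to x_i.

    beta is estimated by sum(y_s) / sum(x_s); the unsampled part of the total
    is predicted as beta_hat times the unsampled part of the x total.
    """
    beta_hat = sum(y_sample) / sum(x_sample)
    x_unsampled = x_total - sum(x_sample)
    return sum(y_sample) + beta_hat * x_unsampled


if __name__ == "__main__":
    # Toy population: x = 1..10 known for all units, y = 2x exactly (true total 110).
    x_pop = list(range(1, 11))
    y_pop = [2 * x for x in x_pop]
    sample = [7, 8, 9]  # positions of an unluckily "large" sample
    ys = [y_pop[i] for i in sample]
    xs = [x_pop[i] for i in sample]
    print(expansion_estimator(ys, len(x_pop)), ratio_model_predictor(ys, xs, sum(x_pop)))
```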

2.8. Total survey error model

As regards total survey error models, a paper by D. Marella (2007) is a good source of knowledge. Such models are designed to measure the relative impact of each error source on survey estimates and to make probability statements about the total error. Some very general models she discusses decompose the total error into fixed biases and variable errors and focus on three components of the non-sampling error: non-response bias, measurement bias and processing bias (limited to imputation bias). In formal terms, total survey error models are used to translate the sequence of survey operations into a mathematical statement. This approach requires a careful balance of results from mathematical statistics and empirical studies. The paper by D. Marella (2007) presents a total survey error model that simultaneously treats sampling error, non-response error and measurement error, while coverage error and data processing error are ignored. This research is motivated by the need to define a total survey design that minimizes the total error and can be implemented at a cost consistent with the available budget. Therefore, the author tries to find an optimal economic balance between sampling and non-sampling errors to obtain the best possible accuracy of final results. Thus, quantification of the total survey error using a model-based approach has an essential impact on the optimal allocation of the budget available for total survey error reduction. Many more formal problems to do with survey planning and assessing errors can also be found in the book by D. Rasch and G. Herrendörfer (1986). A. Aitken et al. (2004) analyze another question connected with the impact of non-sampling errors on the total survey error, i.e. how to identify and determine key process variables that are related to non-response errors, measurement errors and productivity.
They propose special cause-effect diagrams, which can be used as tools for a relevant decision procedure. In particular, one diagram is created for each area (non-response, measurement and productivity), and separate sub-diagrams containing the influential factors for each of them are also generated, irrespective of whether key process variables can be determined from these factors. These diagrams help to identify all factors that might have an influence on non-response errors, measurement errors and productivity in interviewing activities, and to define possible key process variables for each factor in the cause-effect diagram. According to the cited document, from the total survey error perspective, reducing non-response errors involves applying an appropriate estimation procedure and non-response adjustment.
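
The trade-off between sampling and non-sampling error can be illustrated with a toy budget allocation. This is not Marella's model: it assumes, purely for illustration, an expansion estimator of a total under simple random sampling without replacement, a fixed cost per sampled unit, and a hypothetical curve in which the money left over for non-response follow-up shrinks the non-response bias exponentially. The total error is measured by the mean squared error (sampling variance plus squared bias), and a grid search picks the sample size that minimizes it for the given budget. All parameter names and values are assumptions.

```python
import math


def mse(n, follow_up, *, N, S2, bias0, decay):
    """Mean squared error of an expansion estimator of a total: SRSWOR
    sampling variance plus squared non-response bias.
    HYPOTHETICAL assumption: spending `follow_up` on non-response follow-up
    shrinks the bias as bias0 * exp(-decay * follow_up)."""
    sampling_var = N ** 2 * (1 - n / N) * S2 / n
    bias = bias0 * math.exp(-decay * follow_up)
    return sampling_var + bias ** 2


def best_allocation(budget, cost_per_unit, *, N, **model):
    """Grid search over sample sizes; whatever is left goes to follow-up.
    Returns (sample size, follow-up spending, MSE)."""
    best = None
    max_n = min(N, int(budget // cost_per_unit))
    for n in range(2, max_n + 1):
        follow_up = budget - n * cost_per_unit
        value = mse(n, follow_up, N=N, **model)
        if best is None or value < best[2]:
            best = (n, follow_up, value)
    return best


if __name__ == "__main__":
    print(best_allocation(1000, 5, N=1000, S2=100, bias0=5000, decay=0.01))
```

If follow-up has no effect (decay = 0), the whole budget goes into the sample size; once follow-up can reduce the bias, part of the budget shifts away from sampling.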

Eurostat (2009 b) suggests that the total survey error is the sum of various errors and that the assessment of total quality should account for the importance of particular factors, not only in terms of basic categories of errors but also by considering their special cases. For instance, the importance of micro-data processing errors varies greatly depending on the statistical process, and hence their treatment should also be diversified and rationalized. When they are significant, their extent and impact on the results should be evaluated. According to the authors of this paper, the rate of unreported events (which could be regarded as a kind of coverage error) is also a key quality factor that needs to be assessed. They give the example of price indexes, which are based on statistical surveys and whose objective is to monitor price differences in time or space for all products (goods or services) within their scope and to provide an overall estimate of price change or difference. In this case, the total survey error is the resultant of non-sampling errors and the remedy used to offset them, the estimation model applied and any mistakes made in the process (errors in calculation and in the presentation of the macro-data to users).
This document proposes the following indicators, which can help to measure the size of many errors: the unit response rate (the ratio of the number of units for which data for at least some variables have been collected to the total number of units designated for data collection), the item response rate (the ratio of the number of units which have provided data for a given data item to the total number of designated units or to the number of units that have provided data for at least some data items), the design-weighted response rate (the sum of the weights of the responding units according to the sample design) and the size-weighted response rate (the sum of the values of auxiliary variables multiplied by the design weights, instead of the design weights alone). Eurostat (2009 a) identifies the total survey error with the total error of an estimate and observes that, although there is no method to estimate it, there are various approaches that can give some indication of the total error. In this context, the document proposes making a comparison with another source (e.g. data on employment collected in business surveys can be compared with the relevant data collected in the Labour Force Survey). According to the authors, in practice the differences observed by comparing such sources can be attributed to combinations of errors and differences in definitions, and an analysis aiming at decomposing such differences into their constituent parts can shed light on the total survey error. This is closely connected with the issue of consistency (see module “Coherence and consistency”).
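
The four indicators listed by Eurostat (2009 b) can be computed from unit-level records as sketched below. The record layout (`responded`, `weight`, `x`, `items`) is a hypothetical assumption. Since the weighted indicators are described as sums, the sketch assumes that, to obtain rates, each weighted sum is divided by the same sum over all units designated for data collection; the item response rate is returned with both denominators mentioned in the text.

```python
def response_rates(units, item):
    """Response indicators for a list of designated units.

    Each unit is a dict with hypothetical keys:
      responded -- True if data for at least some variables were collected
      weight    -- design weight
      x         -- value of an auxiliary (size) variable
      items     -- dict of the data items actually reported
    """
    responding = [u for u in units if u["responded"]]
    with_item = [u for u in units if item in u["items"]]
    all_w = sum(u["weight"] for u in units)
    all_wx = sum(u["weight"] * u["x"] for u in units)
    return {
        "unit_response_rate": len(responding) / len(units),
        # Item response rate with the two denominators mentioned in the text.
        "item_rate_vs_designated": len(with_item) / len(units),
        "item_rate_vs_responding": len(with_item) / len(responding),
        # Assumption: weighted sums normalised by the sum over all designated units.
        "design_weighted_rate": sum(u["weight"] for u in responding) / all_w,
        "size_weighted_rate": sum(u["weight"] * u["x"] for u in responding) / all_wx,
    }


if __name__ == "__main__":
    units = [
        {"responded": True, "weight": 10, "x": 5, "items": {"turnover": 100}},
        {"responded": True, "weight": 10, "x": 1, "items": {"employment": 3}},
        {"responded": False, "weight": 20, "x": 2, "items": {}},
        {"responded": True, "weight": 5, "x": 10, "items": {"turnover": 300}},
    ]
    print(response_rates(units, "turnover"))
```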

3. Glossary

Unit non-response – Failure by a unit to respond to a survey. Source: M. Bergdahl et al. (2001).

Item non-response – Failure by many units to respond to a particular survey question. Source: M. Bergdahl et al. (2001).

Partial non-response – Failure by a unit to respond to some important survey questions. Source: original definition.

4. Design issues

In this case, design elements are connected with the decision procedure for identifying and assessing particular non-sampling components of the total survey error. They are described by A. Aitken et al. (2004).

5. Available software tools

In general, this item is not applicable here, given the theoretical scope of these considerations. However, computer programs and procedures relevant to the assessment of errors are presented in the chapters “Imputation”, “Weighting and estimation”, “Seasonal adjustment” and “Sample selection”.

6. Decision tree of methods

One can recommend here the decision diagrams presented by A. Aitken et al. (2004).

7. Glossary

Data handling errors – Errors connected with the processes and techniques applied to capture and clean data used for the final production of estimates and data analysis. They can result from data transmission (errors arising during the transmission of information from the place where data are collected to the office where they are subjected to further processing). Source: M. Bergdahl et al. (2001).

Design-weighted response rate – The sum of the weights of the responding units according to the sample design. Source: Eurostat (2009 b).

Item non-response – Failure by many units to respond to a particular survey question. Source: M. Bergdahl et al. (2001).

Item response rate – The ratio of the number of units which have provided data for a given data item to the total number of designated units or to the number of units that have provided data for at least some data items. Source: Eurostat (2009 b).

Partial non-response – Failure by a unit to respond to some important survey questions. Source: original definition.

Quality profile – A user-oriented summary of the main quality features of indicators. Source: Eurostat (2012).

Size-weighted response rate – The sum of the values of auxiliary variables multiplied by the design weights, instead of the design weights alone. Source: Eurostat (2009 b).

Systems errors – Errors occurring in the specification or implementation of systems used to conduct surveys and process collected data. Source: M. Bergdahl et al. (2001).

Unit non-response – Failure by a unit to respond to a survey. Source: M. Bergdahl et al. (2001).

Unit response rate – The ratio of the number of units for which data for at least some variables have been collected to the total number of units designated for data collection. Source: Eurostat (2009 b).

8. Literature

Aitken A., Hörngren J., Jones N., Lewis D., Zilhão M. J. (2004), Handbook on improving quality by analysis of process variables, General Editors: Nia Jones, Daniel Lewis, European Commission, Eurostat, Luxembourg, http://www.paris21.org/sites/default/files/3587.pdf.

Banda J. P. (2003), Nonsampling errors in surveys, Expert Group Meeting to Review the Draft Handbook on Designing of Household Sample Surveys, 3–5 December 2003, draft, United Nations Secretariat, Statistics Division, No. ESA/STAT/AC.93/7, available at http://unstats.un.org/unsd/demographic/meetings/egm/Sampling_1203/docs/no_7.pdf.

Bergdahl M., Black O., Bowater R., Chambers R., Davies P., Draper D., Elvers E., Full S., Holmes D., Lundqvist P., Lundström S., Nordberg L., Perry J., Pont M., Prestwood M., Richardson I., Skinner Ch., Smith P., Underwood C., Williams M. (2001), Model Quality Report in Business Statistics, General Editors: P. Davies, P. Smith, http://users.soe.ucsc.edu/~draper/bergdahl-etal-1999-v1.pdf.

Biemer P. P., Lyberg L. E. (2003), Data Processing: Errors and Their Control, in: Introduction to Survey Quality, John Wiley & Sons, Inc., Hoboken, NJ, USA.

Brancato G., Macchia S., Murgia M., Signore M., Simeoni G., Blanke K., Körner T., Nimmergut A., Lima P., Paulino R., Hoffmeyer-Zlotnik J. H. P. (2006), Handbook of Recommended Practices for Questionnaire Development and Testing in the European Statistical System, European Commission Grant Agreement 200410300002, Eurostat, Luxembourg, available at http://epp.eurostat.ec.europa.eu/portal/page/portal/quality/documents/RPSQDET27062006.pdf.

Colledge M. (2006), Quality Frameworks: Implementation and Impact, Conference on Data Quality for International Organizations, Committee for the Coordination of Statistical Activities, Newport, Wales, United Kingdom, 27–28 April 2006, available at http://unstats.un.org/unsd/accsub/2006docs-CDQIO/Quality%20Framework%20M%20Colledge.pdf.

Eurostat (2012), ESS Quality Glossary, developed by Unit B1 "Quality, Methodology and Research", Office for Official Publications of the European Communities, Luxembourg, available at http://ec.europa.eu/eurostat/ramon/coded_files/ESS_Quality_Glossary.pdf.

Eurostat (2010), An assessment of survey errors in EU-SILC, Series: Methodologies and Working papers, 2010 edition, Statistical Office of the European Communities, Publications Office of the European Union, Luxembourg, http://epp.eurostat.ec.europa.eu/cache/ITY_OFFPUB/KS-RA-10-021/EN/KS-RA-10-021-EN.PDF.

Eurostat (2009 a), ESS Handbook for Quality Reports, 2009 edition, Series: Eurostat Methodologies and Working papers, Office for Official Publications of the European Communities, Luxembourg, http://unstats.un.org/unsd/dnss/docs-nqaf/Eurostat-EHQR_FINAL.pdf.

Eurostat (2009 b), ESS Standard for Quality Reports, 2009 edition, Series: Eurostat Methodologies and Working papers, Office for Official Publications of the European Communities, Luxembourg, http://epp.eurostat.ec.europa.eu/portal/page/portal/ver-1/quality/documents/ESQR_FINAL.pdf.

Marella D. (2007), Errors Depending on Costs in Sample Surveys, Survey Research Methods, Vol. 1, pp. 85–96.

Młodak A., Kubacki J. (2010), A typology of Polish farms using some fuzzy classification method, Statistics in Transition – new series, Vol. 11, pp. 615–638.

Rasch D., Herrendörfer G. (1986), Experimental design: Sample size determination and block designs, D. Reidel Pub. Co., Dordrecht, Boston and Hingham, MA, USA.

Royall R. M. (1970), On finite population sampling theory under certain linear regression models, Biometrika, Vol. 57, pp. 377–387.

Sårndal C. E. (2010 a), Models in Survey Sampling, [in:] Carlson M., Nyquist H. and Villani M. (eds), “Official Statistics – Methodology and Applications in Honour of Daniel Thorburn”, pp. 15–27, available at http://officialstatistics.files.wordpress.com/2010/05/bok02.pdf.

Sårndal C. E. (2010 b), Models in Survey Sampling, Statistics in Transition – new series, December 2010, Vol. 11, pp. 539–554.

Statistics Canada (2012), Coverage and Frames, available at http://www.statcan.gc.ca/pub/12-539-x/2009001/coverage-couverture-eng.htm [2012-06-11].

Specific section – Theme: Effects of non-sampling errors on the total survey error

A.1 Interconnections with other modules

• Related themes described in other modules
1. Imputation
2. Variance estimation methods
3. Calibration
4. Different types of surveys
5. Questionnaire Design
6. Model-based estimation
7. Data Collection

• Methods explicitly referred to in this module
1. Estimation of population parameters
2. Estimation of variance inflation

• Mathematical techniques explicitly referred to in this module n/a

• GSBPM phases explicitly referred to in this module

GSBPM Phases 4.1, 5.2 – 5.6.

• Tools explicitly referred to in this module n/a

• Process steps explicitly referred to in this module n/a
