Answers Chapter 14

GaussMarkovUnivariate.xls Answers

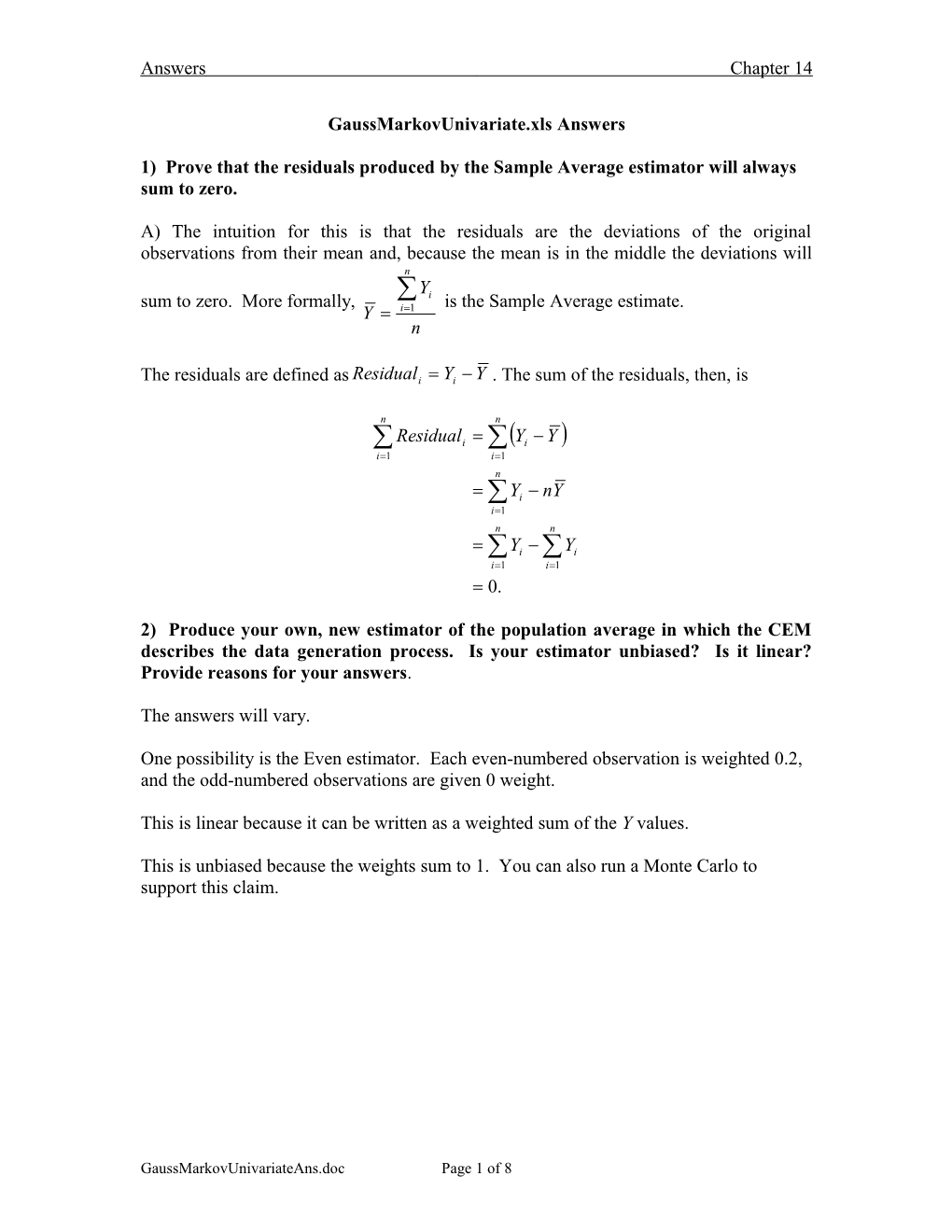

1) Prove that the residuals produced by the Sample Average estimator will always sum to zero.

A) The intuition is that the residuals are the deviations of the original observations from their mean. Because the mean sits at the balance point of the data, the positive and negative deviations cancel, so the deviations sum to zero. More formally, $\bar{Y} = \frac{\sum_{i=1}^{n} Y_i}{n}$ is the Sample Average estimate.

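The residuals are defined as $\text{Residual}_i = Y_i - \bar{Y}$. The sum of the residuals, then, is

$$\sum_{i=1}^{n} \text{Residual}_i = \sum_{i=1}^{n} \left(Y_i - \bar{Y}\right) = \sum_{i=1}^{n} Y_i - n\bar{Y} = \sum_{i=1}^{n} Y_i - \sum_{i=1}^{n} Y_i = 0.$$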
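A quick numerical check of the algebra, sketched in Python purely for illustration (the chapter itself works in Excel). The sample is arbitrary random data; because floating-point arithmetic rounds, the computed sum is zero only to within a tiny tolerance.

```python
import random

def residuals(ys):
    """Deviations of each observation from the Sample Average."""
    y_bar = sum(ys) / len(ys)
    return [y - y_bar for y in ys]

# Any sample will do, because the result is algebraic, not statistical.
rng = random.Random(1)
sample = [rng.gauss(100, 15) for _ in range(10)]
total = sum(residuals(sample))
print(f"Sum of residuals: {total:.1e}")  # zero up to floating-point rounding
```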

2) Produce your own, new estimator of the population average in which the CEM describes the data generation process. Is your estimator unbiased? Is it linear? Provide reasons for your answers.

The answers will vary.

One possibility is the Even estimator. With the 10 observations used in the workbook, each even-numbered observation is weighted 0.2 and each odd-numbered observation is given 0 weight.

This is linear because it can be written as a weighted sum of the Y values.

This is unbiased because the weights sum to 1. You can also run a Monte Carlo to support this claim.
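A minimal Monte Carlo sketch of the Even estimator, assuming n = 10 and a CEM with independent normal errors. The values BETA = 100 and SD = 5 are hypothetical choices, not workbook settings, and the helper names are illustrative.

```python
import random

N_OBS = 10
# Even estimator: weight 0.2 on observations 2, 4, ..., 10 and 0 on the odd ones.
EVEN_WEIGHTS = [0.2 if i % 2 == 0 else 0.0 for i in range(1, N_OBS + 1)]

def linear_estimate(weights, ys):
    """A linear estimator is a weighted sum of the Y values."""
    return sum(w * y for w, y in zip(weights, ys))

def draw_sample(beta, sd, n, rng):
    """CEM sketch: Y_i = beta + eps_i, with independent normal errors (assumed)."""
    return [beta + rng.gauss(0, sd) for _ in range(n)]

BETA, SD, REPS = 100.0, 5.0, 20_000   # hypothetical parameter values
rng = random.Random(42)
estimates = [linear_estimate(EVEN_WEIGHTS, draw_sample(BETA, SD, N_OBS, rng))
             for _ in range(REPS)]

print(f"sum of weights: {sum(EVEN_WEIGHTS):.6f}")          # 1, up to rounding
print(f"average estimate: {sum(estimates) / REPS:.3f}")   # close to BETA
```

The long-run average of the estimates settles near BETA, which is what unbiasedness predicts for weights that sum to 1.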

GaussMarkovUnivariateAns.doc Page 1 of 8 Answers Chapter 14

3) (Requires use of algebra of expectations.) Show that the 0.9 Estimator is indeed biased, and compute its expected value assuming it follows the CEM.

A) We can compute the expected value of the 0.9 Estimator in exactly the same way as we did for the Sample Average. The difference is that the sum of the weights, $\sum_{i=1}^{n} w_i$, is 0.9, not 1:

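$$\begin{aligned}
E\left(\sum_{i=1}^{n} w_i Y_i\right) &= E\left(\sum_{i=1}^{n} w_i(\beta + \varepsilon_i)\right) = E\left(\sum_{i=1}^{n} w_i\beta\right) + E\left(\sum_{i=1}^{n} w_i\varepsilon_i\right) \\
&= \beta\sum_{i=1}^{n} w_i + \sum_{i=1}^{n} w_i E(\varepsilon_i) = 0.9\beta + \sum_{i=1}^{n} w_i \cdot 0 = 0.9\beta + 0 = 0.9\beta.
\end{aligned}$$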

Inasmuch as the expected value, 0.9β, does not equal β (except in the special case β = 0), this estimator is biased.
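The same algebra can be checked numerically. This sketch assumes the 0.9 Estimator puts an equal weight of 0.09 on each of ten observations (only the sum of the weights matters for the expected value) and uses hypothetical values BETA = 100 and SD = 5 with normal errors.

```python
import random

N_OBS = 10
WEIGHTS = [0.09] * N_OBS   # assumed layout: ten equal weights summing to 0.9

def expected_value(weights, beta):
    """Under the CEM, E(sum w_i Y_i) = beta * sum(w_i), since E(eps_i) = 0."""
    return beta * sum(weights)

BETA, SD, REPS = 100.0, 5.0, 20_000   # hypothetical parameter values
rng = random.Random(7)
mc_mean = sum(
    sum(w * (BETA + rng.gauss(0, SD)) for w in WEIGHTS)   # one 0.9 Estimator draw
    for _ in range(REPS)
) / REPS

print(f"exact expected value: {expected_value(WEIGHTS, BETA):.4f}")  # 0.9 * BETA = 90
print(f"Monte Carlo average:  {mc_mean:.4f}")                        # close to 90
```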

4) (Requires use of algebra of expectations.) Assume that the data generation process is as described in Model 1 with $SD(\varepsilon_i) = 5$. Someone proposes an estimator with the following weights:

| Obs | Weight |
|---|---|
| 1 | 1 |
| 2 | 1 |
| 3 | 1 |
| 4 | 1 |
| 5 | 1 |
| 6 | 1 |
| 7 | 1 |
| 8 | 1 |
| 9 | 1 |
| 10 | -8 |

(a) Is this an unbiased estimator of the population average? Compute its expected value.

A) It is unbiased because the sum of the weights is 1. The computation of the expected value looks just like that for the Sample Average:

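$$\begin{aligned}
E\left(\sum_{i=1}^{n} w_i Y_i\right) &= E\left(\sum_{i=1}^{n} w_i(\beta + \varepsilon_i)\right) = E\left(\sum_{i=1}^{n} w_i\beta\right) + E\left(\sum_{i=1}^{n} w_i\varepsilon_i\right) \\
&= \beta\sum_{i=1}^{n} w_i + \sum_{i=1}^{n} w_i E(\varepsilon_i) = 1 \cdot \beta + \sum_{i=1}^{n} w_i \cdot 0 = \beta.
\end{aligned}$$

(b) What is the SE of this estimator?

A) You can use the ComputingSEs sheet to find that its SE is huge: about 42.7, compared with 1.581 for the Sample Average.

$SD(\varepsilon) = 5$, $Var(\varepsilon) = 25$

| Observation | Weights ($w_i$): Sample Average | Weights ($w_i$): Proposed estimator | $w_i^2\,SD(\varepsilon)^2$: Sample Average | $w_i^2\,SD(\varepsilon)^2$: Proposed estimator |
|---|---|---|---|---|
| 1 | 0.1 | 1 | 0.2500 | 25.0000 |
| 2 | 0.1 | 1 | 0.2500 | 25.0000 |
| 3 | 0.1 | 1 | 0.2500 | 25.0000 |
| 4 | 0.1 | 1 | 0.2500 | 25.0000 |
| 5 | 0.1 | 1 | 0.2500 | 25.0000 |
| 6 | 0.1 | 1 | 0.2500 | 25.0000 |
| 7 | 0.1 | 1 | 0.2500 | 25.0000 |
| 8 | 0.1 | 1 | 0.2500 | 25.0000 |
| 9 | 0.1 | 1 | 0.2500 | 25.0000 |
| 10 | 0.1 | -8 | 0.2500 | 1600.0000 |
| Sum | 1 | 1 | 2.500 | 1825.000 |
| Square root | | | 1.581 | 42.720 |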
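The ComputingSEs calculation can be reproduced with the exact SE formula for a linear estimator under the CEM with independent errors, $SE = SD(\varepsilon)\sqrt{\sum w_i^2}$. This Python sketch is an illustration, not the workbook's own code; the function name `exact_se` is hypothetical.

```python
import math

def exact_se(weights, sd_error):
    """Exact SE of sum(w_i * Y_i) under the CEM: SD(eps) * sqrt(sum of w_i**2)."""
    return sd_error * math.sqrt(sum(w * w for w in weights))

SD_ERROR = 5.0
sample_average = [0.1] * 10
proposed = [1.0] * 9 + [-8.0]   # the weights given in question 4

print(sum(proposed))                                 # 1.0, so the estimator is unbiased
print(round(exact_se(sample_average, SD_ERROR), 3))  # 1.581
print(round(exact_se(proposed, SD_ERROR), 3))        # 42.72
```

The single weight of -8 contributes 64 of the 73 units in $\sum w_i^2$, which is why the SE explodes even though the estimator is unbiased.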

5) Provide a recipe for obtaining an estimate of the slope in a bivariate version of the standard econometric model: Yi = Intercept + Slope*Xi.

Imagine that there are five observations; thus, n = 5. Your recipe must involve the Y's and the X's. To be even more concrete, you might show how the estimator works with the following data set:

| $X_i$ | $Y_i$ |
|---|---|
| 10 | 60.33 |
| 20 | 112.55 |
| 30 | 165.03 |
| 40 | 218.45 |
| 50 | 255.03 |

or this data set:

| $X_i$ | $Y_i$ |
|---|---|
| 10 | 64.93 |
| 20 | 110.95 |
| 30 | 163.12 |
| 40 | 215.91 |
| 50 | 264.88 |

or another sample. Give us an estimator (a recipe) for obtaining the sample slope that we can use with data like this. Your recipe must be one we could use on any data set with five observations on X and Y, not just a particular sample. Also tell us whether your estimator is Linear and make a guess as to whether it is Unbiased.

A) Answers will vary. However, we receive many wrong answers.

Wrong answer A: “The answer is 0.2.” To obtain this wrong answer, you probably tried to find the slope and have given us an estimate, not an estimator. An estimator is a recipe that must work with any data set, not just one of the data sets used as an example. That recipe should tell us how to combine the X’s and Y’s. If it is a linear estimator, it should look like this: $\widehat{\text{Slope}} = \sum_{i=1}^{n} w_i Y_i$, where you need to specify the weights as functions of $X_1$, $X_2$, through $X_5$ (on the assumption that n = 5). Notice we do not specify them in terms of 10, 20, 30, through 50 because those are specific X values and the recipe needs to be general.

The most charitable thing we can say about 0.2 is that it is an estimator in the sense that you could just say 0.2 no matter what the X’s and Y’s are. It is linear in the sense that it looks like $\widehat{\text{Slope}} = 0.2 + \sum_{i=1}^{n} w_i Y_i$, where all the weights are 0. It is clearly biased unless the Slope ($\beta_1$) magically happens to be 0.2.

Wrong answer B: Some combination of the two data sets we gave you as examples. Again, you are using specific numbers and not giving us a general recipe. Also, note that, in practice, you typically have one data set and will not be able to combine observations from two data sets.

Wrong answer C: You give us an estimator, but it has the Y’s raised to some power other than 1 and you say the estimator is linear. No, in that case it is nonlinear.

Right, but suspicious answer D: You give us the Average Slopes or Extreme Points estimators. Perhaps you are really sharp; perhaps you read ahead and forgot to cite us as the source for your answer!
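One recipe of the required kind, sketched in Python under an assumption: we take the Extreme Points estimator to be the slope of the line through the observations with the smallest and largest X. Its weights depend only on the X's, so it is linear in the Y's. Treating the X's as fixed and the errors as mean-zero, $E\left(\sum w_i Y_i\right) = \text{Intercept}\sum w_i + \text{Slope}\sum w_i X_i$, so the sketch also checks the two conditions $\sum w_i = 0$ and $\sum w_i X_i = 1$ that make a linear slope estimator unbiased.

```python
def extreme_points_weights(xs):
    """Weights for the slope through the smallest-X and largest-X observations.

    Every other observation gets weight 0, so the recipe is linear in the Y's.
    Assumes the smallest and largest X values differ.
    """
    lo = min(range(len(xs)), key=lambda i: xs[i])
    hi = max(range(len(xs)), key=lambda i: xs[i])
    span = xs[hi] - xs[lo]
    weights = [0.0] * len(xs)
    weights[lo] = -1.0 / span
    weights[hi] = 1.0 / span
    return weights

def slope_estimate(xs, ys):
    """Slope estimate = sum of w_i * Y_i, with weights built only from the X's."""
    return sum(w * y for w, y in zip(extreme_points_weights(xs), ys))

xs = [10, 20, 30, 40, 50]
w = extreme_points_weights(xs)
# Unbiasedness conditions for Y = Intercept + Slope*X + error:
print(sum(w), sum(wi * xi for wi, xi in zip(w, xs)))   # about 0 and 1
print(slope_estimate(xs, [60.33, 112.55, 165.03, 218.45, 255.03]))
print(slope_estimate(xs, [64.93, 110.95, 163.12, 215.91, 264.88]))
```

Applied to the two example data sets, this recipe gives slopes of about 4.87 and 5.00; the point, though, is that the same function works on any five (X, Y) pairs.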

Bottom line: This is a very hard question, which is designed to get you to think about what the terms estimator and linear mean. You need to distinguish between estimates and estimators, linear and nonlinear, and unbiased and biased. Now that Section 14.7 has been covered, you have the tools to determine whether linear estimators are unbiased. Determining whether a nonlinear estimator is unbiased is really hard, though the default answer should be “probably not.” For example, in the univariate case, the median may or may not be unbiased for the population average. It depends on the distribution of the tickets in the box, whereas the Sample Average is guaranteed to be unbiased provided that the population average exists.
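A small simulation of the median point made above. Both "boxes of tickets" are hypothetical: a symmetric normal box with population average 100 and a right-skewed exponential box with population average 1. The Sample Average should average out near the population average in both cases, while the median should do so only for the symmetric box; for the skewed box its long-run average falls well below 1.

```python
import random
import statistics

def mc_average(estimator, draw, n_obs=10, reps=20_000, seed=3):
    """Average of an estimator's values over many simulated samples."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(reps):
        total += estimator([draw(rng) for _ in range(n_obs)])
    return total / reps

def symmetric_box(rng):
    """Hypothetical symmetric box: normal tickets with population average 100."""
    return rng.gauss(100, 5)

def skewed_box(rng):
    """Hypothetical skewed box: exponential tickets with population average 1."""
    return rng.expovariate(1.0)

results = {}
for name, draw in (("symmetric", symmetric_box), ("skewed", skewed_box)):
    results[name] = {
        "sample average": mc_average(statistics.fmean, draw),
        "median": mc_average(statistics.median, draw),
    }
    print(name, results[name])
```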

6) We set the SD of the errors to 200 in the UnivariateSample sheet, drew a sample, and got the result pictured below. Evaluate the following statement: "Holy cow! The Sample Average estimator is biased when the SD of the errors gets large enough. That estimate is way low! It’s off the chart!"

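Population parameters: $\beta = 200$, $SD(\varepsilon) = 200$

Sample Average Estimator

| Obs | $\beta$ | $\varepsilon_i$ | $Y_i$ | $w_i$ | $w_i\beta$ | $w_i\varepsilon_i$ | $w_i Y_i$ |
|---|---|---|---|---|---|---|---|
| 1 | 200 | 212.14 | 412.14 | 0.1 | 20 | 21.214 | 41.214 |
| 2 | 200 | -5.57 | 194.43 | 0.1 | 20 | -0.557 | 19.443 |
| 3 | 200 | -141.16 | 58.84 | 0.1 | 20 | -14.116 | 5.884 |
| 4 | 200 | -138.00 | 62.00 | 0.1 | 20 | -13.800 | 6.200 |
| 5 | 200 | 21.33 | 221.33 | 0.1 | 20 | 2.133 | 22.133 |
| 6 | 200 | -386.52 | -186.52 | 0.1 | 20 | -38.652 | -18.652 |
| 7 | 200 | -312.53 | -112.53 | 0.1 | 20 | -31.253 | -11.253 |
| 8 | 200 | -94.92 | 105.08 | 0.1 | 20 | -9.492 | 10.508 |
| 9 | 200 | -216.46 | -16.46 | 0.1 | 20 | -21.646 | -1.646 |
| 10 | 200 | -8.18 | 191.82 | 0.1 | 20 | -0.818 | 19.182 |
| Sum | | -1069.87 | 930.13 | 1.0 | 200 | -106.987 | 93.013 |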

[Figure: Observed Y plotted against Observation Number (1 to 10); the vertical axis runs from 185 to 215.]

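A) From the table above we can tell that the estimator is unbiased: the sum of the weights is 1. Because the spread of the errors, $SD(\varepsilon)$, is quite high, it is not surprising that individual estimates will be very far from the true value of $\beta$. With $SD(\varepsilon) = 200$ and ten equal weights of 0.1, the exact SE of the Sample Average is $200\sqrt{10 \times 0.1^2} \approx 63.2$, so this estimate of 93.013 is about 1.7 SEs below $\beta = 200$: unusual, but not shocking. Unbiasedness is a statement about the long-run average of the estimates, which equals $\beta$; it does not promise that any single estimate will be close to $\beta$.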
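A Monte Carlo sketch of the same situation, assuming normal errors with β = 200, SD(ε) = 200, and n = 10 (normality is an assumption made here, not something stated in the question). It repeats the draw many times and tracks how the Sample Average estimates behave, including how often an estimate is as low as 93.013.

```python
import random
import statistics

# Assumed DGP: Y_i = BETA + eps_i, eps_i ~ N(0, SD_ERROR), independent.
BETA, SD_ERROR, N_OBS, REPS = 200.0, 200.0, 10, 10_000
OUR_ESTIMATE = 93.013   # the single estimate from the sample above

rng = random.Random(2024)
estimates = []
for _ in range(REPS):
    ys = [BETA + rng.gauss(0, SD_ERROR) for _ in range(N_OBS)]
    estimates.append(statistics.fmean(ys))   # Sample Average estimate

long_run_average = statistics.fmean(estimates)
spread = statistics.stdev(estimates)
share_as_low = sum(e <= OUR_ESTIMATE for e in estimates) / REPS

print(f"long-run average: {long_run_average:.1f}")  # near 200
print(f"SD of estimates:  {spread:.1f}")            # near 200 * sqrt(0.1), about 63.2
print(f"share <= {OUR_ESTIMATE}: {share_as_low:.3f}")
```

The long-run average should sit near 200 even though the individual estimates spread out with an SD of roughly 63, and a few percent of samples produce an estimate at least as low as 93.013.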

7) Is the exact SE of the Diminishing Weights estimator always a constant multiple of the exact SE of the Sample Average estimator no matter what the value of $SD(\varepsilon)$ is? If so, why? If not, why not?

A) Yes. The reason can be found in the formula for the exact SE of a linear estimator in this case. Both the Sample Average and Diminishing Weights estimators are linear estimators whose SE can be easily computed. In each case the SE depends on the sum of the squares of the weights and the SD of the errors:

$$\begin{aligned}
SE\left(\sum_{i=1}^{n} w_i Y_i\right) &= \sqrt{Var\left(\sum_{i=1}^{n} w_i(\beta + \varepsilon_i)\right)} = \sqrt{Var\left(\sum_{i=1}^{n} \left(w_i\beta + w_i\varepsilon_i\right)\right)} = \sqrt{Var\left(\sum_{i=1}^{n} w_i\varepsilon_i\right)} \\
&= \sqrt{\sum_{i=1}^{n} w_i^2\,SD(\varepsilon)^2} = \sqrt{SD(\varepsilon)^2\sum_{i=1}^{n} w_i^2} = SD(\varepsilon)\sqrt{\sum_{i=1}^{n} w_i^2}.
\end{aligned}$$

Because, for this DGP, the SE of every linear estimator is directly proportional to the SD of the error terms, the ratio of the SEs of two linear estimators does not change when the SD of the error terms changes.

This derivation can be verified for the special case we considered. See the ComputingSEs sheet. We chose different values of $SD(\varepsilon)$ (you must set those values on the UnivariateSample sheet) and obtained the following table:

| $SD(\varepsilon)$ | SE(Sample Average Estimator) | SE(Diminishing Weights Estimator) | Ratio |
|---|---|---|---|
| 10 | 3.162 | 5.774 | 1.826 |
| 1 | 0.316 | 0.577 | 1.826 |
| 5 | 1.581 | 2.887 | 1.826 |

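The ratio 1.826 is simply the square root of the ratio of the sums of the squared weights for the two estimators (the common factor $SD(\varepsilon)$ cancels):

$$\sqrt{\frac{\sum_{i=1}^{n} w_i^2 \ \text{(Diminishing Weights)}}{\sum_{i=1}^{n} w_i^2 \ \text{(Sample Average)}}} = \sqrt{\frac{0.5^2 + 0.25^2 + \cdots + 0.00195312^2}{0.1^2 + 0.1^2 + \cdots + 0.1^2}} \approx 1.826.$$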
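The invariance of the ratio can be checked directly. This sketch assumes the Diminishing Weights estimator uses weights 1/2, 1/4, ..., 1/512 on the first nine observations and repeats 1/512 on the tenth so that the ten weights sum to 1; that last detail is an assumption, though it does not affect the ratio at three decimal places.

```python
import math

def exact_se(weights, sd_error):
    """Exact SE of a linear estimator under the CEM: SD(eps) * sqrt(sum of w_i**2)."""
    return sd_error * math.sqrt(sum(w * w for w in weights))

sample_average = [0.1] * 10
# Assumed Diminishing Weights layout: 1/2, 1/4, ..., 1/512, then 1/512 again
# so that the ten weights sum to exactly 1.
diminishing = [0.5 ** k for k in range(1, 10)] + [0.5 ** 9]

ratios = []
for sd in (10, 1, 5):
    se_avg = exact_se(sample_average, sd)
    se_dim = exact_se(diminishing, sd)
    ratios.append(se_dim / se_avg)
    print(sd, round(se_avg, 3), round(se_dim, 3), round(se_dim / se_avg, 3))
```

Each printed row should reproduce the corresponding row of the ComputingSEs table, with the same ratio of 1.826 for every value of SD(ε).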

8) Scroll down to row 100 of the UnivariateSample sheet. You are offered four estimators. Use the Monte Carlo simulation add-in to determine where to place them in the grid below. The grid is actually composed of four separate text boxes. Click on a box and type in your answer. Select cell range A93:I116, hold down the Shift key, and execute Edit: Copy Picture. Paste your picture in a Word document.

| | Biased | Unbiased |
|---|---|---|
| Linear | | arithmetic mean (aka, average) |
| Nonlinear | geometric mean, harmonic mean | median |

The Monte Carlo results that support our answer can be accessed from GaussMarkovUnivariate.xls. Execute Format: Sheet: Unhide and select MCRawCompare.

The harmonic and geometric means are nonlinear estimators because they cannot be written as a linear function of the Y’s. The harmonic mean, $n \big/ \sum_{i=1}^{n} (1/Y_i)$, uses reciprocals of the Y’s, and the geometric mean, $\left(\prod_{i=1}^{n} Y_i\right)^{1/n}$, raises the product of the Y’s to the power 1/n, where n is the number of observations in the sample (10 in our case). For more on various means and how they are related to each other, see http://mathworld.wolfram.com/PowerMean.html

More specific information on the geometric mean is at http://mathworld.wolfram.com/GeometricMean.html

More specific information for the harmonic mean can be found at http://mathworld.wolfram.com/HarmonicMean.html.
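The grid placements can be reproduced with a short simulation. This sketch is not the MCRawCompare sheet; it assumes a hypothetical DGP, $Y_i = 100 + \varepsilon_i$ with normal errors, $SD(\varepsilon) = 10$ and n = 10, and uses Python's `statistics` module (3.8 or later, for `geometric_mean` and `fmean`) for the four estimators. With symmetric errors the median averages out at β, matching its placement in the unbiased column, while the geometric and harmonic means come in below β.

```python
import random
import statistics

# Hypothetical DGP: Y_i = BETA + eps_i, eps_i ~ N(0, SD_ERROR), independent.
# The geometric and harmonic means need positive data; with these values a
# nonpositive draw is practically impossible.
BETA, SD_ERROR, N_OBS, REPS = 100.0, 10.0, 10, 10_000

ESTIMATORS = {
    "arithmetic mean": statistics.fmean,
    "median": statistics.median,
    "geometric mean": statistics.geometric_mean,
    "harmonic mean": statistics.harmonic_mean,
}

rng = random.Random(8)
totals = dict.fromkeys(ESTIMATORS, 0.0)
for _ in range(REPS):
    ys = [BETA + rng.gauss(0, SD_ERROR) for _ in range(N_OBS)]
    for name, estimator in ESTIMATORS.items():
        totals[name] += estimator(ys)

averages = {name: total / REPS for name, total in totals.items()}
for name, avg in averages.items():
    print(f"{name:>15}: average estimate {avg:.3f} (beta = {BETA})")
```

Because harmonic mean ≤ geometric mean ≤ arithmetic mean holds in every sample of positive numbers, the two nonlinear means cannot average out at β when the arithmetic mean does; they sit below it by an amount that grows with SD(ε).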
