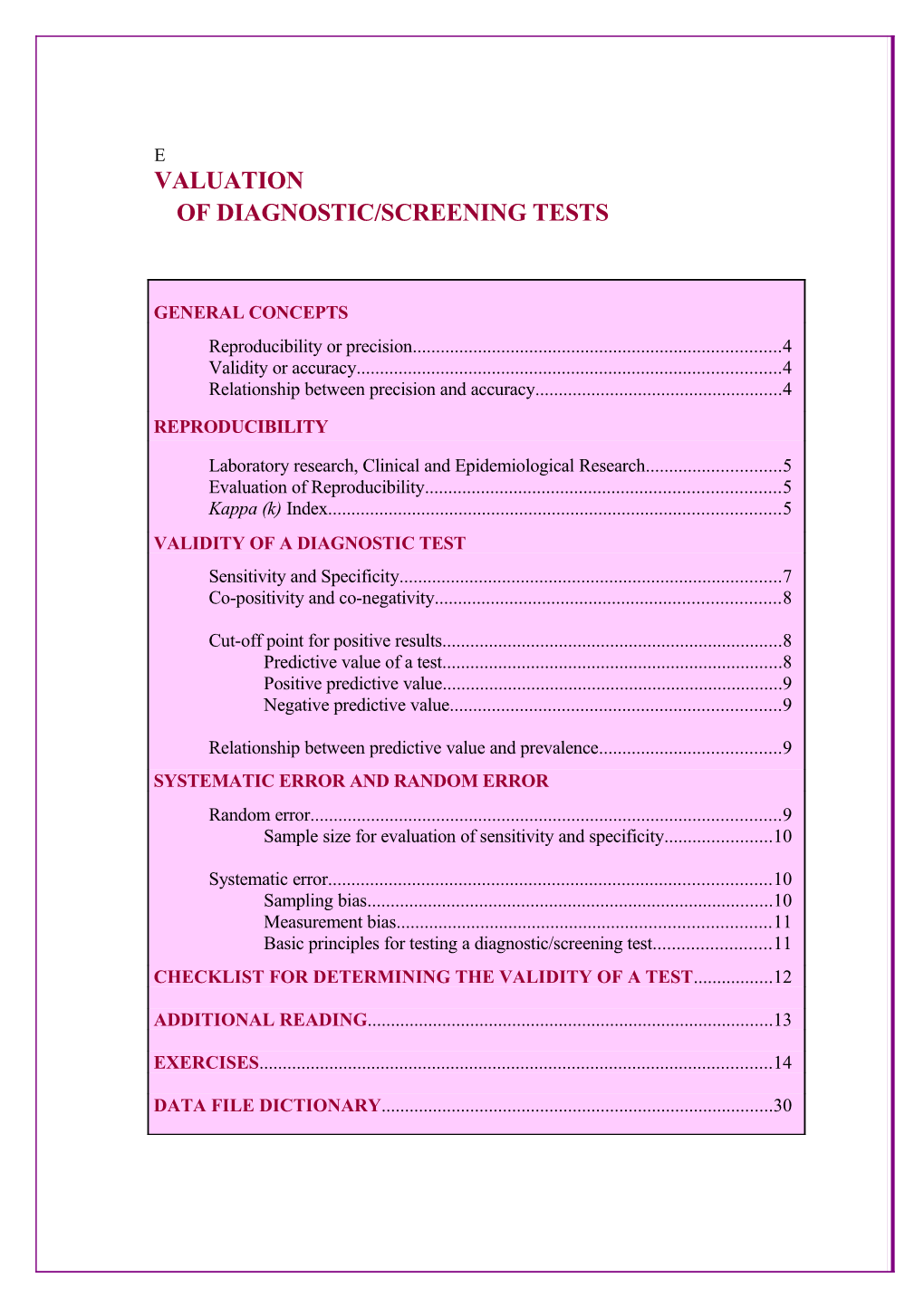

EVALUATION OF DIAGNOSTIC/SCREENING TESTS

GENERAL CONCEPTS: Reproducibility or precision; Validity or accuracy; Relationship between precision and accuracy

REPRODUCIBILITY: Laboratory research, clinical and epidemiological research; Evaluation of reproducibility; Kappa (k) index

VALIDITY OF A DIAGNOSTIC TEST: Sensitivity and specificity; Co-positivity and co-negativity; Cut-off point for positive results; Predictive value of a test; Positive predictive value; Negative predictive value; Relationship between predictive value and prevalence

SYSTEMATIC ERROR AND RANDOM ERROR: Random error; Sample size for evaluation of sensitivity and specificity; Systematic error; Sampling bias; Measurement bias; Basic principles for testing a diagnostic/screening test

CHECKLIST FOR DETERMINING THE VALIDITY OF A TEST

ADDITIONAL READING

EXERCISES

DATA FILE DICTIONARY

GENERAL CONCEPTS

The evaluation of the quality of diagnostic tests is a matter of interest to clinical and epidemiological research. In epidemiological research, the concept of a “diagnostic test” applies not only to a biomedical or laboratory test, but also to other procedures, such as a questionnaire, a clinical interview or a physical examination. The quality of a diagnostic test relies on the absence of systematic deviation from the truth (that is, the absence of bias) and on precision (the same test applied to the same patient or sample must produce the same results). This module discusses two basic concepts of the quality of a diagnostic test: reproducibility and validity. Different aspects of the design and analysis of these studies are discussed.

Reproducibility, repeatability or precision is the ability of a test to produce consistent results (nearly the same results) when independently performed under the same conditions. For example, a blood chemistry test is considered of high reproducibility when nearly the same result is obtained in repeated independent analyses. However, if the electronic instrument used in the analysis is not properly calibrated, the test may have high reproducibility but produce consistently wrong results. The same concept of reproducibility can be applied to more general situations, for example comparing the results of the same observer examining blood slides on different occasions.

Validity or accuracy refers to the degree to which a measurement, or an estimate based on measurement, represents the true value of the attribute that is being measured. Validity tells whether the results represent the “truth” or how far they diverge from it. For example, an ECG is a test of higher accuracy than auscultation of the heart to detect cardiac arrhythmias. An antigen detection dip-stick test for P. falciparum malaria can be considered 100% accurate when it gives a positive result for all samples from infected patients and a negative result for all samples from non-infected individuals.

Relationship between precision and accuracy. The figure below illustrates the relationship between the “true” value of a quantitative measurement and the value obtained by a study, in terms of high/low validity and reproducibility. Both indicators must be evaluated in the assessment of a new diagnostic test, as well as tests which are already in use, but applied in new settings.

[Figure: measured values relative to the true value, for combinations of high and low validity and high and low reproducibility]

Adapted from Beaglehole et al., 1993

REPRODUCIBILITY

Laboratory Research, Clinical and Epidemiological Research

Good reproducibility is generally obtained in laboratory work, where working conditions can be better controlled (a single observer, working with a new, high-quality, calibrated apparatus; control samples; an environment free of major disturbances; and appropriate working hours). Clinical and epidemiological research rarely matches the reliability found in laboratory research. Clinical diagnosis, for example, is a subjective process and hence prone to divergent interpretations even among competent and experienced clinicians. In general, low reproducibility tends to obscure the real correlations among events. This limits the usefulness of clinical diagnosis in population-based research, in terms of the study of associations between risk factors and health problems.

Evaluation of reproducibility

There are different ways of establishing agreement among different readings of the same event, of comparing different diagnostic methods, and of estimating any discordance in the comparison. Data can be expressed as a dichotomous variable (e.g. positive/negative parasitaemia), as a categorical variable (normal, borderline or abnormal biochemistry levels) or in continuous units of measurement (antibody titres). The type of data is one of the elements that influence how the results are analyzed. Generally, regardless of the type of data produced by a diagnostic test, clinicians/epidemiologists tend to reduce it to dichotomous or categorical variables to make the interpretation more useful in practice. The comparison of the results can be presented as the overall rate of agreement among examiners, or through the estimation of the Kappa index.

Kappa (k) index - A measure of the degree of nonrandom agreement between observers or measurements of the same categorical variable. Kappa shows the proportion of agreement beyond that expected to occur by chance. If the measurements agree more often than expected by chance, kappa is positive; if concordance is complete, kappa = 1; if concordance is neither more nor less than expected by chance, kappa = 0; if the measurements disagree more than expected by chance, kappa is negative. Table 1 presents the values of k and the respective interpretations.

Table 1 - Scale of agreement of Kappa

| Kappa | Agreement |
|---|---|
| < 0.00 | none |
| 0.00-0.20 | little |
| 0.21-0.40 | passable |
| 0.41-0.60 | fair |
| 0.61-0.80 | good |
| 0.81-0.99 | optimal |
| 1.00 | perfect |

Adapted from Landis & Koch, Biometrics, 1977

Table 2 presents the malaria diagnosis of 120 blood smears prepared under uniform conditions and read by two independent microscopists. The first identified 20 positive and 100 negative slides, while the second diagnosed 30 positive and 90 negative, which yielded 106 (18+88) concordant results and 14 (12+2) discordant results. The general rate of agreement was 88.3% (106/120) and k = 0.65 (65%).

Table 2 – Agreement between 2 blood smear readers

| Microscopist 2 | Microscopist 1 (+) | Microscopist 1 (-) | Total |
|---|---|---|---|
| (+) | 18 (a) | 12 (b) | 30 |
| (-) | 2 (c) | 88 (d) | 90 |
| Total | 20 | 100 | 120 |

Kappa is estimated as:

$$K = \frac{P_o - P_e}{1 - P_e}$$

Where: Po = the proportion of agreement observed; Pe = the proportion of agreement expected by chance

$$P_o = \frac{a + d}{a + b + c + d} = \frac{18 + 88}{120} = 0.883$$

$$P_e = \frac{(a + b)(a + c) + (c + d)(b + d)}{(a + b + c + d)^2} = \frac{(30 \times 20) + (90 \times 100)}{120^2} = 0.667$$

$$K = \frac{0.216}{0.333} = 0.648 = 64.8\%$$
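The kappa calculation can be reproduced with a few lines of Python. This is a minimal sketch, not part of the original module: the function names `cohen_kappa` and `agreement_label` are illustrative, and the label boundaries simply follow the scale in Table 1.

```python
def cohen_kappa(a, b, c, d):
    """Return (Po, Pe, kappa) for a 2x2 agreement table laid out as in Table 2."""
    n = a + b + c + d
    po = (a + d) / n  # observed proportion of agreement
    # Agreement expected by chance, from the row and column totals.
    pe = ((a + b) * (a + c) + (c + d) * (b + d)) / n ** 2
    return po, pe, (po - pe) / (1 - pe)


def agreement_label(kappa):
    """Map a kappa value to the wording used in Table 1."""
    if kappa < 0.0:
        return "none"
    if kappa >= 1.0:
        return "perfect"
    for upper, label in ((0.20, "little"), (0.40, "passable"),
                         (0.60, "fair"), (0.80, "good")):
        if kappa <= upper:
            return label
    return "optimal"


# Counts from Table 2: a=18, b=12, c=2, d=88.
po, pe, kappa = cohen_kappa(18, 12, 2, 88)
print(f"Po = {po:.3f}, Pe = {pe:.3f}, kappa = {kappa:.3f} ({agreement_label(kappa)})")
```

Without intermediate rounding the result is 0.650 rather than 0.648; the small difference comes from rounding Po and Pe to three decimals before dividing.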

In the interpretation of k, attention must be paid to:

Type of event and other factors - the level of agreement depends on the type of event, factors relating to the examiner, the procedure being tested and the environment in which the observations are made. In addition, reducing the number of categories of results (positive and negative values instead of high, medium, low and very low values) tends to enhance agreement.

Prevalence - the prevalence of the diagnosis or event in the population affects the final result. Low prevalence tends to be associated with low levels of agreement and reproducibility, as the value of k depends on agreement owing to chance. It is possible to find low levels of reproducibility due to the low prevalence of the event and not to errors relating to the diagnostic procedure employed. As a result, the prevalence must be reported together with the results of k.

Independence of the evaluation - evaluations must be independent of one another, a principle that also applies to the verification of accuracy. This means that when an examiner repeats a test he/she must ignore previous results obtained either by him/herself or by another examiner to avoid being influenced by that knowledge and, however involuntarily, prejudicing the evaluation.

VALIDITY OF A DIAGNOSTIC TEST

The validity of a test is the extent, in quantitative or qualitative terms, to which a test is useful for the diagnosis of an event (simultaneous or concurrent validity) or for making a prediction (predictive validity). To determine validity, test results are compared with a standard set of results (gold standard). This standard can be the true condition of the patient (if the information is available), a set of tests which is considered to be better, or another form of diagnosis that can serve as a reference standard. The ideal diagnostic test should always supply the correct answer, that is, a positive result in individuals who have a disease and a negative result in those who do not have it. In addition, it should be rapid to perform, safe, simple, innocuous, reliable and inexpensive.

Sensitivity and Specificity

To define the concepts of sensitivity and specificity, let us use as examples tests that yield dichotomous results, that is, results stated in two categories: positive and negative.

Table 3 presents a simplified illustration of the relationships between a test and a true diagnosis. The test result is considered positive (abnormal) or negative (normal), and the true illness status as present or absent. In the evaluation of a diagnostic test there are 4 possible interpretations of its results: two in which the test is correct and two in which it is incorrect. The test is correct when it is positive in the presence of the disease (true positive results) and negative in the absence of the disease (true negative results). Conversely, the test is incorrect when it is positive in the absence of the disease (false positive) and negative in its presence (false negative). The best diagnostic tests are those that yield few false positives and few false negatives.

Table 3. Validity of a diagnostic test

| Test result | Disease present (by a gold standard) | Disease absent (by a gold standard) | Total |
|---|---|---|---|
| positive | true positive (a) | false positive (b) | a + b |
| negative | false negative (c) | true negative (d) | c + d |
| Total | a + c | b + d | a + b + c + d = N |

The following proportions/indexes can be calculated from this comparison:

Sensitivity: a/(a+c)
Specificity: d/(b+d)
Prevalence (true): (a+c)/N
Estimated (test) prevalence: (a+b)/N
Positive predictive value: a/(a+b)
Negative predictive value: d/(c+d)
Correct classification (accuracy): (a+d)/N
Incorrect classification: (b+c)/N

Sensitivity - is the proportion of truly diseased persons identified by a diagnostic/screening test (proportion of ill individuals correctly diagnosed).

Specificity - is the proportion of truly non-diseased persons who are so identified by a diagnostic/screening test (proportion of correctly identifying non-ill individuals).

Co-positivity and co-negativity - terms used as substitutes for sensitivity and specificity, respectively, when the standard used for comparison is another test regarded as a reference for the given disease, instead of a true diagnostic of the presence or absence of disease.
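The indexes defined from the Table 3 layout can be computed together in one step. The sketch below is illustrative only: the function name `diagnostic_indices` is hypothetical, and the example counts are those of Table 2 under the assumption, made purely for illustration, that the reading of Microscopist 1 is treated as the gold standard.

```python
def diagnostic_indices(a, b, c, d):
    """Compute the indexes of a 2x2 table laid out as in Table 3.

    a = true positives, b = false positives,
    c = false negatives, d = true negatives.
    """
    n = a + b + c + d
    return {
        "sensitivity": a / (a + c),
        "specificity": d / (b + d),
        "true prevalence": (a + c) / n,
        "test prevalence": (a + b) / n,
        "positive predictive value": a / (a + b),
        "negative predictive value": d / (c + d),
        "correct classification": (a + d) / n,
        "incorrect classification": (b + c) / n,
    }


# Assumption: Table 2 counts, with Microscopist 1 taken as the reference.
indices = diagnostic_indices(18, 12, 2, 88)
for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```

When the reference is another test rather than the true disease status, the first two values would be reported as co-positivity and co-negativity.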

Cut-off point for positive results - The ideal test (with 100% sensitivity and 100% specificity) is rarely found in practice because the attempt to improve sensitivity frequently has the effect of reducing specificity. In some clinical situations there is no dichotomous diagnostic test result available and the results are presented as a continuous variable; in these cases the distinction between “normal” and “abnormal” is not straightforward.

To establish a cut-off point for positivity the investigator must take into account the relative importance of sensitivity and specificity in the diagnostic test, and judge the implications of the two parameters. False-positive results must be avoided when a positive result leads to a high-risk intervention; in this case, the cut-off point must be set so as to increase the specificity of the test. On the other hand, false-negative results should be avoided in situations where a missed positive case can put individuals at risk, such as in the serologic screening of potential blood donors; in such cases, the cut-off point must be established with a view to achieving 100% sensitivity, so that false negatives will be avoided at the cost of increasing the proportion of false positives.
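The trade-off between sensitivity and specificity as the cut-off point moves can be seen in a small numerical sketch. The titre values below are invented for illustration only and do not come from any study; the function name `sens_spec_at_cutoff` is likewise hypothetical.

```python
def sens_spec_at_cutoff(diseased, healthy, cutoff):
    """Return (sensitivity, specificity) when values >= cutoff count as positive."""
    true_pos = sum(v >= cutoff for v in diseased)
    true_neg = sum(v < cutoff for v in healthy)
    return true_pos / len(diseased), true_neg / len(healthy)


# Hypothetical reciprocal titres, invented for illustration only.
diseased = [16, 32, 32, 64, 64, 128, 128, 256]
healthy = [2, 4, 4, 8, 8, 16, 16, 32]

for cutoff in (8, 16, 32, 64):
    sens, spec = sens_spec_at_cutoff(diseased, healthy, cutoff)
    print(f"cut-off {cutoff:>3}: sensitivity = {sens:.3f}, specificity = {spec:.3f}")
```

With these made-up values, a low cut-off detects every diseased individual but misclassifies many healthy ones, while a high cut-off does the opposite, which is the pattern described in the text.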

To increase sensitivity in a screening, more than one type of diagnostic test can be used in parallel, considering as positive the samples that present at least one positive result. In population surveys, tests of high sensitivity must be used when the prevalence of infection at large is probably low. In clinical work, on the other hand, it is common to perform tests in series. Additional tests are performed to confirm previous negative or positive results.
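The effect of combining two tests can be sketched numerically. The formulas below rest on an assumption that the module does not state: the two tests err independently of each other given the true disease status. "Parallel" here means positive if at least one test is positive, and "series" means positive only if both tests are positive; the example values are hypothetical.

```python
def parallel(sens1, spec1, sens2, spec2):
    """Positive if EITHER test is positive (assumes independent test errors)."""
    sens = 1 - (1 - sens1) * (1 - sens2)  # missed only if both tests miss
    spec = spec1 * spec2                  # negative only if both are negative
    return sens, spec


def series(sens1, spec1, sens2, spec2):
    """Positive only if BOTH tests are positive (assumes independent test errors)."""
    sens = sens1 * sens2
    spec = 1 - (1 - spec1) * (1 - spec2)  # false positive only if both are wrong
    return sens, spec


# Hypothetical tests: A (sens 0.90, spec 0.90) and B (sens 0.80, spec 0.95).
par_sens, par_spec = parallel(0.90, 0.90, 0.80, 0.95)
ser_sens, ser_spec = series(0.90, 0.90, 0.80, 0.95)
print(f"parallel: sensitivity = {par_sens:.3f}, specificity = {par_spec:.3f}")
print(f"series:   sensitivity = {ser_sens:.3f}, specificity = {ser_spec:.3f}")
```

Under the independence assumption, the parallel rule raises sensitivity above that of either test at the cost of specificity, and the series rule does the reverse. Real tests for the same disease are often correlated, so the actual gains are usually smaller.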

Predictive value of a test - In epidemiology or clinical practice, the validity of a test reflects the extent to which it can predict the presence of a disease or infection. The investigator must be prepared to answer the question: given that the test yielded a positive (or negative) result, what is the probability that the individual is indeed sick (or is actually healthy)? This attribute of a test, known as its predictive value (PV), may be positive (PPV) or negative (NPV), and it depends on the sensitivity and specificity of the test and on the prevalence of the disease in the group under study.

Positive predictive value - the probability that a person with a positive test is a true positive (i.e. does have the disease). Using data from Table 2, and taking the reading of Microscopist 1 as the reference, the PPV would be 60% (18/30), which means that 6 out of every 10 individuals with a positive test would actually be diseased.

Negative predictive value - the probability that a person with a negative test is a true negative (i.e. does not have the disease). From Table 2, the NPV would be estimated as 88/90 = 98%; that is, 98 out of 100 negative tests would be from non-diseased individuals.

Relationship between predictive value and prevalence

Whereas the sensitivity and specificity of a test are inherent properties of the test and do not vary except in consequence of a technical error, PVs depend on the prevalence of the disease in the population under study. The PPV rises with prevalence while the NPV diminishes. Thus, when a disease is rare the PPV is low: most positive tests come from healthy individuals and are false positives. On the other hand, the NPV is high in areas of low prevalence. False-positive and false-negative findings can be minimized by a combination of tests in parallel (two or more tests performed simultaneously) or in series (two or more tests performed in sequence). If the intention is to reduce false-positive results (and increase specificity), a positive diagnosis would be confirmed only when at least 2 different tests are positive. On the other hand, to reduce false-negative results (and increase sensitivity), a single positive test would be sufficient to consider the diagnosis positive.
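The dependence of predictive values on prevalence follows from Bayes' theorem and can be tabulated directly. The sketch below is illustrative: the function name `predictive_values` and the sensitivity and specificity of 90% are assumptions chosen for the example, not values from the module.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a test applied at a given disease prevalence."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    true_neg = specificity * (1 - prevalence)
    false_neg = (1 - sensitivity) * prevalence
    return true_pos / (true_pos + false_pos), true_neg / (true_neg + false_neg)


# Hypothetical test with 90% sensitivity and 90% specificity.
for prevalence in (0.01, 0.10, 0.50):
    ppv, npv = predictive_values(0.90, 0.90, prevalence)
    print(f"prevalence {prevalence:.2f}: PPV = {ppv:.3f}, NPV = {npv:.3f}")
```

At 1% prevalence the PPV of this hypothetical test is only about 8%, while at 50% prevalence it reaches 90%; the NPV moves in the opposite direction.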

SYSTEMATIC ERROR AND RANDOM ERROR

Random error - Studies to evaluate the performance of diagnostic tests are subject to chance errors; a diagnostic test for a disease can yield normal results for some patients who have the disease. Confidence intervals for the sensitivity and specificity of a new test can be estimated to evaluate the range of variation expected among the results, so that they may be compared with the results of conventional tests. For example, a conventional Test C used in practice has a known sensitivity of 80% and a specificity of 85%, calculated on the basis of hundreds of individuals, and a new Test N was found to give positive results in all 10 patients with the disease of interest (100% sensitivity) and negative results in 9 out of 10 control individuals without the disease (90% specificity). While the sensitivity and specificity of the new Test N are greater than those described for Test C, the estimated 95% confidence intervals for both sensitivity (61%-100%) and specificity (55%-97%) are wide and include the values of the conventional test. The confidence intervals are wide because of the small number of individuals tested in the evaluation of the new test.
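A confidence interval for a proportion such as sensitivity or specificity can be sketched as follows. The module does not state which method produced the intervals quoted in the text, so this example uses the Wilson score interval as an assumption; its limits will therefore differ somewhat from those figures, although the qualitative conclusion (very wide intervals with 10 subjects) is the same. The function name `wilson_interval` is illustrative.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a proportion (z = 1.96 for a 95% interval)."""
    p = successes / n
    denom = 1 + z ** 2 / n
    centre = (p + z ** 2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    # Clamp to [0, 1] to guard against floating-point overshoot.
    return max(0.0, centre - half), min(1.0, centre + half)


# Test N from the text: 10 of 10 diseased positive, 9 of 10 controls negative.
sens_low, sens_high = wilson_interval(10, 10)
spec_low, spec_high = wilson_interval(9, 10)
print(f"sensitivity 100%: 95% CI {sens_low:.1%} to {sens_high:.1%}")
print(f"specificity  90%: 95% CI {spec_low:.1%} to {spec_high:.1%}")
```

Other methods, such as the exact binomial interval, give slightly different limits for the same data.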

One strategy for minimizing chance errors is to estimate the sample size needed to determine the validity of the diagnostic test, by constructing confidence intervals and defining ranges that include the desired sensitivity and specificity of the test. This requires the calculation of sample sizes for sensitivity and specificity estimations.


Sample size for evaluation of sensitivity and specificity

Sample sizes are calculated for dichotomous variables on the same principles as for descriptive/prevalence studies, for which the following information is required:

1. An estimate of the expected proportion of positivity in the study population (if greater than 50%, use the proportion of persons with negative results),
2. Desired width of the confidence interval, and
3. Definition of the confidence level (generally 95%)

$$N = \frac{Z^2 \, P (1 - P)}{D^2}$$

Where:
P = expected proportion
D = half-width of the confidence interval
Z = 1.96 (for a significance level of 0.05, that is, a 95% CI)

For example, in a study to determine the sensitivity of a new diagnostic test for malaria, it is expected that 80% of the patients with malaria will test positive (results from a pilot study). How many individuals with malaria must be examined to estimate a test sensitivity of 80% with a precision of 4%, at the 95% confidence level? Assuming:

(1) expected proportion = 0.20 (80% is higher than 50%; hence the proportion of individuals with malaria but testing negative, 20%, is used).
(2) total width of the confidence interval = 0.08. Use the half-width (0.04) as the maximum acceptable error.
(3) confidence level = 95%.

Using the above formula, 384 patients with malaria would be required to estimate a test sensitivity of 80% within a confidence interval of 76%-84%:

$$n = \frac{1.96^2 \times 0.20 \times (1 - 0.20)}{0.04^2} = 384 \text{ persons}$$

The same procedures hold for calculations of sample size to determine the specificity of a test. For example, if 90% of individuals without malaria are expected to test negative, 216 individuals would be required to estimate a specificity of 90% (±0.04) with a 95% confidence interval.
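Both sample-size examples can be checked with a short function. This is a minimal sketch of the formula N = Z²P(1 − P)/D²; the function name `sample_size_for_proportion` is illustrative, and rounding to the nearest whole number follows the figures given in the text (many authors round up instead).

```python
def sample_size_for_proportion(p, half_width, z=1.96):
    """Sample size to estimate a proportion p within +/- half_width."""
    return z ** 2 * p * (1 - p) / half_width ** 2


# Sensitivity example: 80% expected to test positive, so P = 0.20 is used.
n_sensitivity = sample_size_for_proportion(0.20, 0.04)
# Specificity example: 90% expected to test negative, so P = 0.10 is used.
n_specificity = sample_size_for_proportion(0.10, 0.04)

print(f"sensitivity: n = {n_sensitivity:.2f}, rounded to {round(n_sensitivity)}")
print(f"specificity: n = {n_specificity:.2f}, rounded to {round(n_specificity)}")
```

Because P(1 − P) is symmetric around 0.5, using 0.80 instead of 0.20 (or 0.90 instead of 0.10) gives the same result.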

Systematic error - Studies of diagnostic tests are generally subject to the same biases as observational studies; the most common are biases of sampling and test measurement.

Sampling bias – A sampling bias occurs when the samples tested are not representative of the target population on which the test is to be performed. For example, the selection of individuals from referral services tends to include persons with severe/advanced forms of the disease and patients whose test results may be more abnormal (or different) than they would be in other clinical forms of the disease. As a result, the study overestimates the sensitivity of the test under routine conditions. In the same way, the study will yield an increased specificity if individuals without the disease have been selected from volunteers, as they tend to be healthier than individuals recruited from ambulatory clinics who, although symptomatic, do not have the disease of interest. The strategy to minimize this type of error is to select population samples similar to the one on which the test will be used in the future.

Testing a panel of samples may result in an overestimation of the positive predictive value of the test. A common situation is to test an equal number of individuals or samples with and without the disease, which is equivalent to a prevalence of 50%, usually higher than in the general population. To offset this bias, the assessment should consider the predictive value of the test adjusted for other probabilities of disease, so that the investigator may evaluate the utility of the test in other clinical/epidemiological situations.

Measurement bias - Wherever possible, the investigator interpreting the test must not know who has the disease and who does not, in order to avoid biasing his/her interpretation of the results, especially in borderline situations. Similarly, the researcher must remain blinded when performing the diagnostic tests. The cut-off point for a positive test must be established before the study is conducted.

Basic principles for testing a diagnostic/screening test

Studies designed to evaluate or compare the clinical or public health utility of diagnostic tests should select participants at random, and the comparison should be blind. Tests should be performed in the same individuals or samples to avoid any subsequent bias in clinical investigation, treatment decision and any external variation in the results. The tests should be conducted under the conditions and in the population in which they would be used in the future. The fact that a test identifies advanced/severe cases of a disease does not mean that it will be equally useful to distinguish patients with mild manifestations of the disease from other patients with similar symptoms.


CHECKLIST FOR DETERMINING THE VALIDITY OF A TEST

- Establish the need for the test
  - advantages of the new test over those already in use
  - benefits for patients from introduction of a new test
  - cost considerations
- Determine the sampling criteria
  - define the reference population and study population
  - identify the source population from which participants are to be selected
  - qualify sampling material, patients, etc.
- Describe the test and the gold standard
  - product, reagents, origin
  - laboratory, clinical procedures
  - interpretation and categorization of the parameters to be evaluated
- Describe operation for the test and gold standard
  - coding of samples
  - blinding
- Calculate the size of the sample
  - state the minimum number of participants required to estimate the sensitivity and specificity of the test with a given 95% confidence interval
  - estimate the number of cases that are potentially detectable (within the study time frame) from the source population
- Discuss ethical issues
  - benefits and risks of the test and of detecting positive individuals
  - availability of medical care to positive individuals
  - confidentiality of test results
- Analysis of the data
  - present the results in terms of sensitivity, specificity and predictive values with their respective 95% confidence intervals

Adapted from Hulley & Cummings, 1988

ADDITIONAL READING

BEAGLEHOLE, R., BONITA, R. & KJELLSTRÖM, T. Basic Epidemiology. World Health Organization, Geneva, 1993.

BUCK, A.A. & GART, J.J. Comparison of a screening test and a reference test in epidemiologic studies. I. Indices of agreement and their relation to prevalence. American Journal of Epidemiology, 83:586-92, 1966.

FLEISS, J.L. Statistical methods for rates and proportions, 2nd ed. New York, John Wiley & Sons, 1981.

FLETCHER, R.W.; FLETCHER, S.W. Clinical Epidemiology, the essentials. Lippincott Williams & Wilkins, 2005.

GORDIS, L. Epidemiology. Elsevier Science, 3rd edition, 2004

GALEN, R.S. & GAMBINO, S.R. Beyond normality: the predictive value and efficiency of medical diagnosis, New York:John Wiley & Sons ed., 1975.

HULLEY, S. B. B., THOMAS B. N., WARREN S.B. & CUMMINGS, S.R. Designing Clinical Research: An Epidemiologic Approach. Lippincott Williams & Wilkins 2nd edition, 2000.

KRAEMER, H.C. & BLOCH, D.A. Kappa coefficients in epidemiology: an appraisal of a reappraisal. Journal of Clinical Epidemiology, 41:959-68, 1988.

EXERCISES

Files: 1. ViewEcge 2. ViewMalqc 3. Viewbloodche

Exercise 1

Agreement among electrocardiogram readings – data table ecge from EPIGUIDE.MDB project contains the results of two independent readings of 100 electrocardiograms.

Before starting the exercise, route out the results to an HTML file named “Results ECGE”:
ROUTEOUT 'Results ECGE' [Figure 1]

[Figure 1 – Route Out results]

1- Click on RouteOut
2- Define the folder to save the HTM file
3- Write the file name
4- Mark this box if you want to replace an existing file


Question 1. Calculate the agreement (Kappa) of the diagnosis of electrocardiographic abnormality made by two observers (A and B). Interpret the results.

Note 1:
[Read the data file] [Figure 2]
READ 'C:\EPIGUIDE\EpiGuide.mdb':viewECGE
TABLES CENTERA CENTERB [Figure 3]
[Note the results]

For Epitable: Run EPITABLE to calculate Kappa – select COMPARE, then select PROPORTIONS, then select RATER AGREEMENT (Kappa) Choose TWO CATEGORIES [Use data from preceding table] Press F10 to leave EPITABLE Return to ANALYSIS

For Open Epi: [Figure 4, Figure 5] Access OPEN EPI from the EpiGuide CD or from www.openepi.com From Open Epi menu choose DIAGNOSTIC/SCREENING. Click on ENTER NEW DATA button. On the dialogue box, when asked to ENTER THE NUMBER OF POSSIBLE OUTCOMES THE DIAGNOSTIC CAN REPORT, type “2”. Populate the table provided with the data from preceding table. Click CALCULATE when finished. A result page will be shown. Return to ANALYSIS

EXIT [to close ANALYSIS]


[Figure 2 – Read data file]

1 – Click on Read from the Analysis Commands tree
2 – Change to the desired project: EPIGUIDE.MDB
3 – Identify the data file you will use in the exercise: ViewECGE
4 – Click Ok

[Figure 3 – Tables command]

1 – Click on Tables
2 – Choose the Exposure Variable
3 – Choose the Outcome variable
4 – Click Ok

[Figure 4 – Open Epi – Diagnostic/Screening calculator]

1- Click Diagnostic/Screening

2- Click Enter New Data

[Figure 5 - Open Epi – Diagnostic/Screening calculator]

3- Enter # of possible outcomes

Populate the Input table

Exercise 2

Parasitological diagnosis of malaria – data table malqc from EPIGUIDE.MDB project contains the results of blood smear examination of 141 febrile individuals who presented spontaneously on the same day at 2 local malaria diagnosis units (National Health Foundation or FNS). All slides were routed independently to a Quality Control Center of the Ministry of Health, and the results were compared. The analysis was designed to evaluate the validity and agreement of the malaria diagnosis at the local health services and the Quality Control Center. Answer the following questions using the data file malqc.

Before starting the exercise, route out the results to an HTML file named “Results MALQC”:
ROUTEOUT 'Results MALQC'

Question 1. What is the overall prevalence and 95% CI of malaria based on the results reported by the Quality Control Center? What is the frequency of each species among the cases with malaria?

Note 1:
READ 'C:\EPIGUIDE\EpiGuide.mdb':viewMALQC
FREQ SLIDEQC
SELECT SLIDEQC = 2 [to select the individuals with malaria] [Figure 6]
FREQ DIAGQC
SELECT [to disable selection] [Figure 7]

[Figure 6 – Select command]

1- Click Select
2- Choose the variable(s) to build the selection criteria
3- Define the selection criteria
4- Click OK when finished


[Figure 7 – Cancel Select]

1- Click Cancel Select

2 - Click OK to cancel current selection criteria

Question 2. What is the overall prevalence (95% CI) of malaria based on the results of the FNS diagnosis units? What is the frequency of diagnosis by species?

Note 2:
FREQ SLIDEFNS
SELECT SLIDEFNS = 2 [to select the individuals with malaria]
FREQ DIAGFNS [Figure 8]
SELECT [to disable selection]

[Figure 8 - Frequencies command]

1- Click Frequencies
2- Choose the variable(s)
3- Click OK when finished


Question 3. Compare the proportion of malaria cases by species using the results provided by the FNS diagnosis units and the Quality Control Center (questions 1 and 2). What are the implications of these findings for the control program?

Question 4. What is the agreement (Kappa) of the diagnosis of malaria between the FNS diagnosis units and the Quality Control Center? Interpret the result of the local units.

Note 4:
TABLES SLIDEFNS SLIDEQC
[Note the results – absolute numbers]

For Epitable: Run EPITABLE to calculate Kappa -- select COMPARE, then select PROPORTIONS, then select RATER AGREEMENT (Kappa) Choose TWO CATEGORIES Press ESC to return to EPITABLE menu

For Open Epi: Access OPEN EPI from the EpiGuide CD or from www.openepi.com From Open Epi menu choose DIAGNOSTIC/SCREENING. click on ENTER NEW DATA button. On the dialogue box, when asked to ENTER THE NUMBER OF POSSIBLE OUTCOMES THE DIAGNOSTIC CAN REPORT, type “2”. Populate the table provided with the data from preceding table. Click CALCULATE when finished. A result page will be shown. Note the results and leave the result window open.

Question 5. What is the sensitivity (95% CI) of the FNS malaria diagnosis units? What is the total number of false-negative cases of each species? Take as gold standard the results of the Quality Control Center.

Note 5:
For EPITABLE: From the EPITABLE menu select STUDY, then select SCREENING [Use the data in the table generated in question 4]. Press F10 to leave EPITABLE. Return to ANALYSIS.

For Open Epi: Go back to the Open Epi result window and note the result. Return to ANALYSIS.

TABLES DIAGFNS DIAGQC

Question 6. What is the positive predictive value (95% CI) of fever in the diagnosis of malaria at these reporting posts? What is your opinion about introducing presumptive malaria treatment (treatment of symptomatic individuals before parasitological confirmation) in this area?

Note 6: FREQ SLIDEQC EXIT [to close Analysis] [Figure 9]

Question 7. Discuss the implications of the results of this investigation in light of the Global Malaria Control Strategy- early diagnosis and prompt treatment. What strategies would you recommend to improve the quality of malaria diagnosis in this area?

Note 7: EXIT [to close Analysis] [Figure 9]

[Figure 9 – EXIT Analysis]

Click EXIT to close Analysis


Exercise 3

Validation of screening for Chagas disease in blood banks – the data table bloodche, included in the EPIGUIDE.MDB project, contains the serologic results for T. cruzi infection in 1,513 first-time blood donors screened by hemagglutination (HA) and complement fixation (CF) in the 6 blood banks of the city of Goiânia (1988-1989). Samples of these sera were sent independently to a WHO Chagas Disease Reference Laboratory, and the results were compared. Details of the methodology may be found in Andrade et al., 1992. The purposes of the analysis were (1) to assess the sensitivity of the blood banks in preventing transfusion of blood tainted with Chagas disease, and (2) to evaluate the agreement between serodiagnosis for T. cruzi infection performed under ideal conditions by the reference laboratory and in the routine practice of the blood banks. Use viewBloodche from the EPIGUIDE.MDB project to answer the following questions:

Before starting the exercise, route out the results to an HTML file named “Results BLOODCHE”:
ROUTEOUT 'Results BLOODCHE'

Question 1. Compare the prevalence of seropositivity for T. cruzi by the HA and IF techniques performed by the reference laboratory. Evaluate the benefit of screening in parallel against screening by only one of the techniques.

Note 1:
READ 'C:\EPIGUIDE\EpiGuide.mdb':viewBLOODCHE
[Since the table percentages and the statistical analysis are not needed here, you can turn them off with the SET command.] [Figure 10]
SET PERCENTS=(-) STATISTICS=NONE

[Create variables HAGR (HA>=16) and IIFGR (IF>=40)]
DEFINE HAGR - standard [hemagglutination group] [Figure 11]
IF HARL = -1 THEN ASSIGN HAGR = "S" END [without serology] [Figure 12]
IF HARL > -1 AND HARL < 16 THEN ASSIGN HAGR = "N" END [negative result]
IF HARL >= 16 THEN ASSIGN HAGR = "R" END [reactive result]

DEFINE IIFGR _ [immunofluorescence group]
IF IFRL = -1 THEN ASSIGN IIFGR = "S" END
IF IFRL > -1 AND IFRL < 40 THEN ASSIGN IIFGR = "N" END
IF IFRL >= 40 THEN ASSIGN IIFGR = "R" END

SELECT HAGR <> "S" [to exclude missing results from HAGR]
FREQ HAGR
SELECT [to disable selection]

SELECT IIFGR <> "S" [to exclude missing results from IIFGR]
FREQ IIFGR
SELECT [to disable selection]
TABLES HAGR IIFGR
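The recoding performed by the DEFINE and IF commands above can be mirrored in a few lines of Python, which may help when checking the logic by hand. This is only an illustrative sketch: the function name `titre_group` and the sample titres are assumptions, not part of the bloodche table or of Epi Info.

```python
from collections import Counter

def titre_group(titre, cutoff):
    """Map a titre to 'S' (no serology), 'N' (negative) or 'R' (reactive).

    Mirrors the IF blocks of Note 1: -1 means no serology, values from 0
    up to (but not including) the cut-off are negative, and values at or
    above the cut-off are reactive. Any other value is left unassigned.
    """
    if titre == -1:
        return "S"
    if -1 < titre < cutoff:
        return "N"
    if titre >= cutoff:
        return "R"
    return None

# Hypothetical (HARL, IFRL) pairs, not taken from the bloodche table.
sera = [(-1, -1), (0, 20), (16, 40), (8, 80), (64, 0), (-1, 40)]

table = Counter()
for harl, ifrl in sera:
    hagr = titre_group(harl, cutoff=16)    # HA reactive at titre >= 16
    iifgr = titre_group(ifrl, cutoff=40)   # IF reactive at titre >= 40
    if hagr != "S" and iifgr != "S":       # keep complete pairs only
        table[(hagr, iifgr)] += 1

for (hagr, iifgr), count in sorted(table.items()):
    print(f"HAGR={hagr} IIFGR={iifgr}: {count}")
```

The resulting counts correspond to the cells of TABLES HAGR IIFGR restricted to sera with both results available.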

[Figure 10 – SET command]

1- Click SET

2- Uncheck Show Percents

3- Check Statistics None

[Figure 11 – Define new variable]

1 – Click Define to create a new variable

2 – Type the new variable name

[Figure 12 – IF command]

1 – Click IF to establish conditions for the new variable

2 – Choose the variable to build the condition

3 – Create the condition(s) to assign the values for the new variable

4 – Click THEN to access the THEN Block

5 – Click ASSIGN

6 – Choose the variable to receive the new values

7 – Choose from the Available variables to construct the expression

8 – Revise the assign expression

9 – Click ADD to return to the IF window

10 – Click OK when finished

Question 2. Calculate the Kappa agreement index between HA and IF tests performed in the reference laboratory. Interpret the results.

Note 2: [Use only the pairs available for both techniques]
SELECT HAGR <> "S" AND IIFGR <> "S"
TABLES HAGR IIFGR
SELECT
[Note the results]

For Epitable: Run EPITABLE to calculate Kappa – select COMPARE, then select PROPORTIONS, then select RATER AGREEMENT (Kappa) [Use the data from the previous table] Return to Analysis leaving EPITABLE active

For Open Epi: Access OPEN EPI from the EpiGuide CD or from www.openepi.com. From the Open Epi menu choose DIAGNOSTIC/SCREENING. Click on the ENTER NEW DATA button. In the dialogue box, when asked to ENTER THE NUMBER OF POSSIBLE OUTCOMES THE DIAGNOSTIC CAN REPORT, type "2". Fill in the table provided with the data from the preceding table. Click CALCULATE when finished. A result page will be shown. Note the results and close the result window.
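To see what the Kappa index measures, it can also be computed by hand from the agreement table: observed agreement is compared with the agreement expected by chance from the row and column totals. The sketch below is a minimal illustration with hypothetical counts (not the results of this exercise); it does not compute a confidence interval.

```python
def cohen_kappa(table):
    """Cohen's kappa for a square agreement table given as a list of rows."""
    k = len(table)
    n = sum(sum(row) for row in table)
    # Observed agreement: proportion of pairs on the main diagonal.
    observed = sum(table[i][i] for i in range(k)) / n
    # Chance-expected agreement from the marginal totals.
    row_tot = [sum(row) for row in table]
    col_tot = [sum(table[i][j] for i in range(k)) for j in range(k)]
    expected = sum(row_tot[i] * col_tot[i] for i in range(k)) / n**2
    return (observed - expected) / (1 - expected)

# Hypothetical counts (assumption): rows = HA (R, N), columns = IF (R, N).
agreement = [[40, 10],
             [5, 945]]
kappa = cohen_kappa(agreement)
print(f"kappa = {kappa:.3f}")
```

Note that the function is undefined when chance-expected agreement equals 1 (for example, when every result falls in a single category).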

[Attention: To continue the exercise using these new variables, it is necessary to create a new data table that contains them. Use the WRITE (export) command with the Replace option to generate a new data table called bloodche2 inside the EPIGUIDE.MDB project. [Figure 13] To access the new data table, use the Read command to open it (option: show all).]

Read 'C:\EPIGUIDE\EpiGuide.mdb':bloodche2

Question 3. Calculate the agreement between the results of the reference laboratory and the blood banks. To make the comparison, create 2 new variables: one for the results of the reference laboratory (RLRES = reference serology) and another for the blood banks (BBRES = positive result in screening).

Note 3: [Create variable RLRES (IIFGR= R or HAGR= R)]
DEFINE RLRES - standard
IF HAGR = "S" AND IIFGR = "S" THEN ASSIGN RLRES = "S" ELSE ASSIGN RLRES = "N" END [Recoding to eliminate empty records]
IF HAGR = "R" OR IIFGR = "R" THEN ASSIGN RLRES = "R" END

[Create variable BBRES (HABB=R or CFBB=R)]
DEFINE BBRES - standard
IF HABB = "S" AND CFBB = "S" THEN ASSIGN BBRES = "S" ELSE ASSIGN BBRES = "N" END
IF HABB = "R" OR CFBB = "R" THEN ASSIGN BBRES = "R" END

[Use only the pairs available for both the reference laboratory and the blood banks]
SELECT BBRES <> "S" AND RLRES <> "S"
TABLES BBRES RLRES
[Note results and set up the table]

For Epitable: Run EPITABLE to calculate Kappa: select COMPARE, then select PROPORTIONS, then select RATER AGREEMENT (Kappa) [Use the data from the previous table]. Return to ANALYSIS leaving EPITABLE active.

For Open Epi: Go back to Open Epi Input Table Window, click on CLEAR, and use the data from the previous table. Note the results and close the result window. Return to ANALYSIS.

SELECT [to disable selection]
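The rule used in Note 3 for RLRES and BBRES is a parallel combination: the combined result is reactive if either test is reactive, missing only if both tests are missing, and negative otherwise. The sketch below restates that rule in Python and builds the paired table; the function name `parallel_result` and the sample records are hypothetical.

```python
from collections import Counter

def parallel_result(first, second):
    """Combine two S/N/R results: reactive if either test is reactive."""
    if first == "R" or second == "R":
        return "R"
    if first == "S" and second == "S":
        return "S"   # no serology by either technique
    return "N"

# Hypothetical (HABB, CFBB, HAGR, IIFGR) records, not from bloodche2.
records = [
    ("R", "N", "R", "R"),
    ("N", "N", "N", "R"),
    ("N", "S", "N", "N"),
    ("S", "S", "R", "R"),
    ("N", "N", "S", "S"),
]

pairs = Counter()
for habb, cfbb, hagr, iifgr in records:
    bbres = parallel_result(habb, cfbb)    # blood bank screening result
    rlres = parallel_result(hagr, iifgr)   # reference laboratory result
    if bbres != "S" and rlres != "S":      # same filter as the SELECT above
        pairs[(bbres, rlres)] += 1

print(dict(pairs))
```

As in the Epi Info commands, a serum with one negative and one missing result is classified as negative, which is worth keeping in mind when interpreting the agreement.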


[Figure 13 – Write (export)]

1 – Click Write (Export) to create a new data file

2 – Choose the variable(s) for the new data file

3 – Choose the output mode – Replace

4 – Choose the file output format – Epi 2000

5 – Choose the File Name (name of the project)

6 – Choose / Type the Data Table (name of the new data file)

7 – Click OK when finished

Question 4. Calculate the sensitivity, PPV and NPV of the blood banks' result (BBRES). Construct a new variable (FRLRES), taking as the gold standard co-positivity by HA and IF in the reference laboratory. How many seropositives failed to be detected by the blood banks in their routine screening (false negatives)? What proportion of donors found seropositive by the blood banks had the diagnosis confirmed (answer using the PPV)?

Note 4: [Create variable FRLRES (IIFGR= "R" and HAGR= "R")]
DEFINE FRLRES - standard
IF IIFGR = "R" AND HAGR = "R" THEN ASSIGN FRLRES = "R" END
IF IIFGR = "N" OR HAGR = "N" THEN ASSIGN FRLRES = "N" END
IF IIFGR = "S" AND HAGR = "S" THEN ASSIGN FRLRES = "S" END

[Attention: To continue the exercise using this new variable, it is necessary to create a new data table that contains it. Use the WRITE (export) command to generate a new data table called bloodche3 inside the EPIGUIDE.MDB project. To access the new data table, use the Read command to open it (option: show all).]

Read 'C:\EPIGUIDE\EpiGuide.mdb':bloodche3

[Use only the pairs available for both the reference laboratory and the blood banks]
SELECT BBRES <> "S" AND FRLRES <> "S"
TABLES BBRES FRLRES
[Note the results – absolute numbers]

For Epitable: To calculate sensitivity, use EPITABLE: select STUDY, then select SCREENING. Press F10 to leave EPITABLE and return to ANALYSIS.

For Open Epi: Go back to Open Epi Input Table Window, click on CLEAR, and use the data from the previous table. Note the results and close the result window. Return to ANALYSIS.

SELECT [to disable selection]
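The measures requested in Question 4 all come from the four cells of the 2×2 table of screening result against gold standard. The following sketch shows the arithmetic with hypothetical counts (they are not the answer to the exercise); the function and argument names are assumptions made for illustration.

```python
def screening_measures(tp, fp, fn, tn):
    """Return validity measures from the cells of a 2x2 screening table.

    tp: screening reactive, gold standard reactive (true positives)
    fp: screening reactive, gold standard negative (false positives)
    fn: screening negative, gold standard reactive (false negatives)
    tn: screening negative, gold standard negative (true negatives)
    """
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }

# Hypothetical counts (assumption), e.g. BBRES in rows and FRLRES in columns.
measures = screening_measures(tp=30, fp=20, fn=10, tn=940)
for name, value in measures.items():
    print(f"{name}: {value:.3f}")
```

The number of false negatives (`fn`) is read directly from the cell of donors negative in the blood banks but reactive by the gold standard.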

Question 5. What is the number of seropositives detected by HA (HABB) and by CF (CFBB) in the blood banks? Which test would be best to use for screening in the blood banks? Evaluate the benefit of screening in the blood banks by both techniques compared with the use of only one.

Note 5: [Use only the pairs available for both techniques, HA and CF]
SELECT HABB <> "S" AND CFBB <> "S"
[Exclude BB6, as results are available only for HA]
SELECT BB <> 6
TABLES HABB CFBB
SELECT [to disable selection]

[Use the SET command to reactivate the table percentages and the statistical analysis.]
SET PERCENTS=(+) STATISTICS=COMPLETE

EXIT [to close ANALYSIS]


REFERENCES

ANDRADE, A.L.S.S., MARTELLI, C.M.T., LUQUETTI, A.O., OLIVEIRA, O.S.O., SILVA, S.A. & ZICKER, F. Serologic screening for Trypanosoma cruzi among blood donors in Central Brazil. Bulletin of the Pan American Health Organization, 26(2):157-164, 1992.

ANDRADE, A.L.S.S., MARTELLI, C.M.T., OLIVEIRA, R.M., ARIAS, J.R., ZICKER, F. & PANG, L. High prevalence of asymptomatic malaria in gold mining areas in Brazil. Clinical Infectious Diseases, 20(2):475, 1995.

For Analysis:

DEAN AG, ARNER TG, SUNKI GG, FRIEDMAN R, LANTINGA M, SANGAM S, ZUBIETA JC, SULLIVAN KM, BRENDEL KA, GAO Z, FONTAINE N, SHU M, FULLER G. Epi Info™ a database and statistics program for public health professionals. Centers for Disease Control and Prevention, Atlanta, Georgia, USA, 2002. http://www.cdc.gov/epiinfo/downloads.htm

DEAN A.G., DEAN J.A., COULOMBIER D. et al. Epi Info™, Version 6.04, a word processing, database, and statistics program for public health on IBM-compatible microcomputers. http://www.cdc.gov/epiinfo/Epi6/ei6.htm

DEAN, A., SULLIVAN, K, & SOE, M.M. OpenEpi - Open Source Epidemiologic Statistics for Public Health. http://www.openepi.com

DATA FILE DICTIONARY

Project: EPIGUIDE.MDB

File: ecge

| Variable | Description | Code | Description of code |
|---|---|---|---|
| ID | Identification number | | |
| CENTERA | ECG reading by observer A | 0 | Normal |
| | | 1 | Abnormal |
| CENTERB | ECG reading by observer B | 0 | Normal |
| | | 1 | Abnormal |

File: malqc

| Variable | Description | Code | Description of code |
|---|---|---|---|
| ID | Identification number | | |
| SLIDEFNS | Slide result by FNS | 1 | Negative |
| | | 2 | Positive |
| SLIDEQC | Slide result by Quality Control | 1 | Negative |
| | | 2 | Positive |
| DIAGQC | Species diagnosis by Quality Control | 1 | P. vivax |
| | | 2 | P. falciparum |
| | | 3 | P. malariae |
| | | 4 | Mixed |
| | | 5 | Negative |
| DIAGFNS | Species diagnosis by FNS | 1 | P. vivax |
| | | 2 | P. falciparum |
| | | 3 | P. malariae |
| | | 4 | Mixed |
| | | 5 | Negative |


File: bloodche

| Variable | Description | Code | Description of code |
|---|---|---|---|
| ID | Identification number | | |
| BB | Blood banks | 1 | Hemog |
| | | 2 | Araújo Jorge |
| | | 3 | Hemolabor |
| | | 4 | Hemotherapy Institute |
| | | 5 | Goiânia Blood Bank |
| | | 6 | Hospital das Clínicas |
| HABB | Hemagglutination in blood bank | R | Reactive |
| | | N | Negative |
| | | S | No serology |
| CFBB | Complement fixation in blood bank | R | Reactive |
| | | N | Negative |
| | | S | No serology |
| HARL | Hemagglutination in reference laboratory (antibody titres) | -1 | No serology |
| | | 0 to 8 | Negative |
| | | 16 to 1280 | Positive |
| IFRL | Immunofluorescence in reference laboratory (antibody titres) | -1 | No serology |
| | | 0 to 20 | Negative |
| | | 40 to 1280 | Positive |
| ELRL | ELISA in reference laboratory (optical density) | -1 | No serology |
| | | 0 to 0.9 | Negative |
| | | 1.0 to 3.5 | Positive |
