Software Enabled Load Balancer by Introducing the Concept of Sub Servers

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications

-

Mandatory Disclosure

Mandatory Disclosure Mandatory Disclosure As on Sep 2018 10.1 AICTE File No. F.No. North-West/1-3513762007/2018/EOA Date & Period of last dated 30.04.18 for the year 2018-2019 approval 10.2 Name of the Institution Seth Jai Parkash Mukand Lal Institute of Engineering & Technology (JMIT) Address of the Institution Radaur, District Yamuna Nagar City & Pin Code Yamuna Nagar – 135 133 State / UT Haryana Longitude & Latitude Longitude 770 09’35.90”E AND Latitude of 300 02’11.14”N Phone number with STD 01732-284195, 283700, 284799 code FAX number with STD code 01732-283800 Office hours at the 9:25 a.m. to 05:05 p.m. Institution Academic hours at the 9:30 a.m. to 05:00 p.m. Institution Email [email protected] Website www.jmit.ac.in Nearest Railway Jagadhri (YNR) (18 Kms.) Station(dist in Km) Nearest Airport (dist in Km) Chandigarh Airport (120 kms.) 10.3 Type of Institution Private -Self Financed Category (1) of the Non Minority Institution Category (2) of the Co-educational Institution 10.4 Name of the organization The Ved Parkash Mukand Lal Educational Society, Radaur running the Institution Type of the organization Society Address of the organization Radaur Registered with The Registrar of Societies, Yamuna Nagar Registration date 02.03.1987 Website of the organization www.jmit.ac.in 10.5 Name of the affiliating Kurukshetra University, Kurukshetra University Address Distt.- Kurukshetra, Haryana Website www.kuk.ac.in Latest affiliation period CG-II/18/ 16599 dated 02.07.18 10.6 Name of Principal / Director Dr. -

Download College Magazine

D.A.V. College For Girls YAMUNA NAGAR – 135 001 (HARYANA) Dear Elders, Brothers & Sisters, Corona Virus seems to be spreading at a fast pace, with more than 3,00,000 confirmed cases in the world. Practically every nation has gone into a Lock-down state. I think that this the first time that the entire human race, regardless of religion, language, colour, or nationality, have declared war against its common enemy - Corona Virus. Since time immemorial, a human being which is just a small organism, using the power of his brain, tried to control and rule over all other forms of life. And in his struggle for power, he played havoc with Nature. This epidemic has proven to us that when faced with the wrath of Nature, a micro-organism, like Corona Virus, is sufficient to paralyse, maim, and strangulate the entire human race. Let this be lesson to us, though a very expensive one, that all humanity must live with Nature and not at the expense of it. We, the disciples of Maharishi Dayanand ji, understand that we have to live as per the teachings of the Vedas, respecting all forms of life, and maintaining the balance of Nature. Let us pray: “Oh Lord, the Creator & Protector, Let the Celestial Shining region bestow peace upon us; Let the mid-space (outer space) bestow peace; Let there be peace on Earth; Let the waters be peaceful; Let the medicines & medicinal plants be peace giving; Let all Vegetation be peace giving; Let the Sages & Intellectuals be peace loving; Let all Divine Knowledge be peace giving; Let there be peace in peacefulness; Let peace & harmony be bestowed upon me and all of us.” DAV, always ready to face the National calamities, declared all its 920 Institutions closed in 23 states of the country, till 31st March 2020 in an endeavour to break the Corona Virus growth chain. -

Theinstituteof Electricaland Electronicsengineer, Inc

IEEE DELHI SECTION NEWSLETTER VOL 25 NO. 1 JUNE 2005 email : [email protected] URL : http://www.ewh.ieee.org/r10/delhi THE INSTITUTE OF ELECTRICAL AND E LECTRONICS E NGINEER, INC. INDO-PAK CON ERENCE IEEE PRESIDENT VISITS DELHI SECTION IEEE President W.C. Anderson visited India in mid January INTRODUCTION 2005 and was also at uring R 10 Meeting at Adelaide in April 2004, Delhi. His Section Chair from Delhi (Raj Kumar Vir) and D trip to India Vice-Chair of Karachi (Shahid Suleman Jan) Sections interacted and Concept of a joint conference so soon emerged after taking and the over as IEEE subject was President reviewed w.e.f. 1st Ja- in the resp- nuary 2005 shows how ective Ex- Mr. & Mrs. Anderson at Delhi Section Meeting coms of Del- much imp- hi and of the ortance he is giving to India in his scheme of things for 3 Sections of growth of IEEE. During his stay at Delhi he addressed stu- Pakistan. dent mem- Delhi Sec- Souvenir being released to mark the bers at Delhi tion took inauguration of the Conference College of initiative to Engineering hold first of such conferences. India council supported the explaining the propo-sal whole-heartedly. President IEEE also appreciated importance of the idea and IEEEmember- provided fin- ship and how ancial supp- it is relevant ort from the for the career headquar- Mr. Anderson at DCE growth of ters. Various young students. He also stressed an motivation to students public and for retaining the membership. private se- Contd. on Page 3 ctor organi- IEEE PRESIDENT MET PRESIDENT O INDIA sations spo- A view of participants in the Conference at Gulmohar nsored the During his visit to Delhi, President W.C. -

NATIONAL BOARD of ACCREDITATION (NBA) List of Accredited Programmes in Technical Institutions

NATIONAL BOARD OF ACCREDITATION (NBA) List of Accredited Programmes in Technical Institutions STATE : ANDHRA PRADESH Name of the Level of Period of Status Sl.No. Programmes Institutions Programmes Validity effective from 1. Adams Engg. College Electronics & Commu. Engg. UG 3 years July 19, 2008 Seetharamapatnam, Computer Science & Engg. UG 3 years July 19, 2008 Paloncha-507115, Electrical & Electronics Engg. UG 3 years July 19, 2008 Khammam District, AP Mechanical Engg. UG 3 years July 19, 2008 2. Al-Habeeb College of Electrical & Electronics Engg. UG 3 years May 05, 2009 Engg. & Tech., R.R. Computer Science & Engg. UG 3 years May 05, 2009 Distt., AP 3. Anil Neerukanda Inst. of Electrical & Electronics Engg. UG 3 years July 19, 2008 Tech. & Science, Computer Science & Engg. UG 3 years July 19, 2008 Visakhapatnam Electronics & Comm. Engg. UG 3 years July 19, 2008 Bio-Technology UG 3 years July 19, 2008 4. Annamacharya Institute of Mechanical Engg. (†) UG 3 Yrs April 16, 2009 Technology & Sciences, Electrical & Electronics Engg. UG 3 Yrs April 16, 2009 Thallapaka (Panchayath), Electronics & Commu. Engg. UG 3 Yrs April 16, 2009 New Boyanapalli (Post), Computer Science & Engg. UG 3 Yrs April 16, 2009 Rajampet (Mandal), Information Technology UG 3 Yrs April 16, 2009 Kadapa Distt., Andhra Pradesh – 516 126 5. Anurag Engg. College, Information Technology UG 3 years Feb. 10, 2009 kodad, Nalgonda, A.P. Electrical & Electronics Engg. UG 3 years Feb. 10, 2009 Electronics & Comm. Engg. UG 3 years Feb. 10, 2009 Computer Science & Engg. UG 3 years Feb. 10, 2009 6. Bapatla Engineering Mechanical Engineering (†) UG 3 Years March 16, 2007 College, Baptla, Gunture, Chemical Engineering UG 3 Years March 16, 2007 AP Electronics & Instru. -

DAFFODIL SOFTWARE LTD., HISAR 1. Ms

No. of Offers JMIT – 186 JMIETI- 19 205 Students Placed JMIT – 144 JMIETI- 14 158 JMIT, RADAUR Subject to correction SELECTED STUDENTS DURING 2020-21 (Starting from August 2020 to till date) DAFFODIL SOFTWARE LTD., HISAR 1. Ms. Anchal Kamboj CSE 2. Mr. Garvit Gupta CSE 3. Mr. Sahil Sharma CSE 4. Mr. Sourabh Kamboj CSE 5. Mr. Tanuj Nagpal CSE 6. Ms. Muskan Sharma IT SOFTPRODIGY SYSTEM SOLUTIONS (P) LTD., MOHALI 7. Mr. Lakshay Dutta CSE 8. Mr. Pankaj verma CSE BYJU’S, BENGALURU 9. Mr. Dhankash ECE 10. Ms. Palak Gulati ECE 11. Mr. Shubham Garg ECE 12. Mr. Rejesh Sethi ECE UNTHINKABLE SOLUTIONS LLP, GURUGRAM 13. Mr. Pankaj Verma CSE 14. Mr. Rajat Sharma CSE 15. Mr. Rijul Agrawal CSE 16. Mr. Sushant Kumar CSE 17. Mr. Naman Goyal IT 18. Mr. Sahil Choudhary IT TCS, NEW DELHI 19. Mr. Akshat Verma CSE 20. Ms. Anchal Kamboj CSE 21. Mr. Arsh Jethi CSE 22. Ms. Disha Chugh CSE 23. Mr. Garvit Gupta CSE 24. Ms. Muskan Sharma IT 25. Mr. Naman IT 26. Mr. Sahil IT 27. Mr. Bharat Kaushik CSE 28. Ms. Karmika Daryal CSE 29. Mr. Aakash Bhardwaj CSE 30. Mr. Akshat Verma CSE 31. Mr. Divyansh Kumar CSE 32. Mr. Himanshu Gupta CSE 33. Mr. Jai Bhandula CSE 34. Mr. Jai Kapoor CSE 35. Mr. Lakshay Dutta CSE 36. Mr. Lakshay Nandal CSE 37. Ms. Megha Goel CSE 38. Ms. Parneet Kaur CSE 39. Mr. Rohit Sharma CSE 40. Ms. Saloni Gupta CSE 41. Mr. Shobit Singla CSE 42. Ms. Shreya Sharma CSE 43. -

Seth Jai Parkash Mukand Lal Institute of Engineering & Technology Chhota Bans, Radaur, Yamunanagar (Haryana)

JMIT INFORMATION BROCHURE 2016-2017 SETH JAI PARKASH MUKAND LAL INSTITUTE OF ENGINEERING & TECHNOLOGY CHHOTA BANS, RADAUR, YAMUNANAGAR (HARYANA) SETH JAI PARKASH MUKAND LAL INSTITUTIONS OF KNOWLEDGE & SERVICE (www.mukand.org) FOUNDED : 1946 HARYANA UTTAR PRADESH Seth Mukand Lal Seth Jai Parkash (1892-1952) (1912-1971) 24,000+ STUDENTS 27 12,000+ INSTITUTIONS GIRL STUDENTS 200+ 12,000+ 36,000+ ACRES OF UNITS OF BLOOD PLACEMENTS DONATED LAND 2,800 76 COURSES HOSTELS' CAPACITY 1,601 Employees COMMITMENT TO EDUCATION, HEALTH CARE, ENVIRONMENT, SOCIAL UPLIFTMENT 1 JMIT - A Profile Seth Jai Parkash Mukand Lal Institute of Engineering & Technology (JMIT) is located in rural, pollution free and green habitat of Radaur at a distance of 18 km from Yamuna Nagar and 28 km from Pipli, Kurukshetra. The Institute was established in the year 1995 by The Ved Parkash Mukand Lal Educational Society (Regd. in the year 1987). Sh. Ashok Kumar, the Chairman of the Society and the Governing Body, a leading industrialist and philanthropist of Northern India manages 27 Institutions of Knowledge & Service spread over the states of Haryana and Uttar Pradesh. A Board of Governors consisting of persons of eminence from the industry and academia oversee the functioning of the Institute. The Institute has been approved by All India Council for Mission Technical Education (AICTE) and is affiliated to Kurukshetra University, "To Produce World Class Engineers, Kurukshetra. The Institute runs professional academic programmes of Managers and Technologists with B.Tech., M.Tech, MCA & MBA and there are over 3000 students studying Training of Head, Heart and Hands on the campus. -

Finance" -Subject=Finance"

Timestamp Name Phone Number 8/14/2014 11:22:16 Aarti 8901254778 8/12/2014 13:03:03 Abhilasha Vyas 7838738085 8/8/2014 6:20:21 Abhishek Chhilwar 9811682543 8/8/2014 1:39:22 Aditya dayal 9971915211 8/7/2014 18:02:52 Akash Gautam 9717063842 8/7/2014 20:55:30Finance Amit Kumar 7503659299 8/9/2014 10:51:20 Amit Mehta 9050097128 8/7/2014 22:23:03 Anchal Arora 7065299547 8/11/2014 20:03:46 Ankit Jain 9650484349 8/7/2014 17:51:35 Ankit Kalra 8860428961 8/12/2014 20:06:37 Ankit Kumar Khalotra 9971203246 8/7/2014 21:08:27 Anoushka Sharma 9711369684 8/8/2014 6:02:22Finance Arpit Garg 9971969008 8/7/2014 16:01:37Chaudhary Chandni Gupta Pradeep 8373941490 8/12/2014 8:13:28 Kumar 9711766640 8/7/2014 23:48:48 Chetan Bhandari 7838255646 8/7/2014 6:54:58 Chhavi 9654667829 8/14/2014 21:57:01 Devanshu Sahu 9873216788 8/12/2014 0:01:36 Divya Sharma 9711881651 8/9/2014 11:11:23 Divyanshu Yadav 7838494075 8/8/2014 7:18:26Finance Dushyant 9999366786 8/9/2014 1:59:44 Gaurang Manchanda 9650200403 8/7/2014 18:09:45 Geet Sehgal 7838338058 8/8/2014 12:35:49 Guneet Singh 7589175245 8/9/2014 11:40:23 Harsh Agarwal 9654052126 8/7/2014 19:17:00 Harsh Banger 9466948600 8/10/2014 19:15:38 Harshit Sharma 9953247202 8/13/2014 21:13:56 Harshita Jain 9911957854 8/7/2014 19:17:38 Indrajit Shaw 9474543764 8/7/2014 18:52:48 Ishant Dhawale 9868870336 8/9/2014 11:12:24 Jyoti Singla 8054157033 8/7/2014 23:40:08 Jyotsana Singh Bharati 9999496370 8/7/2014 14:14:33 Kapil Sharma 9650319008 8/7/2014 23:02:35 Finance Kinshuk Jaiswal 8826634589 8/7/2014 21:32:58 Malabika Mishra 9971494368 -

Cyber Security & Forensics

niversity School of Information, C'ommunication & Technology Guru Gobind Singh Indraprastha University Sector-16-C, Dwarka, New Delhi-78 Website: www.ipu.ac.in Dated:03/03/2021 With relerence to the Call for participation in the One Weck Online ACM@USICT Chapter, GGSIP University and NITTTR Chandigarh sponsored STTP Progranme on "Cyber Security & Forensics" to be held from 08th March 12" March 2021. please find attached the list of participants shortlisted for the STTP and Poster(tentative) of the Inauguration Ceremony. The STTP would be hosted on the Google Mect platform and the link would be shared with participants on email id. For any query, participants may email at [email protected]/ [email protected]. a (Dr Anuradha Chug) (Dr. Rahul Johari) Course Co-coordinator Course Coordinator Copy tO Dean, USICT for kind information 2. Registered Participants through e-mail 3. Faculy members through e-mail 4. Incharge, UFTS with a request to upload on university web-site 5. Notice Board One Week Online NITTTR Chandigarh and ACM@USICT Chapter, GGSIP University sponsored STTP Programme on Cyber Security & Forensics MARCH 08, 2021 (MONDAY) INAUGURAL CEREMONY SCHEDULE 10:00 A.M. ONWARDS 10:00 AM Openingof Inauguration by Dr AnuradhaChug, GGSIP University 10:03 AM KulGeet, GGSIP University, NITTTR Chandigarh 10:05 AM Welcome Address by Prof. Pravin Chandra, Dean USICT 10:10 AM Orientation about STTP by Prof Maitreyee Dutta. Dean(R&C), NITTTR Chandigarh 10:15AM AddressbyChief Guest:CPCyberCell, Delhi Police*. 10:25AM AddressbyGuest of Honour:TarunPandey, Scientist D, Cyber Security, R&D division Ministry of Electronics & IT. -

Download Special Session "Emerging Trends In

Call for the Papers for the Special Session “International Conference on Information Technology and Management for the Sustainable Development (ICITMSD-2019)” Uttaranchal Institute of Management, Uttaranchal University, Dehradun, Uttarakhand, India http://icitmsd.uim.lwtindia.com/ Conference Dates: August 09-10, 2019 Special Session Theme: Emerging Trends in Data Science Technologies Session Chairperson: Dr. Shakti Kundu Associate Professor, Teerthanker Mahaveer University, Moradabad, UP (INDIA). Aim of this session is to highlight the importance of emerging trends and challenges in the field of Data Science Technologies. These areas are one of the active thrust areas for the research and development in today’s scenario. The objective of session intends to cultivate and promote an instructive and productive interchange of ideas amongst leading academic scientists, developers, researchers and research scholars to exchange and share their experiences and research findings to advance the knowledge of latest trends and technologies in an area of Data Science. Areas of Coverage (sub-themes)- Suitable topics include, but are not limited, to the following: • Algorithms and Scientific Computing • Information Systems • Big Data Analytics • Image Processing and Computer Vision • Data Science • Intelligent Systems • Database Theory and Data Visualization • Multi Agent Systems and Software Agents • Database Management • Social Semantic Web and Network • Embedded Systems Software and Analysis Applications • Computer Organization and Architecture • Information Retrieval • Cyber and network Security • Internet of Things(IoT) PUBLICATION All Selected and presented papers will be Double Peer Blind Reviewed and will be published in SCOPUS/WoS-ESCI/UGC Indexed Journals, and Extended version of good Quality papers in Special Issues of Benthom Science, IGI Global, Inderscience. -

Seth Jai Parkash Mukand Lal Institute Of

JMIT INFORMATION BROCHURE 2015-2016 SETH JAI PARKASH MUKAND LAL INSTITUTE OF ENGINEERING & TECHNOLOGY CHHOTA BANS, RADAUR, YAMUNANAGAR (HARYANA) SETH JAI PARKASH MUKAND LAL INSTITUTIONS OF KNOWLEDGE & SERVICE (www.mukand.org) FOUNDED : 1946 HARYANA UTTAR PRADESH Seth Mukand Lal Seth Jai Parkash (1892-1952) (1912-1971) 24,000+ 27 STUDENTS 12,000+ INSTITUTIONS GIRL STUDENTS 200+ 12,000+ 36,000+ ACRES OF UNITS OF BLOOD LAND PLACEMENTS DONATED 76 2,800 COURSES HOSTELS' 1,601 CAPACITY Employees COMMITMENT TO EDUCATION, HEALTH CARE, ENVIRONMENT, SOCIAL UPLIFTMENT JMIT The function of education is to teach one to think intensively and to think critically. Intelligence plus character- that is the goal of true education. Martin Luther King, Jr. 02 14 THE HERITAGE DEPARTMENTS • Applied Sciences • Applied Mathematics 03 & Humanities SOCIAL COMMITMENTS • Chemical Engineering • Computer Science Engineering 04 • Electronics & JMIT - A Profile Communication Engineering • Electrical Engineering 05 • Information Technology BOARD OF GOVERNORS • Mechanical Engineering • Master of Business Administration (MBA) 06 • Computer Science & COURSES OFFERED Application (MCA) 07 24 FEES & FUNDS ANTI-RAGGING REGULATION 08 26 KEY HIGHLIGHTS TRAINING & PLACEMENTS 12 30 FACILITIES & SERVICES SETH JAI PARKASH MUKAND LAL INSTITUTIONS OF 13 KNOWLEDGE & LAURELS SERVICES CONTENTS 1 The Heritage THE GENESIS AND JOURNEY OVER 60 YEARS Seth Jai Parkash Mukand Lal Institutions of Knowledge In the third generation of the family Shri Swatanter and Service is a chain of Twenty Seven institutions Kumar, Shri Ashok Kumar and Shri Vijay Kumar carried spread over Haryana, U.P and Punjab and owe their on the tradition of their ancestors and opened a number existence to the munificent and philanthropic spirit of of institutions. -

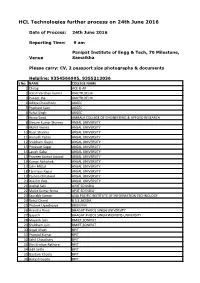

HCL Technologies Further Process on 24Th June 2016

HCL Technologies further process on 24th June 2016 Date of Process: 24th June 2016 Reporting Time: 9 am Panipat Institute of Engg & Tech, 70 Milestone, Venue Samalkha Please carry: CV, 2 passport size photographs & documents Helpline: 9354566995, 9355213036 S No. NAME COLLEGE NAME 1 Chirag ACE & AR 2 Harsh Vardhan Verma AIACTR,DELHI 3 Puneet Jha AIACTR,DELHI 4 Aditya Chaudhary AKGEC 5 Prashant Saini AKGEC 6 Rahul Singh AKGEC 7 Arzoo Sood AMBALA COLLEGE OF ENGINEERING & APPLIED RESEARCH 8 Shivam Kumar Sharma ANSAL UNIVERSITY 9 Akshit Verma ANSAL UNIVERSITY 10 Rajat Sharma ANSAL UNIVERSITY 11 Anirudh Yadav ANSAL UNIVERSITY 12 Shubham Gupta ANSAL UNIVERSITY 13 Priyavart Sagar ANSAL UNIVERSITY 14 Lavish Gaba ANSAL UNIVERSITY 15 Praveen Kumar Jaiswal ANSAL UNIVERSITY 16 Kumar Abhishek ANSAL UNIVERSITY 17 Jatin Mittal ANSAL UNIVERSITY 18 Tanmaye Kapur ANSAL UNIVERSITY 19 Yashna Chhatwal ANSAL UNIVERSITY 20 Rakshit Vats ANSAL UNIVERSITY 21 Anchal Sahi APIIT SD INDIA 22 Mohit Kumar Sinha APIIT SD INDIA 23 Saurabh Suman ASIA PACIFIC INSTITUTE OF INFORMATION TECHNOLOGY 24 Rahul Chand B.S.S.,NOIDA 25 Prateek Upadhyaya BBDNITM 26 Aransha Rana BHAGAT PHOOL SINGH UNIVERSITY 27 Gayatri BHAGAT PHOOL SINGH WOMENS UNIVERSITY 28 Mayank Jain BMIET,SONIPAT 29 Shubham Jain BMIET,SONIPAT 30 Anjali Khatri BPIT 31 Pramod Kumar BPIT 32 Sahil Chaudhary BPIT 33 Shri Krishan Rathore BPIT 34 Udit Sethi BPIT 35 Gautam Khosla BPIT 36 Aakash Gupta BPIT 37 Nikhil Sharma BPIT 38 Shubham Sharma BPIT 39 Antara Dutta BPIT 40 Vishal Kumar BPIT 41 Pooja Pandey BPS WOMEN UNIVERSITY 42 Bhavya Gera BPSMV,SONIPAT 43 SWhuchi Aggarwal CBPGEC 44 Shivam Sinha CBPGEC 45 Shubham Dimri CBPGEC 46 Nitin Jugran COER 47 Sachin Kumar Gupta COER,ROORKEE 48 Shehrazdeep Thind DAVIET 49 Shivani Sharma DAVIET 50 Arpit Sharma DIT 51 Kamaljeet DRONACHARYA COLLEGE OF ENGINEERING 52 Gurpreet Kaur DRONACHARYA COLLEGE OF ENGINEERING 53 Suhail Bajaal DTC,BAHADURGARH 54 Deepika Kandhoul DVIET 55 Palak Sindwani DVIET 56 Govind 
Narayan G.B. -

Department of Technical Education, Haryana Admission Brochure

Department of Technical Education, Haryana Admission Brochure for B.E./B.TECH./ B.ARCH PROGRAMMES for the session 2015-16 in The University Departments, Govt. / Govt. Aided / Private Institutions located in the State of Haryana Issued by Haryana State Technical Education Society Bay Nos. 7-12, S e c t o r -4, Panchkula Website: www.techeduhry.gov.in, www.hstes.org or www.hstes.in Toll free No. 1800 420 2026 1 VISION “To reorient Technical Education which shall be relevant to the real world-of-work, attractive to the students, responsive to the industry and connected to the community at large.” 2 Chief Minister, Haryana, Chandigarh Message I am happy to know that Haryana State Technical Education Society is bringing out the brochure to facilitate the admission in various Technical/ Non Technical courses which are in the ambit of Technical Education Department, Haryana. Technical education plays a vital role in socio-economic development of the country in the present age of knowledge based economy by upgrading skill and knowledge. Technical education is one of the major ingredients to ensure speedy upliftment of the society. The technical education institutes in the State are having well qualified teaching faculty & supporting technical staff and are equipped with latest machinery & equipments. Efforts are being made for more cleanliness & greenery in all the campuses of technical institutions. To promote “Beti Bachao Beti Padhao” scheme, 25% seats are reserved for girl candidates in all technical institutions of the State and no tuition fee is charged from girls admitted in Govt./ Govt. Aided Polytechnics. Efforts are being made to provide free books & scholarship to the outstanding girl students also of Govt./ Govt.