Impact of Visual Design Elements and Principles in Human Electroencephalogram Brain Activity Assessed with Spectral Methods and Convolutional Neural Networks

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Kazaa Ruling Oz Court Forces Music Biz Into Action >P.12 the Music Upfront Labels Tighten Links with Consumer Brands >P.32

KANYE'S BUSH BASHING IGNITES HIP -HOP PASSIONS >os #BXNCTCC SCH 3-DIGIT 90', IIIIIIII111I11I1II1III111I11III1I11III I II111II #BL2408043# FPRO6 A04 B009: MONTY GR.ETIL1 3740 ELM A\.E # A TONG BEACH CA 90807 -340 SEPT FOR MORE THAN 110 YEARS 17 2005 DOUBLE WHAMMY OIL PRICES, KATRINA ADD TO INDUSTRY WOES >P.5 THE KAZAA RULING OZ COURT FORCES MUSIC BIZ INTO ACTION >P.12 THE MUSIC UPFRONT LABELS TIGHTEN LINKS WITH CONSUMER BRANDS >P.32 U NT'S 55.99US E8.99CAP 74470 6.99, CAN $8.39, UK 8.95, JAPAN Y2,500 www.billboard.com www.billboard.biz www.americanradiohistory.com music.yahoo.com LET EHERE BE INO , 1 !r -on 11 r Over a million songs. 5 bucks a month. THIS IS HUGE. M MUSICrMUNLIMITED O N YIAHOO ! www.americanradiohistory.com SAVE THE BATE: ems Billb.aral SEPTEMBER 17, 2005 DAncE music summIT VOLUME 117, NO. 38 Union Sq.Jare Ballroom, NYC bil boardeverts.com ON THE CHARTS KOMI ALBUMS PAGE ARTIST / TITLE UPFRONT KANYE WEST / 5 News THE BILLBOARD 200 46 LATE REGISTRATION NICKEL CREEK / 15 Legal Matters TOP BLUEGRASS 56 WHV SHOULD THE HRE DIE? 16 Global YO -YO MA/THE SILK ROAD ENSEMBLE / 70P CLASSICAL '= 61 `r '" SILK ROAD JOURNEYS.: BEYOND THE HORIZON 18 The Indies TOP CLASSICAL CROSSOVER r 61 0 / 19 Retail Track BROOKS & DUNN / 20 Making The Brand TOP COUNTRY 56 HILLBILLY DELUXE GORILIAZ / 22 On The Road, Boxscore TOP ELECTRONIC F 59 DAYS DEMON 24 Touring KANYE WEST / TOP INTERNET 62 LATE REGISTRATION 25 Digital Entertainment TOP MICHAEL NUBLE / JAZZ 61 IT'S TIME 26 Q&A: Joe DiMuro HERBIE K / TOP CONTEMPORARY JAZZ 61 POSS IOILITIES COVER STORY SHAKIRA / TOP LATIN 58 FUACION ORAL VOL. -

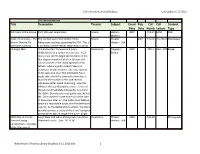

Web Historic Theatres Library Database 6-12-2016.Xlsx 1 STG Historical Library Database Last Update 6-12-2016

STG Historical Library Database Last Update 6-12-2016 STG Library Database Title Description Theater Subject Event Pub Call Call Content Date Date Numb Letters Type 100 Years at the Moore DVD 100 year celebration Moore History - 2007 792.09er MOO DVD Modern 100th Anniversary - The The contest was in the Seattle Times Moore Theater 2007 792.09 100 MOO Newspaper Moore Theatre My Newspaper and was sponsored by STG. This is History - USA Memories Contest a collection of the entries returned to STG on 12 Angry Men "12 Angry Men" focuses on a jury's Paramount Tragedy / 2007 792.1 AAA - FZZ Binder deliberations in a capital murder case. A 12- Drama man jury is sent to begin deliberations in the first-degree murder trial of an 18-year-old Latino accused in the stabbing death of his father - where a guilty verdict means an automatic death sentence. The case appears to be open-and-shut: The defendant has a weak alibi; a knife he claimed to have lost is found at the murder scene; and several witnesses either heard screaming - saw the killing or the boy fleeing the scene. Eleven of the jurors immediately vote guilty; only Juror No. 8 (Mr. Davis) casts a not guilty vote. At first Mr. Davis' bases his vote more so for the sake of discussion after all - the jurors must believe beyond a reasonable doubt that the defendant is guilty. As the deliberations unfold - the story quickly becomes a study of the jurors' complex personalities (which range from wise - bright 1928 Shot of Seattle Large black and white photograph printed on Paramount Theater 1928 792.09 Photograph Theatre During stretched canvas. -

James Blunt Back to Bedlam Full Album Zip

James Blunt Back To Bedlam Full Album Zip 1 / 4 James Blunt Back To Bedlam Full Album Zip 2 / 4 3 / 4 Get the complete list of James Blunt - Back To Bedlam mp3 songs free online. ... Blunt Back To Bedlam & Play Free Online Music on Hungama - Stream full .... InSight -- Totem Acoustic s Torrent Technology (August 2014).James Blunt-Back To Bedlam (Live In Paris) full album zip. Marco Brasil The .... James Blunt Back To Bedlam Zip mp3 download mp3mad, James Blunt Back To Bedlam Zip pagalworld. Itʼs also possible that your computer has been .... Discover releases, reviews, credits, songs, and more about James Blunt - Back To Bedlam at Discogs. Complete your James Blunt collection.. Aug 12, 2020 - james blunt back to bedlam, james blunt back to bedlam songs, james blunt back to bedlam vinyl, james blunt back to bedlam album download, .... James Blunt Back To Bedlam Full Album Zip james blunt back to bedlam, james blunt back to bedlam songs, james blunt back to bedlam vinyl, .... James Blunt Back To Bedlam Full Album Zip. james blunt back to bedlam, james blunt back to bedlam songs, james blunt back to bedlam vinyl, .... Back To Bedlam · High · You're Beautiful · Wisemen · Goodbye My Lover · Tears And Rain · Out Of My Mind · So Long Jimmy · Billy.. Soulful British crooner James Blunt's wistful debut infuses the listener - in ... Tracklist: Back to bedlam james blunt rare. James Blunt – The Truth – 3:43 (974 kbps .... James Blunt – Back To Bedlam (Deluxe Version) Zip Album Download. ... #MTVMAMA 2021 Full list of nominees mp3 download zamusic. Back to Bedlam (Deluxe) | James Blunt to stream in hi-fi, or to download in True CD Quality on Qobuz.com. -

Oh Pretty Woman Was a Hit Again in 1990 When It Provided the Inspiration for the Title of the Film Pretty Woman, Starring Julia Roberts and Richard Gere

1 УДК 811.11’24(075.8) ББК 81.2Англ-923 З 18 Печатается по решению редакционного издательского совета Татарского государственного гуманитарно-педагогического университета Составители: Закирова Л.Р., к.ф.н., ст. преподаватель кафедры английского языка и методики его преподавания ТГГПУ. Латыпов Н.Р., к.ф.н., ст. преподаватель кафедры английского языка и методики его преподавания ТГГПУ. Научный редактор: Сабирова Д.Р., д.п.н., доцент, зав. кафедрой английского языка и методики его преподавания ТГГПУ. Рецензенты: Свирина Л.О., к.п.н.,доцент, зав. кафедрой иностранных языков факультетов гуманитарного цикла ТГГПУ. Акимова О.В., к.ф.н., доцент кафедры контрастивной лингвистики и лингводидактики ТГГПУ. Закирова Л.Р., Латыпов Н.Р. English with favourite songs: учебно-методическоепособие. ‒ Казань: ТГГПУ, 2010. ‒ 192 с. ISBN 978-5-87730-487-1 Данное учебно-методическое пособие создано на основе обобщения многолетнего опыта преподавания английского языка. Основой построения пособия служат популярные английские, американские песни разных жанров. Предлагаемый авторами-составителями материал позволяет разнообразить использование песенного ресурса в учебном процессе с учетом творческого подхода, а также может стать важным средством оптимизации структуры занятия, которое обеспечивает активность и работоспособность студентов, повышает их мотивацию. Предложенные задания призваны решать не только учебные задачи, но и расширять кругозор студентов и вносить в обучение элементы занимательности. Материал пособия можно использовать как дополнение к основному учебному курсу для занятий в вузе, на дополнительных курсах английского языка или для самостоятельной работы всех тех, кто изучает английский язык и интересуется современной музыкой. Учебно-методическое пособие содержит разработанные авторами методические рекомендации, разные по степени сложности тексты для аудирования по музыкальной проблематике (от elementary до upper-intermediate), занимательные тесты. -

Remembering John F. Kennedy (Continued from Page 1) the North End Athletic Association Held of 6 to 8 Year Olds

VOL. 117 - NO. 47 BOSTON, MASSACHUSETTS, NOVEMBER 22, 2013 $.30 A COPY Buon Natale Remembering NORTH END ATHLETIC ASSOCIATION presents the 43rd Annual JOHN F. KENNEDY 2013 NORTH END John Fitzgerald Kennedy (May 29, 1917– November 22, CHRISTMAS PARADE 1963), commonly known as SUNDAY, DECEMBER 8, 2013 - 1:00 P.M. “Jack” or by his initials JFK, was the 35th President of the United States, serving from January 1961 until he was assassinated in November 1963. Kennedy represented Mas- sachusetts’ 11th congres- sional district in the U.S. House of Representatives from 1947 to 1953 as a Dem- Merry Christmas ocrat. Thereafter, he served in the U.S. Senate from 1953 SEE SANTA CLAUS ARRIVE AT NORTH END PARK until 1960. Kennedy defeated BY HELICOPTER AT 1:00 P.M. In case of bad weather, Parade will be held the next Sunday, December 15th Vice President and Republi- IN ASSOCIATION WITH The Nazzaro Center • North End Against Drugs • Mayor’s Offi ce of Arts, Tourism and Special Events can candidate Richard Nixon in the 1960 U.S. presiden- tial election. At age 43, he Dedicated in Memory was the youngest to have of been elected President. Events during his presi- JAMES “Jimmy” PALLOTTA dency included the Bay of Pigs Invasion, the Cuban Missile Crisis, the Space Race — by initiating Project Apollo (which would culmi- nate in the moon landing), News Briefs the building of the Berlin Wall, the African-American Caesar Donnaruma congratulating President John F. by Sal Giarratani Civil Rights Movement, and Kennedy. (Continued on Page 3) He Didn’t See it Coming th A school bus driver in Scotland claimed to be a 10 Annual Mayor’s Holiday Spectacular psychic refusing to pick up kids at a bus stop because he had a “premonition” that something Offers Half-Price Tickets to Over 120 Boston-Area Shows bad would happen. -

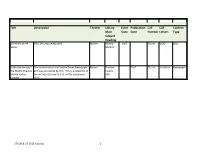

STGLIB-8-23-2013 Web.Xlsx 1

Title Description Theater Library Event Publication Call Call Content Main Date Date Number Letters Type Subject Heading 100 Years at the DVD 100 year celebration Moore History - 2007 792.09 MOO DVD Moore Modern 100th Anniversary - The contest was in the Seattle Times Newspaper Moore Theater 2007 792.09 100 MOO Newspaper The Moore Theatre and was sponsored by STG. This is a collection of History - My Memories the entries returned to STG on the newspaper USA Contest form. STGLIB-8-23-2013 web.xlsx 1 Title Description Theater Library Event Publication Call Call Content Main Date Date Number Letters Type Subject Heading 12 Angry Men "12 Angry Men" focuses on a jury's deliberations Paramount Tragedy / 2007 792.1 AAA - ZZZ Binder in a capital murder case. A 12-man jury is sent to Drama begin deliberations in the first-degree murder trial of an 18-year-old Latino accused in the stabbing death of his father - where a guilty verdict means an automatic death sentence. The case appears to be open-and-shut: The defendant has a weak alibi; a knife he claimed to have lost is found at the murder scene; and several witnesses either heard screaming - saw the killing or the boy fleeing the scene. Eleven of the jurors immediately vote guilty; only Juror No. 8 (Mr. Davis) casts a not guilty vote. At first Mr. Davis' bases his vote more so for the sake of discussion after all - the jurors must believe beyond a reasonable doubt that the defendant is guilty. As the deliberations unfold - the story quickly becomes a study of the jurors' complex personalities (which range from wise - bright and empathetic to arrogant - prejudiced and merciless) - preconceptions - backgrounds and interactions.