Overloading Is NP-Complete: A Tutorial Dedicated to Dexter Kozen

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

CSE 307: Principles of Programming Languages Classes and Inheritance

CSE 307: Principles of Programming Languages. Classes and Inheritance. R. Sekar. Topics: 1. OOP Introduction; 2. Type & Subtype; 3. Inheritance; 4. Overloading and Overriding. Section 1: OOP Introduction. OOP (Object Oriented Programming): So far the languages that we encountered treat data and computation separately. In OOP, the data and computation are combined into an "object". Benefits of OOP: It is more convenient: it collects related information together, rather than distributing it. Example: the C++ iostream class collects all I/O related operations together into one central place. Contrast with the C I/O library, which consists of many distinct functions such as getchar, printf, scanf, sscanf, etc. It centralizes and regulates access to data: if there is an error that corrupts object data, we need to look for the error only within its class. Contrast with C programs, where access/modification code is distributed throughout the program. Benefits of OOP (continued): It promotes reuse, first by separating interface from implementation: we can replace the implementation of an object without changing client code. Contrast with C, where the implementation of a data structure such as a linked list is integrated into the client code. It also promotes reuse by permitting extension of new objects via inheritance: inheritance allows a new class to reuse the features of an existing class.

Polymorphism

Polymorphism: a closer look at types (Chap. 8). Polymorphism comes from Greek, meaning 'many forms'. In programming: Def: A function or operator is polymorphic if it has at least two possible types. i) Overloading. Def: An overloaded function name or operator is one that has at least two definitions, all of different types. Example: In Java the '+' operator is overloaded. String s = "abc" + "def"; uses +: String * String → String, and int i = 3 + 5; uses +: int * int → int. Example: Java allows user-defined polymorphism with overloaded function names. boolean f (char a, char b) { return a == b; } has type f : char * char → boolean, and boolean f (int a, int b) { return a == b; } has type f : int * int → boolean. Note: ML does not allow function overloading. ii) Parameter Coercion. Def: An implicit type conversion is called a coercion. Coercions usually exploit the type-subtype relationship because a widening type conversion from subtype to supertype is always deemed safe, so a compiler can insert these automatically (type coercions). Example: type coercion in Java. double x; x = 2; the value 2 is coerced from int to double by the compiler. Parameter coercion is an implicit type conversion on parameters. Parameter coercion makes writing programs easier: one function can be applied to many subtypes. Example: Java. void f (double a) { ... } with int ⊂ double, float ⊂ double, short ⊂ double, byte ⊂ double, char ⊂ double: all legal types that can be passed to function 'f'. Note: ML does not perform type coercion (ML has no notion of subtype). iii) Parametric Polymorphism. Def: A function exhibits parametric polymorphism if it has a type that contains one or more type variables.
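The definition of parametric polymorphism quoted above can be made concrete with a small sketch. The excerpt's own examples are in Java and ML; the version below uses C++ templates instead, which only approximate parametric polymorphism because each use is instantiated for a concrete type. The names identity and swapPair are hypothetical and chosen for illustration only.

```cpp
#include <utility>

// identity has the type T -> T for every type T.
// T plays the role of the type variable in the definition.
template <typename T>
T identity(T value) {
    return value;
}

// swapPair has the type (A, B) -> (B, A), with two type variables.
template <typename A, typename B>
std::pair<B, A> swapPair(const std::pair<A, B>& p) {
    return std::pair<B, A>(p.second, p.first);
}
```

A call such as identity(3) instantiates T as int, while identity('x') instantiates it as char; a single definition serves both, unlike the overloaded f in the excerpt, which needs one definition per type.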

C/C++ Program Design CS205 Week 10

C/C++ Program Design, CS205, Week 10. Prof. Shiqi Yu (于仕琪), Prof. Feng (郑锋). Operators for cv::Mat. Function overloading: Mat add(Mat A, Mat B); Mat add(Mat A, float b); Mat add(float a, Mat B); Mat mul(Mat A, Mat B); Mat mul(Mat A, float b); Mat mul(float a, Mat B); ... More convenient to code as follows: Mat A, B; float a, b; //… Mat C = A + B; Mat D = A * B; Mat E = a * A; Operators for cv::Mat: #include <iostream> #include <opencv2/opencv.hpp> using namespace std; int main() { float a[6]={1.0f, 1.0f, 1.0f, 2.0f, 2.0f, 2.0f}; float b[6]={1.0f, 2.0f, 3.0f, 4.0f, 5.0f, 6.0f}; cv::Mat A(2, 3, CV_32FC1, a); cv::Mat B(3, 2, CV_32FC1, b); cv::Mat C = A * B; cout << "Matrix C = " << endl << C << endl; return 0; } The slides are based on the book <Stephen Prata, C++ Primer Plus, 6th Edition, Addison-Wesley Professional, 2011>. Operator Overloading. Overloading: Function overloading lets you use multiple functions sharing the same name. Relationship to others: default arguments, function templates. The * operator (an operator overloading example): applied to an address, it yields the value stored at that address; applied to two numbers, it yields the product of the values. Operator Function: Keyword: operator for C++. To overload an operator, you use a special function. The function header has the form: operator+() overloads the + operator; operator*() overloads the * operator; operator[]() overloads the [] operator. The compiler, recognizing the operands as belonging to the class, replaces the ...

CHAPTER-3 Function Overloading SHORT ANSWER QUESTIONS 1

CHAPTER-3 Function Overloading. SHORT ANSWER QUESTIONS. 1. How does the compiler interpret more than one definition having the same name? What steps does it follow to distinguish these? Ans. The compiler follows these steps to interpret more than one definition having the same name: (i) if the signature of a subsequent function matches the previous function's, then the second is treated as a re-declaration of the first. (ii) if the signatures of the two functions match exactly but the return types differ, the second declaration is treated as an erroneous re-declaration of the first and is flagged at compile time as an error. (iii) if the signatures of the two functions differ in either the number or type of their arguments, the two functions are considered to be overloaded. 2. Discuss how the best match is found when a call to an overloaded function is encountered. Give example(s) to support your answer. Ans. In order to find the best possible match, the compiler follows these steps: 1. A search for an exact match is performed. If an exact match is found, the function is invoked. For example, ... 2. If an exact match is not found, a match through promotion is searched for. Promotion means conversion of integer types char, short, enumeration and int into int or unsigned int and conversion of float into double. 3. If the first two steps fail, then a match through application of C++ standard conversion rules is searched for. 4. If all the above mentioned steps fail, a match through application of user-defined conversions and built-in conversion is searched for.
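The four matching steps in the answer above can be illustrated with a minimal C++ sketch. The functions kind and tag and the type Text are hypothetical names introduced only for this example, and the step list itself is a simplified textbook summary rather than the full overload-resolution rules of the C++ standard.

```cpp
#include <string>

// Hypothetical type with a converting constructor,
// which acts as a user-defined conversion from const char*.
struct Text {
    Text(const char* s) : value(s) {}
    std::string value;
};

// First overload set: exact match and promotion.
std::string kind(int)    { return "int"; }
std::string kind(double) { return "double"; }

// Second overload set: standard and user-defined conversions.
std::string tag(double)      { return "double"; }
std::string tag(const Text&) { return "Text"; }

// kind(42)   selects kind(int):    exact match.
// kind('a')  selects kind(int):    char -> int is an integral promotion.
// kind(3.5f) selects kind(double): float -> double is a promotion.
// tag(5)     selects tag(double):  int -> double is a standard conversion.
// tag("hi")  selects tag(const Text&): only a user-defined conversion applies.
```

Two overload sets are used on purpose: a call such as kind(7L) would be ambiguous, because long converts to both int and double by standard conversions of equal rank.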

Comparative Studies of 10 Programming Languages Within 10 Diverse Criteria Revision 1.0

Comparative Studies of 10 Programming Languages within 10 Diverse Criteria, Revision 1.0. Rana Naim, Mohammad Fahim Nizam, Sheetal Hanamasagar, Jalal Noureddine, Marinela Miladinova. Concordia University, Montreal, Quebec, Canada. Abstract: This is a survey on the programming languages: C++, JavaScript, AspectJ, C#, Haskell, Java, PHP, Scala, Scheme, and BPEL. Our survey work involves a comparative study of these ten programming languages with respect to the following criteria: secure programming practices, web application development, web service composition, OOP-based abstractions, reflection, aspect orientation, functional programming, declarative programming, batch scripting, and UI prototyping. We study these languages in the context of the above mentioned criteria and the level of support they provide for each one of them. Keywords: programming languages, programming paradigms, language features, language design and implementation. 1 Introduction: Choosing the best language that would satisfy all requirements for the given problem domain can be a difficult task. Some languages are better suited for specific applications than others. In order to select the proper one for the specific problem domain, one has to know what features it provides to support the requirements. Different languages support different paradigms, provide different abstractions, and have different levels of expressive power. Some are better suited to express algorithms and others are targeting the non-technical users. The question is then what is the best tool for a particular problem.

C++ Programming Fundamentals Elvis C

C++ Programming Fundamentals. Elvis C. Foster. Lecture 04: Functions. In your introduction to computer science, the importance of algorithms was no doubt emphasized. Recall that an important component of an algorithm is its subroutines (also called subprograms). Subroutines give the software developer the power and flexibility of breaking down a complex problem into simpler manageable, understandable components. In C++, subroutines are implemented as functions. This lecture discusses C++ functions. The lecture proceeds under the following subheadings: Function Definition; Calling the Function; Some Special Functions; Command Line Arguments to main ( ); Recursion; Function Overloading; Default Function Arguments; Summary and Concluding Remarks. Copyright © 2000 – 2017 by Elvis C. Foster. All rights reserved. No part of this document may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, or otherwise, without prior written permission of the author. 4.1 Function Definition: A function is a segment of the program that performs a specific set of related activities. It may or may not return a value to the calling statement. The syntax for function definition is provided in figure 4-1. Figure 4-1: Syntax for Function Definition. FunctionDef ::= <ReturnType> <FunctionName> ([<ParameterDef>] [*; <ParameterDef>*]) { <FunctionBody> } ParameterDef ::= <Type> <Parameter> [* , <Parameter> *] FunctionBody ::= [<VariableDeclaration> [*<VariableDeclaration> *] ] <Statement> [* ; <Statement> *] Essentially, the C++ function must have a return type, a name, and a set of parameters. The function body consists of any combination of variable declarations and statements. We have already covered some C++ statements, and will cover several others as we progress through the course.

Virtual Base Class, Overloading and Overriding Design Pattern in Object Oriented Programming with C++

Asian Journal of Computer Science And Information Technology 7: 3 July (2017) 68–71. Contents lists available at www.innovativejournal.in. Journal Homepage: http://innovativejournal.in/ajcsit/index.php/ajcsit. VIRTUAL BASE CLASS, OVERLOADING AND OVERRIDING DESIGN PATTERN IN OBJECT ORIENTED PROGRAMMING WITH C++. Dr. Ashok Vijaysinh Solanki, Assistant Professor, College of Applied Sciences and Professional Studies, Chikhli. ARTICLE INFO: Corresponding Author: Dr. Ashok Vijaysinh Solanki, Assistant Professor, College of Applied Sciences and Professional Studies, Chikhli. Key Words: Inheritance; Encapsulation; Polymorphism; Early binding; Late binding. DOI: http://dx.doi.org/10.15520/ajcsit.v7i3.63. ©2017, AJCSIT, All Right Reserved. ABSTRACT: The objective of this research paper is to discuss the prime concept of inheritance in object oriented programming. Inheritance plays a major role in the design patterns of modern programming. In this research paper I explain the inheritance and reusability concepts of OOP. Since object oriented concepts have been introduced into modern technology, they assist the creation of reusable software. Inheritance and polymorphism play a vital role in code reusability. OOP has evolved to improve the quality of programs. I also discuss the relationship between function overloading, operator overloading and run-time polymorphism. The key focus of this research paper is to discuss a new approach to the inheritance mechanism which helps to solve encapsulation issues. Through the virtual base class concept we explore the relationship and connection between code reusability and inheritance. The paper explains virtual base class, early binding and late binding concepts using coding demonstrations.
I. INTRODUCTION: Object oriented programming model is modern practical approach and it is important programming ... such as hierarchical and multiple inheritances, which is known as pure hybrid inheritance.

The C++ Language C++ Features

The C++ Language. COMS W4995-02, Prof. Stephen A. Edwards, Fall 2002, Columbia University, Department of Computer Science. The C++ Language: Bjarne Stroustrup, the language's creator, explains: C++ was designed to provide Simula's facilities for program organization together with C's efficiency and flexibility for systems programming. C++ Features: Classes: user-defined types. Operator overloading: attach different meaning to expressions such as a + b. References: pass-by-reference function arguments. Virtual functions: dispatched depending on type at run time. Templates: macro-like polymorphism for containers (e.g., arrays). Exceptions: more elegant error handling. Implementing Classes: Simple without virtual functions. C++: class Stack { char s[SIZE]; int sp; public: Stack(); void push(char); char pop(); }; Equivalent C: struct Stack { char s[SIZE]; int sp; }; void St_Stack(Stack*); void St_push(Stack*,char); char St_pop(Stack*); Operator Overloading: For manipulating user-defined "numeric" types. complex c1(1, 5.3), c2(5); // Create objects complex c3 = c1 + c2; // + means complex plus c3 = c3 + 2.3; // 2.3 promoted to a complex number. Complex Number Type: class complex { double re, im; public: complex(double); // used, e.g., in c1 + 2.3 complex(double, double); // Here, & means pass-by-reference: reduces copying complex& operator+=(const complex&); }; References: Designed to avoid copying in overloaded operators. Especially efficient when code is inlined. A mechanism for calling functions pass-by-reference. C only has pass-by-value: fakable with explicit pointer use. Function Overloading: Overloaded operators are a particular case of function/method overloading. General: select specific method/operator based on name, number, and type of arguments. Const: Access control over variables, arguments, and objects. const double pi = 3.14159265; // Compile-time constant
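The complex-number fragments in the excerpt above declare the constructors and operator+= but do not show how c3 + 2.3 resolves. The following is a minimal sketch under stated assumptions: the struct name Complex, the default argument on the constructor, and the non-member operator+ are illustrative choices, not the slide author's code. What the slide calls "promoted" is implemented here as an implicit conversion through a non-explicit constructor.

```cpp
// Hypothetical complex-number type, for illustration only.
struct Complex {
    double re;
    double im;

    // Non-explicit constructor: a plain double converts implicitly
    // to a Complex with a zero imaginary part.
    Complex(double r, double i = 0.0) : re(r), im(i) {}

    // The reference parameter avoids copying the right-hand operand.
    Complex& operator+=(const Complex& other) {
        re += other.re;
        im += other.im;
        return *this;
    }
};

// Non-member operator+ so the implicit conversion can apply
// to either operand (c + 2.5 as well as 2.5 + c).
Complex operator+(Complex lhs, const Complex& rhs) {
    lhs += rhs;
    return lhs;
}
```

With these definitions, Complex c3 = c1 + c2; adds component-wise, and c3 + 2.5 first converts 2.5 to Complex(2.5, 0.0) and then calls the same operator+. Making operator+ a member would still allow c3 + 2.5 but not 2.5 + c3.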

Extras - Other Bits of Fortran

Extras - Other bits of Fortran. "The Angry Penguin", used under creative commons licence from Swantje Hess and Jannis Pohlmann. Warwick RSE. "I'm never finished with my paintings; the further I get, the more I seek the impossible and the more powerless I feel." (Claude Monet, artist) What's in here? • This is pretty much all of the common-ish bits of Fortran that we haven't covered here • None of it is essential, which is why it's in the extras, but a lot of it is useful • All of the stuff covered here is given somewhere in the example code that we use (albeit sometimes quite briefly). Fortran Pointers: • Many languages have some kind of concept of a pointer or a reference • This is usually implemented as a variable that holds the location in memory of another variable that holds data of interest • C-like languages make very heavy use of pointers, especially to pass actual variables (rather than copies) of arrays to a function • Fortran avoids this because the pass-by-reference subroutines mean that you are always working with a reference, and because arrays are an intrinsic part of the language • Fortran pointers are quite easy to use but are a model that is not common in other languages • You make a variable a pointer by adding the "POINTER" attribute to the definition • In Fortran a pointer is the same as any other variable except when you do specific pointer-y things to it • Formally this behaviour is called "automatic dereferencing" • When you do anything with a pointer that isn't specifically a pointer operation the pointer ...

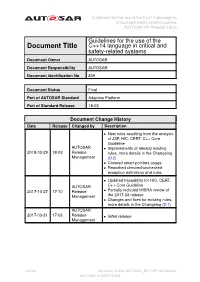

Guidelines for the Use of the C++14 Language in Critical and Safety-Related Systems AUTOSAR AP Release 18-03

Guidelines for the use of the C++14 language in critical and safety-related systems, AUTOSAR AP Release 18-03. Document Title: Guidelines for the use of the C++14 language in critical and safety-related systems. Document Owner: AUTOSAR. Document Responsibility: AUTOSAR. Document Identification No: 839. Document Status: Final. Part of AUTOSAR Standard: Adaptive Platform. Part of Standard Release: 18-03. Document Change History (date, release, changed by, description): 2018-03-29, 18-03, AUTOSAR Release Management: new rules resulting from the analysis of JSF, HIC, CERT, C++ Core Guideline; improvements of already existing rules, more details in the Changelog (D.2); covered smart pointers usage; reworked checked/unchecked exception definitions and rules. 2017-10-27, 17-10, AUTOSAR Release Management: updated traceability for HIC, CERT, C++ Core Guideline; partially included MISRA review of the 2017-03 release; changes and fixes for existing rules, more details in the Changelog (D.1). 2017-03-31, 17-03, AUTOSAR Release Management: initial release. Document ID 839: AUTOSAR_RS_CPP14Guidelines (AUTOSAR CONFIDENTIAL). Disclaimer: This work (specification and/or software implementation) and the material contained in it, as released by AUTOSAR, is for the purpose of information only. AUTOSAR and the companies that have contributed to it shall not be liable for any use of the work. The material contained in this work is protected by copyright and other types of intellectual property rights. The commercial exploitation of the material contained in this work requires a license to such intellectual property rights.

Modern Fortran OO Features

Modern Fortran OO Features. Salvatore Filippone, School of Aerospace, Transport and Manufacturing. IT4I, Ostrava, April 2016. S. Filippone (SATM), Modern Fortran OO Features, IT4I, Ostrava, 2016. Preface: "Premature optimization is the root of all evil" (Donald Knuth). Write 80% of your source code with yourself and your fellow programmers in mind, i.e. to make your life easier, not your computer's life easier. The CPU will never read your source code without an interpreter or compiler anyway. Arcane or quirky constructs and names to impress upon the CPU the urgency or seriousness of your task might well get lost in the translation. Preface: "Procedural programming is like an N-body problem" (Lester Dye). "The overwhelming amount of time spent in maintenance and debugging is on finding bugs and taking the time to avoid unwanted side effects. The actual fix is relatively short!" (Shalloway & Trott, Design Patterns Explained, 2002). OOP: Recap. By widely held "received wisdom", Object-Oriented Programming emphasizes the following features: 1. Encapsulation; 2. Inheritance; 3. Polymorphism. All three are supported by Fortran. OOP nomenclature (Fortran / C++ / General): Extensible derived type / Class / Abstract data type; Component / Data member / Attribute; class / Base class pointer / Dynamic polymorphism; Type guarding / ...

External Procedures, Triggers, and User-Defined Functions on DB2 for i

External Procedures, Triggers, and User-Defined Functions on IBM DB2 for i. Hernando Bedoya, Fredy Cruz, Daniel Lema, Satid Singkorapoom. IBM Redbooks, International Technical Support Organization. April 2016. SG24-6503-03. Note: Before using this information and the product it supports, read the information in "Notices" on page ix. Fourth Edition (April 2016). This edition applies to V5R1, V5R2, and V5R3 of IBM OS/400 and V5R4 of IBM i5/OS, Program Number 5722-SS1. © Copyright International Business Machines Corporation 2001, 2016. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Notices; Trademarks; Preface; Authors; Now you can become a published author, too!; Comments welcome; Stay connected to IBM Redbooks; Summary of changes; April 2016, Fourth Edition; IBM Redbooks promotions. Chapter 1. Introducing IBM DB2 for i: 1.1 An integrated relational database; 1.2 DB2 for i overview; 1.2.1 DB2 for i basics; 1.2.2 Stored procedures, triggers, and user-defined functions; 1.3 DB2 for i sample schema. Chapter 2. Stored procedures, triggers, and user-defined functions for an Order Entry application: 2.1 Order Entry application overview; 2.2 Order Entry database overview; 2.3 Stored procedures and triggers in the Order Entry database; 2.3.1 Stored procedures; 2.3.2 Triggers; 2.3.3 User-defined functions.