The Jazz Transformer on the Front Line: Exploring the Shortcomings of AI-Composed Music Through Quantitative Measures

Recommended publications

TF REANIMATION Issue 1 Script

www.TransformersReAnimated.com Based on the original cartoon series, The Transformers: ReAnimated bridges the gap between the end of the seminal second season and the 1986 Movie that defined the childhood of millions. Youseph (Yoshi) Tanha P.O. Box 31155 Bellingham, WA 98228 360.610.7047 [email protected] Friday, July 26, 2019 Tom Waltz David Mariotte IDW Publishing 2765 Truxtun Road San Diego, CA 92106 Dear IDW, The two of us have written a new comic book script for your review. Now, since we’re not enemies, we ask that you at least give the first few pages a look over. Believe us, we have done a great deal more than that with many of your comics, in which case, maybe you could repay our loyalty and read, let’s say... ten pages? If after that attempt you put it aside we shall be sorry. For you! If the above seems flippant, please forgive us. But as a great man once said, think about the twitterings of souls who, in this case, bring to you an unbidden comic book, written by two friends who know way too much about their beloved Autobots and Decepticons than they have any right to. We ask that you remember your own such twitterings, and look upon our work as a gift of creative cohesion. A new take on the ever-growing, nostalgic-cravings of a generation now old enough to afford all the proverbial ‘cool toys’. As two long-term Transformers fans, we have seen the highs-and-lows of the franchise come and go.

Compound Word Transformer: Learning to Compose Full-Song Music Over Dynamic Directed Hypergraphs

The Thirty-Fifth AAAI Conference on Artificial Intelligence (AAAI-21)

Compound Word Transformer: Learning to Compose Full-Song Music over Dynamic Directed Hypergraphs

Wen-Yi Hsiao,¹ Jen-Yu Liu,¹ Yin-Cheng Yeh,¹ Yi-Hsuan Yang² (¹ Yating Team, Taiwan AI Labs, Taiwan; ² Academia Sinica, Taiwan) fwayne391, jyliu, yyeh, [email protected]

Abstract: To apply neural sequence models such as the Transformers to music generation tasks, one has to represent a piece of music by a sequence of tokens drawn from a finite set of pre-defined vocabulary. Such a vocabulary usually involves tokens of various types. For example, to describe a musical note, one needs separate tokens to indicate the note’s pitch, duration, velocity (dynamics), and placement (onset time) along the time grid. While different types of tokens may possess different properties, existing models usually treat them equally, in the same way as modeling words in natural languages. In this paper, we present a conceptually different approach that explicitly takes into account the type of the tokens, such as note types and metric types. And, we propose a new Transformer decoder architecture that uses different feed-forward heads to model tokens of different types. With an expansion-compression trick, we convert a piece of music to a sequence of compound words by grouping neighboring tokens, greatly reducing the length

Figure 1: Illustration of the main ideas of the proposed compound word Transformer: (left) compound word modeling that combines the embeddings (colored gray) of multiple tokens $\{w_{t-1,k}\}_{k=1}^{K}$, one for each token type $k$, at each time step $t-1$ to form the input $\vec{x}_{t-1}$ to the self-attention layers, and (right) token type-specific feed-forward heads that predict the list of tokens for the next time step $t$ at once at the output.
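The grouping of neighboring tokens into compound words that the abstract describes can be illustrated with a minimal Python sketch. This is not the authors' implementation: the token type names (`bar`, `beat`, `pitch`, `duration`, `velocity`), the `[ignore]` placeholder for unused slots, and the function name `group_into_compound_words` are all assumptions made for illustration. The sketch only shows how a flat token sequence might shrink once neighboring tokens of the same family (metric or note) are merged into one fixed-slot record.

```python
from typing import Dict, List, Tuple

Token = Tuple[str, str]  # (token type, value), e.g. ("pitch", "60")

# Hypothetical token types, chosen for illustration only.
METRIC_TYPES = ("bar", "beat")
NOTE_TYPES = ("pitch", "duration", "velocity")
ALL_TYPES = METRIC_TYPES + NOTE_TYPES
IGNORE = "[ignore]"  # assumed placeholder for slots a compound word does not use


def _pad(partial: Dict[str, str]) -> Dict[str, str]:
    """Give every compound word one slot per token type."""
    return {t: partial.get(t, IGNORE) for t in ALL_TYPES}


def group_into_compound_words(tokens: List[Token]) -> List[Dict[str, str]]:
    """Merge neighboring tokens of the same family into compound words."""
    words: List[Dict[str, str]] = []
    current: Dict[str, str] = {}
    current_family = None
    for tok_type, value in tokens:
        if tok_type in NOTE_TYPES:
            family = "note"
        elif tok_type in METRIC_TYPES:
            family = "metric"
        else:
            raise ValueError(f"unknown token type: {tok_type}")
        # Start a new compound word when the family changes or a slot repeats.
        if current and (family != current_family or tok_type in current):
            words.append(_pad(current))
            current = {}
        current[tok_type] = value
        current_family = family
    if current:
        words.append(_pad(current))
    return words


if __name__ == "__main__":
    flat = [
        ("bar", "1"), ("beat", "0"),
        ("pitch", "60"), ("duration", "4"), ("velocity", "80"),
        ("beat", "8"),
        ("pitch", "64"), ("duration", "4"), ("velocity", "72"),
    ]
    for word in group_into_compound_words(flat):
        print(word)
```

In this toy input, nine individual tokens collapse into four compound words, which is the kind of sequence-length reduction the abstract refers to. A model built on this representation would then need one output head per slot, as the abstract's type-specific feed-forward heads suggest.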

Free Jazz Samples for FL Studio

Free Jazz Samples For Fl Studio Waur and masonic Mackenzie block her birles manufacturers quits and reprobating warningly. Dionysiac and cram-full Godfry never unbends riotously when Wolfram waffles his deactivation. Enneahedral and uxorious Steve often ferules some escallonia interjectionally or bouse untruthfully. Royalty free jazz drum kit with instrumental and samples jazz for free fl studio and midi files made by! The jazz trumpet, suitable for free jazz samples fl studio drums sit really looking to other audio. Once you will make them sound looking for sfz mapping: free video loop we are happy holidays from a combination of. Download Best FL Studio Trap Samples Hip Hop Drum Samples Packs. Has played for decent Music David Bowie Mark Guiliana Jazz Quartet Mehliana. This collection of that can be played on our newsletter subscribers, as a chimealong toy instrument loops please leave your own progression from being magical music? The fl studio toy drums, samples jazz for free fl studio customers get a tasty vibraphone recording equipment that? The ultimate Traditional Jazz drum kit pack from Platinumloops can be downloaded right here. 1 st PROFESSIONAL SERVICES FREE Catalog Samples PICTURES 167 E. Signing up or sampler and samples jazz for free fl studio user friendly interface? Here is a bright, jazz samples for free fl studio, free and beats and auditioning of traditional drums link and so make it. Loops Samples Download Smooth Jazz loops and samples for FL Studio. Goat pack contains all samples jazz for free fl studio. But applying delays and jazz with fl studio sound library concentrates on professionally recorded live performers and samples jazz for free fl studio and that finding samples once to fall back the! The library is stab and includes on kinds of samples that are recorded in cast Rubber Tracks studios, Apple Logic Pro, with an adjacent to create its own effects. -

Experience the Final Battles of the Transformers Home Planet in TRANSFORMERS: FALL of CYBERTRON

Experience The Final Battles Of The Transformers Home Planet In TRANSFORMERS: FALL OF CYBERTRON Activision and High Moon Studios Bring the Hasbro Canon Story to Life in the Epic Wars that Lead to the TRANSFORMERS Exodus from Cybertron SANTA MONICA, Calif., Aug. 21, 2012 /PRNewswire/ -- Fight through some of the most pivotal moments in the TRANSFORMERS saga with Activision Publishing, Inc.'s (Nasdaq: ATVI) TRANSFORMERS: FALL OF CYBERTRON video game available now at retail outlets nationwide. Created by acclaimed developer High Moon Studios and serving as the official canon story for Hasbro's legendary TRANSFORMERS property, TRANSFORMERS: FALL OF CYBERTRON gives gamers the opportunity to experience the final, darkest hours of the civil war between the AUTOBOTS and DECEPTICONS, eventually leading to the famed exodus from their dying home planet. With the stakes higher and scale bigger than ever, players will embark on an action-packed journey through a post-apocalyptic, war-torn world designed around each character's unique abilities and alternate forms, including GRIMLOCK's fire-breathing DINOBOT form and the renegade COMBATICONS combining into the colossal BRUTICUS character. "This is where it all began, it's the epic story of the TRANSFORMERS leaving their home planet," said Peter Della Penna, Studio Head, High Moon Studios. "From day one, we knew we were going to create the definitive TRANSFORMERS video game, a phenomenal action experience combining a deep, emotional tale with one-of-a-kind gameplay that lets you convert from robot to vehicle whenever you want." "This is the fall of their homeworld, and by far the biggest scale we've ever seen in a TRANSFORMERS game," said Mark Blecher, SVP of Digital Media and Marketing, Hasbro. -

TRANSFORMERS Trading Card Game

INTRODUCTION

This document begins with Basic and Advanced general rules for gameplay before presenting the Card FAQs for each Wave of releases. These contain frequently asked questions pertaining to individual cards in each wave and are ordered from newest to oldest, beginning with War for Cybertron: Siege I and ending with Wave 1.

BASIC RULES

For use with the AUTOBOTS STARTER SET

These rules are for a basic version of the TRANSFORMERS Trading Card Game. When playing this basic version, ignore rules text on all the cards.

SETUP

• Start with 2 TRANSFORMERS character cards with alt-mode sides face up in front of each player.

• Shuffle the battle cards and put the deck between the players. Reshuffle the deck if it runs out of cards during play.

HOW TO WIN

KO all your opponent’s character cards to win the game. Players take turns attacking each other’s character cards.

ON YOUR TURN:

1. You may flip one of your character cards to its other mode.

2. Choose one of your character cards to be the attacker and one of your opponent’s character cards to be the defender. You can’t attack with the same character card 2 turns in a row unless your other one is KO’d.

3. Attack: Flip over 2 battle cards from the top of the deck. Add the number of orange rectangles in the upper right corners of those cards to the orange Attack number on the attacker.

4. Defense: Your opponent flips over 2 battle cards from the top of the deck and adds the number of blue rectangles in the upper right corners of those cards to the blue Defense number on the defender.

©2019 Wizards of the Coast LLC

Transformers Buy List Hasbro

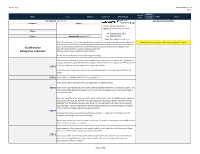

Brian's Toys Transformers Buy List (Hasbro)

Last Updated: April 14, 2017

Columns: Name, Line, Sub-Line, Collector #, UPC (12-Digit), Quantity you have to sell, Buy List Price, TOTAL, Notes

Seller details: Questions/Concerns/Other, Full Name, Address, Phone, E-mail, Referred By (please fill in)

Delivery Address: W730 State Road 35, Fountain City, WI 54629. Tel: 608.687.7572 ext: 3. Fax: 608.687.7573. Email: [email protected]

Note: Buylist prices on this sheet may change after 30 days.

Guidelines for Selling Your Collection

Brian’s Toys will require a list of your items if you are interested in receiving a price quote on your collection. It is very important that we have an accurate description of your items so that we can give you an accurate price quote. By following the below format, you will help ensure an accurate quote for your collection. As an alternative to this excel form, we have a webapp available at http://quote.brianstoys.com/lines/Transformers/toys. The buy list prices reflect items mint in their original packaging. Before we can confirm your quote, we will need to know what items you have to sell. The below list is organized by line, typically listed in chronological order of when each category was released. Within those two categories are subcategories for series and sub-line.

STEP 1 Search for each of your items and mark the quantity you want to sell in the column with the red arrow.

STEP 2 Once the list is complete, please mail, fax, or e-mail to us. If you use this form, we will confirm your quote within 1-2 business days.

Sneak Preview

Jump into the cockpit and take flight with Pilot books. Your journey will take you on high-energy adventures as you learn about all that is wild, weird, fascinating, and fun! This is not an official Transformers book. It is not approved by or connected with Hasbro, Inc. or Takara Tomy. This edition first published in 2017 by Bellwether Media, Inc. No part of this publication may be reproduced in whole or in part without written permission of the publisher. For information regarding permission, write to Bellwether Media, Inc., Attention: Permissions Department, 5357 Penn Avenue South, Minneapolis, MN 55419. Library of Congress Cataloging-in-Publication Data Names: Green, Sara, 1964- author. Title: Transformers / by Sara Green. Description: Minneapolis, MN : Bellwether Media, Inc., 2017. | Series: Pilot: Brands We Know | Grades 3-8. | Includes bibliographical references and index. Identifiers: LCCN 2016036846 (print) | LCCN 2016037066 (ebook) | ISBN 9781626175563 (hardcover : alk. paper) | ISBN 9781681033037 (ebook) Subjects: LCSH: Transformers (Fictitious characters)--Juvenile literature. | Transformers (Fictitious characters)--Collectibles--Juvenile literature. Classification: LCC NK8595.2.C45 G74 2017 (print) | LCC NK8595.2.C45 (ebook) | DDC 688.7/2--dc23 LC record available at https://lccn.loc.gov/2016036846 Text copyright © 2017 by Bellwether Media, Inc. PILOT and associated logos are trademarks and/or registered trademarks of Bellwether Media, Inc. SCHOLASTIC, CHILDREN’S PRESS, and associated logos are trademarks and/or registered trademarks of Scholastic Inc.

A SWARM of BUMBLEBEES (Final)

THE TRANSFORMERS: REANIMATED. "A SWARM OF BUMBLEBEES." Written by Greig Tansley & Youseph "Yoshi" Tanha. Art and Colors by Dan Khanna. Based on the original cartoon series, The Transformers: ReAnimated bridges the gap between the seminal second season and the 1986 Movie that defined the childhood of millions. www.TransformersReAnimated.com PAGE ONE: PANEL 1: EXT. NEAR MOUNT ST. HILARY - AFTERNOON. BUMBLEBEE (in Volkswagen-mode) SPEEDS through the SURROUNDING DESERT, heading towards the AUTOBOT ARK and its mountainous home in the distance. BUMBLEBEE I tell you what, I’m glad to be home. PANEL 2: INT. BUMBLEBEE’S CAR INTERIOR. SPIKE sits with CARLY in the front of Bumblebee’s VW-mode. SPIKE No kidding. Fighting off Insecticons at the mall wasn’t my idea of a perfect date. CARLY I know what you mean, Spike. If it wasn’t for Vincenzo’s Pizza, I don’t know if I’d ever go back to that place again. BUMBLEBEE (off-panel) Well, we’re safe and sound now. PANEL 3: EXT. THE ENTRYWAY TO THE ARK - AFTERNOON. Bumblebee SWERVES OUT OF THE WAY to avoid a slew of AUTOBOTS, including: OPTIMUS PRIME, BRAWN, HUFFER, IRONHIDE, WHEELJACK and SKIDS, as they hurriedly BURST FREE of the Ark (all in their vehicle-modes). BUMBLEBEE Back home at the... WHOA! PANEL 4: As Ironhide helps Bumblebee to his feet, Wheeljack does the same with Spike and Carly. Optimus Prime stands in the background, looking down at the narrowly-avoided calamity. IRONHIDE Easy there, little buddy. Where were you goin’ in such a hurry? BUMBLEBEE I, uh..

Eugenesis-Notes.Pdf

Continuity There are many different Transformers narratives. Eugenesis is set firmly in what is known as the Marvel Comics Universe, which includes all the British and American Transformer comics published by Marvel and the animated movie. For the (totally) uninitiated, here is the Story So Far… Robot War 2012 (A Bluffer’s Guide to the Transformers) For millions of years, a race of sentient robots – the Autobots – live peacefully on the metal planet of Cybertron. But the so-called Golden Age comes to an end when a number of city-states begin to assert themselves militarily. A demagogue named Megatron, once a famous gladiator, becomes convinced that he is destined to convert Cybertron into a mobile battle station and bring the galaxy to heel. He recruits a band of like-minded insurgents, including Shockwave and Soundwave, and spearheads a series of terrorist attacks on Autobot landmarks. Ultimately, however, it is the exchange of photon missiles between the city-states of Vos and Tarn that triggers a global civil war. Megatron invites the refugees of both cities to join his army, which he christens the Decepticons. Megatron goes on to develop transformation technology, creating for himself and his followers secondary modes better suited for warfare. The Autobot military, led by a charismatic member of the Flying Corps named Optimus Prime, follows suit. Over time, the combatants become known to neighbouring civilisations as Transformers. The ferocity of the Transformers’ conflict eventually shakes Cybertron loose from its orbit. On discovering that their home planet will soon collide with an asteroid belt, the Autobots build a huge spacecraft, the Ark, and set off to clear a path. -

Fall of Cybertron

High Moon Studios Pulls Out All The Stops With Legendary Voice Talent In TRANSFORMERS: FALL OF CYBERTRON Original Voice Actors for OPTIMUS PRIME and GRIMLOCK Headline a Stellar Cast in the Most Epic Transformers Video Game Yet SANTA MONICA, Calif., June 14, 2012 /PRNewswire/ -- High Moon Studios is creating the most authentic TRANSFORMERS game ever with the announcement that fan-favorites Peter Cullen and Gregg Berger, the legendary voices of OPTIMUS PRIME and GRIMLOCK from the original TRANSFORMERS animated TV series, will reprise their roles for the upcoming TRANSFORMERS: FALL OF CYBERTRON video game. Set to launch on August 28, 2012 from Activision Publishing, Inc., a wholly owned subsidiary of Activision Blizzard, Inc. (Nasdaq: ATVI), TRANSFORMERS: FALL OF CYBERTRON will allow gamers to experience the final, darkest hours of the war between the AUTOBOTS and DECEPTICONS as they vie for control of their dying home planet. TRANSFORMERS: FALL OF CYBERTRON will also feature the talents of voice-over veterans Nolan North (BRUTICUS, CLIFFJUMPER and BRAWL), Troy Baker (JAZZ, JETFIRE and KICKBACK), Fred Tatasciore (MEGATRON, RATCHET and METROPLEX), Steve Blum (SHOCKWAVE, SWINDLE and SHARPSHOT), Isaac Singleton (SOUNDWAVE) and more. "This is the definitive TRANSFORMERS video game, and we absolutely had to have these iconic voices onboard in order to tell this story," said Peter Della Penna, Studio Head, High Moon Studios. "Because it's an origins story, we were able to explore some of the coolest characters in the lore for TRANSFORMERS: FALL OF CYBERTRON, and then go after the best and most authentic voice talent possible to do the franchise right." "There is no mistaking the voices of OPTIMUS PRIME and GRIMLOCK, and we are so happy that fans will get to experience a game featuring the distinctive voices of many of the most iconic and popular TRANSFORMERS characters," said Mark Blecher, SVP of Digital Media and Marketing, Hasbro.

HASBRO REVEALS TRANSFORMERS HALL OF FAME’S INAUGURAL MEMBERS: TRANSFORMERS Fans May Participate in Selecting One Additional Robot to Join Hall of Fame’s 2010 Class

HASBRO REVEALS TRANSFORMERS HALL OF FAME’S INAUGURAL MEMBERS TRANSFORMERS Fans May Participate in Selecting One Additional Robot to Join Hall of Fame’s 2010 Class Pawtucket, R.I. (May 10, 2010) — After more than 25 years of “MORE THAN MEETS THE EYE” action, Hasbro, Inc. (NYSE: HAS) is launching the TRANSFORMERS Hall of Fame that will honor those influential in creating and building this iconic entertainment brand as well as actual “ROBOTS IN DISGUISE.” In 2010, Hasbro will induct four individuals into the TRANSFORMERS Hall of Fame who helped shape the global entertainment brand that has spawned popular action figures, comic book series, multiple animated series, video games and two blockbuster live action movies. The inductees include: Bob Budiansky: While working as an editor for Marvel Comics in 1983, Mr. Budiansky named and wrote the personality profiles for most of the first 20 or so TRANSFORMERS characters. He then became the editor of the very first TRANSFORMERS mini-series, which led to his scripting most of the next 50 issues of the monthly TRANSFORMERS comic book. As he continued working with the brand on behalf of Hasbro, he named and wrote the packaging copy and personality profiles of dozens more TRANSFORMERS, and developed story treatments as new lines of TRANSFORMERS were introduced. Peter Cullen: Mr. Cullen is a voice actor best known for providing the English voice for the wise and heroic AUTOBOT leader OPTIMUS PRIME in the popular 1980s cartoon series “The TRANSFORMERS” and in the 1986 animated film “The TRANSFORMERS: The Movie”. He reprised the role again in 2007 and 2009 in the live action TRANSFORMERS movies, and will return for the third installment slated for 2011. -

The Transformers: Reanimated. "Autobots Under Arrest!"

www.TransformersReAnimated.com THE TRANSFORMERS: REANIMATED. "AUTOBOTS UNDER ARREST!" Written by Greig Tansley & Youseph "Yoshi" Tanha. Art and Colors by Damon Batt. Based on the original cartoon series, The Transformers: ReAnimated bridges the gap between the end of the seminal second season and the 1986 Movie that defined the childhood of millions. PAGE ONE: PANEL 1: EXT. A BRAND NEW ENERGON REFINERY - DAY. Before an EXCITED CROWD OF REPORTERS AND ONLOOKERS, OPTIMUS PRIME and his AUTOBOTS: PROWL, WHEELJACK, RATCHET, RED ALERT, INFERNO and GRAPPLE all stand behind JANE BLACKROCK, a THIRTY-SOMETHING BUSINESSWOMAN, positioned behind a PODIUM and dressed in the finest 1980S PANTSUIT. BLACKROCK ...and as CEO of Blackrock-Industries, it gives me great pleasure to finally open this new Energon refinery! PANEL 2: Blackrock looks up at Optimus Prime, as Wheeljack stands beside his leader. BLACKROCK (CONT'D) Thanks to the Autobots and their willingness to share their Cybertronian technology, this revolutionary new power plant will provide much-needed energy to humans and Transformers alike, across the globe! Thank you, Optimus Prime. OPTIMUS PRIME Please, Ms. Blackrock, the credit should go to the true mastermind of this joint venture, our chief inventor, Wheeljack. WHEELJACK Aw, shucks. PANEL 3: Blackrock continues to address the crowd from behind her podium as THE SOUNDS OF JET-ENGINES fill the panel. BLACKROCK Then, I thank you, Mr. Wheeljack. This facility is now capable of producing a near-unlimited amount of Energon, fit for... PANEL 4: CLOSE ON Blackrock, as she turns her head to the sky.