Proceedings of the 17th Large Installation Systems Administration Conference

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

WebNet 2000 World Conference on the WWW and Internet Proceedings (San Antonio, Texas, October 30-November 4th, 2000)

DOCUMENT RESUME ED 448 744 IR 020 507 AUTHOR Davies, Gordon, Ed.; Owen, Charles, Ed. TITLE WebNet 2000 World Conference on the WWW and Internet Proceedings (San Antonio, Texas, October 30-November 4th, 2000). INSTITUTION Association for the Advancement of Computing in Education, Charlottesville, VA. ISBN ISBN-1-880094-40-1 PUB DATE 2000-11-00 NOTE 1005p.; For individual papers, see IR 020 508-527. For the 1999 conference, see IR 020 454. AVAILABLE FROM Association for the Advancement of Computing in Education (AACE), P.O. Box 3728, Norfolk, VA 23514-3728; Web site: http://www.aace.org. PUB TYPE Collected Works Proceedings (021) EDRS PRICE MF07/PC41 Plus Postage. DESCRIPTORS *Computer Uses in Education; Courseware; Distance Education; *Educational Technology; Electronic Libraries; Electronic Publishing; *Information Technology; Internet; Multimedia Instruction; Multimedia Materials; Postsecondary Education; *World Wide Web IDENTIFIERS Electronic Commerce; Technology Utilization; *Web Based Instruction ABSTRACT The 2000 WebNet conference addressed research, new developments, and experiences related to the Internet and World Wide Web. The 319 contributions of WebNet 2000 contained in this proceedings comprise the full and short papers accepted for presentation at the conference, as well as poster/demonstration abstracts. Major topics covered include: commercial, business, professional, and community applications; education applications; electronic publishing and digital libraries; ergonomic, interface, and cognitive issues; general Web tools and facilities; medical applications of the Web; personal applications and environments; societal issues, including legal, standards, and international issues; and Web technical facilities. (MES) Reproductions supplied by EDRS are the best that can be made from the original document.

ADASS Web Database XML Project

Astronomical Data Analysis Software and Systems X, ASP Conference Series, Vol. 238, 2001, F. R. Harnden Jr., F. A. Primini, and H. E. Payne, eds. ADASS Web Database XML Project. M. Irene Barg, Elizabeth B. Stobie, Anthony J. Ferro, Earl J. O’Neil, University of Arizona, Steward Observatory, Tucson, AZ 85721. Abstract. In the spring of 2000, at the request of the ADASS Program Organizing Committee (POC), we began organizing information from previous ADASS conferences in an effort to create a centralized database. The beginnings of this database originated from data (invited speakers, participants, papers, etc.) extracted from HyperText Markup Language (HTML) documents from past ADASS host sites. Unfortunately, not all HTML documents are well formed and parsing them proved to be an iterative process. It was evident at the beginning that if these Web documents were organized in a standardized way, such as XML (Extensible Markup Language), the processing of this information across the Web could be automated, more efficient, and less error prone. This paper will briefly review the many programming tools available for processing XML, including Java, Perl and Python, and will explore the mapping of relational data from our MySQL database to XML. 1. Introduction. The ADASS POC formed a Web Site Working Group (WSWG), chaired by Richard A. Shaw (STScI), whose charter is to define the scope of the existing www.adass.org Web presence. Two of the many issues the WSWG will be addressing are the focus of this paper. These are: 1) the development of an ADASS Conference database, and 2) www.adass.org site management.

Migrating the Institutional Intranet of the Hôpitaux Universitaires de Genève

Migrating the institutional intranet of the Hôpitaux universitaires de Genève from a Java technology to a PHP technology. Bachelor's thesis submitted for the Bachelor HES degree by: Maurizio Velletri. Thesis advisor: Patrick Ruch, HES professor. Geneva, 15 July 2010. Haute École de Gestion de Genève (HEG-GE), Information Science program (Filière Information Documentaire). Declaration: This Bachelor's thesis was completed as part of the final examination of the Haute école de gestion de Genève, for the title of Information Science Specialist (Spécialiste en information documentaire). The student accepts, where applicable, the confidentiality clause. Use of the conclusions and recommendations made in this Bachelor's thesis, without prejudging their value, engages the responsibility of neither the author, the thesis advisor, the examiner, nor the HEG. "I certify that I carried out this work alone, without using sources other than those cited in the bibliography." Geneva, 15 July 2010, Maurizio Velletri. Acknowledgements: I would like to thank everyone who helped me complete this Bachelor's thesis: Patrick Ruch, my advisor; Ariel Richard-Arlaud, my client; Raphaël Grolimund, my examiner; my wife Johanna and my children Stella Maria, Elisa and Markus, who gave me the strength, love and courage to reach the end of this long road; my parents, for always being available; and the whole multimedia production team at the HUG, who placed their trust in me and made me feel like one of their own: Ariel, Olivier, Anne, Amandine, Joan, Franck and Bernard.

A Presentation Service for Rapidly Building Interactive Collaborative Web Applications

A Presentation Service for Rapidly Building Interactive Collaborative Web Applications. A thesis submitted to the School of Computer Science, University College, University of New South Wales, Australian Defence Force Academy, for the degree of Doctor of Philosophy. By Michael Joseph Sweeney, 31 March 2008. © Copyright 2008 by Michael Joseph Sweeney. Certificate of Originality: I hereby declare that this submission is my own work and that, to the best of my knowledge and belief, it contains no material previously published or written by another person, nor material which to a substantial extent has been accepted for the award of any other degree or diploma at UNSW or any other educational institution, except where due acknowledgement is made in the thesis. Any contribution made to the research by colleagues, with whom I have worked at UNSW or elsewhere, during my candidature, is fully acknowledged. I also declare that the intellectual content of this thesis is the product of my own work, except to the extent that assistance from others in the project's design and conception or in style, presentation and linguistic expression is acknowledged. Michael Joseph Sweeney. Abstract: Web applications have become a large segment of the software development domain but their rapid rise in popularity has far exceeded the support in software engineering. There are many tools and techniques for web application development, but the developer must still learn and use many complex protocols and languages. Products still closely bind data operations, business logic, and the user interface, limiting integration and interoperability.

E.2 Installation of the Web-Based Vital Signs Monitoring System; E.2.1 Installation of Noisette

INSTITUTO POLITÉCNICO NACIONAL, UNIDAD PROFESIONAL INTERDISCIPLINARIA EN INGENIERÍA Y TECNOLOGÍAS AVANZADAS (UPIITA). Final-year project (Trabajo Terminal): Development of a Web-Based Vital Signs Monitoring System, submitted to obtain the degree of “Ingeniero en Telemática” (Telematics Engineer) by Mario Alberto García Torrea. Advisors: Ing. Francisco Antonio Polanco Montelongo, M. en C. Noé Sierra Romero, Dr. en F. Fernando Martínez Piñón. México D. F., 29 May 2008. Jury president (Presidente del Jurado): M. en C. Miguel Félix Mata Rivera. Tenured professor (Profesor Titular): M. en C. Susana Araceli Sánchez Nájera. Acknowledgements. To my family: for teaching me to believe and helping me to grow; because you have always been there when I needed you; for teaching me that the best things in life are simply those we do with our hearts, those we can dream of and achieve, and those we must fight for. Thank you, Dad, for your wisdom and for all the advice you have given me. Thank you, Mom, for instilling simplicity in us and for teaching us to love. Thank you, brother, because, although you always find a way to annoy me, you are there believing in me. To my friends: because you have believed in me and supported me with your company, your joy and your advice. Thank you for helping me to grow and to believe that everything is possible if we truly want it to be, and above all if we believe in ourselves.

Foundations of Agile Python Development

BOOKS FOR PROFESSIONALS BY PROFESSIONALS® THE EXPERT’S VOICE® IN OPEN SOURCE. Companion eBook Available. Foundations of Agile Python Development. Dear Reader, Python is your chosen development language. You love its power, clarity, and interactivity. But what is the best way to build and maintain Python applications? How can you blend its unique strengths with the best of agile methods to reach still higher levels of productivity and quality? And, at a practical level, where are the tools to automate it all? In this book, I give answers to these questions, backed up by a wealth of down-to-earth examples and working code. The short development cycles of agile projects require far more automation than traditional processes. There’s simply no way to have a two-week release cycle if development involves a day of integration, a week of QA, and three days for production deployment. You must automate to succeed. But all too often, the best-known tools are language specific. For this reason, this book gives you a complete set of open source tools to turbocharge your Python projects, and shows you how to integrate them into a smoothly functioning whole. Eclipse and Pydev make an excellent Python IDE. Python ships with an xUnit-based unit-testing framework. Nose is great for running tests, supplemented by PyFit for functional testing. Setuptools is your build harness and packaging mechanism, with functionality similar to Maven in Java. Subversion provides a place to store your code, and Buildbot is an ideal continuous integration server.

SAN Host Utilities Notices

Notices. About this information: The following copyright statements and licenses apply to software components that are distributed with various versions of the NetApp® AltaVault® Cloud Integrated Storage v4.1 software demo products. Your product does not necessarily use all the software components referred to below. Copyrights and licenses: The following components are subject to the Apache License 2.0: Apache HTTP server http://httpd.apache.org/ Apache License Version 2.0, January 2004 http://www.apache.org/licenses/ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION. Definitions: "License" shall mean the terms and conditions for use, reproduction, and distribution as defined by Sections 1 through 9 of this document. "Licensor" shall mean the copyright owner or entity authorized by the copyright owner that is granting the License. "Legal Entity" shall mean the union of the acting entity and all other entities that control, are controlled by, or are under common control with that entity. For the purposes of this definition, "control" means (i) the power, direct or indirect, to cause the direction or management of such entity, whether by contract or otherwise, or (ii) ownership of fifty percent (50%) or more of the outstanding shares, or (iii) beneficial ownership of such entity. "You" (or "Your") shall mean an individual or Legal Entity exercising permissions granted by this License. "Source" form shall mean the preferred form for making modifications, including but not limited to software source code, documentation source, and configuration files. "Object" form shall mean any form resulting from mechanical transformation or translation of a Source form, including but not limited to compiled object code, generated documentation, and conversions to other media types. 215-10601_A0_ur001. Copyright © 2015 NetApp, Inc. All rights reserved.

NEAR EAST UNIVERSITY Faculty of Engineering

NEAR EAST UNIVERSITY, Faculty of Engineering, Department of Computer Engineering. AUTO GALLERY MANAGEMENT SYSTEM. Graduation Project COM 400. Student: Ugur Emrah CAKMAK. Supervisor: Assoc. Prof. Dr. Rahib ABIYEV. Nicosia - 2008. ACKNOWLEDGMENTS: First, I would like to thank my supervisor Assoc. Prof. Dr. Rahib Abiyev for his invaluable advice and belief in my work and myself over the course of this Graduation Project. Second, I would like to express my gratitude to Near East University for the scholarship that made the work possible. Third, I thank my family for their constant encouragement and support during the preparation of this project. Finally, I would like to thank the NEU Computer Engineering Department academicians for their invaluable advice and support. TABLE OF CONTENTS: ACKNOWLEDGEMENT; TABLE OF CONTENTS; ABSTRACT; INTRODUCTION; CHAPTER ONE - PHP - Personal Home Page: 1.1 History Of PHP; 1.2 Usage; 1.3 Security; 1.4 Syntax; 1.5 Data Types; 1.6 Functions; 1.7 Objects; 1.8 Resources; 1.9 Certification; 1.10 List of Web Applications; 1.11 PHP Code Samples; CHAPTER TWO - MySQL: 2.1 Uses; 2.2 Platform and Interfaces; 2.3 Features; 2.4 Distinguishing Features; 2.5 History; 2.6 Future Releases; 2.7 Support and Licensing; 2.8 Issues; 2.9 Criticism; 2.10 Creating the MySQL Database; 2.11 Database Code of a Sample CMS; CHAPTER THREE - Development of Auto Gallery Management System; CONCLUSION; REFERENCES; APPENDIX. ABSTRACT: Auto Gallery Management System is a unique Content Management System which supports functionality for auto galleries.

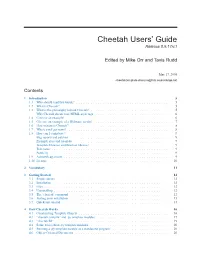

Cheetah Users' Guide

Cheetah Users’ Guide, Release 0.9.17rc1. Edited by Mike Orr and Tavis Rudd. May 17, 2005. Contents: 1 Introduction; 1.1 Who should read this Guide?; 1.2 What is Cheetah?; 1.3 What is the philosophy behind Cheetah? (Why Cheetah doesn’t use HTML-style tags); 1.4 Give me an example!; 1.5 Give me an example of a Webware servlet!; 1.6 How mature is Cheetah?; 1.7 Where can I get news?; 1.8 How can I contribute? (Bug reports and patches; Example sites and tutorials; Template libraries and function libraries; Test cases; Publicity); 1.9 Acknowledgements; 1.10 License; 2 Vocabulary; 3 Getting Started; 3.1 Requirements; 3.2 Installation; 3.3 Files; 3.4 Uninstalling ...

LUNARC Application Portal Installation Manual

NORDUGRID, NORDUGRID-MANUAL-12, 20/7/2009. Lunarc Application Portal Installation manual. Jonas Lindemann. Contents: 1 Installation; 2 Prerequisites; 3 Installing WebWare for Python; 4 Setting up a WebWare application instance; 5 Configuring the Webware application instance; 6 Configuring the application instance for LAP; 7 Building the mod_webkit module for the Apache web server; 8 Configuring the Apache web server; 9 Installing the Lunarc Application Portal; 10 Configuring the portal; 11 Creating a user. 1 Installation: This document provides manual instructions for installing the portal. This can be useful when custom setups or different Linux distributions are used. 2 Prerequisites: This installation manual requires that ARC-1.x is built and configured with the python-bindings for libarcclient. This manual does not cover how to configure SELinux to work with Apache and the WebWare application server. 3 Installing WebWare for Python: The first step in installing the portal is to install and configure the WebWare for Python application server. Webware tarballs can be downloaded from: http://sourceforge.net/projects/webware/ The tar-package can be downloaded and extracted with the following commands: [root@grid sw]# wget [sourceforge url] --13:57:29-- http://[...sourceforge...]/Webware-1.0.2.tar.gz => `Webware-1.0.2.tar.gz' . 100%[=============>] 936,493 164.42K/s ETA 00:00 13:57:35 (168.79 KB/s) - `Webware-1.0.2.tar.gz' saved ... [root@grid sw]# tar xvzf Webware-1.0.2.tar.gz Webware-1.0.2/ Webware-1.0.2/KidKit/ Webware-1.0.2/KidKit/Docs/ Webware-1.0.2/KidKit/Docs/RelNotes-1.0.2.phtml .

Web Application Framework

UU - IT - UDBL. E-COMMERCE and SECURITY - 1DL018, Spring 2008. An introductory course on e-commerce systems. alt. http://www.it.uu.se/edu/course/homepage/ehandel/vt08/ Kjell Orsborn, Uppsala Database Laboratory, Department of Information Technology, Uppsala University, Uppsala, Sweden. Kjell Orsborn 4/24/08. Web Servers (ch 6, 7). Web clients and Web servers: clients running browsers send requests to a Web server and receive pages in return. Web server • The term web server can mean one of two things: – A computer program that is responsible for accepting HTTP requests from clients, which are known as web browsers, and serving them HTTP responses along with optional data contents, which usually are web pages such as HTML documents and linked objects (images, etc.). – A computer that runs a computer program as described above. Common features of Web servers • Web server programs might differ in detail, but they all share some basic common features: – HTTP: every web server program operates by accepting HTTP requests from the client, and providing an HTTP response to the client. • The HTTP response usually consists of an HTML document, but can also be a raw file, an image, or some other type of document (defined by MIME-types). – Logging: web servers usually can also log detailed information about client requests and server responses to log files; • this allows the webmaster to collect statistics by running log analyzers on log files.

2010 CMS Intelligence Report

2010 CMS Intelligence Report. Contents: Licensing / Terms of Use; Introduction; The CMS Evaluations; walter&stone, CMSWire Open Source CMS Market Share Report; Packt Publishing Open Source CMS Awards; NTEN CMS Satisfaction Report; Idealware Comparing Content Management Systems Report; Forrester Web Content Management and Open Source Report; IBM developerWorks Using open source software; Webology Drupal vs. Joomla! Survey; Secondary Evaluations (SourceForge.net Community Choice Awards; CNET Webware 100; A frank comparison from an IBM consultant ...)