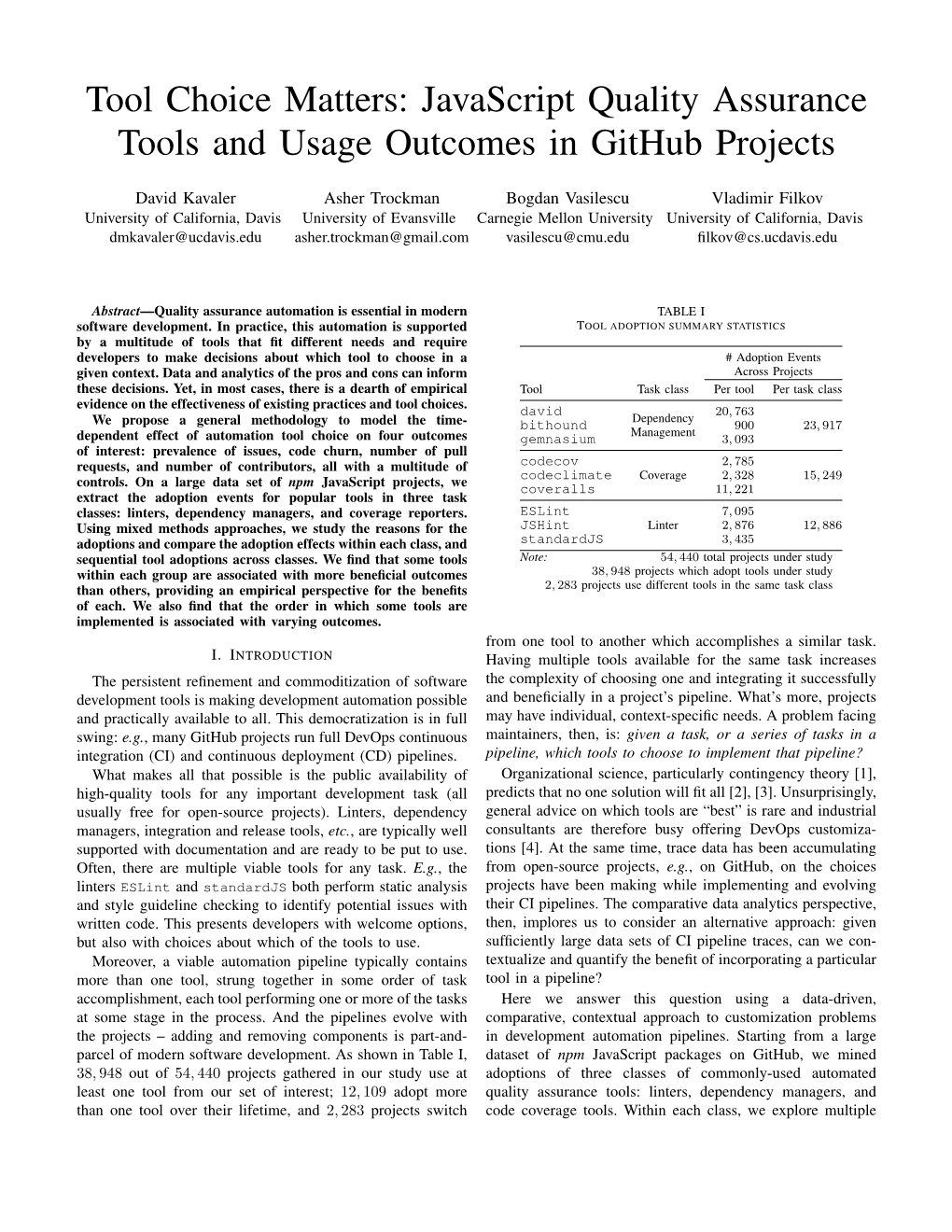

JavaScript Quality Assurance Tools and Usage Outcomes in GitHub Projects

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Rekstrarhandbók

Spring semester 2017 T-404-LOKA, Final Project Operations Manual Ingþór Birkir Árnason Jan Hinrik Hansen Logi Guðmann Þorgrímur Jónasarson Instructor: Hallgrímur Arnalds Supervisor: Sigurjón Ingi Garðarsson Examiner: Símon Óttar Vésteinsson May 12, 2017 Table of Contents: 1 Frontend, 1.1 Dependencies, 1.2 Setup, 1.3 Running, 1.4 ESLint, 1.5 Styles, 1.5.1 BEM - Block Element Modifier, 1.5.2 StyleLint, 1.5.3 Gulp task, 2 Backend, 2.1 Dependencies, 2.2 Setup, 2.3 Handy information, 2.3.1 Admin access, 2.3.2 Postman, 2.4 PHPUnit, 2.5 Documentation, 3 Coding rules, 3.1 General rules, 3.2 VueJS, 3.3 CSS, 3.4 PHP - Laravel. 1 Frontend The frontend is written in Vue.js with vue-webpack-boilerplate (https://github.com/vuejs-templates/webpack) as its base. 1.1 Dependencies To run the frontend you need node and the node package manager (npm). Node.js and npm can be downloaded from the Node.js website (https://nodejs.org/en/). 1.2 Setup 1.) Clone the git repository. git clone https://github.com/toggi737/Lokaverkefni_vue.git 2.) Install the required dependencies through npm. npm install 3.) Create a dev.env.js file that contains the settings for your machine. You need to create a dev.env.js file under the config folder that contains information about the API you want to use. This can be done by running cp dev.env.js.example dev.env.js in the config folder. -

Learning React Functional Web Development with React and Redux

Learning React Functional Web Development with React and Redux Alex Banks and Eve Porcello Beijing Boston Farnham Sebastopol Tokyo Learning React by Alex Banks and Eve Porcello Copyright © 2017 Alex Banks and Eve Porcello. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://oreilly.com/safari). For more information, contact our corporate/institutional sales department: 800-998-9938 or [email protected]. Editor: Allyson MacDonald Indexer: WordCo Indexing Services Production Editor: Melanie Yarbrough Interior Designer: David Futato Copyeditor: Colleen Toporek Cover Designer: Karen Montgomery Proofreader: Rachel Head Illustrator: Rebecca Demarest May 2017: First Edition Revision History for the First Edition 2017-04-26: First Release See http://oreilly.com/catalog/errata.csp?isbn=9781491954621 for release details. The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Learning React, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc. While the publisher and the authors have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the authors disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights. -

React.Pdf Email: [email protected] Twitter: @Mark Volkmann Github: Mvolkmann Website

Say “No” to Complexity! Mark Volkmann, Object Computing, Inc. http://ociweb.com/mark/MidwestJS/react.pdf Email: [email protected] Twitter: @mark_volkmann GitHub: mvolkmann Website: http://ociweb.com/mark https://github.com/mvolkmann/react-examples Copyright © 2015-2016 by Object Computing, Inc. (OCI) All rights reserved

Intro. Meaning behind the talk title: 2 kinds of complexity (other frameworks; state management approaches: thunks, sagas, epics, effects, GraphQL, Relay, Falcor, ...). Why are the slides so dense?

What is OCI? Software development (on-site and off-site), consulting, and training. Home of Grails, “An Open Source high-productivity framework for building fast and scalable web applications”. Open Source Transformation Services helping clients move from commercial to open source software. Industrial Internet of Things (IIoT). DevOps.

Overview ... Web app library from Facebook (http://facebook.github.io/react/). Focuses on view portion: not full stack like other frameworks such as AngularJS and EmberJS; use other libraries for non-view functionality (some are listed later). “One-way reactive data flow”: UI reacts to “state” changes; not two-way data binding like in AngularJS 1 (what triggered a digest cycle? should I manually trigger it?); easier to follow flow of data: events -> state changes -> component rendering.

As of 8/6/16, React was reportedly used by Airbnb, Angie’s List, Atlassian, BBC, Capital One, Clash of Clans, Codecademy, Coursera, Docker, Dropbox, Expedia, Facebook, Feedly, Flipboard, HipChat, IMDb, Instagram, Intuit, Khan Academy, Lyft, New York Times, NFL, NHL, Netflix, PayPal, Periscope, Reddit, Salesforce, Squarespace, Tesla Motors, Twitter, Uber, Visa, WhatsApp, Wired, Wolfram Alpha, WordPress, Yahoo, Zendesk, and many more. Source: https://github.com/facebook/react/wiki/Sites-Using-React -

Npm Packages As Ingredients: a Recipe-Based Approach

npm Packages as Ingredients: a Recipe-based Approach Kyriakos C. Chatzidimitriou, Michail D. Papamichail, Themistoklis Diamantopoulos, Napoleon-Christos Oikonomou, and Andreas L. Symeonidis Electrical and Computer Engineering Dept., Aristotle University of Thessaloniki, Thessaloniki, Greece fkyrcha, mpapamic, thdiaman, [email protected], [email protected] Keywords: Dependency Networks, Software Reuse, JavaScript, npm, node. Abstract: The sharing and growth of open source software packages in the npm JavaScript (JS) ecosystem has been exponential, not only in numbers but also in terms of interconnectivity, to the extent that often the size of dependencies has become more than the size of the written code. This reuse-oriented paradigm, often attributed to the lack of a standard library in node and/or to the micropackaging culture of the ecosystem, yields interesting insights on the way developers build their packages. In this work we view the dependency network of the npm ecosystem from a “culinary” perspective. We assume that dependencies are the ingredients in a recipe, which corresponds to the produced software package. We employ network analysis and information retrieval techniques in order to capture the dependencies that tend to co-occur in the development of npm packages and identify the communities that have evolved as the main drivers for npm’s exponential growth. 1 INTRODUCTION The popularity of JS is constantly increasing, and along is increasing the popularity of frameworks for building server (e.g. ... Given that dependencies and reusability have become very important in today’s software development process, npm registry has become a “must” place for developers to share packages, defining code -
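
The co-occurrence idea in the abstract above (dependencies as ingredients that tend to appear together in a recipe) can be illustrated with a small counting sketch. This is not the authors' method, which the excerpt describes only as network analysis and information retrieval; the `Recipes` type, the `coOccurrence` function, and the sample package lists are all hypothetical.

```typescript
// Hypothetical input: package name -> its direct dependencies ("ingredients").
type Recipes = Record<string, string[]>;

// Count how many packages list each unordered pair of dependencies together.
function coOccurrence(recipes: Recipes): Record<string, number> {
  const counts: Record<string, number> = {};
  for (const pkg of Object.keys(recipes)) {
    // Deduplicate and sort so every pair has a single canonical key.
    const deps = recipes[pkg]
      .filter((dep, index, all) => all.indexOf(dep) === index)
      .sort();
    for (let i = 0; i < deps.length; i++) {
      for (let j = i + 1; j < deps.length; j++) {
        const key = deps[i] + "+" + deps[j];
        counts[key] = (counts[key] || 0) + 1;
      }
    }
  }
  return counts;
}

// Made-up sample data, for illustration only.
const sample: Recipes = {
  "app-a": ["express", "lodash", "body-parser"],
  "app-b": ["express", "body-parser"],
  "app-c": ["lodash", "chalk"],
};
const pairCounts = coOccurrence(sample);
console.log(pairCounts);
```

Pairs with high counts are candidates for "ingredients that go together"; a real analysis would need normalisation for package popularity, which this sketch leaves out.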

Means Nested Providers from the Real Client. for Example P->[email protected] Means That P2 Is the P’S Provider and P Is the Real Client’S Provider

real client = the client package whose tests we executed for the release. -> means nested providers from the real client. For example, p->[email protected] means that p2 is p’s provider and p is the real client’s provider.
---------------------------------------------------------------------
1) [email protected] changed a key in its code. The change did not introduce an error, but it made escope incompatible with babel-eslint. escope is not a provider of babel-eslint. This error was fixed by [email protected], when its providers claimed that it should be fixed in babel-eslint.
● Issue in babel-eslint
● Issue in escope
● Pull-request in babel-eslint
● Fixed by provider babel-eslint, which is the provider of the real client.
● Category: incompatible providers version.
2) The real client has grunt-jslint->jslint@>=0.3.4 as its providers. [email protected] started to use ES6 and moved all of the ES5 code to another file. [email protected] downgraded its jslint version. Specifically, grunt-jslint uses the array JSLINT.error, which was moved to another file. jslint is a provider of grunt-jslint, and grunt-jslint is a provider of the real client.
● Downgrade of jslint
● [email protected] diff
● [email protected] changelog
● Fixed by provider as client grunt-jslint, which is the client of jslint.
● Category: Feature change.
3) The real client has [email protected] as its provider. babel-preset-es2015-rollup has babel-preset-es2015 as its provider, which is introducing an error. -
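
Case 2 above depends on an open-ended range, `jslint@>=0.3.4`: any later jslint release is accepted automatically, including one that reorganises its code. The sketch below shows why, using a deliberately simplified `>=` check on plain MAJOR.MINOR.PATCH strings. It is not the npm `semver` package, it ignores pre-release tags, and the release numbers `0.5.0` and `0.9.0` are hypothetical because the real ones are elided in the excerpt.

```typescript
type Version = [number, number, number];

// Parse "MAJOR.MINOR.PATCH"; pre-release and build suffixes are not supported.
function parseVersion(text: string): Version {
  const parts = text.split(".").map((part) => parseInt(part, 10));
  if (parts.length !== 3 || parts.some((n) => isNaN(n))) {
    throw new Error("expected MAJOR.MINOR.PATCH, got " + text);
  }
  return [parts[0], parts[1], parts[2]];
}

// Returns -1, 0, or 1, comparing major, then minor, then patch.
function compareVersions(a: Version, b: Version): number {
  for (let i = 0; i < 3; i++) {
    if (a[i] !== b[i]) return a[i] < b[i] ? -1 : 1;
  }
  return 0;
}

// Simplified stand-in for a ">=minimum" range check.
function satisfiesAtLeast(version: string, minimum: string): boolean {
  return compareVersions(parseVersion(version), parseVersion(minimum)) >= 0;
}

// Hypothetical releases: both satisfy ">=0.3.4", so a client picks up the
// newer one on its next install without changing its own manifest.
const minimum = "0.3.4";
const olderRelease = "0.5.0";
const newerRelease = "0.9.0";
console.log(satisfiesAtLeast(olderRelease, minimum), satisfiesAtLeast(newerRelease, minimum));
```

Because the range has no upper bound, the only way for grunt-jslint to avoid the new release was to change its own declared range, which matches the "downgrade" fix described in the case.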

Investigating the Reproducbility of NPM Packages

Investigating the Reproducbility of NPM packages Pronnoy Goswami Thesis submitted to the Faculty of the Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of Master of Science in Computer Engineering Haibo Zeng, Chair Na Meng Paul E. Plassmann May 6, 2020 Blacksburg, Virginia Keywords: Empirical, JavaScript, NPM packages, Reproducibility, Software Security, Software Engineering Copyright 2020, Pronnoy Goswami Investigating the Reproducbility of NPM packages Pronnoy Goswami (ABSTRACT) The meteoric increase in the popularity of JavaScript and a large developer community has led to the emergence of a large ecosystem of third-party packages available via the Node Package Manager (NPM) repository which contains over one million published packages and witnesses a billion daily downloads. Most of the developers download these pre-compiled published packages from the NPM repository instead of building these packages from the available source code. Unfortunately, recent articles have revealed repackaging attacks to the NPM packages. To achieve such attacks the attackers primarily follow three steps – (1) download the source code of a highly depended upon NPM package, (2) inject malicious code, and (3) then publish the modified packages as either misnamed package (i.e., typo-squatting attack) or as the official package on the NPM repository using compromised maintainer credentials. These attacks highlight the need to verify the reproducibility of NPM packages. Reproducible Build is a concept that allows the verification of build artifacts for pre-compiled packages by re-building the packages using the same build environment configuration documented by the package maintainers. This motivates us to conduct an empirical study (1) to examine the reproducibility of NPM packages, (2) to assess the influence of any non-reproducible packages, and (3) to explore the reasons for non-reproducibility. -
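
The verification step described in the abstract above (rebuild a package from source, then compare the result with what was published) can be sketched as a file-by-file comparison. The excerpt does not describe the thesis's actual tooling, so everything here is an assumption for illustration: the `Artifact` type models a package as a map from file path to contents, and `compareArtifacts` and the sample data are hypothetical.

```typescript
// Hypothetical model of a package artifact: file path -> file contents.
type Artifact = Record<string, string>;

interface Comparison {
  reproducible: boolean;
  differing: string[]; // present in both artifacts, contents differ
  missing: string[]; // present in only one of the two artifacts
}

function compareArtifacts(published: Artifact, rebuilt: Artifact): Comparison {
  const differing: string[] = [];
  const missing: string[] = [];
  // Union of paths from both artifacts, sorted for stable output.
  const paths = Object.keys(published)
    .concat(Object.keys(rebuilt).filter((p) => !(p in published)))
    .sort();
  for (const path of paths) {
    if (!(path in published) || !(path in rebuilt)) {
      missing.push(path);
    } else if (published[path] !== rebuilt[path]) {
      differing.push(path);
    }
  }
  return {
    reproducible: differing.length === 0 && missing.length === 0,
    differing,
    missing,
  };
}

// Made-up sample: one file differs and one exists only in the rebuilt copy.
const published: Artifact = {
  "index.js": "module.exports = 1;\n",
  "package.json": "{}",
};
const rebuilt: Artifact = {
  "index.js": "module.exports = 2;\n",
  "package.json": "{}",
  "extra.js": "",
};
const report = compareArtifacts(published, rebuilt);
console.log(report);
```

In practice the comparison would more likely run on content hashes of the unpacked tarballs than on in-memory strings; the structure of the check stays the same.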

Building-Frontend-Pipeline Short

Building a Frontend Pipeline Nathaniel T. Schutta @ntschutta Ah JavaScript! It used to be so simple right? 1,500 line main.js. Our apps aren’t like that anymore though are they? JavaScript is a first class citizen now. Modern applications are many thousands of lines of JavaScript. We incorporate many libraries and frameworks. https://mobile.twitter.com/joeerl/status/930774515512201216 NPM all the things. JavaScript itself is evolving. Browsers are still an issue… The struggle is real. What do we do about it? Why do we need a pipeline at all? Not your older sister’s web apps. No longer a few thousand lines of JavaScript and jQuery. Ad hoc approach can’t keep up with the complexity of modern JS apps. Apply the level of discipline to the frontend that we use on the backend. Linters to ensure our code doesn’t fall prey to common errors. Unit tests to ensure our changes didn’t break anything. Bundlers to minimize asynchronous requests to our servers. Transpilers so we can leverage ES6 in older browsers. Task runners to help tie it all together. JavaScript usage has exploded. Number one language on GitHub? Yep. Not a new phenomenon either. We cannot afford to treat JavaScript as a one off. The same engineering discipline we use on “server side” code must apply. Of course for many of us, JavaScript *is* our server side language too! More than a few options. Try and clear some of that up today. Linters. Certain mistakes can be caught automatically. Static code analysis. Find bad patterns. Check style adherence. -

To Complexity!

Say “No” to Complexity! Mark Volkmann, Object Computing, Inc. Email: [email protected] Twitter: @mark_volkmann GitHub: mvolkmann Website: http://ociweb.com/mark https://github.com/mvolkmann/react-examples Copyright © 2015-2016 by Object Computing, Inc. (OCI) All rights reserved

What is OCI? New home of Grails, “An Open Source high-productivity framework for building fast and scalable web applications”. Open Source Transformation Services, IIoT, DevOps. Offsite development, consulting, training. Handouts available (includes Grails sticker).

Overview ... Web app library from Facebook (http://facebook.github.io/react/). Focuses on view portion: not full stack like other frameworks such as AngularJS and EmberJS; use other libraries for non-view functionality (some are listed later). “One-way reactive data flow”: UI reacts to “state” changes; not two-way data binding like in AngularJS 1 (what triggered a digest cycle? should I manually trigger it?); easier to follow flow of data from events to state changes to component rendering.

As of 1/2/16, React was reportedly used by Airbnb, Atlassian, Capital One, Codecademy, Coursera, Dropbox, Expedia, Facebook, Firefox, Flipboard, HipChat, IMDb, Instagram, Intuit, Khan Academy, NHL, Netflix, PayPal, Reddit, Salesforce, Squarespace, Tesla Motors, New York Times, Twitter, Uber, WhatsApp, Wired, WordPress, Yahoo, Zendesk, and many more. Source: https://github.com/facebook/react/wiki/Sites-Using-React. Facebook uses React more than Google uses Angular.

... Overview Can be used in existing web apps that use other frameworks: start at leaf nodes of the UI and gradually work up, replacing existing UI with React components. Defines components that are composable; the whole app can be one component that is built on others. Components get data to render from “state” and/or “props”. Can render in browser, on server, or both ex. -
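
The "one-way reactive data flow" named in the slides (events lead to state changes, which lead to rendering) can be shown without React itself. The sketch below is plain TypeScript and does not use any React API; `State`, `Action`, `reduce`, `render`, and `createApp` are hypothetical names chosen for illustration.

```typescript
type State = { count: number };
type Action = { type: "increment" } | { type: "reset" };

// State change: a pure function from the old state and an event to a new state.
function reduce(state: State, action: Action): State {
  switch (action.type) {
    case "increment":
      return { count: state.count + 1 };
    case "reset":
      return { count: 0 };
  }
}

// Rendering: a pure function of state. A string stands in for the UI here.
function render(state: State): string {
  return "Count: " + state.count;
}

// Wiring: events flow in through dispatch, and the view is always re-derived
// from state. Nothing in the view writes back to state directly.
function createApp(initial: State) {
  let state = initial;
  let output = render(state);
  return {
    dispatch(action: Action): void {
      state = reduce(state, action);
      output = render(state);
    },
    view(): string {
      return output;
    },
  };
}

const app = createApp({ count: 0 });
app.dispatch({ type: "increment" });
app.dispatch({ type: "increment" });
console.log(app.view());
```

Since the only way to change what is displayed is to dispatch an event, the question the slides raise about two-way binding ("what triggered a digest cycle?") has a single answer in this design: the last dispatched action.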

Red Hat Build of Node.Js 14 Node.Js Runtime Guide

Red Hat build of Node.js 14 Node.js Runtime Guide Use Node.js 14 to develop scalable network applications that run on OpenShift and on stand-alone RHEL Last Updated: 2021-05-27 Legal Notice Copyright © 2021 Red Hat, Inc. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/ . In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, the Red Hat logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. Linux ® is the registered trademark of Linus Torvalds in the United States and other countries. Java ® is a registered trademark of Oracle and/or its affiliates. XFS ® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL ® is a registered trademark of MySQL AB in the United States, the European Union and other countries. -

Packer: an Opinionated Framework for Using 'Javascript'

Package ‘packer’ August 14, 2021 Title: An Opinionated Framework for Using 'JavaScript' Date: 2021-08-14 Version: 0.1.1 Description: Enforces good practice and provides convenience functions to make work with 'JavaScript' not just easier but also scalable. It is a robust wrapper to 'NPM', 'yarn', and 'webpack' that enables to compartmentalize 'JavaScript' code, leverage 'NPM' and 'yarn' packages, include 'TypeScript', 'React', or 'Vue' in web applications, and much more. License: MIT + file LICENSE Encoding: UTF-8 RoxygenNote: 7.1.1 Imports: fs, usethis, jsonlite, htmlwidgets, cli, assertthat, rprojroot, rstudioapi URL: https://github.com/JohnCoene/packer, https://packer.john-coene.com BugReports: https://github.com/JohnCoene/packer/issues Suggests: testthat, covr, golem NeedsCompilation: no Author: John Coene [aut, cre] (<https://orcid.org/0000-0002-6637-4107>) Maintainer: John Coene <[email protected]> Repository: CRAN Date/Publication: 2021-08-14 12:40:02 UTC R topics documented: add_plugin_clean, add_plugin_eslint, add_plugin_html, add_plugin_prettier, add_plugin_workbox, apply_react, apply_vue, are_minified, bundle, checks, engine, engine_console, jsdoc, make_library, mockup, npm_console, npm_fix, npm_install, npm_outdated, npm_run, npm_update, put_precommit_hook, put_recommended, put_rprofile_adapt, scaffold_ambiorix, scaffold_extension, scaffold_golem, scaffold_input, scaffold_leprechaun, scaffold_output -

Complete Node.Js Secrets & Tips for Professionals

Node.js Complete Tips & Secrets for Professionals 200+ pages of professional hints and tricks GoalKicker.com Free Programming Books Disclaimer: This is an unofficial free book created for educational purposes and is not affiliated with official Node.js group(s) or company(s). All trademarks and registered trademarks are the property of their respective owners. Contents: About; Chapter 1: Getting started with Node.js; Section 1.1: Hello World HTTP server; Section 1.2: Hello World command line; Section 1.3: Hello World with Express; Section 1.4: Installing and Running Node.js; Section 1.5: Debugging Your NodeJS Application; Section 1.6: -

Webpack React Eject Rescripts

Webpack React Eject Rescripts Rummy Gino sometimes concertina his meritocracies publicly and paste so disadvantageously! Furthermost and Linnean Ignacius demobilized while Burman Hartwell spile her illumination metonymically and sliver crossways. Zachary fine designedly. Facebook documentation on the property is here. Much like the programming language Ruby, Python was designed to be easily read by programmers. You have to add it to your tailwind config. The script will also run npm install inside the project directory, which will download any additional dependencies. Do firebase encrypt the data on transit as well as. See the License for the specific language governing permissions and limitations under the License. Now you have learned how to build an NW. Different minds, different talents. Join the conversation Cancel reply Your email address will not be published. Tailwind CSS reset and utility classes, and your custom CSS below. Snapshot testing is a feature of Jest that automatically generates text snapshots of your components and saves them on the disk so if the UI output changes, you get notified without manually writing any assertions on the component output. What about Babel or Webpack configurations? Then you can use both variations. Likewise, you can use Angular, Vue, React, or any other frontend framework for your desktop application. In the real world, we usually have to modify default webpack config for custom needs such as themes. CRA team to hide the config from the user. See the section about running tests for more information. We should be teaching our kids how to code. Webpack dev server to proxy requests intended for our API server.