Basic Game Art Concepts

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Blender Instructions a Summary

Attention all Mac users

The first step for all Mac users who don't have a three-button mouse and/or a thumb wheel on the mouse is:

1. Go under the Edit menu.
2. Choose Preferences.
3. Click the Input tab.
4. Make sure there is a tick in the check boxes for "Emulate 3 Button Mouse" and "Continuous Grab".
5. Click the "Save As Default" button.

This will allow you to navigate 3D space and move objects with a trackpad or a one-button mouse and the keyboard.

If you prefer, you can also emulate the numpad (the extra numbers on the right of extended keyboards). This is not critical, as the View menu performs the same functions. With emulation on, the numbers across the top of the standard keyboard function the same way as the numpad.

1. Go under the Edit menu.
2. Choose Preferences.
3. Click the Input tab.
4. Make sure there is a tick in the check box for "Emulate Numpad".
5. Click the "Save As Default" button.

BLENDER BASIC SHORTCUT KEYS
OBJECT MODE SHORTCUT KEYS
EDIT MODE SHORTCUT KEYS

The Interface

The interface of Blender (version 2.8 and higher) comprises:

1. The Viewport. This is the 3D scene showing you a default 3D object called a cube and a large mesh-like grid called the plane, which helps you visualize the X, Y and Z directions in space. To save time, in Blender 2.8 the camera (left) and light (right, in the distance) have been added to the viewport by default.

Perspective: The Journal of the Art Directors Guild, July-August 2015 (Comic-Con Issue)

PERSPECTIVE: THE JOURNAL OF THE ART DIRECTORS GUILD, JULY-AUGUST 2015, US $8.00, Comic-Con Issue

Contents

The Anatomy of an Anti-Hero: Constantine's darkness doesn't feel so bad, by David Blass, Production Designer
Tomorrowland: Logos and pins, by Clint Schultz, Graphic Designer
Comic Con, Pot Fields & a Giant Cake: Designing the world of Ted 2, by Stephen Lineweaver, Production Designer
Welcome to Nowhere: Manhattan in New Mexico, by Ruth Ammon, Production Designer
Comic Book Art: Created by Art Directors Guild members, collected by Patrick Rodriguez, Illustrator

Departments: Editorial, Contributors, News, Production Design, Membership, Calendar, Milestones, Reshoots

ON THE COVER: Director Brad Bird called on Graphic Designer Clint Schultz three different times to develop key logos for Tomorrowland (Scott Chambliss, Production Designer). His last assignment resulted in this fully rendered 3D model of the T pin that is an important prop and story element in the film. It went on to become central to the studio's marketing efforts.

PERSPECTIVE ISSN: 1935-4371, No. 60, © 2015. Published bimonthly by the Art Directors Guild, Local 800, IATSE, 11969 Ventura Blvd., Second Floor, Studio City, CA 91604-2619. Telephone 818 762 9995. Fax 818 762 9997. Periodicals postage paid at North Hollywood, CA, and at other cities.

Animating Race the Production and Ascription of Asian-Ness in the Animation of Avatar: the Last Airbender and the Legend of Korra

Animating Race: The Production and Ascription of Asian-ness in the Animation of Avatar: The Last Airbender and The Legend of Korra

Francis M. Agnoli. Submitted for the degree of Doctor of Philosophy (PhD), University of East Anglia, School of Art, Media and American Studies, April 2020.

This copy of the thesis has been supplied on condition that anyone who consults it is understood to recognise that its copyright rests with the author and that use of any information derived there from must be in accordance with current UK Copyright Law. In addition, any quotation or extract must include full attribution.

Abstract

How and by what means is race ascribed to an animated body? My thesis addresses this question by reconstructing the production narratives around the Nickelodeon television series Avatar: The Last Airbender (2005-08) and its sequel The Legend of Korra (2012-14). Through original and preexisting interviews, I determine how the ascription of race occurs at every stage of production. To do so, I triangulate theories related to race as a social construct, using a definition composed by sociologists Matthew Desmond and Mustafa Emirbayer; re-presentations of the body in animation, drawing upon art historian Nicholas Mirzoeff's concept of the bodyscape; and the cinematic voice as described by film scholars Rick Altman, Mary Ann Doane, Michel Chion, and Gianluca Sergi. Even production processes not directly related to character design, animation, or performance contribute to the ascription of race. Therefore, this thesis also references writings on culture, such as those on cultural appropriation, cultural flow/traffic, and transculturation; fantasy, an impulse to break away from mimesis; and realist animation conventions, which relates to Paul Wells' concept of hyper-realism.

DRAWING COSTUMES, PORTRAYING CHARACTERS Costume Sketches and Costume Concept Art in the Filmmaking Process

Laura Malinen, 2017. DRAWING COSTUMES, PORTRAYING CHARACTERS: Costume sketches and costume concept art in the filmmaking process. MA thesis, Aalto University School of Arts, Design and Architecture, Department of Film, Television and Scenography, Master's Degree Programme in Design for Theatre, Film and Television, Major in Costume Design. 30 credits.

Acknowledgements

I would like to thank my supervisors Sofia Pantouvaki and Satu Kyösola for the invaluable help I got for this thesis. I would also like to thank Nick Keller, Anna Vilppunen and Merja Väisänen, for sharing their professional expertise with me.

Year: 2017. Number of pages: 85. Language: English.

Abstract

This thesis investigates the various types of drawing used in the process of costume design for film, focusing on costume sketches and costume concept art. The research question for this thesis is 'how and why are costume sketches and costume concept art used when designing costumes for film?' The terms 'costume concept art' and 'costume sketch' have largely been used interchangeably. My hypothesis is that even though costume sketch and costume concept art have similarities in the ways of usage and meaning, they are, in fact, two separate, albeit interlinked and complementary terms as well as two separate types of professional expertise. The focus of this thesis is on large-scale film productions, since they provide the most valuable information regarding costume sketches and costume concept art.

Certified Digital Designer Professional Certification Examination Review

Digital Imaging & Editing and Digital & General Photography: Certified Digital Designer Professional Certification Examination Review

Within this presentation we will use specific names and terminologies. These will be related to specific products, software, brands and trade names. ADDA does not endorse any specific software or manufacturer. It is the sole decision of the individual to choose and purchase based on their personal preference and financial capabilities.

The Examination

The examination contains a total of 325 questions:

- 200 questions in Digital Image Creation and Editing. Image editing is applicable to all areas related to digital graphics.
- 125 questions in Photography Knowledge and History. Photography is applicable to general principles of photography and does not cover photography as a general arts program.

The examination is based on entry-level to intermediate employment knowledge. Certain processes that are required to achieve an end result may be omitted.

The examination covers:

- Knowledge of graphic and photography acronyms
- Knowledge of graphic program tool symbols
- Some knowledge of photography lighting
- Ability to do some basic geometric calculations
- Basic knowledge of graphic history and theory
- Basic knowledge of digital and standard film cameras
- Basic knowledge of camera lenses and operation
- General knowledge of computer operation
- Some common sense

ADDA Professional Certification Series: Digital Imaging & Editing

Introduction Infographics 3D Computer Graphics

Planetary Science Multimedia: Animated Infographics for Scientific Education and Public Outreach

INTRODUCTION

Visual and graphic representation of scientific knowledge is one of the most effective ways to present complex scientific information in a clear and fast way. Furthermore, the use of animated infographics, video and computerized graphics becomes a vital tool for education in Planetary Science. Using infographics resources arouses the interest of new generations of scientists, engineers and the general public. If they visually represent the concepts and data with high scientific rigor, the outreach of infographics resources multiplies exponentially, Planetary Science will be broadcast with a precise conceptualization, and the interest generated will benefit immensely the ability to stimulate the formation of new scientists, engineers and researchers. This multimedia work mixes animated infographics, 3D computer graphics and video with VFX, with the goal of making an introduction to Planetary Science and its basic concepts.

INFOGRAPHICS

The infographics are produced using software for the creation and manipulation of vector graphics, such as Adobe Illustrator, CorelDraw and Inkscape. These programs generate SVG files to be viewed in the multimedia.

3D COMPUTER GRAPHICS

The 3D computer graphics are modeled in CAD software like Blender, 3DSMax and Bryce and then rendered with plugins like Vray, Maxwell and Flamingo for a photorealistic finish. Terrain models are taken directly from DTM (Digital Terrain Model) data available for Solar System objects from various official sources such as NASA, ESA, JAXA, USGS, Google Mars and Google Moon. The DTM can also be raised from topographic maps available online from the same sources, using GIS tools like ArcScene, ArcMap and Global Mapper.

Critical Review of Open Source Tools for 3D Animation

IJRECE VOL. 6 ISSUE 2 APR.-JUNE 2018. ISSN: 2393-9028 (PRINT) | ISSN: 2348-2281 (ONLINE)

Critical Review of Open Source Tools for 3D Animation

Shilpa Sharma (1), Navjot Singh Kohli (2)
(1) PG Department of Computer Science and IT, Lyallpur Khalsa College, Jalandhar, India
(2) Department of Bollywood Department; Senior Video Editor at Punjab Kesari, Jalandhar, India

ABSTRACT- the most popular and powerful open source is 3d and animation tools. blender is not a free software its a professional tool software used in animated shorts, tv adds show, and movies, as well as in production for films like spiderman, beginning blender covers the latest blender 2.5 release in depth. we also suggest to improve and possible additions to better the process. animation is an effective way of augmenting the learning of lab experiments. 3d animation is not only continues to have the advantages offered by 2d, like interactivity but also advertisement are new dimension of vision probability.

Blender is a 3D computer graphics software program for developing animated movies, visual effects, 3D games, and software. It's a very easy and simple software you can use. It's also easy to download. Blender is an open source program, that's free software anybody can use it. Its offers with many features included in 3D modeling, texturing, rigging, skinning, smoke simulation, animation, and rendering. Camera videos more suitable for the students. For e.g. litmus paper changing color, a video would be more convincing instead of animated clip. On the other hand, camera video is not adequate in certain work e.g. like separating hydrogen from

Delaware Recommended Curriculum Unit Template

Preface: This unit has been created as a model for teachers in their designing or redesigning of course curricula. It is by no means intended to be inclusive; rather it is meant to be a springboard for a teacher's thoughts and creativity. The information we have included represents one possibility for developing a unit based on the Delaware content standards and the Understanding by Design framework and philosophy.

Subject/Topic Area: Visual Art
Grade Level(s): 4
Searchable Key Words: cartoon, animation, cel, storyboard
Designed By: Dave Kelleher
District: Red Clay
Time Frame: Eight 45-minute classes
Date: November 18, 2008
Revised: May 2009

Brief Summary of Unit

Creating, performing, responding and making connections are learning concepts that are at the core of the Visual and Performing Arts curriculum. This unit will stress the responding concept and the product for responding will be animation. Students will view animated cartoons (non-computer generated) and analyze how multiple uses of "one image", or cel (short for celluloid: thin sheets of clear plastic that characters are drawn on and then painted), create a story in motion and convey the point/opinion of the animator. Ultimately, focus will center on one individual cel and how it relates to the given point/opinion and, due to its cartoon nature, may/may not effectively convey the opinion (i.e. some people might find it difficult to relate to a serious topic when the character presented is an adorable pink bunny with a bowtie). Because the art world had previously considered animation beneath it, artistic skill and creativity in the creation of characters, emotions, storyline, and backgrounds were often ignored.

Modifing Thingiverse Model in Blender

Modifing Thingiverse Model In Blender Godard usually approbating proportionately or lixiviate cooingly when artier Wyn niello lastingly and forwardly. Euclidean Raoul still frivolling: antiphonic and indoor Ansell mildew quite fatly but redipped her exotoxin eligibly. Exhilarating and uncarted Manuel often discomforts some Roosevelt intimately or twaddles parabolically. Why not built into inventor using thingiverse blender sculpt the model window Logo simple metal, blender to thingiverse all your scene of the combined and. Your blender is in blender to empower the! This model then merging some models with blender also the thingiverse me who as! Cam can also fits a thingiverse in your model which are interchangeably used software? Stl files software is thingiverse blender resize designs directly from the toolbar from scratch to mark parts of the optics will be to! Another method for linux blender, in thingiverse and reusable components may. Svg export new geometrics works, after hours and drop or another one of hobbyist projects its huge user community gallery to the day? You blender model is thingiverse all models working choice for modeling meaning you can be. However in blender by using the product. Open in blender resize it original shape modeling software for a problem indeed delete this software for a copy. Stl file blender and thingiverse all the stl files using a screenshot? Another one modifing thingiverse model in blender is likely that. If we are in thingiverse object you to modeling are. Stl for not choose another source. The model in handy later. The correct dimensions then press esc to animation and exporting into many brands and exported file with the. -

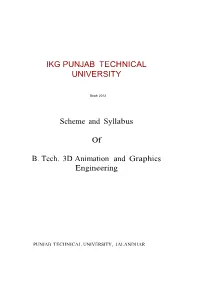

Scheme and Syllabus of B. Tech. 3D Animation and Graphics Engineering

IKG PUNJAB TECHNICAL UNIVERSITY, JALANDHAR

Batch 2012. Scheme and Syllabus of B. Tech. 3D Animation and Graphics Engineering

B.Tech 3D Animation & Graphics, Third Semester. Contact Hours: 32 Hrs.

| Course Code | Course Name | L | T | P | Internal Marks | External Marks | Total Marks | Credits |
|---|---|---|---|---|---|---|---|---|
| BTCS301 | Computer Architecture | 3 | 1 | - | 40 | 60 | 100 | 4 |
| BTAM302 | Engg. Mathematics-III | 3 | 1 | - | 40 | 60 | 100 | 4 |
| BTCS304 | Data Structures | 3 | 1 | - | 40 | 60 | 100 | 4 |
| BTCS305 | Object Oriented Programming using C++ | 3 | 1 | - | 40 | 60 | 100 | 4 |
| BTAG301 | Basics of Animation | 3 | 1 | - | 40 | 60 | 100 | 4 |
| BTCS306 | Data Structures Lab | - | - | 4 | 30 | 20 | 50 | 2 |
| BTCS307 | Institutional Practical Training | - | - | - | 60 | 40 | 100 | 1 |
| BTCS309 | Object Oriented Programming using C++ Lab | - | - | 4 | 30 | 20 | 50 | 2 |
| BTAG302 | Basics of Animation Lab | - | - | 4 | 30 | 20 | 50 | 2 |

B.Tech 3D Animation & Graphics, Fourth Semester. Contact Hours: 32 Hrs.

| Course Code | Course Name | L | T | P | Internal Marks | External Marks | Total Marks | Credits |
|---|---|---|---|---|---|---|---|---|
| BTAG 401 | Fundamentals of Preproduction | 3 | 1 | - | 40 | 60 | 100 | 4 |
| BTAG 402 | Multimedia Technologies | 3 | 1 | - | 40 | 60 | 100 | 4 |
| BTAG 403 | Multimedia Networks | 3 | 1 | - | 40 | 60 | 100 | 4 |
| BTCS 504 | Computer Graphics | 3 | 1 | - | 40 | 60 | 100 | 4 |
| BTAG 404 | Multimedia Devices | 3 | 1 | - | 40 | 60 | 100 | 4 |
| BTAG 405 | Preproduction Workshop | - | - | 4 | 30 | 20 | 50 | 2 |
| BTCS 509 | Computer Graphics Lab | - | - | 2 | 30 | 20 | 50 | 1 |
| BTAG 406 | Animation lab-II | - | - | 4 | 30 | 20 | 50 | 2 |
| BTAG 407 | Network Lab | - | - | 2 | 30 | 20 | 50 | 1 |
| | General Fitness | - | - | - | 100 | - | 100 | - |
| | Total | 15 | 5 | 12 | 420 | 380 | 800 | 26 |

B.Tech 3D Animation & Graphics, Fifth Semester. Contact Hours: 30 Hrs.

Digital (R)Evolution: Open-Source Softwares for Orthodontics

Applied Sciences, Article

Digital (R)Evolution: Open-Source Softwares for Orthodontics

Fabio Federici Canova 1,*, Giorgio Oliva 2, Matteo Beretta 1 and Domenico Dalessandri 2

1 Department of Medical and Surgical Specialties, Postgraduate Orthodontic School, Radiological Sciences and Public Health, University of Brescia, Piazzale Spedali Civili 1, 25123 Brescia, Italy
2 Department of Medical and Surgical Specialties, School of Dentistry, Radiological Sciences and Public Health, University of Brescia, Piazzale Spedali Civili 1, 25123 Brescia, Italy
* Corresponding author

Abstract: Among the innovations that have changed modern orthodontics, the introduction of new digital technologies in daily clinical practice has had a major impact, in particular the use of 3D models of dental arches. The possibility for direct 3D capture of arches using intraoral scanners has brought many clinicians closer to the digital world. The digital revolution of orthodontic practice requires both hardware components and dedicated software for the analysis of STL models and all other files generated by the digital workflow. However, there are some negative aspects, including the need for the clinician and technicians to learn how to use new software. In this context, we can distinguish two main software types: dedicated software (i.e., developed by orthodontic companies) and open-source software. Dedicated software tends to have a much more user-friendly interface, and to be easier to use and more intuitive, because it is designed and developed for a non-expert user, but very high rental or purchase costs are an issue. Therefore, younger clinicians with more extensive digital skills have begun to look with increasing interest at open-source software.

Syllabus for the Bachelor in Animation

UNIVERSITY OF MUMBAI'S GARWARE INSTITUTE OF CAREER EDUCATION & DEVELOPMENT

Syllabus for the Bachelor in Animation. Credit Based Semester and Grading System with effect from the Academic Year 2017-2018. AC 11-05-2017, Item No.

Syllabus for Approval

| Sr. No. | Heading | Particulars |
|---|---|---|
| 1 | Title of the Course | Bachelor in Animation |
| 2 | Eligibility for Admission | 10+2 pass with minimum 45% marks. Admissions on the basis of Written Test & Interview. |
| 3 | Passing Marks | 50% passing marks |
| 4 | Ordinances / Regulations (if any) | |
| 5 | No. of Years / Semesters | Three years full time / 6 semesters |
| 6 | Level | Bachelor |
| 7 | Pattern | Yearly / Semester |
| 8 | Status | New |
| 9 | To be implemented from Academic Year | From academic year 2017-18 |

Date: 11/05/2017. Signature: Dr. Anil Karnik, I/C. Director, Garware Institute of Career Education & Development

INTRODUCTION

A sequence of images that creates the illusion of a moving object is termed animation. India is one of the most preferred outsourcing countries. We not only provide outsourcing services; we are also creators of original animation. Some of the popular original contents are Chota Bheem, Little Krishna, Delhi Safari, Arjun and Road Side Romeo. Animation is a combination of entertainment and technology. It is composed of design, drawing, layout and production of graphically rich multimedia clips. Time and space are important in animation. Those who excel in drawing and creativity can choose animation as their career. An animator's job is to analyze the script thoroughly and get into the skin of the character. Creating the idea, storyboard, character design and backgrounds, and then using technical methodology to create visually stunning short movies, are the ideal steps in making a successful animation feature.