A Template Based Voice Trigger System Using Bhattacharyya Edit Distance

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Opta Map Algemeen

Marktanalyse Omroep Delta N.V. - Ontwerpbesluit - (OPENBARE VERSIE) (VOORONTWERP) 6 augustus 2008 Ontwerpbesluitbetreffende het opleggenvan verplichtingenvoor ondernemingendie beschikkenover een aanmerkelijkemarktmacht als bedoeld in hoofdstuk6A van de Telecommunicatiewet. Onafhankelijke Post en Telecommunicatie Autoriteit OPENBAAR Marktanalyse Omroep Analyse van de wholesalemarkt voor doorgifte van rtv-signalen en het op wholesaleniveau leveren van de aansluiting op een omroeptransmissieplatform in het verzorgingsgebied van DELTA – Ontwerpbesluit – (voorontwerp) Ontwerpbesluit betreffende het opleggen van verplichtingen voor ondernemingen die beschikken over een aanmerkelijke marktmacht als bedoeld in hoofdstuk 6A van de Telecommunicatiewet 6 augustus 2008 OPTA/AM/2008/201542 OPENBARE VERSIE Openbare versie Inhoudsopgave 1 Inleiding en samenvatting........................................................................................................... 6 1.1 Inleiding................................................................................................................................. 6 1.2 Hoofdpunten van het besluit..................................................................................................6 1.3 Verplichtingen op de wholesalemarkt.................................................................................... 7 1.4 Geen veranderingen ten opzichte van 2006.......................................................................... 8 1.5 Advies NMa.......................................................................................................................... -

Rp. 149.000,- Rp

Indovision Basic Packages SUPER GALAXY GALAXY VENUS MARS Rp. 249.000,- Rp. 179.000,- Rp. 149.000,- Rp. 149.000,- Animax Animax Animax Animax AXN AXN AXN AXN BeTV BeTV BeTV BeTV Channel 8i Channel 8i Channel 8i Channel 8i E! Entertainment E! Entertainment E! Entertainment E! Entertainment FOX FOX FOX FOX FOXCrime FOXCrime FOXCrime FOXCrime FX FX FX FX Kix Kix Kix Kix MNC Comedy MNC Comedy MNC Comedy MNC Comedy MNC Entertainment MNC Entertainment MNC Entertainment MNC Entertainment One Channel One Channel One Channel One Channel Sony Entertainment Television Sony Entertainment Television Sony Entertainment Television Sony Entertainment Television STAR World STAR World STAR World STAR World Syfy Universal Syfy Universal Syfy Universal Syfy Universal Thrill Thrill Thrill Thrill Universal Channel Universal Channel Universal Channel Universal Channel WarnerTV WarnerTV WarnerTV WarnerTV Al Jazeera English Al Jazeera English Al Jazeera English Al Jazeera English BBC World News BBC World News BBC World News BBC World News Bloomberg Bloomberg Bloomberg Bloomberg Channel NewsAsia Channel NewsAsia Channel NewsAsia Channel NewsAsia CNBC Asia CNBC Asia CNBC Asia CNBC Asia CNN International CNN International CNN International CNN International Euronews Euronews Euronews Euronews Fox News Fox News Fox News Fox News MNC Business MNC Business MNC Business MNC Business MNC News MNC News MNC News MNC News Russia Today Russia Today Russia Today Russia Today Sky News Sky News Sky News Sky News BabyTV BabyTV BabyTV BabyTV Boomerang Boomerang Boomerang Boomerang -

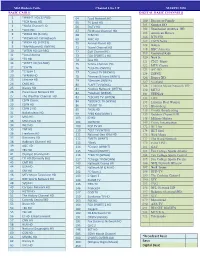

2020 March Channel Line up with Pricing Color

B is Mid-Hudson Cable Channel Line UP MARCH 2020 BASIC CABLE DIGITAL BASIC CHANNELS 2 *WMHT HD (17 PBS) 64 Food Network HD 100 Discovery Family 3 *FOX News HD 65 TV Land HD 101 Science HD 4 *NASA Channel HD 66 TruTV HD 102 Destination America HD 5 *QVC HD 67 FX Movie Channe l HD 105 American Heroes 6 *WRGB HD (6-CBS) 68 TCM HD 106 BTN HD 7 *WCWN HD CW Network 69 AMC HD 107 ESPN News 8 *WXXA HD (FOX23) 70 Animal Planet HD 108 Babytv 9 *My4AlbanyHD (WNYA) 71 Travel Channel HD 118 BBC America 10 *WTEN HD (10-ABC) 72 Golf Channel HD 119 Universal Kids 11 *Local Access 73 FOX SPORTS 1 HD 12 *FX HD 120 Nick Jr. 74 fuse HD 121 CMT Music 13 *WNYT HD (13-NBC) 75 Tennis Channel HD 122 MTV Classic 17 *EWTN 76 *LIGHTtv (WNYA) 123 IFC HD 19 *C-Span 1 77 *Comet TV (WCWN) 124 ESPNU 20 *WRNN HD 78 *Heroes & Icons (WNYT) 126 Disney XD 23 Lifetime HD 79 *Decades (WNYA) 127 Viceland 24 CNBC HD 80 *LAFF TV (WXXA) 128 Lifetime Movie Network HD 25 Disney HD 81 *Justice Network (WTEN) 130 MTV2 26 Paramount Network HD 82 *Stadium (WRGB) 131 TEENick 27 The Weather Channel HD 83 *ESCAPE TV (WTEN) 132 LIFE 28 ESPN Classic 84 *BOUNCE TV (WXXA) 133 Lifetime Real Women 29 ESPN HD 86 *START TV 135 Bloomberg 30 ESPN 2 HD 95 *HSN HD 138 Trinity Broadcasting 31 Nickelodeon HD 99 *PBS Kids(WMHT) 139 Outdoor Channel HD 32 MSG HD 103 ID HD 148 Military History 33 MSG PLUS HD 104 OWN HD 149 Crime Investigation 34 WE! HD 109 POP TV HD 172 BET her 35 TNT HD 110 *GET TV (WTEN) 174 BET Soul 36 Freeform HD 111 National Geo Wild HD 175 Nick Music 37 Discovery HD 112 *METV (WNYT) -

Annex 2: Providers Required to Respond (Red Indicates Those Who Did Not Respond Within the Required Timeframe)

Video on demand access services report 2016 Annex 2: Providers required to respond (red indicates those who did not respond within the required timeframe) Provider Service(s) AETN UK A&E Networks UK Channel 4 Television Corp All4 Amazon Instant Video Amazon Instant Video AMC Networks Programme AMC Channel Services Ltd AMC Networks International AMC/MGM/Extreme Sports Channels Broadcasting Ltd AXN Northern Europe Ltd ANIMAX (Germany) Arsenal Broadband Ltd Arsenal Player Tinizine Ltd Azoomee Barcroft TV (Barcroft Media) Barcroft TV Bay TV Liverpool Ltd Bay TV Liverpool BBC Worldwide Ltd BBC Worldwide British Film Institute BFI Player Blinkbox Entertainment Ltd BlinkBox British Sign Language Broadcasting BSL Zone Player Trust BT PLC BT TV (BT Vision, BT Sport) Cambridge TV Productions Ltd Cambridge TV Turner Broadcasting System Cartoon Network, Boomerang, Cartoonito, CNN, Europe Ltd Adult Swim, TNT, Boing, TCM Cinema CBS AMC Networks EMEA CBS Reality, CBS Drama, CBS Action, Channels Partnership CBS Europe CBS AMC Networks UK CBS Reality, CBS Drama, CBS Action, Channels Partnership Horror Channel Estuary TV CIC Ltd Channel 7 Chelsea Football Club Chelsea TV Online LocalBuzz Media Networks chizwickbuzz.net Chrominance Television Chrominance Television Cirkus Ltd Cirkus Classical TV Ltd Classical TV Paramount UK Partnership Comedy Central Community Channel Community Channel Curzon Cinemas Ltd Curzon Home Cinema Channel 5 Broadcasting Ltd Demand5 Digitaltheatre.com Ltd www.digitaltheatre.com Discovery Corporate Services Discovery Services Play -

Optik TV Channel Listing Guide 2020

Optik TV ® Channel Guide Essentials Fort Grande Medicine Vancouver/ Kelowna/ Prince Dawson Victoria/ Campbell Essential Channels Call Sign Edmonton Lloydminster Red Deer Calgary Lethbridge Kamloops Quesnel Cranbrook McMurray Prairie Hat Whistler Vernon George Creek Nanaimo River ABC Seattle KOMODT 131 131 131 131 131 131 131 131 131 131 131 131 131 131 131 131 131 Alberta Assembly TV ABLEG 843 843 843 843 843 843 843 843 ● ● ● ● ● ● ● ● ● AMI-audio* AMIPAUDIO 889 889 889 889 889 889 889 889 889 889 889 889 889 889 889 889 889 AMI-télé* AMITL 2288 2288 2288 2288 2288 2288 2288 2288 2288 2288 2288 2288 2288 2288 2288 2288 2288 AMI-tv* AMIW 888 888 888 888 888 888 888 888 888 888 888 888 888 888 888 888 888 APTN (West)* ATPNP 9125 9125 9125 9125 9125 9125 9125 9125 9125 9125 9125 9125 9125 9125 9125 9125 — APTN HD* APTNHD 125 125 125 125 125 125 125 125 125 125 125 125 125 125 125 125 — BC Legislative TV* BCLEG — — — — — — — — 843 843 843 843 843 843 843 843 843 CBC Calgary* CBRTDT ● ● ● ● ● 100 100 100 ● ● ● ● ● ● ● ● ● CBC Edmonton* CBXTDT 100 100 100 100 100 ● ● ● ● ● ● ● ● ● ● ● ● CBC News Network CBNEWHD 800 800 800 800 800 800 800 800 800 800 800 800 800 800 800 800 800 CBC Vancouver* CBUTDT ● ● ● ● ● ● ● ● 100 100 100 100 100 100 100 100 100 CBS Seattle KIRODT 133 133 133 133 133 133 133 133 133 133 133 133 133 133 133 133 133 CHEK* CHEKDT — — — — — — — — 121 121 121 121 121 121 121 121 121 Citytv Calgary* CKALDT ● ● ● ● ● 106 106 106 ● ● ● ● ● ● ● ● — Citytv Edmonton* CKEMDT 106 106 106 106 106 ● ● ● ● ● ● ● ● ● ● ● — Citytv Vancouver* -

Ziggo CI+ Module Installeren

Ziggo CI+ module. Het installeren. Binnen 30 minuten geïnstalleerd. 2 Inhoud. Inhoud van het installatiepakket pag. 4 x HET AANSLUITEN 1 Verwijder de huidige coaxkabel pag. 7 2 Aansluiten nieuwe coaxkabel pag. 8 # DIGITALE ZENDERS INSTELLEN 3a Zoek de digitale zenders pag. 10 3b Zenders instellen op uw Bang & Olufsen-tv pag. 11 3c Zenders instellen op uw LG-tv pag. 12 3d Zenders instellen op uw Loewe-tv pag. 13 3e Zenders instellen op uw Panasonic-tv pag. 14 3f Zenders instellen op uw Philips-tv pag. 15 3g Zenders instellen op uw Salora-tv pag. 16 3h Zenders instellen op uw Samsung-tv pag. 17 3i Zenders instellen op uw Sharp-tv (oud model) pag. 18 3j Zenders instellen op uw Sharp-tv (nieuw model) pag. 19 3k Zenders instellen op uw Sony-tv pag. 20 3l Zenders instellen op uw Toshiba-tv pag. 21 G CI+ MODULE PLAATSEN 4 Plaatsen van de CI+ module pag. 23 5 De zenders worden geïnstalleerd pag. 24 A MEER INFORMATIE Hulp bij installatie pag. 27 Veelgestelde vragen pag. 28 Storingen pag. 30 Zenderoverzicht pag. 32 3 Inhoud van het installatiepakket. Tijdens uw bestelling is aangegeven dat de CI+ module alleen werkt als u een geschikt tv-toestel met de juiste software heeft. Weet u niet zeker of uw tv geschikt is, dan kunt u dit op ziggo.nl/ciplushulp controleren. Staat uw toestel hier niet bij, neem dan contact op met onze klantenservice om de CI+ module te retourneren. Bel 0900-1884 (normaal tarief) of 1200 (gratis met een Ziggo lijn). -

E02630506 Fiber Creative Templates Q2 2021 Spring Channel

Channel Listings for Atlanta All channels available in HD unless otherwise noted. Download the latest version at SD Channel available in SD only google.com/fiber/channels ES Spanish language channel As of Summer 2021, channels and channel listings are subject to change. Local A—C ESPN Deportes ES 215 National Geographic 327 ESPNews 211 Channel C-Span 131 A&E 298 ESPNU 213 NBC Sports Network 203 ES C-Span 2 132 ACC Network 221 EWTN 456 NBC Universo 487 C-Span 3 133 AMC 288 EWTN en Espanol ES 497 NewsNation 303 Clayton County 144 American Heroes 340 NFL Network 219 SD Food Network 392 Access TV Channel SD FOX Business News 120 Nick2 422 COBB TV 140 Animal Planet 333 ES Nickelodeon 421 FOX Deportes 470 Fulton County Schools 145 Bally Sports South 204 Nick Jr. 425 SD FOX News Channel 119 TV (FCSTV) Bally Sports Southeast 205 SD FOX Sports 1 208 Nick Music 362 Fulton Government TV 141 BBC America 287 FOX Sports 2 209 Nicktoons 423 HSN 23 BBC World News 112 Freeform 286 HSN2 24 BET 355 O—T Fusion 105 NASA 321 BET Gospel SD 378 FX 282 QVC 25 BET Her SD 356 Olympic Channel 602 FX Movie Channel 281 QVC2 26 BET Jams SD 363 OWN: Oprah Winfrey 334 FXX 283 TV24 143 BET Soul SD 369 Oxygen 404 WAGADT (FOX) 5 FYI 299 Boomerang 431 Paramount Network 341 SD WAGADT2 (Movies!) 72 GAC: Great American 373 SD Bravo 296 Country POSITIV TV 453 WAGADT3 (Buzzr) SD 73 BTN2 623 Galavisión ES 467 Science Channel 331 WATCDT SD 2 BTN3 624 Golf Channel 249 SEC Network 216 WATCDT2 (WATC TOO) SD 83 BTN4 625 Hallmark Channel 291 SEC Overflow 617 WATLDT (MyNetworkTV) 36 SD -

1-2-3 TV 24 Horas 24 Kitchen 24 Kitchen HD 3Sat 7/8TV

1-2-3 TV 24 Horas 24 kitchen 24 kitchen HD 3sat 7/8TV Al Jazeera 7/8TV HD Agro TV Alfa TV Animal Planet Animal Planet HD Balkans HD Auto Motor ARD “Das Erste” ARTE GEIE Auto Motor Sport AXN AXN Black Sport HD AXN White B1B Action B1B Action HD BabyTv Balkanika Music Balkanika Music HD Bloomberg TV BG Music BG Music HD Black Diamondz HD BNT 1 BNT 1 -1ч Bulgaria BNT 1 -2ч. BNT 1 HD BNT 2 BNT 2 HD BNT 3 HD BNT 4 BNT 4 HD Bober TV Box TV BSTV BSTV HD bTV bTV -1ч. bTV -2ч. bTV Action bTV Action HD bTV Cinema bTV Cinema HD bTV Comedy bTV Comedy HD bTV HD bTV Lady bTV Lady HD Bulgare ` Bulgare HD Bulgaria On Air CCTV-4 CGTN CGTN - Español CGTN - Français CGTN – Documentary Chasse & Peche Chillayo Cinemania العرب - CGTN - Русский CGTN Cinemania HD City TV Classical Harmony HD Code Fashion TV HD Code Health Code Health HD Cubayo HD Detskiy Mir Diema Diema Family Diema Sport Diema Sport 2 Diema Sport 2 HD Diema Sport HD Discovery HD Discovery Science Discovery Science HD Discovery SD Disney Channel Disney Junior DM SAT Dom Kino Dom Kino Premium DSTV ECTV ECTV for kids HD EDGE Sport ekids English Club HD Epic Drama ERT World Eurosport Eurosport 2 Eurosport 2 HD Eurosport HD Evrokom Evrokom HD FEN Folk FEN Folk HD FEN TV FEN TV HD Flame HD Folklor TV Folklor TV HD Folx TV Food Netwоrk Food Netwоrk HD Fox Fox Crime Fox Crime HD Fox HD Fox Life Fox Life HD France 2 HD France 3 HD France 4 HD France 5 HD Galaxy HD Health & Wellness HD Hit Mix HD Homey's HD HRT 4 HD HRT INT ID Xtra ID Xtra HD Insight HD Kabel 1 (Kabel eins) Kabel Eins Doku Kanal 4 Kanal 4 HD Karusel KCN KCN 2 Music KCN 3 Svet plus KiKa KiKa HD Kino Nova Lolly Kids HD Luxe and Life HD M2 HD Magic TV MAX Sport 1 MAX Sport 1 HD MAX Sport 2 MAX Sport 2 HD MAX Sport 3 MAX Sport 3 HD MAX Sport 4 MAX Sport 4 HD Moviestar Moviestar HD MTV Hits Muzika N24 (WELT) N24 DOKU (WELT) Nache Lubimoe Kino National Geo National National Geo Wild National Geographic Chaneel Nick Jr Wild HD Geographic HD Nickelodeon Nova Nova -1ч. -

Zenderlijst Digitaal

Extra Alles in 1 Extra Zender Kanaal Zender Kanaal Nick Jr. 27 BBC Entertainment 46 Cartoon Network 28 AMC 69 Boomerang 29 NGC Wild 73 BabyTV# 31 Discovery World 75 Pebble TV 32 Discovery Science 76 CBBC 43 RTL Lounge 78 BBC 4 / Cbeebies 44 RTL Crime 79 BBC Entertainment 46 Horse & Country TV 80 RTL 2 +HbbTV 58 Mezzo 89 Kabel 1 +HbbTV 59 Eurosport 2 +HD 92 Vox +HbbTV 62 SLAM! TV 135 AMC 69 VH-1 Classic 138 Animal Planet 72 BBC 1 HD 241 NGC Wild 73 BBC 2 HD 242 Discovery World 75 CBBC HD 243 Discovery Science 76 BBC 4 / Cbeebies HD 244 RTL Lounge 78 Animal Planet HD 272 RTL Crime 79 Travel Channel HD 281 Horse & Country TV 80 iConcerts HD 292 Travel Channel 81 Stingray dJazz HD 293 Fashion TV# 82 NPO 101 502 Mezzo 89 NPO Cultura 503 Stingray Brava 90 NPO Zapp Xtra 517 Eurosport 2 +HD 92 FOX Sports Eredivisie 1 +HD 541 Motors TV HD 93 FOX Sports Eredivisie 3 +HD 543 MTV Brandnew 133 FOX Sports Eredivisie 5 +HD 545 SLAM! TV 135 VH-1 Classic 138 TV 538 139 Playboy TV* 161 Adult Channel* 162 Brazzers TV* 163 NPO 101 502 NPO Cultura 503 NPO Zapp Xtra 517 Instellen van de ontvanger De door u aangeschafte digitale ontvanger of televisie moet worden ingesteld om de digitale zenders van Kabelnet Veendam te kunnen * Deze zenders kunnen op verzoek geblokkeerd worden ontvangen. # Kan alleen met een HD-ontvanger bekeken worden In het menu van de digitale ontvanger of televisie voert u de volgende codes in: Frequentie : 234.000 Net ID : 9641 Qamformaat : Qam 64 Symboolrate (K-Baud) : 6875 Basispakket TV Internationaal Film Zender Kanaal Zender Kanaal -

Packages & Channel Lineup

™ ™ ENTERTAINMENT CHOICE ULTIMATE PREMIER PACKAGES & CHANNEL LINEUP ESNE3 456 • • • • Effective 6/17/21 ESPN 206 • • • • ESPN College Extra2 (c only) (Games only) 788-798 • ESPN2 209 • • • • • ENTERTAINMENT • ULTIMATE ESPNEWS 207 • • • • CHOICE™ • PREMIER™ ESPNU 208 • • • EWTN 370 • • • • FLIX® 556 • FM2 (c only) 386 • • Food Network 231 • • • • ™ ™ Fox Business Network 359 • • • • Fox News Channel 360 • • • • ENTERTAINMENT CHOICE ULTIMATE PREMIER FOX Sports 1 219 • • • • A Wealth of Entertainment 387 • • • FOX Sports 2 618 • • A&E 265 • • • • Free Speech TV3 348 • • • • ACC Network 612 • • • Freeform 311 • • • • AccuWeather 361 • • • • Fuse 339 • • • ActionMAX2 (c only) 519 • FX 248 • • • • AMC 254 • • • • FX Movie 258 • • American Heroes Channel 287 • • FXX 259 • • • • Animal Planet 282 • • • • fyi, 266 • • ASPiRE2 (HD only) 381 • • Galavisión 404 • • • • AXS TV2 (HD only) 340 • • • • GEB America3 363 • • • • BabyFirst TV3 293 • • • • GOD TV3 365 • • • • BBC America 264 • • • • Golf Channel 218 • • 2 c BBC World News ( only) 346 • • Great American Country (GAC) 326 • • BET 329 • • • • GSN 233 • • • BET HER 330 • • Hallmark Channel 312 • • • • BET West HD2 (c only) 329-1 2 • • • • Hallmark Movies & Mysteries (c only) 565 • • Big Ten Network 610 2 • • • HBO Comedy HD (c only) 506 • 2 Black News Channel (c only) 342 • • • • HBO East 501 • Bloomberg TV 353 • • • • HBO Family East 507 • Boomerang 298 • • • • HBO Family West 508 • Bravo 237 • • • • HBO Latino3 511 • BYUtv 374 • • • • HBO Signature 503 • C-SPAN2 351 • • • • HBO West 504 • -

Choose the Channels You Want with Fibe TV

Choose the channels you want with Fibe TV. Add individual channels to your Starter or Basic package. Channel Name Channel Group Price Channel Name Channel Group Price Channel Name Channel Group Price A&E PrimeTime 1 $ 2.99 CMT PrimeTime 2 $ 4.00 DistracTV Apps on TV $ 4.99 South Asia Premier Aapka Colors $ 6.00 CNBC News $ 4.00 DIY Network Explore $ 1.99 Package CNN PrimeTime 1 $ 7.00 documentary Cinema $ 1.99 ABC Seattle Time Shift West $ 2.99 Comedy Gold Replay $ 1.99 Dorcel TV More Adult $21.99 ABC Spark Movie Picks $ 1.99 Cooking Movie Picks $ 1.99 DTOUR PrimeTime 2 $ 1.99 Action Movie Flicks $ 1.99 CosmoTV Life $ 1.99 DW (Deutsch+) German $ 5.99 AMC Movie Flicks $ 7.00 Cottage Life Places $ 1.99 E! Entertainment $ 6.00 American Heroes Channel Adventure $ 1.99 CP24 Information $ 8.00 ESPN Classic Sports Enthusiast $ 1.99 Animal Planet Kids Plus $ 1.99 Crave Apps on TV $ 7.99 EWTN Faith $ 2.99 A.Side TV Medley $ 1.99 Crave + Movies + HBO Crave + Movies + $18/6 Pack Exxxtasy Adult $21.99 ATN South Asia Premier $16.99 HBO Fairchild Television Chinese $19.99 Package Crime + Investigation Replay $ 1.99 B4U Movies South Asia Package $ 6.00 Family Channel (East/West) Family $ 2.99 CTV BC Time Shift West $ 2.99 BabyTV Kids Plus $ 1.99 Family Jr. Family $ 2.99 CTV Calgary Time Shift West $ 2.99 BBC Canada Places $ 1.99 Fashion Television Channel Lifestyle $ 1.99 CTV Halifax Time Shift East $ 2.99 BBC Earth HD HiFi $ 1.99 Fight Network Sports Fans $ 1.99 CTV Kitchener Time Shift East $ 2.99 BBC World News Places $ 1.99 Food Network Life $ 1.99 CTV Moncton Time Shift East $ 2.99 beIN Sports Soccer & Wrestling $14.99 Fox News News $ 1.99 CTV Montreal Time Shift East $ 2.99 BET Medley $ 2.99 Fox Seattle Time Shift West $ 2.99 CTV News Channel News $ 2. -

Kanalen Overzicht

HUTV is now upgraded to Sterling TV for better server and performance! Channel List Updated September 16, 2020 Channel Channel List Number 1 A&E 2 ABC News 3 ABC WEST 4 AMC 5 American Heroes Channel 6 ANIMAL PLANET WEST 7 Animal Planet 8 AXS TV 9 ABC EAST 10 AWE 11 BBC AMERICA 12 BBC World News 13 BET 14 Boomerang 15 Bravo 16 Cartoon Network East 17 Cartoon Network West 18 CBS EAST 19 CBS WEST 20 Cheddar Business 21 Cheddar News 22 CINE SONY 23 CINEMAX 5STAR MAX 24 CINEMAX ACTIONMAX 25 Cinemax East 26 CINEMAX MORE MAX 27 CINEMAX OUTERMAX 28 CINEMAX THRILLERMAX 29 Cleo TV 30 CMT 31 CNBC World 32 CNBC 33 CNN en Espa?ol 34 CNN 35 COMEDY CENTRAL 36 Comet 37 Cooking Channel 38 COZI TV 39 C-SPAN 2 40 C-SPAN 3 41 C-SPAN 42 Destination America 43 DISCOVERY CHANNEL WEST 44 DISCOVERY CHANNEL 45 Discovery en Espanol HD 46 Discovery Familia HD 47 DISCOVERY FAMILY 48 Discovery Life Channel 49 DISCOVERY SCIENCE 50 Discovery 51 DISNEY CHANNEL WEST 52 Disney Channel 53 DISNEY JR 54 Disney Junior 55 Disney XD 56 DIY Network 57 DOG TV 58 E! East 59 E! West 60 EL REY NETWORK 61 EPIX 2 62 EPIX Hits 63 Epix 64 Food Network 65 FOX Business Network 66 FOX EAST 67 Fox Life 68 FOX News Channel 69 FOX WEST 70 FREE SPEECH TV 71 Freeform 72 Fusion 73 FX East 74 FX MOVIE 75 FXM 76 FXX East 77 FYI 78 GAME SHOW NETWORK 79 GINX eSPORTS 80 Hallmark Channel 81 Hallmark Drama 82 Hallmark Movies 83 HBO 2 84 HBO COMEDY 85 HBO East 86 HBO FAMILY 87 HBO SIGNATURE 88 HGTV East 89 HGTV WEST 90 HISTORY 91 HLN 92 IFC 93 Investigation Discovery 94 ION TV 95 Law and Crime 96 LIFETIME