Semantics-Based Summarization of Entities in Knowledge Graphs

Total pages: 16

File type: pdf, Size: 1020Kb


Recommended publications


Be Sure to MW-Lv/Ilci

Page 12, The Canadian Statesman, Bowmanville, April 29, 1998, Section Two. From page 1: [...]bolic vehicle taking its riders into other existences. In the Attic: Gwen MacGregor’s installation in the attic uses pink insulation, heavy plastic and a very slow-motion video of windmills along with audio of a distant train. The effect is to link the physical elements of the mill with the artist’s own memories growing up on the prairies. Train of Thought: Toronto area artists Millie Chen and Evelyn Michalofski collaborated on the Millery, millery, dustipoll installation, which takes its title from an old popular rhyme. It is a five-minute video projected onto three walls in a space where the Soper [...] promised view from the window." Fitzpatrick points to a tiny figure peering out of an upstairs window on an old photograph. He echoes that image in a new photograph, one he took looking out of the same window with reflection in the glass. "I was playing with the idea of absence and presence and putting shifting images into the piece." In her role as curator for the VAC, Rodgers attempts to balance the expectations of serious artists in the community with the demands of the general population. “There has to be a cultural and intellectual feeding," she says, and at the same time, we have to continue to offer the [...] Teddy Bear Club Holds Workshop: Embroidered noses, button eyes, fuzzy ears, and adorable faces are the beginnings of a beautiful teddy bear. So say the members of the Bowmanville Teddy Bear Club.

Introduction to the Complete Directory to Prime Time Network and Cable TV Shows

INTRODUCTION. In the following pages we present, in a single volume, a lifetime (or several lifetimes) of television series, from the brash new medium of the 1940s to the explosion of choice in the 2000s. More than 6,500 series can be found here, from I Love Lucy to Everybody Loves Raymond, The Arthur Murray [Dance] Party to Dancing with the Stars, E/R to ER (both with George Clooney!), Lost in Space to Lost on Earth to Lost Civilizations to simply Lost. Since the listings are alphabetical, Milton Berle and The Mind of Mencia are next-door neighbors, as are Gilligan’s Island and The Gilmore Girls. There’s also proof that good ideas don’t fade away, they just keep coming back in new duds. American Idol, meet Arthur Godfrey’s Talent Scouts. We both work, or have worked, in the TV industry, care about its history, and have [...] [...]eral headings. For example, newscasts are summarized under News, movie series under Movies and sports coverage under Football, Boxing, Wrestling, etc. All other series are arranged by title in alphabetical order. There is a comprehensive index at the back to every cast member, plus appendixes showing annual network schedules at a glance, the top 30 rated series each season, Emmy Awards and other information. Network series are defined as those fed out by broadcast or cable networks and seen simultaneously across most of the country. Broadcast networks covered are ABC, CBS, NBC, Fox, CW, MyNetworkTV, ION (formerly PAX) and the dear, departed DuMont, UPN and WB. Original cable series are listed in two different ways.

Click to Download

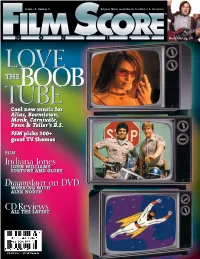

Volume 8, Number 8. Original Music Soundtracks for Movies & Television. Rock On! pg. 10. Love the Boob Tube: cool new music for Alias, Boomtown, Monk, Carnivàle, Penn & Teller’s B.S.; FSM picks 100+ great TV themes. Plus Indiana Jones: John Williams’ For Fortune and Glory; Dragonslayer on DVD; Working with Alex North; CD Reviews: All the Latest. $4.95 U.S. • $5.95 Canada. CONTENTS, SEPTEMBER 2003. COVER STORY: 20 We Love the Boob Tube. Video store geeks shouldn’t have all the fun; that’s why we decided to gather the staff picks for our by-no-means-complete list of favorite TV themes. By the FSM staff. 24 Still Kicking. Think there’s no more good music being written for television? Think again. We talk to five composers who are taking on tough deadlines and tight budgets, and still coming up with interesting scores. By Jeff Bond. 24 Alias; 25 Penn & Teller’s Bullshit!; 27 Malcolm in the Middle; 28 Carnivale & Monk; 29 Boomtown. FEATURES: 12 Fortune and Glory. The man in the hat is back: the Indiana Jones trilogy has been issued on DVD! To commemorate this event, we’re [...] DEPARTMENTS: 2 Editorial: The Man From F.S.M. 4 News: Music Swappers, the Emmys and more. 5 Record Label Round-up: What’s on the way. 5 Now Playing: Movies and CDs in release. 7 Upcoming Film Assignments: Who’s writing what for whom. 8 The Shopping List: Recent releases worth a second look. 8 Pukas: The Appleseed Saga, Part 1. 9 Mail Bag: The Last Bond Letter Ever. Photo captions: 12 The Man in the hat. 24 The girl in the blue dress.

Searching for Truth in the Post-Truth Era: an Examination of Detective Fiction from Poe to Present

WATSON, DAVID RIDDLE, Ph.D. Searching for Truth in the Post-Truth Era: An Examination of Detective Fiction from Poe to Present. (2019) Directed by Dr. Christian Moraru. 176 pp. During the 2016 election, terms such as “fake news” and “post-truth” became commonplace, as did talk of “two Americas,” suggesting that truth and reality were relative to one’s perspective. Trust in foundational institutions like church, school, and government has become shaky at best, leading many scholars to believe we have entered a post-truth age. In my dissertation, I attempt to tackle the question of truth by examining people whose job it is to uncover the truth: detectives. I trace a philosophical history of detective novels through three different time periods described as modern, postmodern, and contemporary in order to argue that truth is located in intersubjectivity, explaining that successful detectives, through their ability to identify another’s perspectives, can discover motive and belief in order to bring cases to closure, where others cannot. In the modern period, I examine ways in which Edgar Allan Poe’s detective C. Auguste Dupin and Arthur Conan Doyle’s Sherlock Holmes solve mysteries by assuming a rational world where everything is neatly ordered. This allows truth to be a function of rationality and solvable by applying logic. Following this analysis, I turn to the hard-boiled novels of Raymond Chandler and Dashiell Hammett to examine the way order and meaning became increasingly elusive after two world wars and the atomic bomb, leading to an existential crisis and the postmodern era.

Tomasz Skupień (Student ID No. 1055180)

Fan studies can be divided into three main currents: the deviance wave, the resistance wave, and the mainstream wave.[18] Each of them looked at the fan differently. In the deviance wave, whose beginning is linked to the first fan movements (the 1920s), the fan was treated as a deviant. He was regarded as a dangerous misfit with some kind of disorder (whether of personality or sexual). This was rooted in the attitude of scholars of the time toward mass culture (around which fans emerged). It was treated as something inferior, even warped. The fan was also seen as a puppet in the hands of the producer.[19] Matters looked entirely different during the resistance wave (which began in 1992). Now the fan was seen as a guerrilla in a fight against producers. What did this look like? The fan created his own texts and tried to interpret what he liked in his own way. He did not submit to the authority of producers and often came into conflict with them. The main tool he used became the Internet (still in a very fledgling form). The change in the academic approach was rooted, among other things, in the fact that many scholars were themselves coming out as fans at the time (Henry Jenkins, among others, coined the already mentioned term "aca-fan").[20] Today a third approach dominates: the mainstream wave. It began in 2006 (with the publication of Jenkins's book Convergence Culture). It can be characterized as a peaceful coexistence of fans and producers. The latter no longer want to force on fans how they should think. They are beginning to listen to them and often act on their advice.
The fan, in turn, is treated not as a passive individual but as one who is constantly creating something.[21] 1.1.2 Characteristics of the fan. The main characteristic of fans, according to Piotr Siuda, is that "the meanings of cultural messages are negotiated, interpreted and, as it were, produced anew by them"[22], and that "pleasure and entertainment are the most important category of reception" by which they are guided.[23]

Knight Rider 2008 the Complete Series Dvd

Knight rider 2008 the complete series dvd Knight Rider - The Complete Series DVD Knight Rider - Season Four DVD From to ; Knight Rider - Legacy; Knight Rider Commentary With. : Knight Rider: The Complete Series: David Hasselhoff, Edward Home Entertainment; DVD Release Date: October 21, ; Run Time: Find great deals on eBay for Knight Rider DVD in DVDs and Movies for DVD and Blu-ray Disc Knight Rider - The Complete Series (DVD, , Disc Set). Find great deals for Knight Rider - The Complete Series (DVD, , Disc Set). Shop with confidence on eBay! Knight Rider - The Complete Box Set ( Repackage) [DVD] [] DVD Episodes comprise; 'Knight Rider Feature Length Pilot', 'A Knight in Shining. Shop Knight Rider - The Complete Box Set [DVD]. Everyday low prices and Synopsis. Contains all four series of the hugely popular '80s show Knight Rider. Back in , Universal Studios first released Knight Rider - The Complete Series on DVD in a gift set which has been discontinued. Now Mill. The Series: In a young man named Michael Knight (played by a big Reviews» DVD Video Reviews» Knight Rider: The Complete Series. Knight Rider: The Complete Series. Universal // Unrated // October 21, Review: Knight Rider Series One DVD scripts had been requested by NBC, and by Oct 21st they had approved a full season order. Knight Rider: The Complete Series DVD was released on July 28, on a four disc set with bonus material. Highlights. Knight Rider-complete Series [dvd/16 Disc] (Mill Creek Entertainment). Read more. Video Release Date: Animated: Live- action. Episodes: 1. Knight Rider []: A Knight in Shining Armor (Season 1: Ep. -

Programming GUIDE : Full Length Episodes, Clips, and Feature Films

Watch your favorites. Anytime. For Free. PROGRAMMING GUIDE : Full Length Episodes, Clips, and Feature Films HULU TV SHOWS (in both full-length episodes or clips) include but are not limited to: 24 The Captain and Casey Show Galactica 1980 Caught On Tape Ghost Hunters 30 Days Celebrity Apprentice Ghost Hunters International 30 Rock Charlie’s Angels The Girls Next Door Chelsea Lately Chicago Hope Academy, The Hart to Hart Chuck Access Hollywood Hell’s Kitchen Conviction Adam-12 Heroes Cops The Addams Family Hey Paula! The Crow: Stairway To Heaven Airwolf Highlander Alfred Hitchcock Hour Hill Street Blues Alfred Hitchcock Presents The Dana Carvey Show House All-For-Nots Days of our Lives American Dad! Deadline I Dream of Jeannie American Gladiators Deal or No Deal Iconoclasts American Gothic Decision House In Living Color Amnesia The Dick Van Dyke Show Inside the Actors Studio Andy Barker, P.I. Dirt The Invisible Man Archie Bunker’s Place Doogie Howser, M.D. It Takes a Thief Are You Smarter Than A 5th Grader? Dr. 90210 It’s Always Sunny in Philadelphia Arrested Development Dr. Steve-O Dragnet Astro Boy Jack of All Trades Dream On The A-Team The Janice Dickinson Modeling Agency Dress My Nest Jerry Springer Babylon 5 John Doe Back To You E! News Now Johnny Sokko and His Flying Robot The Bad Girls Club Emergency! Journeyman Bad Girls Road Trip Equal Justice Eureka Barney Miller Kathy Griffin Exosquad Battlestar Galactica Keeping Up with The Kardashians Battlestar Galactica Classic King Of The Hill BCS NCAA Football Games Fame Kitchen Confidential Beauty and the Geek Family Guy Kitchen Nightmares Benson Fantasy Island Knight Rider Bewitched Firefly Kojak Big Ideas for a Small Planet Firsthand Kojak 2004 The Biggest Loser Flash Gordon K-Ville Bionic Woman Flipper The Bob Newhart Show Flipping Out L.A. -

The BG News September 4, 1998

Bowling Green State University, ScholarWorks@BGSU. BG News (Student Newspaper), University Publications, 9-4-1998. The BG News September 4, 1998. Bowling Green State University. Follow this and additional works at: https://scholarworks.bgsu.edu/bg-news Recommended Citation: Bowling Green State University, "The BG News September 4, 1998" (1998). BG News (Student Newspaper). 6358. https://scholarworks.bgsu.edu/bg-news/6358 This work is licensed under a Creative Commons Attribution-Noncommercial-No Derivative Works 4.0 License. This Article is brought to you for free and open access by the University Publications at ScholarWorks@BGSU. It has been accepted for inclusion in BG News (Student Newspaper) by an authorized administrator of ScholarWorks@BGSU. The BG News, Friday, Sept. 4, 1998. A daily independent student press. Volume 85, No. 9. Vandals strike cars in lot 9. Several cars parked in University lot 9 were broken into and stereo equipment was stolen. By BRANDI BARHITE, The BG News. Broken glass and missing stereo equipment brought some students out of bed early Thursday morning. Three Universi- [...] my resident adviser told me (the cars) were vandalized. I went to check and mine was one of them." Matz said his windows were broken out and his stereo and radio equipment were stolen. He said he noticed between eight and ten cars broken into. He said he does not believe the car break-ins had anything to do with the recent problems with parking, yet noted the suspects knew what they were doing. "I felt that the lot was safe because it was in a high-traffic area.

Trinity Tripod

Trinity Tripod. VOL. XCVI NO. 10. PUBLISHED BY THE STUDENTS OF TRINITY COLLEGE SINCE 1904. NOVEMBER 24, 1997. Academic Excellence Rewarded. Students Address Needs Of Homeless. BY RENAY SMALLCOMB, News Writer. The week of November 16 to 22 marked a joint initiative by ConnPIRG and the SGA to raise awareness and aid the homeless during National Hunger and Homelessness Week. Many Trinity students, faculty and staff united to show their support and raise awareness among the Trinity community through their participation in several events. This initiative began Wednesday night with two speakers, Melvin Smith and Leon Poventud, followed by a sleepout on the Cave Patio. As workers at the House of the Immaculate Conception Shelter, they discussed homelessness and their experiences working with the homeless. [...] including substance use and lack of education. Asked what the most difficult part of their job is, Poventud answered, "The repetition. Trying to help these people and seeing them resort to their old behavior. But if we can help one or two people change their lives, it's worth it. We certainly don't do it for money; we do it because we've been there and we want to give from our heart to help someone else." Smith added, "It's frustrating because they are eager to blame everyone else for their situation, even the people helping them. You have to find a trigger, something to give them hope and a reason to fight." Discussing the cycle of homelessness, Smith said, "Something usually causes someone to be homeless. It's a

Crazy Like A

[OC]T. 26-NOV. 1, 1997. THE DETROIT SUNDAY JOURNAL. VOL. 2 NO. 50. 75 CENTS. CONTINUING THE STRUGGLE FOR JUSTICE AND CONTRACTS. ©TDSJ. Crazy like a Fox: Murdoch-Ilitch power play squeezes out a cable sports competitor. By Jim McFarlin, Journal Television Writer. Analysis. For the past 13 years, sports fans in metro Detroit and outstate could bet their cable boxes on one thing: The majority of games played by their beloved Red Wings, Pistons and Tigers would eventually come to PASS. But Fox has raided Michigan’s cable TV corral, striking with all the suddenness and upheaval of its sly animal namesake. In less than two months, Fox Sports Detroit, the 10th and newest regional sports franchise in zillionaire Rupert Murdoch’s plan for world media domination, invaded the Detroit market, acquired the cable rights to Wings, Pistons and Tigers broadcasts and got the majority of area cable systems to carry their signal, effectively leaving PASS without a home or a home team to call its own. [...] NFL teams, have no cable affiliation.) As a result, PASS will pass away Friday, turning off its lights for the last time Halloween night. “It’s sad, but I think it’s just unfortunately an indication of where the regional sports network business is going,” says Jeff Genthner, outgoing PASS general manager. “For the last 17 years, regional sports networks have operated independently in their markets. Fox’s agenda is to own them all, rebrand them under the name Fox Sports and make a national service comprised of all the regional networks. That’s where the business is heading.”

Transforming to the Next Step Reality via Autonomous Cars

European Journal of Education Studies. ISSN: 2501-1111, ISSN-L: 2501-1111. Available on-line at: www.oapub.org/edu. DOI: 10.46827/ejes.v7i12.3399. Volume 7, Issue 12, 2020. CARS AS HEROES: TRANSFORMING TO THE NEXT STEP REALITY VIA AUTONOMOUS CARS. Nilüfer Pembecioğlu (Prof. Dr., Istanbul University, Faculty of Communication, Department of Radio, TV and Cinema, Turkey, orcid.org/0000-0001-7510-6529), Uğur Gündüz (Prof. Dr., Istanbul University, Faculty of Communication, Department of Journalism, Turkey, orcid.org/0000-0002-6138-6758). Abstract: The beginning of modern mankind is usually connected to the invention of the wheel, which made mobility possible. People have always wanted to be mobile through vehicles of different kinds. Over time, cars became not only a means of simple transportation but also a sign of hope, a symbol of status, a proof of wealth, etc. Different types, colors and styles were produced, and competition arose among car producers to develop talented cars as well as their advertisements. In these advertisements, not only the car itself but also the users of such heroic cars were promoted as outstanding personalities. These unique characters had a certain charm and persuasive power over ordinary people. Thus, how the advertisements of these new trends affect the new models and the desire for mobility is another question to be analyzed. The use of automobiles in films made some models and some styles very famous and created trends touching the minds or hearts of people. Some of these “automatic heroes” are discussed in this article. The paper aims to analyze how these autonomous cars are introduced to the masses, imposing premises and benefits, etc.

The Captain America Conundrum: Issues of Patriotism, Race, and Gender in Captain America Comic Books, 1941-2001

The Captain America Conundrum: Issues of Patriotism, Race, and Gender in Captain America Comic Books, 1941-2001 by Richard A. Hall A dissertation submitted to the Graduate Faculty of Auburn University in partial fulfillment of the requirements for the Degree of Doctor of Philosophy Auburn, Alabama August 6, 2011 Keywords: Captain America, comic books, patriotism, popular culture Copyright 2011 by Richard A. Hall Approved by Larry Gerber, Chair, Professor Emeritus of History Ruth Crocker, Professor of History David Carter, Associate Professor of History Abstract “The Captain America Conundrum: Issues of Patriotism, Race, and Gender in Captain America Comic Books, 1941-2001” represents a comprehensive examination of Captain America comic books as a primary source for the study of United States history from just prior to World War II to the aftermath of the terrorist attacks of September 11, 2001. Just as Time magazine or the New York Times newspaper could be used as primary sources to examine American society, mores, and culture, it is the argument of this study that comic books – heretofore considered primarily children’s literature – can also be used in this regard. Through the pages of this essentially monthly publication, accepted norms of contemporary American society can be seen, as well as (post-1960) attempts on the part of the writers and artists to influence those norms. Within the zeitgeist of American culture, the character of Captain America has been considered a representation of strong government and ardent conservatism. The reality, however, is that the character has become increasingly just left-of-center politically, promoting strong nationalism and American leadership alongside ideas of racial and gender equality.