Experiences with a User-Adaptive Assistant Agent

Total Pages: 16

File Type: PDF, Size: 1020 KB

Load more

Recommended publications

-

Investigation of the Biosynthesis of Bacterial Natural Products

Investigation of the biosynthesis of bacterial natural products Dissertation zur Erlangung des Doktorgrades der Naturwissenschaften vorgelegt beim Fachbereich für Biowissenschaften (15) der Johann Wolfgang Goethe-Universität in Frankfurt am Main von Sebastian Winfried Fuchs aus Hanau (2013) (D 30) vom Fachbereich für Biowissenschaften (15) der Johann Wolfgang Goethe-Universität als Dissertation angenommen. Dekanin: Professorin Anna Starzinski-Powitz Gutachter: Professor Dr. Helge B. Bode, Professor Dr. Eckhard Boles, Professor Dr. Christian Hertweck Datum der Disputation: 16.12.2013 ii Danksagung Danksagung Meinen Eltern und Großeltern möchte ich meinen herzlichen Dank für ihre Unterstützung ausdrücken. Herrn Professor Dr. Helge B. Bode und der gesamten Arbeitsgruppe danke ich für die überaus freundliche und motivierende Arbeitsatmosphäre, und die Unterstützung, die mir im Laufe der Zeit von Euch zugekommen ist. Herrn Professor Dr. Helge B. Bode möchte ich besonders für die Möglichkeit zur Bearbeitung der hierin beschriebenen Projekte danken. Herrn Professor Dr. Eckhard Boles danke ich für die Übernahme des Zweitgutachtens zu dieser Arbeit. Herrn Professor Dr. Michael Karas möchte ich für die Möglichkeit zur Nutzung der MALDI- Massenspektrometer danken. Herrn Dr. Thorsten Jaskolla möchte ich für seine freundliche Unterstützung danken, die mir das Feld der Massenspektrometrie näher gebracht hat. Weiterhin möchte ich meinen Freunden für unsere gemeinsamen Erlebnisse danken. iii Table of contents Table of contents Danksagung .............................................................................................................................. -

Recent Developments in Identification of Genuine Odor- and Taste-Active Compounds in Foods

Recent Developments in Identification of Genuine Odor- and Taste-Active Compounds in Foods Edited by Remedios Castro-Mejías and Enrique Durán-Guerrero Printed Edition of the Special Issue Published in Foods www.mdpi.com/journal/foods Recent Developments in Identification of Genuine Odor- and Taste-Active Compounds in Foods Recent Developments in Identification of Genuine Odor- and Taste-Active Compounds in Foods Editors Remedios Castro-Mej´ıas Enrique Dur´an-Guerrero MDPI Basel Beijing Wuhan Barcelona Belgrade Manchester Tokyo Cluj Tianjin • • • • • • • • • Editors Remedios Castro-Mej´ıas Enrique Duran-Guerrero´ Analytical Chemistry Analytical Chemistry Universidad de Cadiz´ Department Puerto Real University of Cadiz Spain Puerto Real Spain Editorial Office MDPI St. Alban-Anlage 66 4052 Basel, Switzerland This is a reprint of articles from the Special Issue published online in the open access journal Foods (ISSN 2304-8158) (available at: www.mdpi.com/journal/foods/special issues/Recent Developments Identification Genuine Odor- Taste-Active Compounds Foods). For citation purposes, cite each article independently as indicated on the article page online and as indicated below: LastName, A.A.; LastName, B.B.; LastName, C.C. Article Title. Journal Name Year, Volume Number, Page Range. ISBN 978-3-0365-1668-4 (Hbk) ISBN 978-3-0365-1667-7 (PDF) © 2021 by the authors. Articles in this book are Open Access and distributed under the Creative Commons Attribution (CC BY) license, which allows users to download, copy and build upon published articles, as long as the author and publisher are properly credited, which ensures maximum dissemination and a wider impact of our publications. The book as a whole is distributed by MDPI under the terms and conditions of the Creative Commons license CC BY-NC-ND. -

CPI Mini Product Catalog

PRODUCT CATALOG v.28 CPI WEBSITE Visit Chatsworth Products’ (CPI) website at chatsworth.com to find, filter and compare thousands of products, as well as view documentation, create a shopping cart and purchase from an authorized CPI partner. You will also find product configurators, selectors, estimators and downloadable design tools. Online Chat The CPI website now offers online chat for customers who need assistance while using our website. You will benefit from one-on-one, real-time interaction with CPI’s helpful employees. CPI Product Designer Many of our enclosures can be configured online using the CPI Product Designer. CPI Product Designer will generate bills of material, drawings, 3D models and sales documents automatically. Once the design is finished, you will receive a confirmation email with the product’s description, part number, and bill of material with pricing and related documents. Visit chatsworth.com/product-designer. Power Selector Visit selectapdu.com to select the best power product for your application by narrowing down options based on your requirements. You can compare several products at one time and then email or print the results. CPI Video Library Take advantage of the many videos on video.chatsworth.com. You’ll find helpful how-to videos, product feature videos and solution overviews. On-Demand Courses CPI offers courses that have been approved by BICSI and the American Institute of Architects (AIA) for continuing education credits (CECs). These courses are presented by CPI’s highly-trained and experienced -

La Brea and Beyond: the Paleontology of Asphalt-Preserved Biotas

La Brea and Beyond: The Paleontology of Asphalt-Preserved Biotas Edited by John M. Harris Natural History Museum of Los Angeles County Science Series 42 September 15, 2015 Cover Illustration: Pit 91 in 1915 An asphaltic bone mass in Pit 91 was discovered and exposed by the Los Angeles County Museum of History, Science and Art in the summer of 1915. The Los Angeles County Museum of Natural History resumed excavation at this site in 1969. Retrieval of the “microfossils” from the asphaltic matrix has yielded a wealth of insect, mollusk, and plant remains, more than doubling the number of species recovered by earlier excavations. Today, the current excavation site is 900 square feet in extent, yielding fossils that range in age from about 15,000 to about 42,000 radiocarbon years. Natural History Museum of Los Angeles County Archives, RLB 347. LA BREA AND BEYOND: THE PALEONTOLOGY OF ASPHALT-PRESERVED BIOTAS Edited By John M. Harris NO. 42 SCIENCE SERIES NATURAL HISTORY MUSEUM OF LOS ANGELES COUNTY SCIENTIFIC PUBLICATIONS COMMITTEE Luis M. Chiappe, Vice President for Research and Collections John M. Harris, Committee Chairman Joel W. Martin Gregory Pauly Christine Thacker Xiaoming Wang K. Victoria Brown, Managing Editor Go Online to www.nhm.org/scholarlypublications for open access to volumes of Science Series and Contributions in Science. Natural History Museum of Los Angeles County Los Angeles, California 90007 ISSN 1-891276-27-1 Published on September 15, 2015 Printed at Allen Press, Inc., Lawrence, Kansas PREFACE Rancho La Brea was a Mexican land grant Basin during the Late Pleistocene—sagebrush located to the west of El Pueblo de Nuestra scrub dotted with groves of oak and juniper with Sen˜ora la Reina de los A´ ngeles del Rı´ode riparian woodland along the major stream courses Porciu´ncula, now better known as downtown and with chaparral vegetation on the surrounding Los Angeles. -

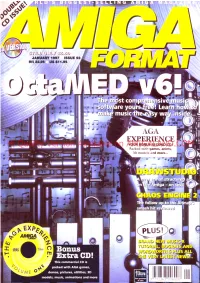

Hi Quality Version Available on AMIGALAND.COMYOUR BONUS SECOND CD! Packed with Games, Anims, ^ 3D Models and M Ore

' A G A EXPERIENCE Hi Quality Version Available on AMIGALAND.COMYOUR BONUS SECOND CD! Packed with games, anims, ^ 3D models and m ore... P L U S n @ AMIGA • J U T D J t 'jJUhD'j'jSxni D W This commercial CD is packed with AGA games, 9771363006008 ^ demos, pictures, utilities, 3D models, music, animations and more 9 771363 006008 Please make checks to COSOFT or O (01702) 300441 n 300441 order by credit card / switch & delta Most titles are despatched same day. ^ ^ - 5 217 - 219 Hamstel Rd - Southend-on-Sea, ESSEX, SS2 4LB Vat is INCLUDED on all titles, e&oe q . ^ er [email protected] Give us your email for monthly feb Page: Hnp://www.pdsoft m updated catalogue reports. Office & Retail Outlet open Monday to Saturday 9:30 to 7pm - Tel (01702) 306060 & 306061 - Fax (01702) 300115 Please add 1.00 per title for UK P&P & 2.00 for oversea's Airmail - Order via email & get the most upto date prices. Check our Web pages (updated every day) for special ofers and new releases. Special offers running every day. JUNGLE STRIKE SPECIAL FEATURE (1 4 .ff CAPTIAL PUNISHMENT Only (24.99 688 ATTACK SUPER SIOMARKS LEGENDS LURE OF THE SUB (12 DATA DISK (S B * f 17.BB T.TRESS (12 SABRE TEAM PLAYER ON MANAGER 2 OOYSSEY 1199 RUGBY SYNDICATE ( 12.M EURO KICKOFF 3 Hi Quality Version Available on AMIGALAND.COMC7.BB INTER OFFICE UPNtl BLACK CRYPT M r ( I f f * Me (11.00 INTER SPREAD WORLD CUP M r ( 9 99 Inc SOCCER CM2 - (3.99 A ll - (3 99 IN TER WORD K240 (7.U M r u n w CHESS SYSTEM SCREEHBAT 4 Give us a ring if you do not see what you want ACTIVE STEREO Some titles are limited and will go out of stock quickly. -

View July 2014 Report

MOBILE SMART FUNDAMENTALS MMA MEMBERS EDITION JULY 2014 messaging . advertising . apps . mcommerce www.mmaglobal.com NEW YORK • LONDON • SINGAPORE • SÃO PAULO MOBILE MARKETING ASSOCIATION JULY 2014 REPORT The Playbook Over the last few months we’ve been building a unique resource that will help our brand marketer members successfully develop and execute a mobile strategy, allowing them to deliver a consistent mobile brand experience on a global scale. Enter our Mobile Marketing Playbook (Press Release). Launched last week and created in partnership with global sporting goods giant, adidas, it aims to explain when, where and how companies can use mobile as core to their marketing efforts. Whilst we’ve seen some incredible work this year, as evidenced by the many great mobile campaigns submitted to our 2014 Smarties Awards Program (currently in pre-screening), one of the challenges marketers still face is how to make mobile an integral part of their mix. The Playbook takes marketers through the process of mobile strategy development from start to finish. It provides best practices around mobile executions, ways to leverage the myriad mobile vehicles, insights into mobile creative effectiveness and how companies can effectively measure and optimize mobile. To address the ever changing needs of and challenges faced by marketers, the Playbook will be regularly updated to reflect shifts in consumer behavior, mobile trends as they are introduced, and innovations that are continuously being developed through and with mobile. This will be accomplished in part by the annual addition of well over 500 case studies into our Case Study Hub, helping to define best practice and to serve as a source of inspiration to our marketer members Members can access the entire Playbook by logging in using your member login and password where directed. -

Release Notes for Iplanet XML Adapter Designer (XAD)

Release Notes for iPlanet™ XML Adapter Designer (XAD) Version 2.0 Updated March 2002 These release notes contain important information available at the time of the release of iPlanet XML Adapter Designer (XAD), version 2.0. General information about this release, known problems, and other information are addressed here. Read this document before you begin using XAD. These release notes contain the following sections: • “General Information” • “Supported Systems and Software” • “Installation Topics” • “Known Problems” • “Documentation Errata” • “Internationalization/Localization” • “How to Report Problems” • “For More Information” General Information This release is available to all supported iPlanet Integration Server (iIS) EAI customers through iPlanet's SubscribeNet service. The XAD distribution contains: • XAD version 2.0 software • A test client • Example adapters built with XAD 1 General Information • An iPlanet Integration Server (iIS) client that you can use to demonstrate communication with the example adapters • Documentation Documentation The XAD documentation is available at http://docs.iplanet.com/docs/manuals/xad.html. This documentation consists of the: • XAD User’s Guide • XAD Installation Guide •XAD Release Notes • XAD Platform Support Matrix XAD License Read your license for XAD version 2.0 carefully. XAD Examples and Test Client The XAD distribution provides example adapters that were generated with XAD. It also provides the API implementations for which the adapter examples were generated. The XAD documentation explains how to configure and run these example adapters and test them by sending XML requests with the XAD test client. The test client is a universal client for XAD adapters. You can use it to test any adapter generated by XAD. -

AMIGAOS 3.1.4 EMULACJA Krzysztof Radzikowski

Krzysztof Radzikowski AMIGAOS 3.1.4 EMULACJA Krzysztof Radzikowski AMIGAOS 3.1.4 EMULACJA © 2020 Krzysztof Radzikowski Wszystkie nazwy i znaki handlowe wykorzystane w książce należą do ich właścicieli, zostały użyte wyłącznie w celach informacyjnych. Powielanie w całości lub części bez pisemnej zgody Wydawcy zabronione. Autor i Wydawca nie ponoszą odpowiedzialności za skutki wynikłe z wykorzy- stania informacji oraz technik programowania zawartych w książce. Krzysztof Radzikowski Poznań All rights reserved www.amigapodcast.com Wydanie I 3 AmigaOS 3.1.4 to następca kultowego systemu 3.1 wydanego w roku 1994. 4 PROLOG Nowy AmigaOS 3.1.4 wydany przez Hyperion Entertainment CVBA należy traktować jako duchowego następcę AmigaOS 3.1 wydanego jeszcze za czasów Commodore International. Filozo(ia, która stoi za AmigaOS 3.1.4 jest inna od tej z sys- temów w wersji 3.5 oraz 3.9 stworzonych przez (irmę Haage & Partner Computer GmbH. Najnowszy produkt (irmy Hype- rion Entertainment CVBA w przeciwieństwie do AmigaOS 3.5 jak i 3.9 również działa na procesorach Motorola 68000. Programiści odpowiedzialni za nowy system wprowadzili szereg poprawek i ulepszeń, co uczyniło AmigaOS 3.1.4 atrakcyjnym zakupem dla szerokiego grona użytkowników komputerów Amiga opartych o serie procesorów 680xx. 5 ROZDZIAŁ 1 WSTĘP Emulacja to nie wszystko dy tworzyłem książki o AmigaOS 4.1 (najnowsza gene- G racja przewidziana dla procesorów PowerPC) nie przy- puszczałem, że będę miał okazje kolejny raz zafascynować się odmianą systemu dla komputerów produkcji kultowego Commodore. Firma Hyperion Entertainment wzięta na warsztat ponad dwudziestopięcioletni system o numeracji 3.1 i stworzyła na jego podstawie wersję odświeżona. Tak powstał AmigaOS 3.1.4. -

M&A Transactions

MOBILE SMART FUNDAMENTALS MMA MEMBERS EDITION AUGUST 2014 messaging . advertising . apps . mcommerce www.mmaglobal.com NEW YORK • LONDON • SINGAPORE • SÃO PAULO MOBILE MARKETING ASSOCIATION AUGUST 2014 REPORT The Innovators As you’ll have seen from the release of this year’s Smarties™ Awards shortlist, we had an exceptional response to this year’s programs. With close to 100 campaigns from 30 countries, just for the Global program shortlist alone, interest in this year’s programs has grown dramatically. With programs in North America, EMEA, UK, Turkey, South Africa, APAC, India, Vietnam, LATAM, in addition to our anchor Global program, the Smarties Awards have truly become a global platform for recognizing those marketers who are blazing new trails and challenging the status quo. This year’s Global Gala & Ceremony will be held on October 1 in New York and will serve as a fitting finale to our SM2 Mobile Innovation Summit – which you can secure your seat for here. The Smarties Awards programs support the Industry and our members in two important ways. Being able to recognize and communicate globally that there is fierce competition in this space, is key to the MMA’s marketer first mission and to communicating mobile’s importance to a brands ongoing success. Connected to this is our ability to then share these submissions via our Case Study Hub, allowing members to search by mobile vehicle used, geographic markets served and industry vertical. This serves as an inspiration center as well as a creative and results driven benchmarking tool that our members can use to accelerate the success of their own mobile campaigns and strategy, again, essential to our marketer first mission. -

D-05-Thermal-Desorption-Tubes.Pdf

177 Air Monitoring Thermal Desorption Tubes Thermal Desorption Tubes for DYNATHERM Instruments Supelco offers a complete line of both prepacked and empty tubes for the DYNATHERM Thermal Desorbers. Each tube is individually numbered, thermally conditioned, and batch tested for back-pressure and background. The prepacked Standard Sampling Tubes are sealed in our exclusive 3TDS storage containers and the Fast-Flow Tubes are sealed with Swagelok fittings. All of the prepacked sampling tubes incorporate a glass frit at the inlet, which increases their performance. The FocusingTtubes are used to refocus the sample for better chromatography of the early eluting compounds. 22832 DYNATHERM Standard Sampling Tubes Fits: Models 850 / 890 TDU and ACEM-900 and MTDU. TUBE QTY CAT. NO. PRICE 9960299 6mm OD x 4.5” Long, 4mm ID Tube Conditioning Units Cabotrap 100 1 20872 These units allow you to re-condition your DYNATHERM Sample Preparation Cabotrap 150 1 20381 Standard Tubes (6mm OD x 11.5cm long) Carbotrap 200 1 20873 Carbotrap 217 1 20895-U DESCRIPTION CAT. NO. PRICE Carbotrap 300 1 20875 Single-Tube Conditioning Unit, 115 VAC 22832 Carbotrap 302 1 20356 Six-Tube Conditioning Unit, 115 VAC 22833 Carbotrap 317 1 20877 Carbotrap 349 1 20243 Carbotrap 400 1 20882 Tenax TA 1 20896-U Empty fritted tubes 3 20380-U 21998 Empty non-fritted tubes 5 20235-U Note: Tubes listed above also fit the OI Analytical Air Tube Desorber or DMP-16. 21993 DYNATHERM Fast-Flow Sampling Tubes These tube have a 7mm ID that allow faster flow rates to pass through the tubes while sampling. -

A Bibliography of Publications in Byte Magazine: 1990–1994

A Bibliography of Publications in Byte Magazine: 1990{1994 Nelson H. F. Beebe University of Utah Department of Mathematics, 110 LCB 155 S 1400 E RM 233 Salt Lake City, UT 84112-0090 USA Tel: +1 801 581 5254 FAX: +1 801 581 4148 E-mail: [email protected], [email protected], [email protected] (Internet) WWW URL: http://www.math.utah.edu/~beebe/ 05 September 2013 Version 2.12 Title word cross-reference 100 [Ano94-129, Bry93b, HK94]. 100-Mbps [Bry93b]. 100-MHz [Ano94-129, HK94]. 1000 [Ano91u]. 10A [Ano92-73]. 110 [Nad90a]. 1120NX [Ano91e]. 1200C /Views [Api94b]. [Rei93b]. 13-pound [Nad90d]. 144 [Tho93b]. 14th [Ano92-185]. 15-to 040/120 [Ano93-60]. 040/200 [Ano93-60]. [Ano94-89]. 15th [Ano90m]. 16 [Tho90e]. 16-and [Gre94e]. 16-bit 1 [Ano90w, Ano90-51, Ano90a, Ano90-110, [Ano93-48, Ano94-88, Ano94-119, Gla90b, Ano90-113, Ano91-29, Ano93-61, Ano93-130, Sha94b, Tho94b, Wsz90]. 16-Million-Color Ano94a, Del93a, Del93b, Die90b, Far90, [Tho90e]. 16.7 [Ano91-131]. Nan90b, Pep91, Ref94]. 1-2-3 16.7-Million-Color [Ano91-131]. 165c [AR91, Gas93, Ano90-51, Ano90-110, [Tho93a]. 17-ppm [Ano93-92]. 180 Ano90-113, Ano91-29, Ano93-61, Ano94a, [Ano93-93]. 180-MBps [Ano93-93]. 1954 Del93a, Del93b, Die90b, Far90, Pep91]. [Hal94d]. 1954-1994 [Hal94d]. 1990s 1-2-3/G [Ano90-51, Die90b]. 1-megabyte [Cra91k, Lip90b, Osm90, Ras91b, Ras91o, [Tho90d, Ano90a]. 1/2 [Ano92-45]. Tho90f, VC90s, Woo91b]. 1994 [Hal94d]. 1/2-inch [Ano92-45]. 10 [Ano94-165, Nan94b]. 10-Mbps 2 [Ano91-117, Ano91-76, Ano91-131, [Ano94-165, Nan94b]. -

2008–2009 Safety Equipment Catalog

2008–2009 SAFETY EQUIPMENT CATALOG volume 12 | number 1 90+ Years of Products, Service and Expertise MSA offers more than safety Helping first responders is part of our history MSA offers more than safety on the job Mine Safety Appliances Great Products Company was founded MSA began making high-quality safety products in in 1914 by two mining 1914. Technology enhances our innovation in engineers who designed product development, with computer-aided or improved safety, design and stereo lithography, a technology rescue and protective which produces prototypes rapidly. MSA responds gear. MSA has continued quickly as your needs change. Within most product to help protect people from groups is a wide selection of models, sizes, colors, hazards great and small, materials, options, accessories and prices. Many and today helps protect components of MSA products are formulated, machined, millions of workers in many tooled or molded in-house from raw materials, allowing for greater industries worldwide. manufacturing control as well as quality assurance. MSA processes are MSA has sold gas masks certified as meeting ISO 9001-2000 quality assurance requirements of the for military use since International Standard Organization (ISO). World War I. We have always understood the Great Plans for the Future demands placed on crucial MSA continues to enhance our operations and expand/ improve our protective equipment in product lines by acquiring companies and/or new capabilities to better extremely hazardous serve our customers. In 2002, MSA began manufacturing specialized conditions. MSA helmets for military and law-enforcement use, and in 2004 added passive historically and steadfastly has helped first responders before, during, and electronic hearing protection.