Approxtuner: a Compiler and Runtime System for Adaptive Approximations

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Executable Code Is Not the Proper Subject of Copyright Law a Retrospective Criticism of Technical and Legal Naivete in the Apple V

Executable Code is Not the Proper Subject of Copyright Law: A retrospective criticism of technical and legal naivete in the Apple v. Franklin case. Matthew M. Swann, Clark S. Turner, Ph.D., Department of Computer Science, Cal Poly State University, November 18, 2004. Abstract: Copyright was created by government for a purpose. Its purpose was to be an incentive to produce and disseminate new and useful knowledge to society. Source code is written to express its underlying ideas and is clearly included as a copyrightable artifact. However, since Apple v. Franklin, copyright has been extended to protect an opaque software executable that does not express its underlying ideas. Common commercial practice involves keeping the source code secret, hiding any innovative ideas expressed there, while copyrighting the executable, where the underlying ideas are not exposed. By examining copyright’s historical heritage we can determine whether software copyright for an opaque artifact upholds the bargain between authors and society as intended by our Founding Fathers. This paper first describes the origins of copyright, the nature of software, and the unique problems involved. It then determines whether current copyright protection for the opaque executable realizes the economic model underpinning copyright law. Having found the current legal interpretation insufficient to protect software without compromising its principles, we suggest new legislation which would respect the philosophy on which copyright in this nation was founded. Table of Contents: Introduction; The Origin of Copyright (The Idea is Born; A New Beginning; The Social Bargain; Copyright and the Constitution); The Basics of Software -

The LLVM Instruction Set and Compilation Strategy

The LLVM Instruction Set and Compilation Strategy. Chris Lattner, Vikram Adve, University of Illinois at Urbana-Champaign, {lattner,vadve}@cs.uiuc.edu. Abstract: This document introduces the LLVM compiler infrastructure and instruction set, a simple approach that enables sophisticated code transformations at link time, runtime, and in the field. It is a pragmatic approach to compilation, interfering with programmers and tools as little as possible, while still retaining extensive high-level information from source-level compilers for later stages of an application’s lifetime. We describe the LLVM instruction set, the design of the LLVM system, and some of its key components. 1 Introduction. Modern programming languages and software practices aim to support more reliable, flexible, and powerful software applications, increase programmer productivity, and provide higher level semantic information to the compiler. Unfortunately, traditional approaches to compilation either fail to extract sufficient performance from the program (by not using interprocedural analysis or profile information) or interfere with the build process substantially (by requiring build scripts to be modified for either profiling or interprocedural optimization). Furthermore, they do not support optimization either at runtime or after an application has been installed at an end-user’s site, when the most relevant information about actual usage patterns would be available. The LLVM Compilation Strategy is designed to enable effective multi-stage optimization (at compile-time, link-time, runtime, and offline) and more effective profile-driven optimization, and to do so without changes to the traditional build process or programmer intervention. LLVM (Low Level Virtual Machine) is a compilation strategy that uses a low-level virtual instruction set with rich type information as a common code representation for all phases of compilation. -

A Status Update of BEAMJIT, the Just-In-Time Compiling Abstract Machine

A Status Update of BEAMJIT, the Just-in-Time Compiling Abstract Machine. Frej Drejhammar and Lars Rasmusson <ffrej,[email protected]> 140609. Who am I? Senior researcher at the Swedish Institute of Computer Science (SICS) working on programming tools and distributed systems. Acknowledgments: Project funded by Ericsson AB. Joint work with Lars Rasmusson <[email protected]>. What this talk is about: An introduction to how BEAMJIT works and a detailed look at some subtle details of its implementation. Outline: Background; BEAMJIT from 10000m; BEAMJIT-aware Optimization; Compiler-supported Profiling; Future Work; Questions. Just-In-Time (JIT) Compilation: Decide at runtime to compile “hot” parts to native code. Fairly common implementation technique: McCarthy's Lisp (1969); Python (Psyco, PyPy); Smalltalk (Cog); Java (HotSpot); JavaScript (SquirrelFish Extreme, SpiderMonkey, JägerMonkey, IonMonkey, V8). Motivation: A JIT compiler increases flexibility. Tracing does not require switching to full emulation. Cross-module optimization. Compiled BEAM modules are platform independent: no need for cross compilation. Binaries not strongly coupled to a particular build of the emulator. Integrates naturally with code upgrade. Project Goals: Do as little manual work as possible. Preserve the semantics of plain BEAM. Automatically stay in sync with the plain BEAM, i.e. if bugs are fixed in the interpreter the JIT should not have to be modified manually. Have a native code generator which is state-of-the-art. Eventually be better than HiPE (steady-state). Plan: Use automated tools to transform and extend the BEAM. Use an off-the-shelf optimizer and code generator. Implement a tracing JIT compiler. BEAM: Specification & Implementation. BEAM is the name of the Erlang VM. -

Architectural Support for Scripting Languages

Architectural Support for Scripting Languages. By Dibakar Gope. A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy (Electrical and Computer Engineering) at the UNIVERSITY OF WISCONSIN–MADISON, 2017. Date of final oral examination: 6/7/2017. The dissertation is approved by the following members of the Final Oral Committee: Mikko H. Lipasti, Professor, Electrical and Computer Engineering; Gurindar S. Sohi, Professor, Computer Sciences; Parameswaran Ramanathan, Professor, Electrical and Computer Engineering; Jing Li, Assistant Professor, Electrical and Computer Engineering; Aws Albarghouthi, Assistant Professor, Computer Sciences. © Copyright by Dibakar Gope 2017. All Rights Reserved. This thesis is dedicated to my parents, Monoranjan Gope and Sati Gope. Acknowledgments: First and foremost, I would like to thank my parents, Sri Monoranjan Gope, and Smt. Sati Gope for their unwavering support and encouragement throughout my doctoral studies which I believe to be the single most important contribution towards achieving my goal of receiving a Ph.D. Second, I would like to express my deepest gratitude to my advisor Prof. Mikko Lipasti for his mentorship and continuous support throughout the course of my graduate studies. I am extremely grateful to him for guiding me with such dedication and consideration and never failing to pay attention to any details of my work. His insights, encouragement, and overall optimism have been instrumental in organizing my otherwise vague ideas into some meaningful contributions in this thesis. This thesis would never have been accomplished without his technical and editorial advice. I find myself fortunate to have met and had the opportunity to work with such an all-around nice person in addition to being a great professor. -

Using JIT Compilation and Configurable Runtime Systems for Efficient Deployment of Java Programs on Ubiquitous Devices

Using JIT Compilation and Configurable Runtime Systems for Efficient Deployment of Java Programs on Ubiquitous Devices Radu Teodorescu and Raju Pandey Parallel and Distributed Computing Laboratory Computer Science Department University of California, Davis {teodores,pandey}@cs.ucdavis.edu Abstract. As the proliferation of ubiquitous devices moves computation away from the conventional desktop computer boundaries, distributed systems design is being exposed to new challenges. A distributed system supporting a ubiquitous computing application must deal with a wider spectrum of hardware architectures featuring structural and functional differences, and resources limitations. Due to its architecture independent infrastructure and object-oriented programming model, the Java programming environment can support flexible solutions for addressing the diversity among these devices. Unfortunately, Java solutions are often associated with high costs in terms of resource consumption, which limits the range of devices that can benefit from this approach. In this paper, we present an architecture that deals with the cost and complexity of running Java programs by partitioning the process of Java program execution between system nodes and remote devices. The system nodes prepare a Java application for execution on a remote device by generating device-specific native code and application-specific runtime system on the fly. The resulting infrastructure provides the flexibility of a high-level programming model and the architecture independence specific to Java. At the same time the amount of resources consumed by an application on the targeted device are comparable to that of a native implementation. 1 Introduction As a result of the proliferation of computation into the physical world, ubiquitous computing [1] has become an important research topic. -

CDC Build System Guide

CDC Build System Guide Java™ Platform, Micro Edition Connected Device Configuration, Version 1.1.2 Foundation Profile, Version 1.1.2 Optimized Implementation Sun Microsystems, Inc. www.sun.com December 2008 Copyright © 2008 Sun Microsystems, Inc., 4150 Network Circle, Santa Clara, California 95054, U.S.A. All rights reserved. Sun Microsystems, Inc. has intellectual property rights relating to technology embodied in the product that is described in this document. In particular, and without limitation, these intellectual property rights may include one or more of the U.S. patents listed at http://www.sun.com/patents and one or more additional patents or pending patent applications in the U.S. and in other countries. U.S. Government Rights - Commercial software. Government users are subject to the Sun Microsystems, Inc. standard license agreement and applicable provisions of the FAR and its supplements. This distribution may include materials developed by third parties. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and in other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, Java, Solaris and HotSpot are trademarks or registered trademarks of Sun Microsystems, Inc. or its subsidiaries in the United States and other countries. The Adobe logo is a registered trademark of Adobe Systems, Incorporated. Products covered by and information contained in this service manual are controlled by U.S. Export Control laws and may be subject to the export or import laws in other countries. Nuclear, missile, chemical biological weapons or nuclear maritime end uses or end users, whether direct or indirect, are strictly prohibited. -

Using Ld the GNU Linker

Using ld: The GNU linker. ld version 2, January 1994. Steve Chamberlain, Cygnus Support. [email protected], [email protected]. Using LD, the GNU linker. Edited by Jeffrey Osier (jeff[email protected]). Copyright © 1991, 92, 93, 94, 95, 96, 97, 1998 Free Software Foundation, Inc. Permission is granted to make and distribute verbatim copies of this manual provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modified versions of this manual under the conditions for verbatim copying, provided also that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. Permission is granted to copy and distribute translations of this manual into another language, under the above conditions for modified versions. 1 Overview. ld combines a number of object and archive files, relocates their data and ties up symbol references. Usually the last step in compiling a program is to run ld. ld accepts Linker Command Language files written in a superset of AT&T’s Link Editor Command Language syntax, to provide explicit and total control over the linking process. This version of ld uses the general purpose BFD libraries to operate on object files. This allows ld to read, combine, and write object files in many different formats, for example, COFF or a.out. Different formats may be linked together to produce any available kind of object file. See Chapter 5 [BFD], page 47, for more information. Aside from its flexibility, the gnu linker is more helpful than other linkers in providing diagnostic information. -

EXE: Automatically Generating Inputs of Death

EXE: Automatically Generating Inputs of Death. Cristian Cadar, Vijay Ganesh, Peter M. Pawlowski, David L. Dill, Dawson R. Engler. Computer Systems Laboratory, Stanford University, Stanford, CA 94305, U.S.A. {cristic, vganesh, piotrek, dill, engler}@cs.stanford.edu. ABSTRACT: This paper presents EXE, an effective bug-finding tool that automatically generates inputs that crash real code. Instead of running code on manually or randomly constructed input, EXE runs it on symbolic input initially allowed to be “anything.” As checked code runs, EXE tracks the constraints on each symbolic (i.e., input-derived) memory location. If a statement uses a symbolic value, EXE does not run it, but instead adds it as an input-constraint; all other statements run as usual. If code conditionally checks a symbolic expression, EXE forks execution, constraining the expression to be true on the true branch and false on the other. Because EXE reasons about all possible values on a path, it has much more power than a traditional runtime tool: (1) it can force execution down any feasible program path and [...] 1. INTRODUCTION: Attacker-exposed code is often a tangled mess of deeply-nested conditionals, labyrinthine call chains, huge amounts of code, and frequent, abusive use of casting and pointer operations. For safety, this code must exhaustively vet input received directly from potential attackers (such as system call parameters, network packets, even data from USB sticks). However, attempting to guard against all possible attacks adds significant code complexity and requires awareness of subtle issues such as arithmetic and buffer overflow conditions, which the historical record unequivocally shows programmers reason about poorly. Currently, programmers check for such errors using a combination of code review, manual and random testing, dynamic tools, and static analysis. -
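The forking behaviour described in the EXE abstract can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the "program" is a hand-written branch tree rather than real C code, the names `explore`, `solve`, `crashing_inputs` and `PROGRAM` are hypothetical, and a brute-force search over a small integer range stands in for a real constraint solver. It is not EXE's implementation.

```python
def explore(node, constraints=()):
    """Yield (outcome, constraints) for every path through a branch tree.

    A node is either a leaf string ("ok" or "crash") or a tuple
    (condition, subtree_if_true, subtree_if_false).
    """
    if isinstance(node, str):
        yield node, constraints
        return
    cond, if_true, if_false = node
    # Fork: one path assumes the condition holds, the other that it does not.
    yield from explore(if_true, constraints + ((cond, True),))
    yield from explore(if_false, constraints + ((cond, False),))


def solve(constraints, domain=range(256)):
    """Brute-force stand-in for a solver: first value meeting all constraints."""
    for x in domain:
        if all(cond(x) == want for cond, want in constraints):
            return x
    return None  # the path is infeasible within this domain


def crashing_inputs(program):
    """Return one concrete input for each feasible path that ends in a crash."""
    found = []
    for outcome, constraints in explore(program):
        if outcome == "crash":
            x = solve(constraints)
            if x is not None:
                found.append(x)
    return found


# Hypothetical checked code: crashes only when x > 100 and x is a multiple of 7.
PROGRAM = (lambda x: x > 100,
           (lambda x: x % 7 == 0, "crash", "ok"),
           "ok")
```

With this tree, `crashing_inputs(PROGRAM)` explores three paths and returns one concrete input for the single crashing path. The point of the sketch is only that each conditional on a symbolic value adds a constraint to both sides of the fork, so a solver can later turn a path into a concrete input.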

An OpenCL Runtime System for a Heterogeneous Many-Core Virtual Platform

An OpenCL Runtime System for a Heterogeneous Many-Core Virtual Platform. Kuan-Chung Chen and Chung-Ho Chen, Inst. of Computer & Communication Engineering, National Cheng Kung University, Tainan, Taiwan, R.O.C. [email protected], [email protected]. Abstract: We present a many-core full system simulation platform and its OpenCL runtime system. The OpenCL runtime system includes an on-the-fly compiler and resource manager for the ARM-based many-core platform. Using this platform, we evaluate approaches of work-item scheduling and memory management in OpenCL memory hierarchy. Our experimental results show that scheduling work-items on a many-core system using general purpose RISC CPU should avoid per work-item context switching. Data deployment and work-item coalescing are the two keys for significant speedup. Keywords: OpenCL; runtime system; work-item coalescing; full system simulation; heterogeneous integration. I. INTRODUCTION: OpenCL is a data parallel programming model introduced for heterogeneous system architecture [1] which may include CPUs, GPUs, or other accelerator devices. [...] is executed on a Linux PC while the kernel code execution is simulated on an ESL many-core system. The rest of this paper consists of the following sections. In Section 2, we briefly introduce the OpenCL execution model and our heterogeneous full system simulation platform. Section 3 discusses the OpenCL runtime implementation in detail and its enhancements. Section 4 presents the evaluation system and results. We conclude this paper in Section 5. II. OPENCL MODEL AND VIRTUAL PLATFORM: In this section, we first introduce the OpenCL programming model and followed by our SystemC-based virtual platform architecture. A. OpenCL Platform Overview: Fig. 1 shows a conceptual OpenCL platform which has two execution components: a host processor and the compute -

Optimizing Function Placement for Large-Scale Data-Center Applications

Optimizing Function Placement for Large-Scale Data-Center Applications. Guilherme Ottoni, Bertrand Maher. Facebook, Inc., USA. {ottoni,bertrand}@fb.com. Abstract: Modern data-center applications often comprise a large amount of code, with substantial working sets, making them good candidates for code-layout optimizations. Although recent work has evaluated the impact of profile-guided intra-module optimizations and some cross-module optimizations, no recent study has evaluated the benefit of function placement for such large-scale applications. In this paper, we study the impact of function placement in the context of a simple tool we created that uses sample-based profiling data. By using sample-based profiling, this methodology follows the same principle behind AutoFDO, i.e. using profiling data collected from unmodified binaries running in production, which makes it applicable to large-scale binaries. Using this tool, we first evaluate the impact of the traditional Pettis-Hansen (PH) function-placement algorithm on a set of widely deployed data-center applications. [...] While the large size and performance criticality of such applications make them good candidates for profile-guided code-layout optimizations, these characteristics also impose scalability challenges to optimize these applications. Instrumentation-based profilers significantly slow down the applications, often making it impractical to gather accurate profiles from a production system. To simplify deployment, it is beneficial to have a system that can profile unmodified binaries, running in production, and use these data for feedback-directed optimization. This is possible through the use of sample-based profiling, which enables high-quality profiles to be gathered with minimal operational complexity. This is the approach taken by tools such as AutoFDO [1], and which we also follow in this work. The benefit of feedback-directed optimizations for some data-center applications has been evaluated in some pre- -

LLVM, Clang and Embedded Linux Systems

LLVM, Clang and Embedded Linux Systems. Bruno Cardoso Lopes, University of Campinas. What’s LLVM? • Compiler infrastructure: frontend (clang), optimizer, IR, backends, and tools (assembler, disassembler, JIT) • Virtual instruction set (IR) • SSA • Bitcode. Example: the Clang frontend translates the C source code int add(int x, int y) { return x+y; } into the IR define i32 @add(i32 %x, i32 %y) { %1 = add i32 %y, %x ret i32 %1 }. • Optimization oriented compiler: compile time, link-time and run-time • More than 30 analysis passes and 60 transformation passes. Why LLVM? • Open Source • Active community • Easy integration and quick patch review • Code easy to read and understand • Transformations and optimizations are applied in several parts during compilation. Design: • Written in C++ • Modular and composed of several libraries • Pass mechanism with pluggable interface for transformations and analysis • Several tools for each part of compilation. Tools, Front-end: • Dragonegg • Gcc 4.5 plugin • llvm-gcc • GIMPLE to LLVM IR • Clang • Library approach • No cross-compiler generation needed • Good diagnostics • Static Analyzer. Tools, Optimizer: • Optimizations are applied to the IR • opt tool: $ opt -O3 add.bc -o add2.bc (add.bc to add2.bc; optimizations include Aggressive Dead Code Elimination, Tail Call Elimination, Combine Redundant Instructions, Dead Argument Elimination, Type-Based Alias Analysis, ...). Tools, Low Level Compiler: • llc tool: invoke the static backends • Generates assembly or object code: $ llc -march=arm add.bc -

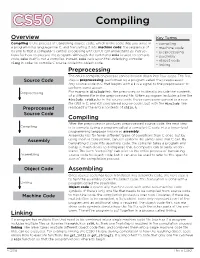

Compiling.Pdf

CS50: Compiling. Key Terms: compiling; machine code; preprocessing; assembly; object code; linking. Overview: Compiling is the process of translating source code, which is the code that you write in a programming language like C, and translating it into machine code: the sequence of 0s and 1s that a computer's central processing unit (CPU) can understand as instructions for how to execute the program. Although the command make is used to compile code, make itself is not a compiler. Instead, make calls upon the underlying compiler clang in order to compile C source code into object code. Preprocessing: The entire compilation process can be broken down into four steps. The first step is preprocessing, performed by a program called the preprocessor. Any source code in C that begins with a # is a signal to the preprocessor to perform some action. For example, #include tells the preprocessor to literally include the contents of a different file in the preprocessed file. When a program includes a line like #include <stdio.h> in the source code, the preprocessor generates a new file (still in C, and still considered source code), but with the #include line replaced by the entire contents of stdio.h. Compiling: After the preprocessor produces preprocessed source code, the next step is to compile (using a program called a compiler) C code into a lower-level programming language known as assembly. Assembly has far fewer different types of operations than C does, but by using them in conjunction, can still perform the same tasks that C can. [Figure: Source Code → Preprocessing → Preprocessed Source Code → Compiling → Assembly]
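The `#include` step described in the CS50 excerpt can be sketched as plain text substitution. The following is a minimal illustration in Python, not how clang's preprocessor is implemented: the `HEADERS` dictionary is a hypothetical stand-in for header files on disk, `greet.h` is an invented header name, and the sketch ignores macros, include guards, and search paths (so a header that includes itself would recurse without end).

```python
import re

# Hypothetical in-memory "file system"; a real preprocessor reads headers from disk.
HEADERS = {
    "greet.h": "const char *greeting(void);\n",
}

# Matches lines such as: #include <stdio.h>  or  #include "greet.h"
INCLUDE = re.compile(r'^\s*#\s*include\s*[<"]([^>"]+)[>"]\s*$')


def expand_includes(source, headers):
    """Return source with each known #include line replaced by the header text."""
    out = []
    for line in source.splitlines(keepends=True):
        match = INCLUDE.match(line)
        if match and match.group(1) in headers:
            # Recurse so that an included header may itself include other headers.
            out.append(expand_includes(headers[match.group(1)], headers))
        else:
            # Ordinary code, or an include this toy cannot resolve: keep as-is.
            out.append(line)
    return "".join(out)


program = '#include "greet.h"\nint main(void) { return 0; }\n'
preprocessed = expand_includes(program, HEADERS)
```

After the call, `preprocessed` is still C source text: the `#include` line has been replaced by the declaration from the header, and the rest of the program is unchanged. With clang, the comparable real output can be inspected by stopping after preprocessing with the `-E` option.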