Project Report

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Copyright: For Online Information and Ordering of This and Other Manning Books, Please Visit www.manning.com

Copyright. For online information and ordering of this and other Manning books, please visit www.manning.com. The publisher offers discounts on this book when ordered in quantity. For more information, please contact Special Sales Department, Manning Publications Co., 20 Baldwin Road, PO Box 761, Shelter Island, NY 11964. Email: [email protected] ©2017 by Manning Publications Co. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by means electronic, mechanical, photocopying, or otherwise, without prior written permission of the publisher. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in the book, and Manning Publications was aware of a trademark claim, the designations have been printed in initial caps or all caps. Recognizing the importance of preserving what has been written, it is Manning’s policy to have the books we publish printed on acid-free paper, and we exert our best efforts to that end. Recognizing also our responsibility to conserve the resources of our planet, Manning books are printed on paper that is at least 15 percent recycled and processed without the use of elemental chlorine. Development editor: Cynthia Kane. Review editor: Aleksandar Dragosavljević. Technical development editor: Stan Bice. Project editors: Kevin Sullivan, David Novak. Copyeditor: Sharon Wilkey. Proofreader: Melody Dolab. Technical proofreader: Doug Warren. Typesetter and cover design: Marija Tudor. ISBN 9781617292576. Printed in the United States of America 1 2 3 4 5 6 7 8 9 10 – EBM – 22 21 20 19 18 17 Part 1. -

Fira Code: Monospaced Font with Programming Ligatures

tonsky / FiraCode. Fira Code: monospaced font with programming ligatures. Problem: Programmers use a lot of symbols, often encoded with several characters. For the human brain, sequences like -> , <= or := are single logical tokens, even if they take two or three characters on the screen. Your eye spends a non-zero amount of energy to scan, parse and join multiple characters into a single logical one. Ideally, all programming languages should be designed with full-fledged Unicode symbols for operators, but that’s not the case yet. Solution: Fira Code is an extension of the Fira Mono font containing a set of ligatures for common programming multi-character combinations. -

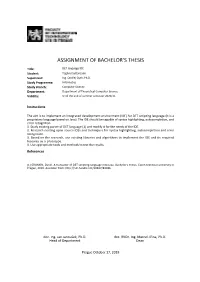

Assignment of Bachelor's Thesis

ASSIGNMENT OF BACHELOR’S THESIS Title: DET language IDE Student: Toghrul Sultanzade Supervisor: Ing. Ondřej Guth, Ph.D. Study Programme: Informatics Study Branch: Computer Science Department: Department of Theoretical Computer Science Validity: Until the end of summer semester 2020/21 Instructions The aim is to implement an integrated development environment (IDE) for DET scripting language (it is a proprietary language based on Java). The IDE should be capable of syntax highlighting, autocompletion, and error recognition. 1. Study existing parser of DET language [1] and modify it for the needs of the IDE. 2. Research existing open-source IDEs and techniques for syntax highlighting, autocompletion and error recognition. 3. Based on the research, use existing libraries and algorithms to implement the IDE and its required features as a prototype. 4. Use appropriate tools and methods to test the results. References [1] GRANKIN, Daniil. A translator of DET scripting language into Java. Bachelor's thesis. Czech technical university in Prague, 2019. Available from: http://hdl.handle.net/10467/83386. doc. Ing. Jan Janoušek, Ph.D. doc. RNDr. Ing. Marcel Jiřina, Ph.D. Head of Department Dean Prague October 17, 2019 Czech Technical University in Prague Faculty of Information Technology Department of Computer Science Bachelor's thesis DET language IDE Toghrul Sultanzade Supervisor: Ing. Ondrej Guth, Ph.D. 21st February 2020 Acknowledgements Firstly, I would like to express my appreciation and thanks to my thesis supervisor, Ing. Ondrej Guth, for his professional attitude and dedication to help me. The door to his office was always open, whenever I had troubles and obstacles in the process of writing the thesis. -

Programming in HTML5 with Javascript and CSS3 Ebook

Programming in HTML5 with JavaScript and CSS3. Designed to help enterprise administrators develop real-world, job-role-specific skills, this Training Guide focuses on deploying and managing core infrastructure services in Windows Server 2012. Build hands-on expertise through a series of lessons, exercises, and suggested practices, and help maximize your performance on the job. This Microsoft Training Guide: • Provides in-depth, hands-on training you take at your own pace • Focuses on job-role-specific expertise for deploying and managing Windows Server 2012 core services • Creates a foundation of skills which, along with on-the-job experience, can be measured by Microsoft Certification exams such as 70-410. Sharpen your skills. Increase your expertise. • Plan a migration to Windows Server 2012 • Deploy servers and domain controllers • Administer Active Directory® and enable advanced features • Ensure DHCP availability and implement DNSSEC. About You: This Training Guide will be most useful to IT professionals who have at least three years of experience administering previous versions of Windows Server in midsize to large environments. About the Author: Mitch Tulloch is a widely recognized expert on Windows administration and has been awarded Microsoft® MVP status for his contributions supporting those who deploy and use Microsoft platforms, products, and solutions. He is the author of Introducing Windows Server 2012 and the upcoming Windows Server 2012 Virtualization Inside Out. About the Practices: For most practices, we recommend using a Hyper-V virtualized environment. Some practices will require physical servers. -

Lab 6: Testing Software Studio Datalab, CS, NTHU Notice

Lab 6: Testing. Software Studio, DataLab, CS, NTHU. Notice • This lab is about good software development practices • Interesting for those who like software development and want to go deeper • Good for optimizing your development time. Outline • Introduction to Testing • Simple testing in JS • Testing with Karma • Configuring Karma • Karma demo: Simple test • Karma demo: Weather Mood • Karma demo: Timer App • Conclusions. What is testing? Is it important? Actually, we are always doing some kind of testing, and expecting some result. Sometimes we just open our app and start clicking to see results. Why test like a machine? We can write programs to automatically test our application! Most common type of test: Unit test. Unit test • “Unit testing is a method by which individual units of source code are tested to determine if they are fit for use. A unit is the smallest testable part of an application.” - Wikipedia. Good unit test properties • It should be automated and repeatable • It should be easy to implement • Once it’s written, it should remain for future use • Anyone should be able to run it • It should run at the push of a button • It should run quickly (The Art of Unit Testing, Roy Osherove). Many frameworks for unit tests in several languages. Common procedure • Assertion: A statement that a predicate is expected to always be true at that point in the code. - Wikipedia • We write our application and want it to do something, so we write a test to see if it’s behaving as we expect • Pseudocode: But how to do that in JS?
Simple testing: expect library and jsbin. Test passed: Test didn’t pass: This is ok for testing some simple functions. -
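
The assertion-based procedure described in the excerpt above can be sketched as follows. This is a minimal illustration in TypeScript, not the code from the lab slides: the function under test (`add`), the helper `expectValue`, and the runner `runTest` are all hypothetical names introduced here, and the helper is a hand-rolled stand-in rather than the API of the expect library the slides mention.

```typescript
// Hypothetical function under test (not taken from the lab slides).
function add(a: number, b: number): number {
  return a + b;
}

// Hand-rolled assertion helper, loosely in the style of "expect" APIs.
// Assumption: this is NOT the expect library's real implementation.
function expectValue<T>(actual: T) {
  return {
    // Throws when the predicate "actual === expected" does not hold.
    toBe(expected: T): void {
      if (actual !== expected) {
        throw new Error(`Expected ${String(expected)} but got ${String(actual)}`);
      }
    },
  };
}

// Tiny runner: executes one test body and reports whether it passed.
function runTest(name: string, body: () => void): boolean {
  try {
    body();
    console.log(`Test passed: ${name}`);
    return true;
  } catch (err) {
    console.log(`Test didn't pass: ${name} (${(err as Error).message})`);
    return false;
  }
}

// A test whose assertion holds.
const passed = runTest("add sums two numbers", () => {
  expectValue(add(2, 3)).toBe(5);
});

// A deliberately wrong expectation, to show the failure path.
const failed = runTest("deliberately wrong expectation", () => {
  expectValue(add(2, 2)).toBe(5);
});
```

Because the test is just a function that throws on a failed assertion, it can be rerun automatically after every change, which is the "automated and repeatable" property listed above.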

Open Source Used in SD-AVC 2.2.0

Open Source Used In SD-AVC 2.2.0. Cisco Systems, Inc. www.cisco.com Cisco has more than 200 offices worldwide. Addresses, phone numbers, and fax numbers are listed on the Cisco website at www.cisco.com/go/offices. Text Part Number: 78EE117C99-189242242. This document contains licenses and notices for open source software used in this product. With respect to the free/open source software listed in this document, if you have any questions or wish to receive a copy of any source code to which you may be entitled under the applicable free/open source license(s) (such as the GNU Lesser/General Public License), please contact us at [email protected]. In your requests please include the following reference number 78EE117C99-189242242. Contents 1.1 @angular/angular 5.1.0 1.1.1 Available under license 1.2 @angular/material 5.2.3 1.2.1 Available under license 1.3 angular 1.5.9 1.3.1 Available under license 1.4 angular animate 1.5.9 1.5 angular cookies 1.5.9 1.6 angular local storage 0.5.2 1.7 angular touch 1.5.9 1.8 angular ui router 0.3.2 1.9 angular ui-grid 4.0.4 1.10 angular-ui-bootstrap 2.3.2 1.10.1 Available under license 1.11 angular2-moment 1.7.0 1.11.1 Available under license 1.12 angularJs 1.5.9 1.13 bootbox.js 4.4.0 1.14 Bootstrap 3.3.7 1.14.1 Available under license 1.15 Bootstrap 4 4.0.0 1.15.1 Available under license 1.16 chart.js 2.7.2 1.16.1 Available under license 1.17 commons-io 2.5 1.17.1 Available under license 1.18 commons-text 1.4 1.18.1 -

Ztex-Ezusb-Fx2-Firmware-Kitztex-Bmp 0. Sdcc-Libraries 0. Sdcc 0

libkst2math2 libhugs-base-bundled libmono-security2.0-cil liboce-modeling-dev 0. libclass-c3-perl nkf python-peak.util 0. 0.0. 0. 0. 0. libkst2core2 0. libgcrypt11 libtasn1-3 kvirc-modules 0. python-lazr.uri live-boot-initramfs-tools 0. 4.59363957597 0. 0. hugs 0. gir1.2-cogl-1.0 0. cl-alexandria 0. python-syfi 0.12619888945 libmono-posix2.0-cil liboce-foundation-dev libmodule-runtime-perl libalgorithm-c3-perl 0. 0. 0. cmigemo 0. python-peak.rules 0. 0. 0.373989624804 clinica-common 0. ttf-unifont 0. libaa1-dev libggi2-dev libgii1-dev libgnutls26 kvirc kvirc-data libkst2widgets2 libsmokekhtml3 2.12765957447 gcj-4.6-jre-lib 0. 0. 0. 0. librasqal3 0. 0. 0. kget gnome-mime-data 0. 4.98485613327 0. 0. paw gnustep-back-common 0. 0. 0. 0. 0. 0. 0. 0. 0. python-lazr.restfulclient kfreebsd-headers-8.2-1 0.138818778395 live-boot 0.230414746544 0. clinica gir1.2-clutter-1.0 multiarch-support sugar-presence-service-0.90 0. 0. 1.04166666667 0. sendmail-cf libmro-compat-perl cmigemo-common libexo-helpers libexo-1-0 libexo-common python-turbojson 0.3340757238312.56124721604 libgpg-error0 libp11-kit0 libhugs-haskell98-bundled libkvilib4 libmono-system2.0-cil liboce-ocaf-lite-dev ecj-gcj libecj-java-gcj 0. 0. 0. 5. 5. 0. 0. 0. 0. 0. 0. 0. 0. python-swiginac sfc cl-babel cl-cffi 0.688073394495 libmodule-implementation-perl gnustep-base-runtime 0. 0. 0. kxterm 0. 0. 0. unifont jfbterm 1.06382978723 libc6 libraptor2-0 libmhash2 0. 0. 0. 0.00316766448098 0.447928331467 libgnomevfs2-0 node-contextify node-jquery 0. -

Background Goal Unit Testing Regression Testing Automation

Streamlining a Workflow Jeffrey Falgout Daniel Wilkey [email protected] [email protected] Science, Discovery, and the Universe Bloomberg L.P. Computer Science and Mathematics Major. Background: Bloomberg L.P.'s main product is a desktop application which provides financial tools. Bloomberg L.P. employs many computer programmers to maintain, update, and add new features to this product. Goal: Many of the tasks that a programmer has to perform as part of a team can be automated. The goal of this project was to work with a team to automate tasks where possible and make them easier where impossible. Regression Testing: Another useful tool to have in sufficiently complex systems is a suite of regression tests. Regression testing allows you to test very high level behavior which can detect low level bugs. The product the code base was implementing has a record/playback feature. This was leveraged to create a regression testing framework. This framework leveraged the unit testing framework wherever possible. At various points during the playback, callback methods would be invoked. These methods could have testing logic to make sure the application was in the … Jenkins instance would begin running all unit and regression tests. This Jenkins job would then report the results on the Phabricator review and signal the author in an appropriate way. The previous workflow required manual intervention to run tests. This new workflow only requires the developer to create his feature branch in order for all tests to be run automatically. Figure caption: An example of the new workflow, where a Jenkins instance will automatically run tests and update the peer review. -

14 Best Free WYSIWYG HTML Editing Software for Windows

14 Best Free WYSIWYG HTML Editing Software For Windows. High-end WYSIWYG HTML editing software can be a difficult investment, but freeware that bundles all the required features is easy to download. The following are the 14 best free WYSIWYG HTML editors, each with its own strengths. All of them have some distinctive features, such as a built-in FTP (File Transfer Protocol) client, SEO-friendly tools, and support for file formats like HTML, CSS, PHP, Java, JavaScript, XHTML, etc. The software mentioned below is free to use and doesn’t come with any kind of trial or expiration date. Here Are The 14 Best Free WYSIWYG HTML Editing Software For Windows: MoreMotion Application Studio. MoreMotion Application Studio is a one-stop HTML editor with all the basic and advanced features a fully functional HTML editor must have. It enables you to work on new projects or on your previously built projects, and lets you test what you’ve been working on using its Build and Test feature. The interface of MoreMotion Web Express is similar to Adobe Dreamweaver, and it is a free alternative to it. Some distinctive features of MoreMotion Application Studio are grouping particular elements in a panel, dragging and drawing elements on the page, creating forms easily, resizing elements and panels according to the area you want them to cover, etc. -

Bootstrap-Accessible Blocks

Building an Accessible Block Environment: Multi-Language, Fully-Accessible AST-based Editing in the Browser. Emmanuel Schanzer, Bootstrap, Brown University, Providence, RI USA, [email protected]; Sina Bahram, Prime Access Consulting, New York, NY USA, [email protected]; Shriram Krishnamurthi, Department of Computer Science, Brown University, Providence, RI USA, [email protected]. ABSTRACT UncleGoose is a toolkit that provides a fully-accessible block environment, for multiple languages. The toolkit generates (1) a block editor that uses standard drag-and-drop conventions familiar to sighted users while also (2) using keyboard navigation and spoken feedback that is familiar to visually-impaired users. The mechanism used creates unique opportunities for (3) separating the description of a block from the visual or textual syntax of that block. This effectively provides a third representation (beyond text and blocks), which is spoken aloud and can be tailored to a specific audience. The toolkit lives entirely in the browser and … challenge even for sighted programmers, who use an array of visual cues such as syntax highlighting, bracket matching, and auto-indenting to help keep track of the AST. But for visually-impaired (VI) users, these cues are of little help. They are hit particularly hard by the loss of structure [5, 8], and prior work has shown improved comprehension when they are able to navigate the structure of the program rather than the syntax [2]. Block environments would seem to be a solution, as they also represent a tree structure. Ironically, blocks rely even more heavily on visual metaphors, making many block tools a step backwards for the 65,000 VI students in the US alone [6]. -

Javascript Unlocked Table of Contents

JavaScript Unlocked Table of Contents JavaScript Unlocked Credits About the Author About the Reviewer www.PacktPub.com Support files, eBooks, discount offers, and more Why subscribe? Free access for Packt account holders Preface What this book covers What you need for this book Who this book is for Conventions Reader feedback Customer support Downloading the example code Errata Piracy Questions 1. Diving into the JavaScript Core Make your code readable and expressive Function argument default value Conditional invocation Arrow functions Method definitions The rest operator The spread operator Mastering multiline strings in JavaScript Concatenation versus array join Template literal Multi-line strings via transpilers Manipulating arrays in the ES5 way Array methods in ES5 Array methods in ES6 Traversing an object in an elegant, reliable, safe, and fast way Iterating the key-value object safely and fast Enumerating an array-like object The collections of ES6 The most effective way of declaring objects Classical approach Approach with the private state Inheritance with the prototype chain Inheriting from prototype with Object.create Inheriting from prototype with Object.assign Approach with ExtendClass Classes in ES6 How to – magic methods in JavaScript Accessors in ES6 classes Controlling access to arbitrary properties Summary 2. Modular Programming with JavaScript How to get out of a mess using modular JavaScript Modules Cleaner global scope Packaging code into files Reuse Module patterns Augmentation Module standards How to use asynchronous modules in the browser Pros and cons How to – use synchronous modules on the server Pros and cons UMD JavaScript’s built-in module system Named exports Default export The module loader API Conclusion Transpiling CommonJS for in-browser use Bundling ES6 modules for synchronous loading Summary 3. -

International Journal for Scientific Research & Development| Vol. 6, Issue 01, 2018 | ISSN (Online): 2321-0613

IJSRD - International Journal for Scientific Research & Development | Vol. 6, Issue 01, 2018 | ISSN (online): 2321-0613. Cloud Based Integrated Development Environment for C, C++, Java, C#. Er Makrand Samvatsar (1), Er Sorabh Gotam (2). (1) Assistant Professor, (2) M.Tech Student, (1,2) Department of Computer Science & Engineering, PCST, Indore (MP), India. Abstract: A cloud-based integrated development environment (IDE) is a cloud-based application that provides software engineers with facilities for code development, such as code completion and fixing, source code editing and management, automated testing, etc. Software is quickly moving from the desktop to the web. The web provides a generic interface that enables ubiquitous access, instant collaboration, and integration with other online services, and avoids installation and configuration on desktop computers. Moving IDEs to the web isn't simply a matter of porting desktop IDEs; a fundamental reconsideration of the IDE design is needed to realize the full potential that the combination of modern IDEs and the web offers. … programs online. Once the language is chosen by the user, the request is forwarded to the corresponding compiler. Multiple users can write programs in several programming languages and can also compile and run them. II. WHY CLOUD BASED IDE Software development is a very important activity in today’s world. Programmers used to write code into text files and then, using compilers and similar tools that were mostly command-based, turn this code into software programs. As computers evolved, the size and quality of software production rose. With this increasing quality,