UbuntuNet-Connect 2013

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

“RedCLARA Went from Being an Illusion to Become a Mature Institution”

March 2012 - n°30, year 8

Invitation - Call: Shall we talk seriously about natural disasters and the end of the world?
Rafael Ibarra, RAICES president: “RedCLARA went from being an illusion to become a mature institution”
MERCOSUR’s Virtual School was launched

This Project is funded by the European Union. A project implemented by RedCLARA.

European Commission, EuropeAid Cooperation Office, Directorate B2 - Latin America, @LIS Programme, Rue Joseph II, 54 J54 4/13, B-1049 Brussels, BELGIUM

Press Contact: María José López Pourailly, PR & Communications Manager - CLARA, [email protected], (+56) 2 584 86 18, extension 504, Avenida del Parque 4680-A, Edifico Europa, oficina 505, Ciudad Empresarial, Huechuraba, Santiago, CHILE

«The European Union is constituted by 27 member states which have decided to progressively join their practical knowledge, their resources and their destinies. Over an expansion period of 50 years, together they have built a stability, democracy and sustainable development zone, and have also preserved cultural diversity, tolerance and individual liberties. The European Union is committed to sharing its achievements and values with countries and peoples which are beyond its borders». The European Commission is the executive body of the European Union.

Contents
Open Call to present papers for TICAL 2012 Conference
Register and participate in the First Virtual Day of Culture
Fernando Liello, ELLA Project Coordinator: “Latin America needs the new submarine connection to Europe because it cannot rely only on connectivity to

20 Linux System Monitoring Tools Every Sysadmin Should Know by Nixcraft on June 27, 2009 · 315 Comments · Last Updated November 6, 2012

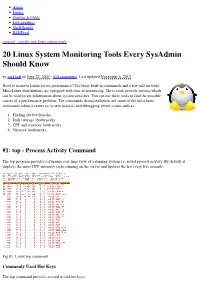

Need to monitor Linux server performance? Try these built-in commands and a few add-on tools. Most Linux distributions are equipped with tons of monitoring tools. These tools provide metrics which can be used to get information about system activities. You can use these tools to find the possible causes of a performance problem. The commands discussed below are some of the most basic commands when it comes to system analysis and debugging server issues such as:

1. Finding out bottlenecks.
2. Disk (storage) bottlenecks.
3. CPU and memory bottlenecks.
4. Network bottlenecks.

#1: top - Process Activity Command

The top program provides a dynamic real-time view of a running system, i.e. actual process activity. By default, it displays the most CPU-intensive tasks running on the server and updates the list every five seconds.

Fig.01: Linux top command

Commonly Used Hot Keys

The top command provides several useful hot keys:

| Hot Key | Usage |
|---------|-------|
| t | Displays summary information off and on. |
| m | Displays memory information off and on. |
| A | Sorts the display by top consumers of various system resources. Useful for quick identification of performance-hungry tasks on a system. |
| f | Enters an interactive configuration screen for top. Helpful for setting up top for a specific task. |
| o | Enables you to interactively select the ordering within top. |
| r | Issues renice command. |
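The excerpt describes top as listing the most CPU-intensive tasks. As a rough illustration of that idea only (not how top itself is implemented), the following Python sketch ranks processes by CPU share from the text printed by `ps -eo pid,pcpu,comm`. The function names `top_cpu_consumers` and `snapshot` are hypothetical helpers introduced here, and the sketch assumes a procps-style `ps` is installed.

```python
import subprocess


def top_cpu_consumers(ps_output, limit=5):
    """Parse `ps -eo pid,pcpu,comm` text and return the `limit` busiest
    processes as (pid, cpu_percent, command) tuples, highest CPU first."""
    rows = []
    for line in ps_output.strip().splitlines()[1:]:  # skip the header row
        parts = line.split(None, 2)
        if len(parts) < 3:
            continue  # ignore malformed lines
        pid, pcpu, command = parts
        try:
            rows.append((int(pid), float(pcpu), command.strip()))
        except ValueError:
            continue  # ignore rows whose numbers cannot be parsed
    rows.sort(key=lambda row: row[1], reverse=True)
    return rows[:limit]


def snapshot(limit=5):
    """Take one snapshot of the running system (assumes procps `ps`)."""
    result = subprocess.run(
        ["ps", "-eo", "pid,pcpu,comm"],
        capture_output=True, text=True, check=True,
    )
    return top_cpu_consumers(result.stdout, limit)
```

Calling `snapshot()` on a Linux host returns a single ranked list; top differs in that it refreshes the view continuously and lets you change the sort order interactively with the hot keys listed above.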

UC2B Policy Board Agenda Regular Meeting October 19, 2011 – 12:00 Noon Council Chambers, 102 N

UC2B Policy Board Agenda
Regular Meeting, October 19, 2011 – 12:00 noon
Council Chambers, 102 N. Neil Street, Champaign, Illinois

I. Call to Order
II. Roll Call (By Roster) – Determine Quorum
III. Approve Agenda
IV. Approval of Minutes from September 28, 2011
V. ACTION*/DISCUSSION ITEMS (Note: in this section we will go to the audience for comment prior to the Board's discussion of each item; comments are limited to 5 minutes per person.)
a) *A Resolution Recommending a Business Planning Consultant – NEOFiber w/Gigabit Squared – Teri Legner
b) FTTC Construction Update – Beth Reinke, Tony Vandeventer
c) Canvassing Update – Dr. Jon Gant
d) Marketing Committee Update – Brandon Bowersox/John Kersh
e) NTIA/Grant Update – Mike Smeltzer
VII. Tasks to complete for next meeting
VIII. Items for next meeting’s agenda
IX. Public Participation
X. Adjournment
XI. Next Meeting: November 2, 2011 – 12:00 p.m. to 1:30 p.m., Council Chambers, 102 N. Neil Street, Champaign, Illinois
XII. Items for future Action/Discussion:
a) Grant Required Approvals – Mike Smeltzer
b) Technical Committee Report and Request for approvals of items forwarded from Technical Committee and its sub-committees – Tracy Smith
c) FTTP RFP Decisions – should construction and management be included together as one RFP or not
d) Discussion of pursuing a change order for ring design in order to include a production class facility – Rough Cost Estimates
e) Update on FTTP Construction Process

UC2B is an inter-governmental body. The City of Champaign serves as its administrative agent. The City of Champaign strives to ensure that its programs, services, and activities are accessible to individuals with disabilities.

Observing the Clouds: a Survey and Taxonomy of Cloud Monitoring Jonathan Stuart Ward† and Adam Barker*†

Ward and Barker, Journal of Cloud Computing: Advances, Systems and Applications (2014) 3:24, DOI 10.1186/s13677-014-0024-2. RESEARCH, Open Access.

Observing the clouds: a survey and taxonomy of cloud monitoring
Jonathan Stuart Ward† and Adam Barker*†

Abstract: Monitoring is an important aspect of designing and maintaining large-scale systems. Cloud computing presents a unique set of challenges to monitoring including: on-demand infrastructure, unprecedented scalability, rapid elasticity and performance uncertainty. There are a wide range of monitoring tools originating from cluster and high-performance computing, grid computing and enterprise computing, as well as a series of newer bespoke tools, which have been designed exclusively for cloud monitoring. These tools express a number of common elements and designs, which address the demands of cloud monitoring to various degrees. This paper performs an exhaustive survey of contemporary monitoring tools from which we derive a taxonomy, which examines how effectively existing tools and designs meet the challenges of cloud monitoring. We conclude by examining the socio-technical aspects of monitoring, and investigate the engineering challenges and practices behind implementing monitoring strategies for cloud computing.

Keywords: Cloud computing; Monitoring

Introduction: Monitoring large-scale distributed systems is challenging and plays a crucial role in virtually every aspect of a software orientated organisation. It requires substantial engineering effort to identify pertinent information and to obtain, store and process that information in order for it to become useful.

most accepted description of the general properties of cloud computing comes from the US based National Institution of Standards and Technology (NIST) and other contributors [3,4]:
• On-demand self service: A consumer is able to

Download Vol 3, No 3&4, Year 2010

The International Journal on Advances in Networks and Services is published by IARIA. ISSN: 1942-2644 journals site: http://www.iariajournals.org contact: [email protected] Responsibility for the contents rests upon the authors and not upon IARIA, nor on IARIA volunteers, staff, or contractors. IARIA is the owner of the publication and of editorial aspects. IARIA reserves the right to update the content for quality improvements. Abstracting is permitted with credit to the source. Libraries are permitted to photocopy or print, providing the reference is mentioned and that the resulting material is made available at no cost. Reference should mention: International Journal on Advances in Networks and Services, issn 1942-2644 vol. 3, no. 3 & 4, year 2010, http://www.iariajournals.org/networks_and_services/ The copyright for each included paper belongs to the authors. Republishing of same material, by authors or persons or organizations, is not allowed. Reprint rights can be granted by IARIA or by the authors, and must include proper reference. Reference to an article in the journal is as follows: <Author list>, “<Article title>” International Journal on Advances in Networks and Services, issn 1942-2644 vol. 3, no. 3 & 4, year 2010, <start page>:<end page> , http://www.iariajournals.org/networks_and_services/ IARIA journals are made available for free, providing the appropriate references are made when their content is used. Sponsored by IARIA www.iaria.org Copyright © 2010 IARIA International Journal on Advances in Networks and Services Volume

Bulletin Year 13

Bulletin Year 13, n˚ 49, April 2017

Pure life and knowledge! TICAL's Costa Rican edition will host two more events
Europe and Latin America expand their collaboration for open science
RedCLARA partner networks have fast and direct access to Microsoft services
RedCLARA announces peering with Google

Contents
Editorial - Mariano José Sánchez Bontempo, Executive Director of RedCONARE
Pure life and knowledge! TICAL's Costa Rican edition will host two more events
BELLA-T opens tender for infrastructure
"The mother of the Internet": Former president of RedCLARA was honored by the Uruguayan television on Women's Day
RedCLARA partner networks have fast and direct access to Microsoft services
RedCLARA announces peering with Google
Europe and Latin America expand their collaboration for open science
Using RedCUDI in the Pierre Auger Observatory Remote Control Room located at UNAM
Leonardo Rodríguez: "We hope to strengthen the articulation with the scientific communities of the other national networks"
Agenda

RedCLARA: A clear path for your data. We empower your research and developments.

Editing: María José López Pourailly
Contents: María José López Pourailly, Luiz Alberto Rasseli
Translation into Portuguese: Luiz Alberto Rasseli
Translation into English: María José López Pourailly, Luiz Alberto Rasseli
Graphic design: María José López Pourailly

Press Contact: María José López Pourailly, Communications and Public Relations Manager, [email protected], (+56) 2 2584 86 18 # 504, Avenida del Parque 4680-A, Edifico Europa, oficina 108, Ciudad Empresarial, Huechuraba, Santiago, CHILE

Editorial

TICAL will be held in Costa Rica this year! For RedCLARA, the National Council of Rectors (CONARE) and RedCONARE (the Costa Rican

Another event that will be held during these days is the meeting of Internet Society, called “ION Costa Rica 2017”.

“Pan-Islamic Research and Education Network (PIREN)” ICMHESR-6/2012/2.3

Under the Patronage of H.E. Field Marshal Omar Hassan Ahmed Al-Bashir, President of the Republic of the Sudan

Sixth Islamic Conference of Ministers of Higher Education and Scientific Research
“The Role of Higher Education in the Development of Science and Technology for a Prosperous Future”

Draft proposal on the establishment of the “Pan-Islamic Research and Education Network (PIREN)”
ICMHESR-6/2012/2.3
Khartoum, Republic of the Sudan, Muharram 1434 A.H / 20-21 November 2012

The concept of a Pan-Islamic Research and Education Network (PIREN) is to establish R&E connectivity between all the Islamic countries and to form a consortium of the National Research and Education Networks (NRENs) of the countries of the Islamic world. The concept, background, need and objectives of PIREN are explained in Part A of the document. The First Meeting of Country Coordinators for the Establishment of the Pan-Islamic Research and Education Network was held on 19-20 March 2012 at the Headquarters of the Islamic Educational, Scientific and Cultural Organization (ISESCO) in Rabat, Morocco. A report of the Country Coordinators Meeting is enclosed as Part B of the document.

CONTENTS
I. Summary
II. Introduction
III. Research & Education Networks (RENs)
CalREN - USA
ORION - CANADA
RNP - Brazil
JANET/UKERNA - UK
SingAREN
AARNET – Australia

Write the Thesis Title

UNIVERSIDAD TÉCNICA DE AMBATO
Faculty of Systems, Electronics and Industrial Engineering
Computer and Information Systems Engineering Programme

TOPIC: “Data Network & VoIP Analysis and Control System for the Provincial Government of Tungurahua”

Graduation project. Modality: research project, submitted prior to obtaining the degree of Engineer in Computer and Information Systems.

RESEARCH SUB-LINE: Computer Networks
AUTHOR: Franklin Edmundo Escobar Vega
TUTOR: Ing. David Omar Guevara Aulestia, Mg.
Ambato, Ecuador, December 2015

TUTOR'S APPROVAL

In my capacity as Tutor of the research work on the topic “Data Network & VoIP Analysis and Control System for the Provincial Government of Tungurahua”, by Mr. Franklin Edmundo Escobar Vega, a student of the Computer and Information Systems Engineering Programme of the Faculty of Systems, Electronics and Industrial Engineering of the Universidad Técnica de Ambato, I consider that the research report meets the requirements to proceed with the formalities and subsequent approval, in accordance with Art. 16 of Chapter II of the Graduation Regulations for Obtaining the Terminal Third-Level Degree of the Universidad Técnica de Ambato.

Ambato, December 2015
THE TUTOR: Ing. David O. Guevara A., Mg.

AUTHORSHIP

The present research work, entitled “Data Network & VoIP Analysis and Control System for the Provincial Government of Tungurahua”, is absolutely original, authentic and personal; accordingly, the content and the legal and academic effects arising from it are the exclusive responsibility of the author.

Ambato, December 2015
Franklin Edmundo Escobar Vega
CC: 0503474827

COPYRIGHT

I authorise the Universidad Técnica de Ambato to use this degree work as a document available for reading, consultation and research processes.

RedCLARA: Regional Network in Latin America

RedCLARA: regional network in Latin America
I2MM Spring 2009
Michael Stanton [email protected]
Chair, CLARA Technical Committee

CLARA and CKLN/C@ribnet
• CLARA is the regional association of NRENs in Latin America (LA)
• CLARA operates the LA regional R&E network – RedCLARA – which joins together NRENs from several countries bordering on the Caribbean
• Many of our international links cross the Caribbean
• We would like to offer our support for R&E networking initiatives in the Caribbean

The LA experience in advanced R&E networking
• After the establishment of Internet2 in 1996, “advanced networks” became a new paradigm to be pursued by the Research and Education (R&E) community
• The first steps in LA were taken by Mexico, with cross-border connections to the US, and by the AMPATH project at FIU, Miami, which began linking South American networks to the US Internet2

Connectivity in Latin America by 2002
AMPATH
• used new submarine cable
• connected Argentina, Brazil (2), Chile, Venezuela
• 45 Mbps
• all connections are point to point from Miami, and thence to Abilene
Mexico
• cross-border connections to USA (Texas and California)

State of Latin American NRENs in 2002
Established education and research networks:
• With dedicated Internet2 connections: Argentina, Brazil, Chile, Mexico, Venezuela
• Some with dedicated int’l connectivity: Cuba, Uruguay
Education and research networks were being re-established (present nat’l/int’l connectivity through commercial ISPs):
• Bolivia, Colombia, Costa Rica, Ecuador, Guatemala, Panama, Peru, Paraguay, El Salvador
No education/research network (most connected to Internet via commercial ISPs):
• Nicaragua, Honduras

Europe and the @LIS initiative
• Through GÉANT, the European R&E community enjoyed high bandwidth connectivity with N.

Munin Documentation Release 2.999.10-detached-2018-12-16-c13-g47debb5

Munin Documentation, Release 2.999.10-detached-2018-12-16-c13-g47debb5
The Munin project and its contributors
Dec 16, 2018

Contents
1 Preface
1.1 What is Munin?
1.2 Conventions
1.3 Further Information
1.4 Bug Reporting Guidelines
1.5 Documenting Munin
2 Tutorial
2.1 Getting Started
2.2 Wildcard Plugins
2.3 Using SNMP plugins
2.4 Let Munin croak alarm
2.5 Munin and Nagios
2.6 Advanced Features
2.7 Extraordinary Usage
2.8 Monitoring the “unreachable” hosts
2.9 Troubleshooting
3 Munin’s Architecture
3.1 Overview
3.2 Components
3.3 Protocols
3.4 Syntax
3.5 API
4 Munin Installation
4.1 Prerequisites
4.2 Installing Munin

Performance Analysis in Large Environments with collectd

Performance Analysis in Large Environments with collectd
Sebastian "tokkee" Harl <[email protected]>
FrOSCon 2009, 2009-08-22

Outline
• What is collectd?
• Key features
• Important plugins
• Custom extensions
• Looking beyond collectd

What is collectd?
• collectd collects performance data from hosts
• Performance data includes, for example:
  • CPU utilisation
  • Memory usage
  • Network traffic
• Data is collected, processed and stored
• Often: displayed as graphs
• Not to be confused with monitoring!

Contact
• Homepage: http://collectd.org/
• Mailing list: [email protected]
• IRC: #collectd on irc.freenode.net
• "Web 2.0": http://identi.ca/collectd

Key features
• Daemon
• Free software (mostly GPLv2)
• Portable (Linux, *BSD, Solaris, ...)
• Scalable (OpenWrt, ..., cluster / cloud)
• Efficient (default resolution: 10 seconds)
• Modular (over 70 plugins)

Key features: 10-second resolution
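The slides name CPU utilisation as a typical metric and a 10-second default resolution. The Python sketch below shows one way such a value can be derived on Linux from two readings of `/proc/stat`; it is an illustration under stated assumptions, not collectd's implementation. The helper names `read_cpu_times`, `cpu_utilisation` and `sample` are hypothetical, and counting iowait as idle time is a choice made here.

```python
import time


def read_cpu_times(stat_text):
    """Return (idle, total) jiffies from the aggregate 'cpu' line of
    /proc/stat-formatted text."""
    for line in stat_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "cpu":
            values = [int(v) for v in fields[1:]]
            # Assumption of this sketch: iowait counts as idle time.
            idle = values[3] + (values[4] if len(values) > 4 else 0)
            # Sum user..steal only; guest time is already part of user.
            total = sum(values[:8])
            return idle, total
    raise ValueError("no aggregate 'cpu' line found")


def cpu_utilisation(before, after):
    """Percentage of non-idle CPU time between two (idle, total) readings."""
    idle_delta = after[0] - before[0]
    total_delta = after[1] - before[1]
    if total_delta <= 0:
        return 0.0
    return 100.0 * (total_delta - idle_delta) / total_delta


def sample(count=3, interval=10.0, path="/proc/stat"):
    """Yield `count` utilisation values, one per `interval` seconds."""
    with open(path) as handle:
        previous = read_cpu_times(handle.read())
    for _ in range(count):
        time.sleep(interval)
        with open(path) as handle:
            current = read_cpu_times(handle.read())
        yield cpu_utilisation(previous, current)
        previous = current
```

On a Linux host, `for value in sample(): print(value)` prints one reading per interval. A collector in the sense of the slides would store each value with a timestamp so that it can later be drawn as a graph.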

D.4.2 - Schedule and Organisation of Round Tables

D.4.2 - Schedule and organisation of Round Tables

Grant Agreement number: 246644
Project acronym: PRO-IDEAL PLUS
Project title: PROmotion of an ICT Dialogue between Europe and America Latina – extension towards Mexico, Colombia, Cuba, Costa Rica
Funding Scheme: Support Action
Due date: 1/09/2010
Actual date: 30/11/2010
Document Author/s: ITESM
Version: 1.0
Dissemination level: PU
Status: Final

Schedule of Round Tables

TABLE OF CONTENTS
1 INTRODUCTION
2 ROUND TABLES ORGANISATION
3 TENTATIVE SCHEDULE OF ROUND TABLES
3.1 Mexico
3.2 Colombia
3.3 Cuba
3.4 Costa Rica
4 CONCLUSIONS

WP4_D4.2_ITESM_v.1 © PRO-IDEAL PLUS Page 2 of 6

1 INTRODUCTION