ACTIVE MOBILE INTERFACE for SMART HEALTH a THESIS in Computer Science Presented to the Faculty of the University of Missouri-Ka

Recommended publications

Validity and Reliability of Wii Fit Balance Board for the Assessment of Balance of Healthy Young Adults and the Elderly

J. Phys. Ther. Sci. 25: 1251–1253, 2013 Validity and Reliability of Wii Fit Balance Board for the Assessment of Balance of Healthy Young Adults and the Elderly WEN-DIEN CHANG, PhD1), WAN-YI CHANG, MS2), CHIA-LUN LEE, PhD3), CHI-YEN FENG, MD4)* 1) Department of Sports Medicine, China Medical University, Taiwan (ROC) 2) Graduate Institute of Networking and Multimedia, National Taiwan University, Taiwan (ROC) 3) Center for General Education, National Sun Yat-sen University, Taiwan (ROC) 4) Department of General Surgery, Da Chien General Hospital: No. 36, Gongjing Road, Miaoli City, Miaoli County, Taiwan (ROC) Abstract. [Purpose] Balance is an integral part of human ability. The smart balance master system (SBM) is a balance test instrument with good reliability and validity, but it is expensive. Therefore, we modified a Wii Fit balance board, which is a convenient balance assessment tool, and analyzed its reliability and validity. [Subjects and Methods] We recruited 20 healthy young adults and 20 elderly people, and administered 3 balance tests. The correlation coefficient and intraclass correlation of both instruments were analyzed. [Results] There were no statistically significant differences in the 3 tests between the Wii Fit balance board and the SBM. The Wii Fit balance board had a good intraclass correlation (0.86–0.99) for the elderly people and positive correlations (r = 0.58–0.86) with the SBM. [Conclusions] The Wii Fit balance board is a balance assessment tool with good reliability and high validity for elderly people, and we recommend it as an alternative tool for assessing balance ability.

Interacting with the Computer Using the WiiMote: Installation and Use

Interacting with the computer using the WiiMote: installation and use. Author (surname, name): Agustí i Melchor, Manuel ([email protected]). Department: Departamento de Informática de Sistemas y Computadores (DISCA). Centre: Escola Tècnica Superior d'Enginyeria Informàtica, Universitat Politècnica de València. 1 Summary of key ideas In this article we present the use of the WiiMote, the controller of the Wii (Nintendo's console), as a peripheral for the personal computer. As a pointing device, we will use it as an extension of the mouse on the traditional desktop. The appeal of this device lies in its low cost (the classic versions cost around €35, the average seen in three different local shops) and its low use of the computer's resources, since the "hard" work is done by the device itself. 2 Objectives Once readers have followed the usage examples explored in this document, they will be able to: identify the components that make up the WiiMote device; analyse what is needed to connect it to a personal computer (in particular we discuss the GNU/Linux platform and give links for others); and analyse some applications of this device on the computer. 3 Introduction The WiiMote (also called the Wii Remote) is one of the accessories (see Figure 1) of the classic Nintendo Wii console, which appeared in 2006 [1]. From here on we focus on the WiiMote and, occasionally, the sensor bar. The WiiMote sends the controller's position to the console. It does so over Bluetooth, at a rate of up to 100 times per second [2].

Geometry Friends a Cooperative Game Using the Wii Remote

Geometry Friends A cooperative game using the Wii Remote José Bernardo Guimarães Rocha Dissertation for the degree of Master in Engenharia Informática e de Computadores (Information Systems and Computer Engineering) Jury President: Professor Doutor Joaquim Armando Pires Jorge Supervisor: Professor Doutor Rui Filipe Fernandes Prada Co-Supervisor: Professor Doutor Mário Rui Fonseca Dos Santos Gomes Member: Professor Doutor Nuno Manuel Robalo Correia May 2009 Acknowledgements I would like to acknowledge Samuel Mascarenhas for the work done in the development of Geometry Friends. I would also like to thank all the people that were involved with testing Geometry Friends. Additionally, I would like to thank Sandra Gama for supplying me with the LaTeX model for this document, invaluable LaTeX tips and proofreading this document. Abstract and keywords Abstract In this work, we present our experience in designing and developing a simple, co-located cooperative game that uses the Wii Remote to control the characters. According to a recent study, there is an entire demographic of potential players who prefer this type of game and do not currently play because of a lack of offerings. We begin by presenting the related work: Gameplay Challenges; Design Patterns for Games; Group Task theories (from the field of Social Psychology); analysis of cooperative games; and the Wii, its controller, and the software for using it on a PC. We continue by exploring the potential for cooperation of each type of Group Task and by formalising several game mechanics that we found during the analysis of games.

Student Research Project: Development of a Spatial Interaction Concept

Student research project (Studienarbeit): Development of a spatial interaction concept, in the degree programme Softwaretechnik und Medieninformatik (Software Engineering and Media Informatics), specialisation Software Engineering, Faculty of Information Technology, summer semester 2009. Björn Abheiden. Date: 30 July 2009. Examiner: Prof. Dr.-Ing. Andreas Rößler. Second examiner: Prof. Dr.-Ing. Reinhard Schmidt. Statutory declaration: I hereby declare that I produced this work independently, using only the cited literature and aids. The work has not previously been submitted in the same or a similar form to any other examination authority, nor has it been published. Esslingen, 30 July 2009. Signature. Short summary: This work sets out approaches and considerations for developing a spatial interaction concept. The starting point was a prescribed input device: the remote control of Nintendo's Wii games console. The Wii remote, also called the Wiimote, serves the user of a Wii console as the primary instrument for play and control. The fact that the Wiimote communicates with its console over the standardised Bluetooth interface has already given rise to a large number of open-source projects that make it possible to read the Wiimote's data on a PC or a smartphone. Using several examples and one concrete implementation, you will learn how computer use could change in the coming years and what kinds of new user interfaces might emerge. Existing user interfaces often "tie" the user to a seat. Nobody, for example, would move around a room for long with a keyboard in hand. So what if movement were already part of the input? With its built-in motion sensors, the Wiimote has made this mode of operation accessible to a broad audience, so the first step towards using one's own motor skills as an input method has arguably already been taken.
Table of contents: 1 Introduction; 2 Fundamentals; 2.1 Rethinking, or: breaking out of the familiar.

Emulator Issues #5011 Wii Remote Plus and Some 3Rd-Party Wii Remotes Do Not Work on Windows 11/20/2011 10:57 PM - Greg

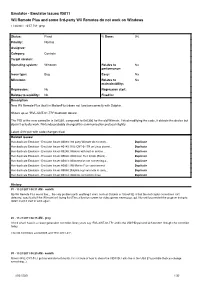

Emulator - Emulator Issues #5011 Wii Remote Plus and some 3rd-party Wii Remotes do not work on Windows 11/20/2011 10:57 PM - greg Status: Fixed % Done: 0% Priority: Normal Assignee: Category: Controls Target version: Operating system: Windows Relates to No performance: Issue type: Bug Easy: No Milestone: Relates to No maintainability: Regression: No Regression start: Relates to usability: No Fixed in: Description New Wii Remote Plus (built in MotionPlus) does not function correctly with Dolphin. Shows up as "RVL-CNT-01-TR" bluetooth device. The PID of the new controller is 0x0330, compared to 0x0306 for the old Wiimote. I tried modifying the code, it detects the device but doesn't actually work. Nintendo probably changed the communication protocol slightly. Latest SVN pull with code changes tried. Related issues: Has duplicate Emulator - Emulator Issues #4833: 3rd party Wiimote do not work... Duplicate Has duplicate Emulator - Emulator Issues #5143: RVL-CNT-01-TR on Linux doesnt... Duplicate Has duplicate Emulator - Emulator Issues #5248: Wiimote with built-in motion ... Duplicate Has duplicate Emulator - Emulator Issues #5820: Wiimotion Plus Inside (Black)... Duplicate Has duplicate Emulator - Emulator Issues #5913: Wiimote plus not connecting a... Duplicate Has duplicate Emulator - Emulator Issues #5961: Wii Motion Plus cant connect Duplicate Has duplicate Emulator - Emulator Issues #6030: Dolphin says wii mote is conn... Duplicate Has duplicate Emulator - Emulator Issues #6314: Wiimote connection issue Duplicate History #1 - 11/21/2011 04:11 AM - eodeth My Wii Remote Plus works fine... the only problem (with anything it uses, such as Dolphin or GlovePIE) is that the motionplus sometimes isn't detected, specifically if the Wiimote isn't laying flat (The calibration screen for video games never pops up). -
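The detection step mentioned in the issue above can be illustrated with a minimal sketch. It assumes a lookup keyed on the two product IDs quoted in the report (0x0306 for the older Wii Remote, 0x0330 for the Wii Remote Plus); the vendor ID 0x057E (Nintendo) and the function and constant names are assumptions added for illustration, and this is not Dolphin's actual code. As the reporter notes, recognising the ID only detects the device; it does not by itself make the newer controller work.

```python
from typing import Optional

# Product IDs quoted in the issue report.
WIIMOTE_PID_ORIGINAL = 0x0306  # older Wii Remote
WIIMOTE_PID_PLUS = 0x0330      # Wii Remote Plus, shown as "RVL-CNT-01-TR"

# Assumption: Nintendo's vendor ID; it is not stated in the report.
NINTENDO_VID = 0x057E

# Hypothetical lookup table used only for this illustration.
KNOWN_WIIMOTE_PIDS = {
    WIIMOTE_PID_ORIGINAL: "Wii Remote",
    WIIMOTE_PID_PLUS: "Wii Remote Plus",
}


def classify_wiimote(vendor_id: int, product_id: int) -> Optional[str]:
    """Return a label for a recognised Wii Remote, or None otherwise."""
    if vendor_id != NINTENDO_VID:
        return None
    return KNOWN_WIIMOTE_PIDS.get(product_id)
```

A caller would pass the vendor and product IDs reported by the operating system's HID or Bluetooth layer; how those are obtained is platform-specific and outside this sketch.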

Wii Nand Dolphin Version 3.0

Wii Nand Dolphin Version 3.0 Wii Menu Type Pre-installed November 19, 2006 December 2, 2006 December 7, 2006 December 8, 2006, 4 Playable See also. The Wii Menu, commonly .... The Dolphin Emulator development team announced today the release ... abandons that by taking advantage of the D3D11 and OpenGL 3 (or newer) ... Blank NAND Netplay: Without Wiimotes and saves on .... Now Wii games can be booted using extracted files instead of a disc image. 3.0-631, Download ... If in doubt, install the wad to the nand. 3.0-592, Download .... The GameCube/Wii emulator of your choice! ... Dolphin would have to sync the entire NAND (512MB) for stable wii netplay with game saves.. Kyle Orland - 3/21/2017, 8:40 AM ... The team behind the open source Dolphin emulator took a major step closer to reaching that ... homebrew software on an actual Wii to dump the contents of the system's NAND memory.. r/WiiHacks: This reddit is for people interested in modifying their Wii. ... Does anyone have a NAND dump that I can use for my instance of Dolphin? ... View entire discussion (3 comments) ... I couldn't find much on NeoGamma but I have version "R9 beta 56" and just that format alone tells me there's probably been a number .... The Dolphin emulator is well known in its particular scene, especially for its ability to run Wii and GameCube titles in HD - that was an .... Dolphin, an open source GameCube and Wii emulator, has reached the 5.0 stable release.

Teleoperation of the "Robonova-I" Robot via Wiimote

ESCUELA TÉCNICA SUPERIOR DE INGENIERÍA DE TELECOMUNICACIÓN, UNIVERSIDAD POLITÉCNICA DE CARTAGENA. Final Degree Project (Proyecto Fin de Carrera): Teleoperation of the "Robonova-I" robot via Wiimote. Author: Francisco José Esparza San Nicolás. Supervisor: Mª Francisca Rosique Contreras. Co-supervisor: Diego Alonso Cáceres. September 2011. Author: Francisco José Esparza San Nicolás. Author's e-mail: [email protected] Supervisor: María Francisca Rosique Contreras. Supervisor's e-mail: [email protected] Co-supervisor: Diego Alonso Cáceres. Project title: Teleoperation of the "Robonova-I" robot via Wiimote. Abstract: This Final Degree Project (PFC) presents a study of the use of new devices as low-cost control-interface alternatives for teleoperated systems. The selected case study focuses on developing an application that enables communication between the Wii console controller (Wiimote) and the teleoperated robot Robonova-I. Degree: I.T. Telecomunicaciones, Especialidad Telemática (Technical Engineering in Telecommunications, Telematics specialisation). Department: Departamento de Tecnologías de la Información y las Comunicaciones. Submission date: September 2011. Contents: Index; Annexes; Index of Figures.

Composing for Piano and Wii- Remote

Proceedings of the International Computer Music Conference 2011, University of Huddersfield, UK, 31 July - 5 August 2011 WII PLAY PIANO :: COMPOSING FOR PIANO AND WII-REMOTE Christopher Jette, Music Composition Department, University of California Santa Barbara, [email protected]; Keith Kirchoff, Pianist & Composer, Boston MA, [email protected] ABSTRACT This paper describes how the Wii-remote was used to generate the compositional material and how it provides ancillary tracking of the pianist during performance of the composition In Vitro Oink. In Vitro Oink uses hand built granular sound objects, organized through improvisation, as the material for the piano score. These hand built sound files are improvised with by using the Wii-remote's accelerometers to trigger windowing of two streams of audio within a MaxMSP patch. The audio streams are comprised of a semi-indeterminate organization of the hand built audio files. The computer is used to translate these recordings into notation via a FFT. This material is edited and organized by the composer to form the performance score. This improvisation patch is an early iteration of what becomes the performance patch. The performance of In Vitro Oink uses piano, Wii-remote, midi foot-pedal and live sampling. By mounting the Wii-remote on the left arm of the pianist, ancillary and direct data can influence the evolution of [text continues in the source] Figure 1. A flow chart depicting the composition process from A through E. Figure 1 traces In Vitro Oink's creation from beginning to performance. At A, a microphone records the piano to a sound file. The recorded soundfiles are manipulated by the composer using IRIN [2] to generate derivative soundfiles. At B, a Wii uses Bluetooth to communicate with DarwiinRemote [4], which in turn uses OSC to communicate with MaxMSP.

Embodied Interfaces for Interactive Percussion Instruction

Embodied Interfaces for Interactive Percussion Instruction Thesis by Justin R. Belcher Thesis submitted to the Faculty of the Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of Master of Science in Computer Science and Applications Steve Harrison, Chair Dr. Scott McCrickard Dr. James Miley May 9, 2007 Blacksburg, VA Keywords: HCI, Percussion, Embodiment, Interaction © Copyright 2007, Justin R. Belcher Embodied Interfaces for Interactive Percussion Instruction Justin R. Belcher Abstract For decades, the application of technology to percussion curricula has been substantially hindered by the limitations of conventional input devices. With the need for specialized percussion instruction at an all-time high, investigation of this domain can open the doors to an entirely new educational approach for percussion. This research frames the foundation of an embodied approach to percussion instruction manifested in a system called Percussive. Through the use of body-scale interactions, percussion students can connect with pedagogical tools at the most fundamental level, leveraging muscle memory, kinesthetics, and embodiment to present engaging and dynamic instructional sessions. The major contribution of this work is the exploration of how a system which uses motion-sensing to replicate the experiential qualities of drumming can be applied to existing pedagogues. Techniques for building a system which recognizes drumming input are discussed, as well as the system's application to a successful contemporary instructional model. In addition to the specific results that are presented, it is felt that the collective wisdom provided by the discussion of the methodology throughout this thesis provides valuable insight for others in the same area of research.