Stanford Artificial Intelligence Laboratory Memo AIM-337

Total Page:16

Recommended publications

Design Principles Behind Beauty and Joy of Computing

Paper Session: CS0. SIGCSE '20, March 11–14, 2020, Portland, OR, USA. Design Principles behind Beauty and Joy of Computing. Paul Goldenberg (EDC, Waltham, MA, USA), June Mark (EDC, Waltham, MA, USA), Brian Harvey (UCB, Berkeley, CA, USA), Al Cuoco (EDC, Waltham, MA, USA), Mary Fries (EDC, Waltham, MA, USA). Technical Symposium on Computer Science Education (SIGCSE '20), March 11–14, 2020, Portland, OR, USA. ACM, New York, NY, USA, 7 pages. https://doi.org/10.1145/3328778.3366794

ABSTRACT: This paper shares the design principles of one Advanced Placement Computer Science Principles (AP CSP) course, Beauty and Joy of Computing (BJC), both for schools considering curriculum, and for developers in this still-new field. BJC students not only learn about CS, but do some and analyze its social implications; we feel that the job of enticing students into the field isn't complete until students find programming, itself, something they enjoy and know they can do, and its key ideas accessible. Students must feel invited to use their own creativity and logic, and enjoy the power of their logic and the beauty and elegance of the code by which they express it.

1 Introduction: The National Science Foundation (NSF) and College Board (CB) introduced the Advanced Placement Computer Science Principles (AP CSP) course to broaden participation in CS by appealing to high school students who didn't see CS as an inviting option, especially female, black, and Latinx students who have been typically underrepresented in computing. The AP CSP course was the center-

BERKELEY LOGO 6.1 Berkeley Logo User Manual

BERKELEY LOGO 6.1. Berkeley Logo User Manual. Brian Harvey.

Short Contents: 1 Introduction; 2 Data Structure Primitives; 3 Communication; 4 Arithmetic; 5 Logical Operations; 6 Graphics; 7 Workspace Management; 8 Control Structures; 9 Macros; 10 Error Processing; 11 Special Variables; 12 Internationalization; Index.

Table of Contents: 1 Introduction (1.1 Overview; 1.2 Getter/Setter Variable Syntax; 1.3 Entering and Leaving Logo; 1.4 Tokenization); 2 Data Structure Primitives (2.1 Constructors: word, list, sentence, fput, ...)

2018 General Local Elections

LOCAL ELECTIONS CAMPAIGN FINANCING. CANDIDATES. 2018 General Local Elections.

| Jurisdiction | Election Area | Office | Expense Limit | Candidate Name | Financial Agent Name | Financial Agent Mailing Address |
|---|---|---|---|---|---|---|
| 100 Mile House | 100 Mile House | Councillor | $5,000.00 | Wally Bramsleven | Wally Bramsleven | 5538 Park Dr, 100 Mile House, BC V0K 2E1 |
| 100 Mile House | 100 Mile House | Councillor | $5,000.00 | Leon Chretien | Leon Chretien | 6761 McMillan Rd, Lone Butte, BC V0K 1X3 |
| 100 Mile House | 100 Mile House | Councillor | $5,000.00 | Ralph Fossum | Ralph Fossum | 5648-103 Mile Lake Rd, 100 Mile House, BC V0K 2E1 |
| 100 Mile House | 100 Mile House | Councillor | $5,000.00 | Laura Laing | Laura Laing | 6298 Doman Rd, Lone Butte, BC V0K 1X3 |
| 100 Mile House | 100 Mile House | Councillor | $5,000.00 | Cameron McSorley | Cameron McSorley | 4481 Chuckwagon Tr, PO Box 318, Forest Grove, BC V0K 1M0 |
| 100 Mile House | 100 Mile House | Councillor | $5,000.00 | David Mingo | David Mingo | 6514 Hwy 24, Lone Butte, BC V0K 1X1 |
| 100 Mile House | 100 Mile House | Councillor | $5,000.00 | Chris Pettman | Chris Pettman | PO Box 1352, 100 Mile House, BC V0K 2E0 |
| 100 Mile House | 100 Mile House | Councillor | $5,000.00 | Maureen Pinkney | Maureen Pinkney | PO Box 735, 100 Mile House, BC V0K 2E0 |
| 100 Mile House | 100 Mile House | Councillor | $5,000.00 | Nicole Weir | Nicole Weir | PO Box 545, 108 Mile Ranch, BC V0K 2Z0 |
| 100 Mile House | 100 Mile House | Mayor | $10,000.00 | Mitch Campsall | Heather Campsall | PO Box 865, 100 Mile House, BC V0K 2E0 |
| 100 Mile House | 100 Mile House | Mayor | $10,000.00 | Rita Giesbrecht | William Robertson | 913 Jens St, PO Box 494, 100 Mile House, BC V0K 2E0 |
| 100 Mile House | 100 Mile House | Mayor | $10,000.00 | Glen Macdonald | Glen Macdonald | 6007 Walnut Rd, 100 Mile House, BC V0K 2E3 |
| Abbotsford | Abbotsford | Councillor | $43,928.56 | Jaspreet Anand | Jaspreet Anand | 2941 Southern Cres, Abbotsford, BC V2T 5H8 |
| Abbotsford | Abbotsford | Councillor | $43,928.56 | Bruce Banman | Bruce Banman | 34129 Heather Dr, Abbotsford, BC V2S 1G6 |
| Abbotsford | Abbotsford | Councillor | $43,928.56 | Les Barkman | Les Barkman | 3672 Fife Pl, Abbotsford, BC V2S 7A8 |

This information was collected under the authority of the Local Elections Campaign Financing Act and the Freedom of Information and Protection of Privacy Act.

Logo Tree Project

LOGO TREE PROJECT. Written by P. Boytchev, e-mail: pavel2008-AT-elica-DOT-net. Rev 1.82, July 2011.

We'd like to thank all the people all over the globe and all over the alphabet who helped us build the Logo Tree:
A: Daniel Ajoy, Eduardo de Antueno, Hal Abelson
B: Andrew Begel, Carl Bogardus, Dominique Bille, George Birbilis, Ian Bicking, Imre Bornemisza, Joshua Bell, Luis Belmonte, Vladimir Batagelj, Wayne Burnett
C: Charlie, David Costanzo, John St. Clair, Loïc Le Coq, Oliver Schmidt-Chevalier, Paul Cockshott
D: Andy Dent, Kent Paul Dolan, Marcelo Duschkin, Mike Doyle
E: G. A. Edgar, Mustafa Elsheikh, Randall Embry
F: Damien Ferey
G: Bill Glass, Jim Goebel
H: Brian Harvey, Jamie Hunter, Jim Howe, Markus Hunke, Rachel Hestilow
I: (none listed)
J: Ken Johnson
K: Eric Klopfer, Leigh Klotz, Susumu Kanemune
L: Janny Looyenga, Jean-François Lucas, Lionel Laské, Timothy Lipetz
M: Andreas Micheler, Bakhtiar Mikhak, George Mills, Greg Michaelson, Lorenzo Masetti, Michael Malien, Sébastien Magdelyns, Silvano Malfatti
N: Chaker Nakhli, Dani Novak, Takeshi Nishiki
O: (none listed)
P: Paliokas Ioannis, U. B. Pavanaja, Wendy Petti
Q: (none listed)
R: Clem Rutter, Emmanuel Roche
S: Bojidar Sendov, Brian Silverman, Cynthia Solomon, Daniel Sanderson, Gene Sullivan
T: Austin Tate, Gary Teachout, Graham Toal, Marcin Truszel, Peter Tomcsanyi, Seth Tisue, Gene Thail
U: Peter Ulrich
V: Carlo Maria Vireca, Álvaro Valdes
W: Arnie Widdowson, Uri Wilensky
X: (none listed)
Y: Andy Yeh, Ben Yates
Z: (none listed)

Introduction: The main goal of the Logo Tree project is to build a genealogical tree of new and old Logo implementations. This tree is expected to clearly demonstrate the evolution, the diversity and the vitality of Logo as a programming language.

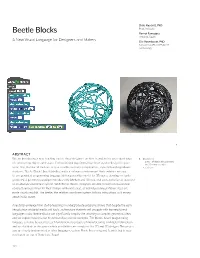

Beetle Blocks: A New Visual Language for Designers and Makers

Beetle Blocks: A New Visual Language for Designers and Makers. Duks Koschitz, PhD (Pratt Institute); Bernat Ramagosa (Arduino, Spain); Eric Rosenbaum, PhD (Massachusetts Institute of Technology).

ABSTRACT: We are introducing a new teaching tool to show designers, architects, and artists procedural ways of constructing objects and space. Computational algorithms have been used in design for quite some time, but not all tools are very accessible to novice programmers, especially undergraduate students. 'Beetle Blocks' (beetleblocks.com) is a software environment that combines an easy-to-use graphical programming language with a generative model for 3D space, drawing on 'turtle geometry,' a geometry paradigm introduced by Abelson and diSessa, that uses a relative as opposed to an absolute coordinate system. With Beetle Blocks, designers are able to learn computational concepts and use them for their designs with more ease, as individual computational steps are made visually explicit. The beetle, the relative coordinate system, follows instructions as it moves about in 3D space. Anecdotal evidence from studio teaching in undergraduate programs shows that despite the early introduction of digital media and tools, architecture students still struggle with learning formal languages today. Beetle Blocks can significantly simplify the teaching of complex geometric ideas and we explain how this can be achieved via several examples. The blocks-based programming language can also be used to teach fundamental concepts of manufacturing and digital fabrication and we elucidate in this paper which possibilities are conducive for 2D and 3D designs.

Figure 1: Example of a stack of blocks (the program), the 3D rendered object, and a 3D print.
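The relative coordinate system that the abstract attributes to turtle geometry can be illustrated with a small sketch. This is not Beetle Blocks code: it is a simplified 2D Python model (Beetle Blocks itself works in 3D), and the names `Turtle`, `forward`, `left`, and `square` are hypothetical, chosen only for illustration. The point is that the program never mentions absolute coordinates; every step is expressed relative to the turtle's current position and heading.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Turtle:
    """Hypothetical 2D turtle: a position plus a heading in degrees."""
    x: float = 0.0
    y: float = 0.0
    heading_deg: float = 0.0  # 0 means facing along the +x axis
    path: list = field(default_factory=list)

    def __post_init__(self):
        # Record the starting point so the path is complete.
        self.path.append((self.x, self.y))

    def forward(self, distance):
        """Move relative to the current heading, not to fixed axes."""
        rad = math.radians(self.heading_deg)
        self.x += distance * math.cos(rad)
        self.y += distance * math.sin(rad)
        self.path.append((self.x, self.y))

    def left(self, angle_deg):
        """Turn counterclockwise relative to the current heading."""
        self.heading_deg = (self.heading_deg + angle_deg) % 360


def square(turtle, side):
    """Draw a square using only relative moves and turns."""
    for _ in range(4):
        turtle.forward(side)
        turtle.left(90)


t = Turtle()
square(t, 10)
# Round away floating-point noise from the trigonometry.
corners = [(round(x, 6), round(y, 6)) for x, y in t.path]
```

Because `square` uses only relative instructions, the same procedure draws the same shape wherever the turtle starts and whichever way it faces, which is the property the abstract contrasts with an absolute coordinate system.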

Wednesday, July 25

Wednesday, July 25. 12:00 Registration and Lunch. 1:00 Pre-Conference Workshops.

Each pre-conference workshop is three hours long (1pm to 4pm) and includes a bag lunch (12pm to 1pm). There are only 25 seats in each workshop; all of the workshops are now sold out.

Getting Started with Scratch: New to Scratch? Not sure how to get started? In this session, members of the MIT Scratch Team will introduce you to the big ideas behind Scratch and share stories of how it is being used across many settings. You will work on a hands-on activity to create your first Scratch project and share it on the Scratch website. You will also learn about resources for diving deeper into Scratch and strategies for helping others get started.

Getting to Know Scratch 2.0: Want to try out the next generation of Scratch? In this hands-on workshop, you'll get a chance to explore and experiment with the basic features of Scratch 2.0. Learn how to create and remix Scratch projects directly in the web browser, make your own programming blocks, share sprites between projects, and much more. Members of the MIT Scratch Team will discuss ideas underlying Scratch 2.0 and strategies for using it.

Camera, Motion, Action! A New Way to Interact with Scratch: With Scratch 2.0, you can use a webcam to sense motion and color in the world around you. Create interactive games, art, or musical projects that respond as you move your body. Trigger sounds as you walk through a scene. Herd cats with the wave of your hand! Join members of the MIT Scratch Team to experiment with these new camera features.

Symbolic Programming Vs. the A.P. Curriculum Brian Harvey

www.logofoundation.org. Symbolic Programming Vs. the A.P. Curriculum, by Brian Harvey, University of California, Berkeley. © 1990 Brian Harvey. © 1991 Logo Foundation. You may copy and distribute this document for educational purposes provided that you do not charge for such copies and that this copyright notice is reproduced in full.

A popular metaphor in recent years compares the process of writing a computer program to that of designing and building a bridge. The point of the metaphor is that it's not acceptable to debug a new bridge design by building the bridge, opening it to traffic, and waiting to see whether or not it collapses. People who use this metaphor argue that the analogous technique isn't acceptable in computer programming either. The phrase "software engineering" was coined to evoke the comparison with civil engineering and other, similar disciplines.

The view of programming as software engineering has had a profound influence on computer science education. The purpose of this paper is to examine that influence, particularly at the high school level through the College Board Advanced Placement curriculum, and suggest an alternative view. (I am focusing attention on the A.P. curriculum to make the point concrete, but it is just one of many similar influences. The College Board did not invent the software engineering view; it tries to follow the lead of its client colleges. At the college level, the ACM curriculum standard could be cited instead. I teach at both levels, but I feel most strongly about the inappropriateness of the software engineering approach in secondary education.)

Software Engineering: Programming as Discipline. What are the characteristics of the software engineering approach? One fundamental assumption is that the goal of a project is given in advance.

Perspectives on Papert

Winter 1999, Volume 17, Number 2. PERSPECTIVES ON PAPERT.

Inside: The History of Mr. Papert • Dreams, Realities, and Possibilities • Papert's Conjecture About the Variability of Piagetian Stages • Papert, Logo, and Loving to Learn • Mixed Feelings about Papert and Logo • Polygons and More • Explaining Yourself • Book Review, Logo News, Teacher Feature

Logo Exchange is published quarterly by the International Society for Technology in Education Special Interest Group for Logo-Using Educators. Logo Exchange solicits articles on all aspects of Logo use in education.

Editorial: Publisher: International Society for Technology in Education. Editor-in-Chief: Gary S. Stager, Pepperdine University. Copy Editing, Design, & Production: Ron Richmond. Founding Editor: Tom Lough, Murray State University. Design, Illustrations & Art Direction: Peter Reynolds, Fablevision Animation Studios, pete@fablevision.com.

Submission of Manuscripts: Manuscripts should be sent by surface mail on a 3.5-inch disk (where possible). Preferred format is Microsoft Word for the Macintosh. ASCII files in either Macintosh or DOS format are also welcome. Submissions may also be made by electronic mail. Where possible, graphics should be submitted electronically. Please include electronic copy, either on disk (preferred) or by electronic mail, with paper submissions.

1998-1999 ISTE Board of Directors. ISTE Executive Board Members: Lynne Schrum, President, University of Georgia, Athens (GA); Heidi Rogers, President-Elect, University of Idaho; Cheryl Lemke, Secretary, Milken Family Foundation (CA); Michael Turzanski, Treasurer, Cisco Systems, Inc. (MA); Chip Kimball, At Large, Lake Washington School District (WA); Cathy Gunn, At Large, Northern Arizona University. ISTE Board Members: Larry Anderson, Mississippi State University

Computer Science Logo Style 2/E - 3 Vol

Computer Science Logo Style 2/e - 3 vol. set. The MIT Press. Brian Harvey. Volume 1: Symbolic Computing. Volume 2: Advanced Techniques. Volume 3: Beyond Programming. February 1997, 1066 pp., 134 illus., $110.00/£70.95 (paper). ISBN-10: 0-262-58151-5. ISBN-13: 978-0-262-58151-6.

This series is for people, adults and teenagers alike, who are interested in computer programming because it's fun. The three volumes use the Logo programming language as the vehicle for an exploration of computer science from the perspective of symbolic computation and artificial intelligence. Logo is a dialect of Lisp, a language used in the most advanced research projects in computer science, especially in artificial intelligence. Throughout the series, functional programming techniques (including higher order functions and recursion) are emphasized, but traditional sequential programming is also used when appropriate.

In the second edition, the first two volumes have been rearranged so that illustrative case studies appear with the techniques they demonstrate. Volume 1 includes a new chapter about higher order functions, and the recursion chapters have been reorganized for greater clarity. Volume 2 includes a new tutorial chapter about macros, an exclusive capability of Berkeley Logo, and two new projects. Throughout the series, the larger program examples have been rewritten for greater readability by more extensive use of data abstraction.

Volume 1, Symbolic Computing, is addressed to a reader who has used computers and wants to learn the ideas behind them.

Snap! Block Programming for Kids …And Parents

Snap! Block programming for kids …and parents. Grzegorz Posyniak. SAP Inside Track Walldorf, January 13th, 2018. #sitwdf #sitkids

Playing an instrument or coding? Why?
It is not about:
• making kids "computer literate"
• making kids into hard-working little app developers
• raising the next generation of Silicon Valley entrepreneurs
It is about:
• training and experience in problem solving
• training and experience in breaking a big project down into the minute tasks that, when put together, make it work
• a natural reality check: interpreters and compilers are unforgiving

Block-based programming languages
• visualize the structure of control blocks
• show the flow of algorithms
• colorful
• fun
• easy to use
• give immediate feedback: an ideal motivation booster

Snap! http://snap.berkeley.edu/
• ready-to-use block-based programming environment
• web browser based, pure HTML Canvas and JavaScript
• uses modern and open JavaScript application servers
• free (libre), open-source
• free cloud service (from MioSoft), SAP Cloud Platform
• used as a teaching tool and research platform around the world
• translated into 38 languages
• visually stunning
• expandable
• easy to use
You can also download the latest source code with: git clone https://github.com/jmoenig/Snap--Build-Your-Own-Blocks.git

"Snap is Scheme disguised as Scratch" (Brian Harvey)
Scratch: "low floor"
• LEGO-like blocks grammar
• 2D cartoon themed microworld (stage, sprites, media)
• events, parallelism
• interactive, continuous feedback, "liveness"
Scheme: "no ceiling"
• dynamically

Stanford Artificial Intelligence Laboratory Memo AIM-301

Stanford Artificial Intelligence Laboratory, Memo AIM-301, June 1977. Computer Science Department Report No. STAN-CS-77-624. Recent Research in Computer Science, by John McCarthy, Professor of Computer Science, Principal Investigator. Associate Investigators: Thomas Binford, Research Associate in Computer Science; Cordell Green, Assistant Professor of Computer Science; David Luckham, Senior Research Associate in Computer Science; Zohar Manna, Research Associate in Computer Science; Terry Winograd, Assistant Professor of Computer Science. Edited by Lester Earnest, Research Computer Scientist. Research sponsored by Advanced Research Projects Agency. COMPUTER SCIENCE DEPARTMENT, Stanford University.

ABSTRACT: This report summarizes recent accomplishments in six related areas: (1) basic AI research and formal reasoning, (2) image understanding, (3) mathematical theory of computation, (4) program verification, (5) natural language understanding, and (6) knowledge based programming.

This research was supported by the Advanced Research Projects Agency of the Department of Defense under ARPA Order No. 2494, Contract MDA903-76-C-0206. The views and conclusions contained in this document are those of the author(s) and should not be interpreted as necessarily representing the official policies, either expressed or implied, of Stanford University or any agency of the U.

61A Lecture 25 -Brian Harvey, Berkeley CS Instructor Extraordinaire

61A Lecture 25. Friday, October 26.

Scheme is a Dialect of Lisp. What are people saying about Lisp?
• "The greatest single programming language ever designed." -Alan Kay, co-inventor of Smalltalk and OOP
• "The only computer language that is beautiful." -Neal Stephenson, John's favorite sci-fi author
• "God's programming language." -Brian Harvey, Berkeley CS instructor extraordinaire
http://imgs.xkcd.com/comics/lisp_cycles.png

Scheme Fundamentals. Scheme programs consist of expressions, which can be:
• Primitive expressions: 2, 3.3, true, +, quotient, ...
• Combinations: (quotient 10 2), (not true), ...
Numbers are self-evaluating; symbols are bound to values. Call expressions have an operator and 0 or more operands.

    > (quotient 10 2)
    5
    > (quotient (+ 8 7) 5)
    3
    > (+ (* 3
            (+ (* 2 4)
               (+ 3 5)))
         (+ (- 10 7)
            6))

"quotient" names Scheme's built-in integer division procedure (i.e., function). Combinations can span multiple lines (spacing doesn't matter).

Special Forms. A combination that is not a call expression is a special form:
• If expression: (if <predicate> <consequent> <alternative>)
• And and or: (and <e1> ... <en>), (or <e1> ... <en>)
• Binding names: (define <name> <expression>)
• New procedures: (define (<name> <formal parameters>) <body>)

    > (define pi 3.14)
    > (* pi 2)
    6.28
    > (define (abs x)
        (if (< x 0)
            (- x)
            x))
    > (abs -3)
    3

The name "pi" is bound to 3.14 in the global frame. A procedure is created and bound to the name "abs".

Lambda Expressions. Lambda expressions evaluate to anonymous functions: (lambda (<formal-parameters>) <body>). Two equivalent expressions: λ

Pairs and Lists. In the late 1950s, computer scientists used confusing names.
• cons: Two-argument procedure that creates a pair
• car: Procedure that returns the first element of a pair
• cdr: Procedure that returns the second element of a pair
• nil: The empty list
They also used a non-obvious notation for recursive lists.
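The pair operations named in this excerpt (cons, car, cdr, nil) can be modeled in a few lines. The following is a minimal Python sketch rather than Scheme, and it rests on stated assumptions: a pair is represented as a 2-tuple, nil as `None`, and the helpers `scheme_list` and `to_python_list` are hypothetical names introduced only for this illustration. It shows how a list is built recursively out of pairs: each pair holds one element and the rest of the list.

```python
# Assumption: nil (the empty list) is modeled as None.
nil = None


def cons(first, second):
    """Create a pair from two values (modeled as a 2-tuple)."""
    return (first, second)


def car(pair):
    """Return the first element of a pair."""
    return pair[0]


def cdr(pair):
    """Return the second element of a pair."""
    return pair[1]


def scheme_list(*items):
    """Hypothetical helper: build a nil-terminated chain of pairs."""
    result = nil
    for item in reversed(items):
        result = cons(item, result)
    return result


def to_python_list(lst):
    """Hypothetical helper: walk the chain with car/cdr until nil."""
    out = []
    while lst is not nil:
        out.append(car(lst))
        lst = cdr(lst)
    return out


numbers = scheme_list(1, 2, 3)
second = car(cdr(numbers))  # the "car of the cdr" is the second element
```

Here `numbers` is the nested structure `(1, (2, (3, None)))`, which mirrors the recursive shape of a Lisp list: the cdr of any non-empty list is itself a list.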