POSIX Shared Memory Course Module


Recommended publications


2.5 Classification of Parallel Computers

2.5.1 Granularity

In parallel computing, granularity means the amount of computation in relation to communication or synchronisation. Periods of computation are typically separated from periods of communication by synchronisation events.

• fine level (same operations with different data)
  ◦ vector processors
  ◦ instruction level parallelism
  ◦ fine-grain parallelism:
    – Relatively small amounts of computational work are done between communication events
    – Low computation to communication ratio
    – Facilitates load balancing
    – Implies high communication overhead and less opportunity for performance enhancement
    – If granularity is too fine, the overhead required for communication and synchronisation between tasks can take longer than the computation
• operation level (different operations simultaneously)
• problem level (independent subtasks)
  ◦ coarse-grain parallelism:
    – Relatively large amounts of computational work are done between communication/synchronisation events
    – High computation to communication ratio
    – Implies more opportunity for performance increase
    – Harder to load balance efficiently

2.5.2 Hardware

Pipelining (was used in supercomputers, e.g. Cray-1)

With N elements in the pipeline and L clock cycles for each element, the calculation takes L + N cycles; without a pipeline it takes L * N cycles.

Example of good code for pipelining:

    do i = 1, k
       z(i) = x(i) + y(i)
    end do

Vector processors: fast vector operations (operations on arrays). The previous example is also good for a vector processor (vector addition), but recursion, for example, is hard to optimise for vector processors.

Example: Intel MMX, a simple vector processor.
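The cycle counts quoted for pipelining can be checked with a few lines of arithmetic. The sketch below is only an illustration in Python: the function names are hypothetical, and the formulas are the slide's own estimates (L + N with a pipeline, L * N without), not a model of any particular machine. `vector_add` mirrors the element-wise loop z(i) = x(i) + y(i).

```python
def pipelined_cycles(n_elements: int, cycles_per_element: int) -> int:
    """Slide estimate with a pipeline: L + N cycles."""
    return cycles_per_element + n_elements


def unpipelined_cycles(n_elements: int, cycles_per_element: int) -> int:
    """Slide estimate without a pipeline: L * N cycles."""
    return cycles_per_element * n_elements


def vector_add(x, y):
    """Element-wise addition; every iteration is independent of the others."""
    return [a + b for a, b in zip(x, y)]


if __name__ == "__main__":
    n, l = 1000, 5  # assumed example values
    print(pipelined_cycles(n, l), unpipelined_cycles(n, l))
    print(vector_add([1.0, 2.0, 3.0], [10.0, 20.0, 30.0]))
```

Because no iteration of the loop depends on an earlier one, a new element can enter the pipeline every cycle, which is where the L + N estimate comes from.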

Chapter 5 Multiprocessors and Thread-Level Parallelism

Computer Architecture: A Quantitative Approach, Fifth Edition
Copyright © 2012, Elsevier Inc. All rights reserved.

Contents
1. Introduction
2. Centralized SMA – shared memory architecture
3. Performance of SMA
4. DMA – distributed memory architecture
5. Synchronization
6. Models of Consistency

1. Introduction. Why multiprocessors?
• Need for more computing power
  ◦ Data intensive applications
  ◦ Utility computing requires powerful processors
• Several ways to increase processor performance
  ◦ Increased clock rate: limited ability
  ◦ Architectural ILP, CPI: increasingly more difficult
  ◦ Multi-processor, multi-core systems: more feasible based on current technologies
• Advantages of multiprocessors and multi-core
  ◦ Replication rather than unique design

Multiprocessor types
• Symmetric multiprocessors (SMP)
  ◦ Share single memory with uniform memory access/latency (UMA)
  ◦ Small number of cores
• Distributed shared memory (DSM)
  ◦ Memory distributed among processors
  ◦ Non-uniform memory access/latency (NUMA)
  ◦ Processors connected via direct (switched) and non-direct (multi-hop) interconnection networks

Important ideas
• Technology drives the solutions.
• Multi-cores have altered the game!
• Thread-level parallelism (TLP) vs ILP.
• Computing and communication deeply intertwined.
• Write serialization exploits broadcast communication

Beej's Guide to Unix IPC

Brian “Beej Jorgensen” Hall
Version 1.1.3, December 1, 2015
Copyright © 2015 Brian “Beej Jorgensen” Hall

This guide is written in XML using the vim editor on a Slackware Linux box loaded with GNU tools. The cover “art” and diagrams are produced with Inkscape. The XML is converted into HTML and XSL-FO by custom Python scripts. The XSL-FO output is then munged by Apache FOP to produce PDF documents, using Liberation fonts. The toolchain is composed of 100% Free and Open Source Software.

Unless otherwise mutually agreed by the parties in writing, the author offers the work as-is and makes no representations or warranties of any kind concerning the work, express, implied, statutory or otherwise, including, without limitation, warranties of title, merchantability, fitness for a particular purpose, noninfringement, or the absence of latent or other defects, accuracy, or the presence of absence of errors, whether or not discoverable. Except to the extent required by applicable law, in no event will the author be liable to you on any legal theory for any special, incidental, consequential, punitive or exemplary damages arising out of the use of the work, even if the author has been advised of the possibility of such damages.

This document is freely distributable under the terms of the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 License. See the Copyright and Distribution section for details.

Contents
1. Intro
1.1. Audience
1.2. Platform and Compiler
1.3. ...

mmap and DMA

CHAPTER THIRTEEN: MMAP AND DMA

This chapter delves into the area of Linux memory management, with an emphasis on techniques that are useful to the device driver writer. The material in this chapter is somewhat advanced, and not everybody will need a grasp of it. Nonetheless, many tasks can only be done through digging more deeply into the memory management subsystem; it also provides an interesting look into how an important part of the kernel works.

The material in this chapter is divided into three sections. The first covers the implementation of the mmap system call, which allows the mapping of device memory directly into a user process’s address space. We then cover the kernel kiobuf mechanism, which provides direct access to user memory from kernel space. The kiobuf system may be used to implement “raw I/O” for certain kinds of devices. The final section covers direct memory access (DMA) I/O operations, which essentially provide peripherals with direct access to system memory.

Of course, all of these techniques require an understanding of how Linux memory management works, so we start with an overview of that subsystem.

Memory Management in Linux

Rather than describing the theory of memory management in operating systems, this section tries to pinpoint the main features of the Linux implementation of the theory. Although you do not need to be a Linux virtual memory guru to implement mmap, a basic overview of how things work is useful. What follows is a fairly lengthy description of the data structures used by the kernel to manage memory.

An Introduction to Linux IPC

Michael Kerrisk, © 2013
linux.conf.au 2013, Canberra, Australia, 2013-01-30
http://man7.org/ http://lwn.net/

Goal
● Limited time!
● Get a flavor of main IPC methods

Me
● Programming on UNIX & Linux since 1987
● Linux man-pages maintainer
  ● http://www.kernel.org/doc/man-pages/
  ● Kernel + glibc API
● Author of: Further info: http://man7.org/tlpi/

You
● Can read a bit of C
● Have a passing familiarity with common syscalls
  ● fork(), open(), read(), write()

There’s a lot of IPC
● Pipes
● FIFOs
● Pseudoterminals
● Sockets
  ● Stream vs Datagram (vs Seq. packet)
  ● UNIX vs Internet domain
● POSIX message queues
● POSIX shared memory
● POSIX semaphores
  ● Named, Unnamed
● System V message queues
● System V shared memory
● System V semaphores
● Shared memory mappings
  ● File vs Anonymous
● Cross-memory attach
  ● process_vm_readv() / process_vm_writev()
● Signals
  ● Standard, Realtime
● Eventfd
● Futexes
● Record locks
● File locks
● Mutexes
● Condition variables
● Barriers
● Read-write locks

It helps to classify
(same list as above)

Chapter 5 Thread-Level Parallelism

Instructor: Josep Torrellas, CS433
Copyright Josep Torrellas 1999, 2001, 2002, 2013

Progress Towards Multiprocessors
+ Rate of speed growth in uniprocessors saturated
+ Wide-issue processors are very complex
+ Wide-issue processors consume a lot of power
+ Steady progress in parallel software: the major obstacle to parallel processing

Flynn’s Classification of Parallel Architectures
According to the parallelism in the instruction (I) and data (D) streams:
• Single I stream, single D stream (SISD): uniprocessor
• Single I stream, multiple D streams (SIMD): same I executed by multiple processors using different D
  – Each processor has its own data memory
  – There is a single control processor that sends the same I to all processors
  – These processors are usually special purpose
• Multiple I streams, single D stream (MISD): no commercial machine
• Multiple I streams, multiple D streams (MIMD)
  – Each processor fetches its own instructions and operates on its own data
  – Architecture of choice for general purpose multiprocessors
  – Flexible: can be used in single user mode or multiprogrammed
  – Uses off-the-shelf microprocessors

MIMD Machines
1. Centralized shared memory architectures
  – Small numbers of processors (≈ up to 16-32)
  – Processors share a centralized memory
  – Usually connected by a bus
  – Also called UMA machines (Uniform Memory Access)
2. Machines with physically distributed memory
  – Support many processors
  – Memory distributed among processors
  – Scales the memory bandwidth if most of the accesses are to local memory
  – Also reduces the memory latency
  – Of course interprocessor communication is more costly and complex
  – Often each node is a cluster (bus based multiprocessor)
  – 2 types, depending on method used for interprocessor communication: 1. ...

[Figures 5.1 and 5.2]

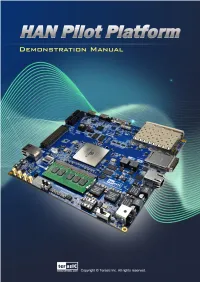

Table of Contents

Chapter 1 Introduction
Chapter 2 Examples for FPGA
2.1 Factory Default Code
2.2 Nios II Control for Programmable PLL/ Temperature/ Power/ 9-axis
2.3 Nios DDR4 SDRAM Test
2.4 RTL DDR4 SDRAM Test
2.5 USB Type-C DisplayPort Alternate Mode
2.6 USB Type-C FX3 Loopback
2.7 HDMI TX and RX in 4K Resolution
2.8 HDMI TX in 4K Resolution
2.9 Low Latency Ethernet 10G MAC Demo
2.10 Socket ...

TrafficDB: HERE's High Performance Shared-Memory Data Store

Ricardo Fernandes, Piotr Zaczkowski, Bernd Göttler, Conor Ettinoffe, Anis Moussa
HERE Global B.V.

ABSTRACT

HERE's traffic-aware services enable route planning and traffic visualisation on web, mobile and connected car applications. These services process thousands of requests per second and require efficient ways to access the information needed to provide a timely response to end-users. The characteristics of road traffic information and these traffic-aware services require storage solutions with specific performance features. A route planning application utilising traffic congestion information to calculate the optimal route from an origin to a destination might hit a database with millions of queries per second. However, existing storage solutions are not prepared to handle such volumes of concurrent read operations, as well as to provide the desired vertical scalability. This paper presents TrafficDB, a shared-memory data store, designed to provide high rates of read operations, enabling applications to directly access the data from memory. Our evaluation demonstrates that TrafficDB handles millions of read operations and provides near-linear scalability on multi-core machines, where additional processes can be spawned to increase the system's throughput without a noticeable impact on the latency of querying the data store.

Figure 1: HERE Location Cloud is accessible worldwide from web-based applications, mobile devices and connected cars.

HERE is a leader in mapping and location-based services, providing fresh and highly accurate traffic information together with advanced route planning and navigation technologies that help drivers reach their destination in the ...

Virtual Memory - Paging

Johan Montelius, KTH, 2020

The process
[Figure: memory layout for a 32-bit Linux process, with regions code (.text), data, heap, stack and kernel, and address marks 0x00000000, 0xC0000000 and 0xffffffff]

Segments - a possible solution
[Figure: processes in virtual space; address translation by MMU (base and bounds); physical memory]

One problem
External fragmentation: areas of free space that are hard to utilize.
Solution: allocate larger segments ... internal fragmentation.

Another problem
We’re reserving physical memory that is not used.
[Figure: virtual space (used, code) mapped onto physical memory (not used?)]

Let’s try again
It’s easier to handle fixed size memory blocks. Can we map a process virtual space to a set of equal size blocks? An address is interpreted as a virtual page number (VPN) and an offset.

Remember the segmented MMU
[Figure: the virtual address is split into an index and an offset; the index selects an entry in the segment table; if the offset is within bounds it is added to the base to form the physical address, otherwise the MMU raises an exception]

The paging MMU
[Figure: the virtual address is split into a VPN and an offset; the VPN selects an entry in the page table; if the page is available the physical address is formed, otherwise the MMU raises an exception]

The MMU
[Figure: virtual address → segmentation (within bounds, else exception) → linear address → paging (page available, else exception) → physical address]

A note on the x86 architecture
The x86-32 architecture supports both segmentation and paging. A virtual address is translated to a linear address using a segmentation table. The linear address is then translated to a physical address by paging. Linux and Windows do not use segmentation to separate code, data or stack. The x86-64 (the 64-bit version of the x86 architecture) has dropped many features for segmentation.
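The split of an address into a virtual page number and an offset can be sketched in a few lines. This is a minimal Python illustration under stated assumptions: 4 KiB pages, a page table modelled as a plain dictionary from VPN to physical frame number, and hypothetical names (`split_address`, `translate`, `PageFault`) that do not come from the slides.

```python
PAGE_SIZE = 4096                           # assumed 4 KiB pages
OFFSET_BITS = PAGE_SIZE.bit_length() - 1   # 12 bits of offset


class PageFault(Exception):
    """Raised when the page is not available (the MMU 'exception' path)."""


def split_address(vaddr: int) -> tuple[int, int]:
    """Return (virtual page number, offset) for a virtual address."""
    return vaddr >> OFFSET_BITS, vaddr & (PAGE_SIZE - 1)


def translate(vaddr: int, page_table: dict[int, int]) -> int:
    """Translate a virtual address using a dict that maps VPN -> frame number."""
    vpn, offset = split_address(vaddr)
    if vpn not in page_table:
        raise PageFault(f"no mapping for VPN {vpn:#x}")
    return (page_table[vpn] << OFFSET_BITS) | offset


if __name__ == "__main__":
    table = {0x12: 0x7}                    # hypothetical single mapping
    print(hex(translate(0x12345, table)))  # VPN 0x12, offset 0x345
```

The offset passes through unchanged; only the page number is looked up, which is why equal-size pages avoid the bounds check that segments need.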

IPC: Mmap and Pipes

Interprocess Communication: Memory mapped files and pipes
CS 241, April 4, 2014, University of Illinois

Shared Memory
[Figure: Process A and Process B each have a private address space; the OS address space holds a shared segment attached to both]
• Processes request the segment
• OS maintains the segment
• Processes can attach/detach the segment
• Can mark segment for deletion on last detach

Shared Memory example

    #include <stdio.h>
    #include <stdlib.h>
    #include <string.h>
    #include <sys/types.h>
    #include <sys/ipc.h>
    #include <sys/shm.h>

    #define SHM_SIZE 1024  /* assumed segment size; the slide does not show it */

    int main(int argc, char *argv[])
    {
        key_t key;
        int shmid;
        char *data;

        /* make the key: */
        if ((key = ftok("shmdemo.c", 'R')) == -1) {
            perror("ftok");
            exit(1);
        }

        /* connect to (and possibly create) the segment: */
        if ((shmid = shmget(key, SHM_SIZE, 0644 | IPC_CREAT)) == -1) {
            perror("shmget");
            exit(1);
        }

        /* attach to the segment to get a pointer to it: */
        data = shmat(shmid, (void *)0, 0);
        if (data == (char *)(-1)) {
            perror("shmat");
            exit(1);
        }

        /* read or modify the segment, based on the command line: */
        if (argc == 2) {
            printf("writing to segment: \"%s\"\n", argv[1]);
            strncpy(data, argv[1], SHM_SIZE);
        } else
            printf("segment contains: \"%s\"\n", data);

        /* detach from the segment: */
        if (shmdt(data) == -1) {
            perror("shmdt");
            exit(1);
        }

        return 0;
    }

Run demo

Memory Mapped Files

Memory-mapped file I/O
• Map a disk block to a page in memory
• Allows file I/O to be treated as routine memory access

Use
• File is initially read using demand paging
  ◦ i.e., loaded from disk to memory only at the moment it’s needed
• When needed, a page-sized portion of the file is read from the file system ...
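Treating file I/O as ordinary memory access can be tried out with Python's standard `mmap` module. The sketch below is an illustration rather than anything from the slides: the function name `mapped_roundtrip` is hypothetical, and it assumes the payload fits in one page. It maps one page of a temporary file, writes through the mapping with slice assignment, and then reads the bytes back through the regular file interface.

```python
import mmap
import tempfile


def mapped_roundtrip(payload: bytes) -> bytes:
    """Write payload through a memory mapping, read it back with file I/O."""
    if len(payload) > mmap.PAGESIZE:
        raise ValueError("payload must fit in one page for this sketch")
    with tempfile.TemporaryFile() as f:
        # A file must have a non-zero size before it can be mapped.
        f.write(b"\x00" * mmap.PAGESIZE)
        f.flush()
        with mmap.mmap(f.fileno(), mmap.PAGESIZE) as view:
            view[: len(payload)] = payload   # plain memory write, no write() call
            view.flush()                     # push dirty pages back to the file
        f.seek(0)
        return f.read(len(payload))


if __name__ == "__main__":
    print(mapped_roundtrip(b"hello via mmap"))
```

Two processes that map the same file with a shared mapping see each other's writes, which is how a memory-mapped file can double as an IPC channel.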

x86 Memory Protection and Translation

COMP 790: OS Implementation, 2/5/20
Don Porter

Logical Diagram
[Figure: User level: Binary Formats, Memory Allocators, Threads. System Calls. Kernel: RCU, File System, Networking, Sync, Memory Management, Device Drivers, CPU Scheduler. Hardware: Interrupts, Disk, Net, Consistency. Today’s Lecture: Focus on Hardware ABI]

Lecture Goal
• Understand the hardware tools available on a modern x86 processor for manipulating and protecting memory
• Lab 2: You will program this hardware
• Apologies: Material can be a bit dry, but important
  – Plus, slides will be good reference
• But, cool tech tricks:
  – How does thread-local storage (TLS) work?
  – An actual (and tough) Microsoft interview question

Undergrad Review
• What is:
  – Virtual memory?
  – Segmentation?
  – Paging?

Memory Mapping
[Figure: Process 1 and Process 2 each have virtual memory containing address 0x1000; physical memory has only one address 0x1000]

    // Program expects (*x)
    // to always be at
    // address 0x1000
    int *x = 0x1000;

Two System Goals
1) Provide an abstraction of contiguous, isolated virtual memory to a program
2) Prevent illegal operations
  – Prevent access to other application or OS memory
  – Detect failures early (e.g., segfault on address 0)
  – More recently, prevent exploits that try to execute program data

Outline

x86 Processor Modes
• x86 ...

A Machine-Independent DMA Framework for NetBSD

Jason R. Thorpe
Numerical Aerospace Simulation Facility, NASA Ames Research Center

Abstract

One of the challenges in implementing a portable kernel is finding good abstractions for semantically-similar operations which often have very machine-dependent implementations. This is especially important on modern machines which share common architectural features, e.g. the PCI bus.

This paper describes why a machine-independent DMA mapping abstraction is needed, the design considerations for such an abstraction, and the implementation of this abstraction in the NetBSD/alpha and NetBSD/i386 kernels.

1. Introduction

NetBSD is a portable, modern UNIX-like operating system which currently runs on eighteen platforms covering nine processor architectures. Some of these platforms, including the Alpha and i386, share the PCI bus as a common architectural feature. In order to share device drivers for PCI devices between different platforms, abstractions that hide the details of bus access must be invented. The details that must be hidden can be broken down into two classes: CPU access to devices on the bus (bus_space) and device access to host memory (bus_dma). Here we will discuss the latter; bus_space is a complicated topic in and of itself, and is beyond the scope of this paper.

1.1. Host platform details

In the example platforms listed above, there are at least three different mechanisms used to perform DMA. The first is used by the i386 platform. This mechanism can be described as "what you see is what you get": the address that the device uses to perform the DMA transfer is the same address that the host CPU uses to access the memory location in question.

[Figure 1 - WYSIWYG DMA: DMA address, Host address]

The second mechanism, employed by the Alpha, is very similar to the first; the address the host CPU ...