Master Thesis Cyber Security

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Elastic Storage for Linux on System z IBM Research & Development Germany

Peter Münch, T/L Test and Development – Elastic Storage for Linux on System z, IBM Research & Development Germany. Elastic Storage for Linux on IBM System z. © Copyright IBM Corporation 2014. Session objectives • This presentation introduces the Elastic Storage, based on General Parallel File System technology that will be available for Linux on IBM System z. Understand the concepts of Elastic Storage and which functions will be available for Linux on System z. Learn how you can integrate and benefit from the Elastic Storage in a Linux on System z environment. Finally, get your first impression in a live demo of Elastic Storage. Trademarks The following are trademarks of the International Business Machines Corporation in the United States and/or other countries. AIX* FlashSystem Storwize* Tivoli* DB2* IBM* System p* WebSphere* DS8000* IBM (logo)* System x* XIV* ECKD MQSeries* System z* z/VM* * Registered trademarks of IBM Corporation The following are trademarks or registered trademarks of other companies. Adobe, the Adobe logo, PostScript, and the PostScript logo are either registered trademarks or trademarks of Adobe Systems Incorporated in the United States, and/or other countries. Cell Broadband Engine is a trademark of Sony Computer Entertainment, Inc. in the United States, other countries, or both and is used under license therefrom. Intel, Intel logo, Intel Inside, Intel Inside logo, Intel Centrino, Intel Centrino logo, Celeron, Intel Xeon, Intel SpeedStep, Itanium, and Pentium are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries. -

What Is Peer-To-Peer File Transfer? Bandwidth It Can Use

What is Peer-to-Peer file transfer? A peer-to-peer, or “P2P,” file transfer service allows the user to share computer files through the Internet. Examples of P2P services include KaZaA, Grokster, Gnutella, Morpheus, and BearShare. These services are set up to allow users to search for and download files to their computers, and to enable users to make files available for others to download from their computers. How do these services function? Peer to peer file transfer services are highly decentralized, creating a network of linked users. [...] sharing, with no cap on the amount of bandwidth it can use. Thus, a single NSF PC connected to NSF’s LAN with a standard 100Mbps network card could, with KaZaA’s default settings, conceivably saturate NSF’s T3 (45Mbps) internet connection. The KaZaA software assesses the quality of the PC’s internet connection and designates computers with high-speed connections as “Supernodes,” meaning that they provide a hub between various users, a source of information about files available on other users’ PCs. This uses much more of the computer’s resources, including bandwidth and processing capability. The free version of KaZaA is supported by advertising, which appears on the user interface of the program and also causes pop- [...] commonly used to trade copyrighted music and software. The Recording Industry Association of America tracks users of this software and has begun initiating lawsuits against individuals who use P2P systems to steal copyrighted material or to provide copyrighted software to others to download freely. How does use of these services create security issues at NSF? When configuring these services, it is possible to designate as “shared” not only the one folder KaZaA sets up by default, but also the entire contents of the user’s computer as well as any NSF network drives to which the user has access, to be searchable and -

Oracle® ZFS Storage Appliance Security Guide, Release OS8.6.X

Oracle® ZFS Storage Appliance Security Guide, Release OS8.6.x Part No: E76480-01 September 2016 Copyright © 2014, 2016, Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS. Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are "commercial computer software" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs. -

BESIII Physical Analysis on Hadoop Platform

20th International Conference on Computing in High Energy and Nuclear Physics (CHEP2013) IOP Publishing Journal of Physics: Conference Series 513 (2014) 032044 doi:10.1088/1742-6596/513/3/032044 BESIII Physical Analysis on Hadoop Platform Jing HUO12, Dongsong ZANG12, Xiaofeng LEI12, Qiang LI12, Gongxing SUN1 1Institute of High Energy Physics, Beijing, China 2University of Chinese Academy of Sciences, Beijing, China E-mail: [email protected] Abstract. In the past 20 years, computing cluster has been widely used for High Energy Physics data processing. The jobs running on the traditional cluster with a Data-to-Computing structure, have to read large volumes of data via the network to the computing nodes for analysis, thereby making the I/O latency become a bottleneck of the whole system. The new distributed computing technology based on the MapReduce programming model has many advantages, such as high concurrency, high scalability and high fault tolerance, and it can benefit us in dealing with Big Data. This paper brings the idea of using MapReduce model to do BESIII physical analysis, and presents a new data analysis system structure based on Hadoop platform, which not only greatly improve the efficiency of data analysis, but also reduces the cost of system building. Moreover, this paper establishes an event pre-selection system based on the event level metadata(TAGs) database to optimize the data analyzing procedure. 1. Introduction 1.1 The current BESIII computing architecture High Energy Physics experiment is a typical data-intensive application. The BESIII computing system now consist of 3PB+ data, 6500 CPU cores, and it is estimated that there will be more than 10PB data produced in the future 5 years. -

A Generic Data Exchange System for F2F Networks

A Generic Data Exchange System for F2F Networks. Cyril Soler, 03 Feb. 2018. Outline: Overview of Retroshare; The GXS system; Decentralize your app! The Retroshare Project: mesh computers using signed TLS over TCP/UDP/Tor/I2P; anonymous end-to-end encrypted FT with swarming; mail, IRC chat, forums, channels; available on Mac OS, Linux, Windows (+ Android). -

Backup Preso PMUG-NJ 2019

Backing Up Our Apple Devices with Dave Hamilton Who are we? • Dave says: I’ll go first • Mac Observer - Over 20 years • Mac Geek Gab Podcast - celebrating our 14th year • Geek... forever! Who are we? • Your turn • Mac owners? • Less than one year? • More than 5 years? • Do you back-up? What you’ll learn • Why we backup • What to backup • Different types of backups • How (and how often) to backup • How to make sure your backups will help! First... why? What are the benefits? • Start with the obvious: you don’t want to lose data • You also don’t want to lose time • Save you if hardware dies/malfunctions • Save you when hardware goes missing/stolen • Save you if YOU make a mistake What should I back up? • Anything you’re not willing to lose • Pictures, Movies, Data created by others • Anything you don’t want to recreate • Work documents, presentations, spreadsheets • Your system files, Applications, and Settings • Anything else? Definitions of a Back-up • Automated • Verified • Restorable Time Machine • Hourly, Daily, Weekly, Monthly • Requires additional drive as destination • Internal • External • Network • To Restore, you have to copy files back to original/other drive • Pros: Automated, Incremental, Easy, Free • Cons: Non-bootable, Not (really) Verified Cool Stuff Found TimeMachineEditor • Time Machine Backs Up Every Hour • Can slow down your machine • http://timesoftware.free.fr/timemachineeditor/ • Must Turn Time Machine Off • Demo-> Cool Stuff Found BackupLoupe • Time Machine is rather secretive • If only you knew WHAT was being backed -

The eDonkey File-Sharing Network

The eDonkey File-Sharing Network Oliver Heckmann, Axel Bock, Andreas Mauthe, Ralf Steinmetz Multimedia Kommunikation (KOM) Technische Universität Darmstadt Merckstr. 25, 64293 Darmstadt (heckmann, bock, mauthe, steinmetz)@kom.tu-darmstadt.de Abstract: The eDonkey 2000 file-sharing network is one of the most successful peer-to-peer file-sharing applications, especially in Germany. The network itself is a hybrid peer-to-peer network with client applications running on the end-system that are connected to a distributed network of dedicated servers. In this paper we describe the eDonkey protocol and measurement results on network/transport layer and application layer that were made with the client software and with an open-source eDonkey server we extended for these measurements. 1 Motivation and Introduction Most of the traffic in the network of access and backbone Internet service providers (ISPs) is generated by peer-to-peer (P2P) file-sharing applications [San03]. These applications are typically bandwidth greedy and generate more long-lived TCP flows than the WWW traffic that was dominating the Internet traffic before the P2P applications. To understand the influence of these applications and the characteristics of the traffic they produce and their impact on network design, capacity expansion, traffic engineering and shaping, it is important to empirically analyse the dominant file-sharing applications. The eDonkey file-sharing protocol is one of these file-sharing protocols. It is implemented by the original eDonkey2000 client [eDonkey] and additionally by some open-source clients like mldonkey [mlDonkey] and eMule [eMule]. According to [San03] it is with 52% of the generated file-sharing traffic the most successful P2P file-sharing network in Germany, even more successful than the FastTrack protocol used by the P2P client KaZaa [KaZaa] that comes to 44% of the traffic. -

IPFS and Friends: a Qualitative Comparison of Next Generation Peer-To-Peer Data Networks Erik Daniel and Florian Tschorsch

IPFS and Friends: A Qualitative Comparison of Next Generation Peer-to-Peer Data Networks Erik Daniel and Florian Tschorsch Abstract—Decentralized, distributed storage offers a way to reduce the impact of data silos as often fostered by centralized cloud storage. While the intentions of this trend are not new, the topic gained traction due to technological advancements, most notably blockchain networks. As a consequence, we observe that a new generation of peer-to-peer data networks emerges. In this survey paper, we therefore provide a technical overview of the next generation data networks. We use select data networks to introduce general concepts and to emphasize new developments. Specifically, we provide a deeper outline of the Interplanetary File System and a general overview of Swarm, the Hypercore Protocol, SAFE, Storj, and Arweave. We identify common building blocks and provide a qualitative comparison. From the overview, we derive future challenges and research goals concerning data networks. [...] types of files [1]. Napster and Gnutella marked the beginning and were followed by many other P2P networks focusing on specialized application areas or novel network structures. For example, Freenet [2] realizes anonymous storage and retrieval. Chord [3], CAN [4], and Pastry [5] provide protocols to maintain a structured overlay network topology. In particular, BitTorrent [6] received a lot of attention from both users and the research community. BitTorrent introduced an incentive mechanism to achieve Pareto efficiency, trying to improve network utilization achieving a higher level of robustness. We consider networks such as Napster, Gnutella, Freenet, BitTorrent, and many more as first generation P2P data networks, -

SoftNAS Deployment Guide for High-Performance SQL Storage

SoftNAS Deployment Guide for High-Performance SQL Storage Introduction SoftNAS cloud NAS systems are based on an innovative, memory-centric storage architecture that delivers unparalleled NAS performance, efficiency, and value. They incorporate a hybrid disk storage technology that tailors the usage of data disks, log solid-state cache drives (SSDs), and read cache SSDs to the data share's specific needs. Additional features include variable storage record size, data compression, and multiple connectivity options. As a Cloud NAS solution, SoftNAS cloud NAS systems provide an excellent base for Microsoft Windows Server deployments by providing iSCSI or Fibre Channel block storage for Microsoft SQL Server, and network file system (NFS) or server message block (SMB) file storage for Microsoft Windows client access. This document covers the best practices to follow when deploying Microsoft SQL Server on a SoftNAS cloud NAS system. The intended audience is storage administrators and Microsoft SQL Server database administrators. Maintaining High Availability As with any business-critical application, high availability is a crucial design criterion to be considered when deploying a Microsoft SQL Server installation. Microsoft SQL Server 2016 can be installed on local and/or shared file systems, and SoftNAS cloud NAS systems can satisfy both of these options. Local file systems (from the Microsoft Windows Server perspective) are hosted as block volumes—iSCSI and/or Fibre-Channel-connected LUNs and file systems as SMB and/or NFS volumes. High availability starts with the network connectivity supporting the storage and server interconnectivity. Any design for the storage infrastructure should avoid single points of failure. Because many white papers and publications cover storage-area networking and network-attached storage resilience, those topics are not covered in detail in this paper. -

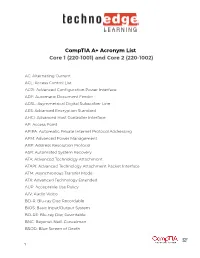

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002)

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002) AC: Alternating Current ACL: Access Control List ACPI: Advanced Configuration Power Interface ADF: Automatic Document Feeder ADSL: Asymmetrical Digital Subscriber Line AES: Advanced Encryption Standard AHCI: Advanced Host Controller Interface AP: Access Point APIPA: Automatic Private Internet Protocol Addressing APM: Advanced Power Management ARP: Address Resolution Protocol ASR: Automated System Recovery ATA: Advanced Technology Attachment ATAPI: Advanced Technology Attachment Packet Interface ATM: Asynchronous Transfer Mode ATX: Advanced Technology Extended AUP: Acceptable Use Policy A/V: Audio Video BD-R: Blu-ray Disc Recordable BIOS: Basic Input/Output System BD-RE: Blu-ray Disc Rewritable BNC: Bayonet-Neill-Concelman BSOD: Blue Screen of Death BYOD: Bring Your Own Device CAD: Computer-Aided Design CAPTCHA: Completely Automated Public Turing test to tell Computers and Humans Apart CD: Compact Disc CD-ROM: Compact Disc-Read-Only Memory CD-RW: Compact Disc-Rewritable CDFS: Compact Disc File System CERT: Computer Emergency Response Team CFS: Central File System, Common File System, or Command File System CGA: Computer Graphics and Applications CIDR: Classless Inter-Domain Routing CIFS: Common Internet File System CMOS: Complementary Metal-Oxide Semiconductor CNR: Communications and Networking Riser COMx: Communication port (x = port number) CPU: Central Processing Unit CRT: Cathode-Ray Tube DaaS: Data as a Service DAC: Discretionary Access Control DB-25: Serial Communications -

Improving Learning of Programming Through E-Learning by Using Asynchronous Virtual Pair Programming

Improving Learning of Programming Through E-Learning by Using Asynchronous Virtual Pair Programming Abdullah Mohd ZIN Department of Industrial Computing Faculty of Information Science and Technology National University of Malaysia Bangi, MALAYSIA Sufian IDRIS Department of Computer Science Faculty of Information Science and Technology National University of Malaysia Bangi, MALAYSIA Nantha Kumar SUBRAMANIAM Open University Malaysia (OUM) Faculty of Information Technology and Multimedia Communication Kuala Lumpur, MALAYSIA ABSTRACT The problem of learning programming subjects, especially through distance learning and E-Learning, has been widely reported in literatures. Many attempts have been made to solve these problems. This has led to many new approaches in the techniques of learning of programming. One of the approaches that have been proposed is the use of virtual pair programming (VPP). Most of the studies about VPP in distance learning or e-learning environment focus on the use of the synchronous mode of collaboration between learners. Not much research have been done about asynchronous VPP. This paper describes how we have implemented VPP and a research that has been carried out to study the effectiveness of asynchronous VPP for learning of programming. In particular, this research study the effectiveness of asynchronous VPP in the learning of object-oriented programming among students at Open University Malaysia (OUM). The result of the research has shown that most of the learners have given positive feedback, indicating that they are happy with the use of asynchronous VPP. At the same time, learners did recommend some extra features that could be added in making asynchronous VPP more enjoyable. Keywords: Pair-programming; Virtual Pair-programming; Object Oriented Programming INTRODUCTION Delivering program of Information Technology through distance learning or E-Learning is indeed a very challenging task. -

The Joe-E Language Specification (Draft)

The Joe-E Language Specification (draft) Adrian Mettler David Wagner famettler,[email protected] February 3, 2008 Disclaimer: This is a draft version of the Joe-E specification, and is subject to change. Sections 5 - 7 mention some (but not all) of the aspects of the Joe-E language that are future work or current works in progress. 1 Introduction We describe the Joe-E language, a capability-based subset of Java intended to make it easier to build secure systems. The goal of object capability languages is to support the Principle of Least Authority (POLA), so that each object naturally receives the least privilege (i.e., least authority) needed to do its job. Thus, we hope that Joe-E will support secure programming while remaining familiar to Java programmers everywhere. 2 Goals We have several goals for the Joe-E language: • Be familiar to Java programmers. To minimize the barriers to adoption of Joe-E, the syntax and semantics of Joe-E should be familiar to Java programmers. We also want Joe-E programmers to be able to use all of their existing tools for editing, compiling, executing, debugging, profiling, and reasoning about Java code. We accomplish this by defining Joe-E as a subset of Java. In general: Subsetting Principle: Any valid Joe-E program should also be a valid Java program, with identical semantics. This preserves the semantics Java programmers will expect, which are critical to keeping the adoption costs manageable. Also, it means all of today's Java tools (IDEs, debuggers, profilers, static analyzers, theorem provers, etc.) will apply to Joe-E code.