On the Performance Analysis of Large Scale, Dynamic, Distributed and Parallel Systems

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Edsger Dijkstra: the Man Who Carried Computer Science on His Shoulders

INFERENCE / Vol. 5, No. 3 Edsger Dijkstra The Man Who Carried Computer Science on His Shoulders Krzysztof Apt s it turned out, the train I had taken from dsger dijkstra was born in Rotterdam in 1930. Nijmegen to Eindhoven arrived late. To make He described his father, at one time the president matters worse, I was then unable to find the right of the Dutch Chemical Society, as “an excellent Aoffice in the university building. When I eventually arrived Echemist,” and his mother as “a brilliant mathematician for my appointment, I was more than half an hour behind who had no job.”1 In 1948, Dijkstra achieved remarkable schedule. The professor completely ignored my profuse results when he completed secondary school at the famous apologies and proceeded to take a full hour for the meet- Erasmiaans Gymnasium in Rotterdam. His school diploma ing. It was the first time I met Edsger Wybe Dijkstra. shows that he earned the highest possible grade in no less At the time of our meeting in 1975, Dijkstra was 45 than six out of thirteen subjects. He then enrolled at the years old. The most prestigious award in computer sci- University of Leiden to study physics. ence, the ACM Turing Award, had been conferred on In September 1951, Dijkstra’s father suggested he attend him three years earlier. Almost twenty years his junior, I a three-week course on programming in Cambridge. It knew very little about the field—I had only learned what turned out to be an idea with far-reaching consequences. a flowchart was a couple of weeks earlier. -

Resource Bounds for Self-Stabilizing Message Driven Protocols

RESOURCE BOUNDS FOR SELF-STABILIZING MESSAGE DRIVEN PROTOCOLS SHLOMI DOLEV¤, AMOS ISRAELIy , AND SHLOMO MORANz Abstract. Self-stabilizing message driven protocols are defined and discussed. The class weak- exclusion that contains many natural tasks such as `-exclusion and token-passing is defined, and it is shown that in any execution of any self-stabilizing protocol for a task in this class, the configuration size must grow at least in a logarithmic rate. This last lower bound is valid even if the system is supported by a time-out mechanism that prevents communication deadlocks. Then we present three self-stabilizing message driven protocols for token-passing. The rate of growth of configuration size for all three protocols matches the aforementioned lower bound. Our protocols are presented for two processor systems but can be easily adapted to rings of arbitrary size. Our results have an interesting interpretation in terms of automata theory. Key words. self-stabilization, message passing, token-passing, shared-memory AMS subject classifications. 68M10, 68M15, 68Q10, 68Q20 1. Introduction. A distributed system is a set of state machines, called pro- cessors, which communicate either by shared variables or by message-passing. In the first case, the system is a shared memory system, in the second case the system is a message-passing system. A distributed system is self-stabilizing if it can be started in any possible global state. Once started, the system regains its consistency by itself, without any kind of an outside intervention. The self-stabilization property is very useful for systems in which processors may crash and then recover spontaneously in an arbitrary state. -

Optical PUF for Non-Forwardable Vehicle Authentication

Computer Communications 93 (2016) 52–67 Contents lists available at ScienceDirect Computer Communications journal homepage: www.elsevier.com/locate/comcom R Optical PUF for Non-Forwardable Vehicle Authentication ∗ Shlomi Dolev a,1, Łukasz Krzywiecki b,2, Nisha Panwar a, , Michael Segal c a Department of Computer Science, Ben-Gurion University of the Negev, Israel b Department of Computer Science, Wrocław University of Technology, Poland c Department of Communication Systems Engineering, Ben-Gurion University of the Negev, Israel a r t i c l e i n f o a b s t r a c t Article history: Modern vehicles are configured to exchange warning messages through IEEE 1609 Dedicated Short Range Available online 1 June 2016 Communication over IEEE 802.11p Wireless Access in Vehicular Environment. Essentially, these warning messages must associate an authentication factor such that the verifier authenticates the message origin Keywords: Authentication via visual binding. Interestingly, the existing vehicle communication incorporates the message forward- Certificate ability as a requested feature for numerous applications. On the contrary, a secure vehicular communica- Wireless radio channel tion relies on a message authentication with respect to the sender identity. Currently, the vehicle security Optical channel infrastructure is vulnerable to message forwarding in a way that allows an incorrect visual binding with Challenge response pairs the malicious vehicle, i.e., messages seem to originate from a malicious vehicle due to non-detectable Verification message relaying instead of the actual message sender. We introduce the non-forwardable authentication to avoid an adversary coalition attack scenario. These messages should be identifiable with respect to the immediate sender at every hop. -

Resource-Competitive Algorithms1 1 Introduction

Resource-Competitive Algorithms1 Michael A. Bender Varsha Dani Department of Computer Science Department of Computer Science Stony Brook University University of New Mexico Stony Brook, NY, USA Albuquerque, NM, USA [email protected] [email protected] Jeremy T. Fineman Seth Gilbert Department of Computer Science Department of Computer Science Georgetown University National University of Singapore Washington, DC, USA Singapore [email protected] [email protected] Mahnush Movahedi Seth Pettie Department of Computer Science Electrical Eng. and Computer Science Dept. University of New Mexico University of Michigan Albuquerque, NM, USA Ann Arbor, MI, USA [email protected] [email protected] Jared Saia Maxwell Young Department of Computer Science Computer Science and Engineering Dept. University of New Mexico Mississippi State University Albuquerque, NM, USA Starkville, MS, USA [email protected] [email protected] Abstract The point of adversarial analysis is to model the worst-case performance of an algorithm. Un- fortunately, this analysis may not always reflect performance in practice because the adversarial assumption can be overly pessimistic. In such cases, several techniques have been developed to provide a more refined understanding of how an algorithm performs e.g., competitive analysis, parameterized analysis, and the theory of approximation algorithms. Here, we describe an analogous technique called resource competitiveness, tailored for dis- tributed systems. Often there is an operational cost for adversarial behavior arising from band- width usage, computational power, energy limitations, etc. Modeling this cost provides some notion of how much disruption the adversary can inflict on the system. In parameterizing by this cost, we can design an algorithm with the following guarantee: if the adversary pays T , then the additional cost of the algorithm is some function of T . -

Cyber Security Cryptography and Machine Learning

Lecture Notes in Computer Science 12716 Founding Editors Gerhard Goos Karlsruhe Institute of Technology, Karlsruhe, Germany Juris Hartmanis Cornell University, Ithaca, NY, USA Editorial Board Members Elisa Bertino Purdue University, West Lafayette, IN, USA Wen Gao Peking University, Beijing, China Bernhard Steffen TU Dortmund University, Dortmund, Germany Gerhard Woeginger RWTH Aachen, Aachen, Germany Moti Yung Columbia University, New York, NY, USA More information about this subseries at http://www.springer.com/series/7410 Shlomi Dolev · Oded Margalit · Benny Pinkas · Alexander Schwarzmann (Eds.) Cyber Security Cryptography and Machine Learning 5th International Symposium, CSCML 2021 Be’er Sheva, Israel, July 8–9, 2021 Proceedings Editors Shlomi Dolev Oded Margalit Ben-Gurion University of the Negev Ben-Gurion University of the Negev Be’er Sheva, Israel Be’er Sheva, Israel Benny Pinkas Alexander Schwarzmann Bar-Ilan University Augusta University Tel Aviv, Israel Augusta, GA, USA ISSN 0302-9743 ISSN 1611-3349 (electronic) Lecture Notes in Computer Science ISBN 978-3-030-78085-2 ISBN 978-3-030-78086-9 (eBook) https://doi.org/10.1007/978-3-030-78086-9 LNCS Sublibrary: SL4 – Security and Cryptology © Springer Nature Switzerland AG 2021 This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. The use of general descriptive names, registered names, trademarks, service marks, etc. -

Dagstuhl-Seminar-Report

Dagstuhl Seminar on Time Service Danny Dolev, Hebrew University R¨udiger Reischuk, Med. Universitdt zu L¨ubeck Fred B. Schneider, Cornell University H. Raymond Strong, IBM Almaden Research Schloß Dagstuhl, March 11. – March 15. 1996 Contents Introduction ................................................... ........ 3 Final Seminar Programme ............................................. 4 Abstracts of Presentation: David Mills: Precision Network Time Synchronization ................... 5 Keith Marzullo: Synchronizing Clocks and Reading Sensors .............. 5 John Rushby: Formal Verification of Clock Synchronization Algorithms .. 6 Ulrich Schmid: Interval-based Clock Synchronization..................... 7 Klaus Schossmaier: UTCSU - An ASIC for Supporting Clock Synchronization for Distributed Real-Time Systems ................................ 8 Wolfgang Halang: Time Services Based on Radio Transmitted Official UTC................................................... .......... 9 Christof Fetzer: Fail-Aware Clock Synchronization....................... 9 Paulo Verissimo: Cesium Spray: a Precise and Accurate Time Services on Large-Scaled Distributed Systems ............................. 10 Shlomi Dolev: Self-Stabilizing Clock Synchronization Algorithms ........ 10 Wolfgang Reisig: A Temporal Logic for “As Soon As Possible” ......... 11 Augusto Ciuffoletti: Self-Stabilization Issues in Clock Synchronization Algorithms ................................................... ... 11 Sergio Rajsbaum:Extending Causal Order with Real-Time Specifications and -

Fast and Compact Distributed Verification and Self-Stabilization Of

Fast and Compact Distributed Verification and Self-Stabilization of a DFS Tree Shay Kutten and Chhaya Trehan Faculty of Industrial Engineering and Management, Technion, Haifa, Israel [email protected], [email protected] Abstract. We present algorithms for distributed verification and silent- stabilization of a DFS(Depth First Search) spanning tree of a connected network. Computing and maintaining such a DFS tree is an important task, e.g., for constructing efficient routing schemes. Our algorithm im- proves upon previous work in various ways. Comparable previous work has space and time complexities of O(n log ∆) bits per node and O(nD) respectively, where ∆ is the highest degree of a node, n is the number of nodes and D is the diameter of the network. In contrast, our algo- rithm has a space complexity of O(log n) bits per node, which is optimal for silent-stabilizing spanning trees and runs in O(n) time. In addition, our solution is modular since it utilizes the distributed verification al- gorithm as an independent subtask of the overall solution. It is possible to use the verification algorithm as a stand alone task or as a subtask in another algorithm. To demonstrate the simplicity of constructing ef- ficient DFS algorithms using the modular approach, we also present a (non-silent) self-stabilizing DFS token circulation algorithm for general networks based on our silent-stabilizing DFS tree. The complexities of this token circulation algorithm are comparable to the known ones. Keywords: Fault Tolerance, Self-* Solutions, Silent-Stabilization, DFS, Spanning Trees 1 Introduction A clear separation is common between the notions of computing and verification in sequential systems. -

SYSTOR 2007 IBM Haifa Systems & Storage Conference Virtualization

IBM Haifa Leadership Seminar œ Call for Participation Keynote speakers: SYSTOR 2007 Willy Zwaenepoel, EPFL IBM Haifa Systems & Storage Conference Danny Dolev, HUJI Virtualization Workshop October 29, 2007 Organizing committee: Organized by the IBM Haifa Research Lab Erez Hadad, HRL The Systems Technologies and Services Department at IBM's Haifa Research Lab Muli Ben-Yehuda, HRL (HRL), in collaboration with the Technion - Israel Institute of Technology, cordially invite you to a full-day workshop on the subject of virtualization within the SYSTOR Eliezer Dekel, HRL 2007 conference. SYSTOR 2007 is the first high-quality refereed systems and storage Miriam Allalouf, HRL conference organized by IBM Haifa Labs, drawing upon the successful foundation of previous systems and storage seminars. The purpose of this conference is to forge Ben-Ami Yassour, HRL and nourish research and working relations within the academic and industrial Yaron Wolfsthal, HRL community in Israel and in the world, targeting researchers and practitioners alike. Dalit Naor, HRL The virtualization workshop in SYSTOR 2007 will present contemporary Assaf Schuster, Technion advancements in the field of systems virtualization and will feature two renowned keynote speakers: Danny Dolev from the Hebrew University of Jerusalem, Israel, Yitzhak (Tsahi) Birk, Technion and Willy Zwaenepoel from Lausanne Federal Institute of Technology (EPFL), Switzerland. Program committee: SYSTOR 2007 will be held on Monday and Tuesday, October 29-30, 2007, at the HRL site at the Haifa University campus from 09:15 to 17:15. The Virtualization Workshop Assaf Schuster, Technion will take place on the first day followed by the Storage: Market Trends and Future Yitzhak (Tsahi) Birk, Technion R&D Seminar for practitioners, organized by the IBM Haifa Storage Lab, on the second day. -

Blindly Follow: SITS CRT and FHE for DCLSMPC of DUFSM (Preliminary Version)

Blindly Follow: SITS CRT and FHE for DCLSMPC of DUFSM (Preliminary Version) Shlomi Dolev and Stav Doolman Department of Computer Science, Ben-Gurion University of the Negev, Israel Abstract. A Statistical Information Theoretic Secure (SITS) system utilizing the Chinese Remainder Theorem (CRT), coupled with Fully Ho- momorphic Encryption (FHE) for Distributed Communication-less Se- cure Multiparty Computation (DCLSMPC) of any Distributed Unknown Finite State Machine (DUFSM) is presented. Namely, secret shares of the input(s) and output(s) are passed to/from the computing parties, while there is no communication between them throughout the computation. We propose a novel approach of transition table representation and poly- nomial representation for arithmetic circuits evaluation, joined with a CRT secret sharing scheme and FHE to achieve SITS communication-less within computational secure execution of DUFSM. We address the severe limitation of FHE implementation over a single server to cope with a ma- licious or Byzantine server. We use several distributed memory-efficient solutions that are significantly better than the majority vote in replicated state machines, where each participant maintains an FHE replica. A Dis- tributed Unknown Finite State Machine (DUFSM) is achieved when the transition table is secret shared or when the (possible zero value) coeffi- cients of the polynomial are secret shared, implying communication-less SMPC of an unknown finite state machine. Keywords: Secure Multiparty Computation, Replicated State Machine, Chinese Remainder Theorem 1 Introduction The processing of encrypted information where the computation program is un- known is an important task that can be solved in a distributed fashion using communication among several participants, e.g., [14]. -

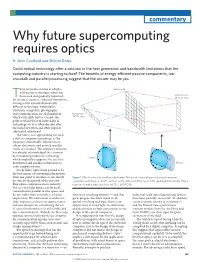

Why Future Supercomputing Requires Optics H

commentary Why future supercomputing requires optics H. John Caulfi eld and Shlomi Dolev Could optical technology off er a solution to the heat generation and bandwidth limitations that the computing industry is starting to face? The benefi ts of energy-effi cient passive components, low crosstalk and parallel processing suggest that the answer may be yes. here are points in time at which a Matrix SLM well-known technology, which has been used and gradually improved Output vector T detector array for decades, reaches its physical limitations, forcing a shift towards dramatically diff erent technology. Automobiles, television, computers, photography and communication are all domains in Input vector which such shift s have occurred. Th e VCSEL array point at which these massive shift s in technology occur is oft en decades aft er the initial invention and oft en requires substantial investment. Th e time is fast approaching for such a shift in computer technology, as the frequency (bandwidth) limitations of silicon electronics and printed metallic tracks are reached. Th e computer industry has already acknowledged the situation by introducing multicore technology Lenses which implicitly recognizes the use of a distributed and parallel architecture to Lenses scale computer power. For decades, optics have proved to be the best means of conveying information from one point to another, as can clearly Figure 1 | The Stanford Vector Matrix Multiplier. The use of a parallel approach greatly improves be seen by the growth of the massive computing performance. VCSEL, vertical cavity surface emitting laser; SLM, spatial light modulator. Figure fi bre optics communications industry. -

Towards In-Vivo Radio Transmitting Nanorobots

Towards In-Vivo Radio Transmitting Nanorobots (Brief Summary) Shlomi Dolev and Ram Prasadh Narayanan Department of Computer Science, Ben-Gurion University of the Negev, Israel fdolev,[email protected] ABSTRACT are used in cantilever based NEM devices for sensing and radio tranceiving applications [5, 6]. An implementable design of a Nano Electro Mechani- cal (NEM) Carbon Nanotube (CNT) cantilever for com- munication in nanorobots is sketched in this brief sum- mary. Nano communication devices can provide future 2 NANORADIO AND PULSE nanorobots with the ability to communicate its status GENERATION to an outside entity as well as synchronize in a medium, to form an intelligent swarm. We develop a model which There has been a growing interest in the miniaturiza- can help to tune the cantilever, based on its geometry tion of devices, specifically for active and passive elec- and other properties, for the intended oscillation fre- tronic systems, including devices used for radio com- quency. Electrostatic actuation over CNT cantilever is munication. Conventional nano particles in medicine used to omit radio signal transmission. The electric- are basically used for targeted treatment, imaging, flo- ity can be harvested from the blood sugar. The design rescence agents, magnetic and thermal induced actu- is envisioned to be a part of an autonomous inorganic ation [7]. Recent advances in material research and nanorobot for medical purposes. fabrication techniques has paved the way for testing newer combinations and material geometry to improve Keywords: nano-electro-mechanical systems (nems), the effect of nanoparticle-based treatment techniques. actuator, cancer, energy harvesting, carbon-nanotube Recently, researchers fabricated tiny autonomous DNA (cnt) detector, tumor detection nanorobots to be used in sensing and targeted delivery of drugs [8, 9, 10]. -

CURRICULUM VITAE and LIST of PUBLICATIONS July 2019 Personal Details: Name: Shlomi (Shlomo) Dolev

CURRICULUM VITAE AND LIST OF PUBLICATIONS July 2019 Personal Details: Name: Shlomi (Shlomo) Dolev. Date and place of birth: 5/12/58, Israel. Regular military service: 13/2/77 to 12/8/80 (Cap- tain). Address and telephone number at work: Department of Computer Science, Ben-Gurion University of the Negev, Beer-Sheva 84105, Israel, Tel: 08-6472718, Fax: 08-6477650, Email: [email protected]. Parent of: Noa, Yorai, Hagar and Eden. Short Biography: Shlomi Dolev received his B.Sc. in Engineering and B.A. in Computer Science in 1984 and 1985, and his M.Sc. and D.Sc. in computer Science in 1990 and 1992 from the Technion Israel Institute of Technology. From 1992 to 1995 he was at Texas A&M University as a visiting research specialist. In 1995 he joined the Department of Mathematics and Computer Science at Ben-Gurion University. Shlomi is the founder and the first department head of the Computer Science Department at Ben-Gurion University, established in 2000. After just 15 years, the department has been ranked among the first 150 best departments in the world. He is the author of a book entitled Self-Stabilization published by MIT Press in 2000. His publications, more than three hundred conferences, journals and patents, include papers in JACM, SIAM journal on computing, Nature Photonics, Nature Communications, Physical Review, Journal of the Optical Society of America A, Distributed Computing, IEEE/ACM Trans. on Networking, ACM Trans. on Information and System Security, Journal of Cryptology, Journal of Computer and System Sciences, ACM Trans. on Knowledge Discovery from Data, ACM Trans.