Lecture Notes Combinatorics

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Principle of Inclusion-Exclusion

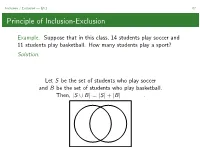

Inclusion / Exclusion — §3.1 67 Principle of Inclusion-Exclusion Example. Suppose that in this class, 14 students play soccer and 11 students play basketball. How many students play a sport? Solution. Let S be the set of students who play soccer and B be the set of students who play basketball. Then, |S ∪ B| = |S| + |B| . Inclusion / Exclusion — §3.1 68 Principle of Inclusion-Exclusion When A = A1 ∪···∪Ak ⊂U (U for universe) and the sets Ai are pairwise disjoint,wehave|A| = |A1| + ···+ |Ak |. When A = A1 ∪···∪Ak ⊂U and the Ai are not pairwise disjoint, we must apply the principle of inclusion-exclusion to determine |A|: |A1 ∪ A2| = |A1| + |A2|−|A1 ∩ A2| |A1 ∪ A2 ∪ A3| = |A1| + |A2| + |A3|−|A1 ∩ A2|−|A1 ∩ A3| −|A2 ∩ A3| + |A1 ∩ A2 ∩ A3| |A1 ∪···∪Am| = |Ai |− |Ai ∩ Aj | + Ai ∩ Aj ∩ Ak ··· ! ! ! " " " " It may be more convenient to apply inclusion/exclusion where the Ai are forbidden subsets of U,inwhichcase . Inclusion / Exclusion — §3.1 69 mmm...PIE The key to using the principle of inclusion-exclusion is determining the right choice of Ai .TheAi and their intersections should be easy to count and easy to characterize. Notation: π = p1p2 ···pn is the one-line notation for a permutation of [n] whose first element is p1,secondelementisp2,etc. Example. How many permutations p = p1p2 ···pn are there in which at least one of p1 and p2 are even? Solution. Let U be the set of n-permutations. Let A1 be the set of permutations where p1 is even. Let A2 be the set of permutations where p2 is even. -

On Fixed Points of Iterations Between the Order of Appearance and the Euler Totient Function

mathematics Article On Fixed Points of Iterations Between the Order of Appearance and the Euler Totient Function ŠtˇepánHubálovský 1,* and Eva Trojovská 2 1 Department of Applied Cybernetics, Faculty of Science, University of Hradec Králové, 50003 Hradec Králové, Czech Republic 2 Department of Mathematics, Faculty of Science, University of Hradec Králové, 50003 Hradec Králové, Czech Republic; [email protected] * Correspondence: [email protected] or [email protected]; Tel.: +420-49-333-2704 Received: 3 October 2020; Accepted: 14 October 2020; Published: 16 October 2020 Abstract: Let Fn be the nth Fibonacci number. The order of appearance z(n) of a natural number n is defined as the smallest positive integer k such that Fk ≡ 0 (mod n). In this paper, we shall find all positive solutions of the Diophantine equation z(j(n)) = n, where j is the Euler totient function. Keywords: Fibonacci numbers; order of appearance; Euler totient function; fixed points; Diophantine equations MSC: 11B39; 11DXX 1. Introduction Let (Fn)n≥0 be the sequence of Fibonacci numbers which is defined by 2nd order recurrence Fn+2 = Fn+1 + Fn, with initial conditions Fi = i, for i 2 f0, 1g. These numbers (together with the sequence of prime numbers) form a very important sequence in mathematics (mainly because its unexpectedly and often appearance in many branches of mathematics as well as in another disciplines). We refer the reader to [1–3] and their very extensive bibliography. We recall that an arithmetic function is any function f : Z>0 ! C (i.e., a complex-valued function which is defined for all positive integer). -

Ces`Aro's Integral Formula for the Bell Numbers (Corrected)

Ces`aro’s Integral Formula for the Bell Numbers (Corrected) DAVID CALLAN Department of Statistics University of Wisconsin-Madison Medical Science Center 1300 University Ave Madison, WI 53706-1532 [email protected] October 3, 2005 In 1885, Ces`aro [1] gave the remarkable formula π 2 cos θ N = ee cos(sin θ)) sin( ecos θ sin(sin θ) ) sin pθ dθ p πe Z0 where (Np)p≥1 = (1, 2, 5, 15, 52, 203,...) are the modern-day Bell numbers. This formula was reproduced verbatim in the Editorial Comment on a 1941 Monthly problem [2] (the notation Np for Bell number was still in use then). I have not seen it in recent works and, while it’s not very profound, I think it deserves to be better known. Unfortunately, it contains a typographical error: a factor of p! is omitted. The correct formula, with n in place of p and using Bn for Bell number, is π 2 n! cos θ B = ee cos(sin θ)) sin( ecos θ sin(sin θ) ) sin nθ dθ n ≥ 1. n πe Z0 eiθ The integrand is the imaginary part of ee sin nθ, and so an equivalent formula is π 2 n! eiθ B = Im ee sin nθ dθ . (1) n πe Z0 The formula (1) is quite simple to prove modulo a few standard facts about set par- n titions. Recall that the Stirling partition number k is the number of partitions of n n [n] = {1, 2,...,n} into k nonempty blocks and the Bell number Bn = k=1 k counts n k n n k k all partitions of [ ]. -

MTH 102A - Part 1 Linear Algebra 2019-20-II Semester

MTH 102A - Part 1 Linear Algebra 2019-20-II Semester Arbind Kumar Lal1 February 3, 2020 1Indian Institute of Technology Kanpur 1 2 • Matrix A = behaves like the scalar 3 when multiplied with 2 1 1 1 2 1 1 x = . That is, = 3 . 1 2 1 1 1 • Physically: The Linear function f (x) = Ax magnifies the nonzero 1 2 vector ∈ C three (3) times. 1 1 1 • Similarly, A = −1 . So, behaves by changing the −1 −1 1 direction of the vector −1 1 2 2 • Take A = . Do I have a nonzero x ∈ C which gets 1 3 magnified by A? • So I am looking for x 6= 0 and α s.t. Ax = αx. Using x 6= 0, we have Ax = αx if and only if [αI − A]x = 0 if and only if det[αI − A] = 0. √ α − 1 −2 2 • det[αI − A] = det = α − 4α + 1. So α = 2 ± 3. −1 α − 3 √ √ 1 + 3 −2 • Take α = 2 + 3. To find x, solve √ x = 0: −1 3 − 1 √ 3 − 1 using GJE, for instance. We get x = . Moreover 1 √ √ √ 1 2 3 − 1 3 + 1 √ 3 − 1 Ax = = √ = (2 + 3) . 1 3 1 2 + 3 1 n • We call λ ∈ C an eigenvalue of An×n if there exists x ∈ C , x 6= 0 s.t. Ax = λx. We call x an eigenvector of A for the eigenvalue λ. We call (λ, x) an eigenpair. • If (λ, x) is an eigenpair of A, then so is (λ, cx), for each c 6= 0, c ∈ C. -

MAT344 Lecture 6

MAT344 Lecture 6 2019/May/22 1 Announcements 2 This week This week, we are talking about 1. Recursion 2. Induction 3 Recap Last time we talked about 1. Recursion 4 Fibonacci numbers The famous Fibonacci sequence starts like this: 1; 1; 2; 3; 5; 8; 13;::: The rule defining the sequence is F1 = 1;F2 = 1, and for n ≥ 3, Fn = Fn−1 + Fn−2: This is a recursive formula. As you might expect, if certain kinds of numbers have a name, they answer many counting problems. Exercise 4.1 (Example 3.2 in [KT17]). Show that a 2 × n checkerboard can be tiled with 2 × 1 dominoes in Fn+1 many ways. Solution: Denote the number of tilings of a 2 × n rectangle by Tn. We check that T1 = 1 and T2 = 2. We want to prove that they satisfy the recurrence relation Tn = Tn−1 + Tn−2: Consider the domino occupying the rightmost spot in the top row of the tiling. It is either a vertical domino, in which case the rest of the tiling can be interpreted as a tiling of a 2 × (n − 1) rectangle, or it is a horizontal domino, in which case there must be another horizontal domino under it, and the rest of the tiling can be interpreted as a tiling of a 2 × (n − 2) rectangle. Therefore Tn = Tn−1 + Tn−2: Since the number of tilings satisfies the same recurrence relation as the Fibonacci numbers, and T1 = F2 = 1 and T2 = F3 = 2, we may conclude that Tn = Fn+1. -

Appendix a Tables of Fermat Numbers and Their Prime Factors

Appendix A Tables of Fermat Numbers and Their Prime Factors The problem of distinguishing prime numbers from composite numbers and of resolving the latter into their prime factors is known to be one of the most important and useful in arithmetic. Carl Friedrich Gauss Disquisitiones arithmeticae, Sec. 329 Fermat Numbers Fo =3, FI =5, F2 =17, F3 =257, F4 =65537, F5 =4294967297, F6 =18446744073709551617, F7 =340282366920938463463374607431768211457, Fs =115792089237316195423570985008687907853 269984665640564039457584007913129639937, Fg =134078079299425970995740249982058461274 793658205923933777235614437217640300735 469768018742981669034276900318581864860 50853753882811946569946433649006084097, FlO =179769313486231590772930519078902473361 797697894230657273430081157732675805500 963132708477322407536021120113879871393 357658789768814416622492847430639474124 377767893424865485276302219601246094119 453082952085005768838150682342462881473 913110540827237163350510684586298239947 245938479716304835356329624224137217. The only known Fermat primes are Fo, ... , F4 • 208 17 lectures on Fermat numbers Completely Factored Composite Fermat Numbers m prime factor year discoverer 5 641 1732 Euler 5 6700417 1732 Euler 6 274177 1855 Clausen 6 67280421310721* 1855 Clausen 7 59649589127497217 1970 Morrison, Brillhart 7 5704689200685129054721 1970 Morrison, Brillhart 8 1238926361552897 1980 Brent, Pollard 8 p**62 1980 Brent, Pollard 9 2424833 1903 Western 9 P49 1990 Lenstra, Lenstra, Jr., Manasse, Pollard 9 p***99 1990 Lenstra, Lenstra, Jr., Manasse, Pollard -

The Observability of the Fibonacci and the Lucas Cubes

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Elsevier - Publisher Connector Discrete Mathematics 255 (2002) 55–63 www.elsevier.com/locate/disc The observability of the Fibonacci and the Lucas cubes Ernesto DedÃo∗;1, Damiano Torri1, Norma Zagaglia Salvi1 Dipartimento di Matematica, Politecnico di Milano, Piazza Leonardo da Vinci 32, 20133 Milano, Italy Received 5 April 1999; received inrevised form 31 July 2000; accepted 8 January2001 Abstract The Fibonacci cube n is the graph whose vertices are binary strings of length n without two consecutive 1’s and two vertices are adjacent when their Hamming distance is exactly 1. If the binary strings do not contain two consecutive 1’s nora1intheÿrst and in the last position, we obtainthe Lucas cube Ln. We prove that the observability of n and Ln is n, where the observability of a graph G is the minimum number of colors to be assigned to the edges of G so that the coloring is proper and the vertices are distinguished by their color sets. c 2002 Elsevier Science B.V. All rights reserved. MSC: 05C15; 05A15 Keywords: Fibonacci cube; Fibonacci number; Lucas number; Observability 1. Introduction A Fibonacci string of order n is a binary string of length n without two con- secutive ones. Let and ÿ be binary strings; then ÿ denotes the string obtained by concatenating and ÿ. More generally, if S is a set of strings, then Sÿ = {ÿ: ∈ S}. If Cn denotes the set of the Fibonacci strings of order n, then Cn+2 =0Cn+1 +10Cn and |Cn| = Fn, where Fn is the nth Fibonacci number. -

A Logical Approach to Asymptotic Combinatorics I. First Order Properties

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Elsevier - Publisher Connector ADVANCES IN MATI-EMATlCS 65, 65-96 (1987) A Logical Approach to Asymptotic Combinatorics I. First Order Properties KEVIN J. COMPTON ’ Wesleyan University, Middletown, Connecticut 06457 INTRODUCTION We shall present a general framework for dealing with an extensive set of problems from asymptotic combinatorics; this framework provides methods for determining the probability that a large, finite structure, ran- domly chosen from a given class, will have a given property. Our main concern is the asymptotic probability: the limiting value as the size of the structure increases. For example, a common problem in elementary probability texts is to show that the asymptotic probabity that a per- mutation will have no fixed point is l/e. We shall say nothing about the closely related problem of determining rates of convergence, although the methods presented here may extend to such problems. To develop a general approach we must fix a language for specifying properties of structures. Thus, our approach is logical; logic is the branch of mathematics that deals with problems of language. In this paper we con- sider properties expressible in the language of first order logic and speak of probabilities of first order sentences rather than properties. In the sequel to this paper we consider properties expressible in the more general language of monadic second order logic. Clearly, we must restrict the classes of structures we consider in order for questions about asymptotic probabilities to be meaningful and significant. Therefore, we choose to consider only classes closed under disjoint unions and components (see Sect. -

On Hardy's Apology Numbers

ON HARDY’S APOLOGY NUMBERS HENK KOPPELAAR AND PEYMAN NASEHPOUR Abstract. Twelve well known ‘Recreational’ numbers are generalized and classified in three generalized types Hardy, Dudeney, and Wells. A novel proof method to limit the search for the numbers is exemplified for each of the types. Combinatorial operators are defined to ease programming the search. 0. Introduction “Recreational Mathematics” is a broad term that covers many different areas including games, puzzles, magic, art, and more [31]. Some may have the impres- sion that topics discussed in recreational mathematics in general and recreational number theory, in particular, are only for entertainment and may not have an ap- plication in mathematics, engineering, or science. As for the mathematics, even the simplest operation in this paper, i.e. the sum of digits function, has application outside number theory in the domain of combinatorics [13, 26, 27, 28, 34] and in a seemingly unrelated mathematical knowledge domain: topology [21, 23, 15]. Pa- pers about generalizations of the sum of digits function are discussed by Stolarsky [38]. It also is a surprise to see that another topic of this paper, i.e. Armstrong numbers, has applications in “data security” [16]. In number theory, functions are usually non-continuous. This inhibits solving equations, for instance, by application of the contraction mapping principle because the latter is normally for continuous functions. Based on this argument, questions about solving number-theoretic equations ramify to the following: (1) Are there any solutions to an equation? (2) If there are any solutions to an equation, then are finitely many solutions? (3) Can all solutions be found in theory? (4) Can one in practice compute a full list of solutions? arXiv:2008.08187v1 [math.NT] 18 Aug 2020 The main purpose of this paper is to investigate these constructive (or algorith- mic) problems by the fixed points of some special functions of the form f : N N. -

Interval Reductions and Extensions of Orders: Bijections To

Interval Reductions and Extensions of Orders Bijections to Chains in Lattices Stefan Felsner Jens Gustedt and Michel Morvan Freie Universitat Berlin Fachbereich Mathematik und Informatik Takustr Berlin Germany Email felsnerinffub erlinde Technische Universitat Berlin Fachbereich Mathematik Strae des Juni MA Berlin Germany Email gustedtmathtub erlinde LIAFA Universite Paris Denis Diderot Case place Jussieu Paris Cedex France Email morvanliafajussieufr Abstract We discuss bijections that relate families of chains in lattices asso ciated to an order P and families of interval orders dened on the ground set of P Two bijections of this typ e havebeenknown The bijection b etween maximal chains in the antichain lattice AP and the linear extensions of P A bijection between maximal chains in the lattice of maximal antichains A P and minimal interval extensions of P M We discuss two approaches to asso ciate interval orders to chains in AP This leads to new bijections generalizing Bijections and As a consequence wechar acterize the chains corresp onding to weakorder extensions and minimal weakorder extensions of P Seeking for a way of representing interval reductions of P bychains wecameup with the separation lattice S P Chains in this lattice enco de an interesting sub class of interval reductions of P Let S P b e the lattice of maximal separations M in the separation lattice Restricted to maximal separations the ab ove bijection sp ecializes to a bijection whic h nicely complementsand A bijection between maximal -

The Twelvefold Way, the Nonintersecting Circles Problem, and Partitions of Multisets

Turkish Journal of Mathematics Turk J Math (2019) 43: 765 – 782 http://journals.tubitak.gov.tr/math/ © TÜBİTAK Research Article doi:10.3906/mat-1805-72 The twelvefold way, the nonintersecting circles problem, and partitions of multisets Toufik MANSOUR1,, Madjid MIRZVAZIRI2, Daniel YAQUBI2;∗ 1Department of Mathematics, University of Haifa, Haifa, Israel 2Department of Mathematics, Ferdowsi University of Mashhad, Mashhad, Iran Received: 14.05.2018 • Accepted/Published Online: 30.01.2019 • Final Version: 27.03.2019 Abstract: Let n be a nonnegative integer and A = fa1; : : : ; akg be a multiset with k positive integers such that a1 6 ··· 6 ak . In this paper, we give a recursive formula for partitions and distinct partitions of positive integer n with respect to a multiset A. We also consider the extension of the twelvefold way. By using this notion, we solve the nonintersecting circles problem, which asks to evaluate the number of ways to draw n nonintersecting circles in the plane regardless of their sizes. The latter also enumerates the number of unlabeled rooted trees with n + 1 vertices. Key words: Multiset, partitions and distinct partitions, twelvefold way, nonintersecting circles problem, rooted trees, Wilf partitions 1. Introduction A partition of n is a sequence λ1 > λ2 > ··· > λk of positive integers such that λ1 + λ2 + ··· + λk = n (see [2]). We write λ ` n to denote that λ is a partition of n. The nonzero integers λ in λ are called parts of λ. P k j j The number of parts of λ is the length of λ, denoted by `(λ), and λ = k>1 λk is the weight of λ. -

Dyck Paths and Positroids from Unit Interval Orders (Extended Abstract)

Dyck Paths and Positroids from Unit Interval Orders (Extended Abstract) Anastasia Chavez 1 and Felix Gotti 2 ∗ 1Department of Mathematics, UC Berkeley, Berkeley CA 94720 2 Department of Mathematics, UC Berkeley, Berkeley CA 94720 . Abstract. It is well known that the number of non-isomorphic unit interval orders on [n] equals the n-th Catalan number. Using work of Skandera and Reed and work of Postnikov, we show that each unit interval order on [n] naturally induces a rank n positroid on [2n]. We call the positroids produced in this fashion unit interval positroids. We characterize the unit interval positroids by describing their associated decorated permutations, showing that each one must be a 2n-cycle encoding a Dyck path of length 2n. 1 Introduction A unit interval order is a partially ordered set that captures the order relations among a collection of unit intervals on the real line. Unit interval orders were introduced by Luce [8] to axiomatize a class of utilities in the theory of preferences in economics. Since then they have been systematically studied (see [3,4,5,6, 14] and references therein). These posets exhibit many interesting properties; for example, they can be characterized as the posets that are simultaneously (3 + 1)-free and (2 + 2)-free. Moreover, it is well 1 2n known that the number of non-isomorphic unit interval orders on [n] equals n+1 ( n ), the n-th Catalan number (see [3, Section 4] or [15, Exercise 2.180]). In [14], motivated by the desire to understand the f -vectors of various classes of posets, Skandera and Reed showed that one can canonically label the elements of a unit interval order from 1 to n so that its n × n antiadjacency matrix is totally nonnegative (i.e., has all its minors nonnegative) and its zero entries form a right-justified Young diagram located strictly above the main diagonal and anchored in the upper-right corner.