Write the Title of the Thesis Here

Recommended publications

An Interview with Frieder Nake

Arts, Editorial. An Interview with Frieder Nake. Glenn W. Smith, Space Machines Corporation, 3443 Esplanade Ave., Suite 438, New Orleans, LA 70119, USA; [email protected] Received: 24 May 2019; Accepted: 26 May 2019; Published: 31 May 2019. Abstract: In this interview, mathematician and computer art pioneer Frieder Nake addresses the emergence of the algorithm as central to our understanding of art: just as the craft of computer programming has been irreplaceable for us in appreciating the marvels of the DNA genetic code, so too has computer-generated art, with the algorithm as its operative principle, forever illuminated its practice by traditional artists. Keywords: algorithm; computer art; emergent phenomenon; Paul Klee; Frieder Nake; neural network; DNN; CNN; GAN. 1. Introduction. The idea that most art is to some extent algorithmic, whether produced by an actual computer or a human artist, may well represent the central theme of the Arts Special Issue “The Machine as Artist (for the 21st Century)” as it has evolved in the light of more than fifteen thoughtful contributions. This, in turn, brings forcibly to mind mathematician, computer scientist, and computer art pioneer Frieder Nake, one of a handful of pioneer artist-theorists who, for more than fifty years, have focused on algorithmic art per se, but who can also speak to the larger role of the algorithm within art. As a graduate student in mathematics at the University of Stuttgart in the mid-1960s (from which he graduated with a PhD in Probability Theory in 1967), Nake was a core member, along with Siemens mathematician and philosophy graduate student Georg Nees, of the informal “Stuttgart School”, whose leader was philosophy professor Max Bense, one of the first academics to apply information processing principles to aesthetics.

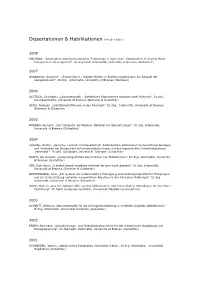

Dissertations & Habilitations (Frieder Nake)

2008
KOLYANG: "Information and Communication Technology in Cameroon: Contribution of Second Hand Computers to Development". Dr.-Ing. habil., Computer Science, University of Bremen (reviewer)

2007
GRABOWSKI, Susanne: "ZeichenRaum. Digitale Medien in Studienumgebungen am Beispiel der Computerkunst". Dr.-Ing., Computer Science, University of Bremen (supervisor)

2006
KLÜTSCH, Christoph: "Computergrafik – Ästhetische Experimente zwischen zwei Kulturen". Dr. phil., Art History, University of Bremen (supervisor & reviewer)
GENZ, Andreas: "Sichtbarkeitsfilterung in der Raumzeit". Dr.-Ing., Computer Science, University of Bremen (supervisor & reviewer)

2005
ROBBEN, Bernard: "Der Computer als Medium. Notation für Übersetzungen". Dr.-Ing., Computer Science, University of Bremen (reviewer)

2004
KRAUSE, Detlev: "Sprache, Technik und Gesellschaft. Soziologische Reflexionen zu Gestaltungszwängen und -freiheiten am Beispiel der Informationstechnologie und des maschinellen Dolmetschsystems 'Verbmobil'". Dr. phil., Sociology, Universität Tübingen (reviewer)
PLATH, Jan Konrad: "Computergestützte Konstruktion von Maßschuhen". Dr.-Ing., Computer Science, University of Bremen (reviewer)
KIM, Dae Huyn: "A sketch-based modeling interface for pen-input displays". Dr.-Ing., Computer Science, University of Bremen (supervisor & reviewer)
BREITENBORN, Jens: "Ein System zur automatischen Erzeugung untersuchungsspezifischer Hängungen und zur Unterstützung verteilten kooperativen Arbeitens in der klinischen Radiologie". Dr.-Ing., Computer Science, University of Bremen (reviewer)

MANFRED MOHR, b. 1938 in Pforzheim, Germany. Lives and works in New York since 1981

Manfred Mohr is a leader in the field of software-based art. He discovered Professor Max Bense's writing on information aesthetics in the early 1960s. These texts radically changed Mohr's artistic thinking, and within a few years his art transformed from abstract expressionism to computer-generated algorithmic geometry. Encouraged by the computer music composer Pierre Barbaud, whom he met in 1967, Mohr co-founded the "Art et Informatique" seminar at Vincennes University in Paris in 1968 and programmed his first computer drawings in 1969. His first major museum exhibition, Une esthétique programmée, took place in 1971 at the Musée d'Art Moderne de la Ville de Paris. It has since become known as the first solo show in a museum of works entirely calculated and drawn by a digital computer. During the exhibition, Mohr demonstrated in public for the first time how he drew his computer-generated imagery with a Benson flatbed plotter. Since 1973, Mohr's pieces have been based on the logical structure of cubes and hypercubes, including the lines, planes, and relationships among them. Recently the subject of a retrospective at ZKM, Karlsruhe, Mohr's work is in the collections of the Centre Pompidou, Paris; Josef Albers Museum, Bottrop; Ludwig Museum, Cologne; Victoria and Albert Museum, London; Mary and Leigh Block Museum of Art; Wilhelm-Hack-Museum, Ludwigshafen; Kunstmuseum Stuttgart; Stedelijk Museum, Amsterdam; Museum Kulturspeicher, Würzburg; Kunsthalle Bremen; Musée d'Art Moderne et Contemporain, Strasbourg; Daimler Contemporary, Berlin; Musée d'Art Contemporain, Montreal; McCrory Collection, New York; and Esther Grether Collection, Basel.

Manfred Mohr 1964-2011, Réflexions sur une esthétique programmée

bitforms gallery nyc. For Immediate Release. 529 West 20th St, New York NY 10011; Contact: Laura Blereau, [email protected]; (212) 366-6939; www.bitforms.com. Manfred Mohr: 1964-2011, Réflexions sur une esthétique programmée. Sept 9 – Oct 15, 2011, bitforms gallery nyc. Opening Reception: Friday, Sept 9, 6:00 – 8:30 PM. Gallery Hours: Tue – Sat, 11:00 AM – 6:00 PM. On May 11, 1971, the Musée d'Art Moderne de la Ville de Paris opened the influential exhibition “Computer Graphics - Une Esthétique Programmée”. A solo exhibition by Manfred Mohr, it featured the first display in a museum of works entirely calculated and drawn by a digital (rather than analog) computer. Revolutionary for their time, these drawings were more than mere curiosities: they signaled a new era of image creation, setting in motion a trajectory of modernism and information aesthetics. Using Manfred Mohr's work as a touchstone, "1964-2011, Réflexions sur une Esthétique Programmée" reveals a critical period of development in media arts. It examines shifting perspectives in art and the working methods that made the visual conversation of information aesthetics possible. The computer emerged as a tool for art because of its capacity for handling systems of high complexity, beyond our normal abilities. Discovering the theoretical writings of the German philosopher Max Bense in the early 1960s, Mohr was fascinated by the idea of a programmed aesthetic, which coincided with his strong interest in designing electronic sound devices and using scientific imagery in his paintings. Mohr studied in Germany and in Paris at the Ecole de Beaux-Arts, and in 1968 he was a founding member of the seminar 'Art et Informatique' at the University of Vincennes.

Manfred Mohr: A Formal Language. Celebrating 50 Years of Artwork and Algorithms, September 7 – November 3, 2019

Manfred Mohr: A Formal Language Celebrating 50 Years of Artwork and Algorithms September 7 – November 3, 2019 Opening Reception: Saturday, September 7, 6 – 8 PM Closing Reception: Sunday, November 3, 4:30 – 6 PM Gallery Hours: Wednesday – Saturday, 11 AM – 6 PM & Sunday 12 – 6 PM bitforms gallery is pleased to celebrate the 50th anniversary (1969 – 2019) of Manfred Mohr writing and creating artwork with algorithms. This exhibition showcases poignant moments throughout the artist’s career, presenting pre-computer artworks from the early 1960s alongside programmed film, wall reliefs, real-time screen-based works, computer-generated works on paper and canvas, and ephemeral objects. Mohr utilizes algorithms to engage rational aesthetics, inviting logic to produce visual outcomes. A Formal Language (named after his 1970 computer drawing) follows the evolution of the artist's programmed systems, including the first computer-generated drawings to the implementation of the hypercube. While Mohr’s career spans over sixty years, this exhibition distinguishes his pioneering use of algorithms decades before it became a tool of contemporary art. In the early 1960s, action painting, atonal music, and geometry were of great interest to Mohr. His early works indicate a confluence of interests in jazz and Abstract Expressionism through weighted strokes and graphic composition. Mohr was connected to instrumentation as a musician himself, playing tenor saxophone and oboe in jazz clubs across Europe. As his practice continued to progress, Max Bense’s theories about rational aesthetics greatly impacted Mohr, ushering in binary, black and white palettes of logical, geometric connections that would soon become his signature. Mohr wrote his first algorithm using the programming language Fortran IV in 1969. -

Manfred Mohr: one and zero, 16th November – 20th December 2012

Press Release Manfred Mohr one and zero 16th November – 20th December 2012 P198a, plotter drawing, 1977, 60 x 60cm Press Preview: Thursday 15 November, 5 - 6:30pm All my relations to aesthetical decisions always go back to musical thinking, either active in that I played a musical instrument or theoretical in that I see my art as visual music… I was very impressed by Anton Webern's music from the 1920s where for the first time I realized that space, the pause, became as important to the musical construct as the sound itself. So there are these two poles, one and zero. Manfred Mohr one and zero, Manfred Mohr's first solo exhibition in London, presents a concise survey of his fifty-year practice. Harnessing the automatic processes of the computer, Mohr's work brings together his deep interest in music and mathematics to create works that are rigorously minimal but with an elegant lyricism that belie their formal underpinnings. Through drawing, painting, wall- reliefs and screen-based works, the show examines the artist's practice through the prism of music and the idea that what is left out is as important as what remains. Beginning in 1969, Mohr was one of the first visual artists to explore the use of algorithms and computer programs to make independent abstract artworks. His early computer plotter drawings - when he had access to one of the earliest computer driven plotter drawing machines at the Meteorology Institute in Paris - are delicate, spare monochrome works on paper derived from algorithms devised by the artist and executed by the computer. -

Geometries of the Sublime, Museum of Contemporary Art Zagreb, 17 October–20 November 2011, Visiting Hours: 19:00–00:00

ISEA2011 UNCONTAINABLE: Geometries of the Sublime. Museum of Contemporary Art Zagreb, 17 October–20 November 2011. Visiting hours: 19:00–00:00.

Senior Curators: Lanfranco Aceti & Tihomir Milovac
Artists: Paul Brown; Charles Csuri; Manfred Mohr; Roman Verostko
Artistic Director and Conference Chair: Lanfranco Aceti
Conference and Program Director: Özden Şahin

From klangfarben by Manfred Mohr at ISEA2011 Uncontainable: Geometries of the Sublime, Museum of Contemporary Art Zagreb, 17 October – 20 November, 2011.

Muzej suvremene umjetnosti (Museum of Contemporary Art), Avenija Dubrovnik 17, p.p. 537, HR – 10010 Zagreb. T 00385 1 60 52 701, T 00385 1 60 52 798, e-mail: [email protected], www.msu.hr. No.: 08-1/8-lbb. Zagreb, 14.03.2012. Pursuant to Article 22 of the Act on the Right of Access to Information (Narodne Novine Nos. 172/03, 144/10, 37/11 and 77/11) and Article 33 of the Statute of the Museum of Contemporary Art of 30.03.2007, I hereby issue the DECISION on the appointment of an information officer at the Museum of Contemporary Art. I.

UNCONTAINABLE, LEA VOL 18 NO 5, ISSN 1071-4391, ISBN 978-1-906897-19-2, pp. 328–329.

Mathematics Is Beautiful: Symmetry in Art, the Work of Attila Kovacs

Form and Symmetry: Art and Science, Buenos Aires Congress, 2007. MATHEMATICS IS BEAUTIFUL: SYMMETRY IN ART, THE WORK OF ATTILA KOVACS. ATTILA KOVACS AND INGMAR LÄHNEMANN.

Name: Attila Kovacs, Artist (b. Budapest, Hungary, 1938). Address: Görrestraße 8, D-50674 Köln, Germany. E-mail: [email protected] Solo Exhibitions (Selection): Galerie Hansjörg Mayer Stuttgart 1969; Kölnischer Kunstverein 1973; albers–kovacs, Wallraf-Richartz-Museum Köln 1976; Kunstmuseum Hannover mit Sammlung Sprengel 1983; Wilhelm-Hack-Museum Ludwigshafen am Rhein 1987; Kassák Múzeum Budapest 1991. Group Exhibitions (Selection): operationen, Museum Fridericianum Kassel 1969; documenta 6, Kassel 1977; Deutsche Zeichnungen der Gegenwart, Museum Ludwig Köln 1982; Imaginer, construire, Musée d'art moderne de la ville de Paris 1985; Symmetry and asymmetry, National Gallery of Hungary Budapest 1989. Publications: Manifest der transmutativen Plastizität, in: Attila Kovacs – Synthetische Programme, Städtische Kunstsammlungen, Ludwigshafen 1974; Der ästhetische Raum, in: Galerie Hansjörg Mayer, Stuttgart 1969; Die visuelle Relativierbarkeit eines Kreises, in: Attila Kovacs – Synthetische Programme, Kölnischer Kunstverein 1973; Visuell, transformationell, in: documenta 6, Kassel 1977.

Name: Dr. Ingmar Lähnemann, Art Historian (b. Sarstedt, Germany, 1974). Address: Kunsthalle Bremen, Am Wall 207, D-28195 Bremen, Germany. E-mail: [email protected] Fields of interest: 20th Century Art; American Conceptual, Minimal and Installation Art; Computer Art. Publications: The Interaction of Theory and Practice: A Dialogue between Brian O'Doherty's Inside the White Cube and Patrick Ireland's Rope Drawings, in: Beyond the White Cube. A Retrospective of Brian O'Doherty/Patrick Ireland, Dublin City Gallery The Hugh Lane 2006, pp. 106–113; Manfred Mohr. broken symmetry. Winner of the d.velop digital art award [ddaa], Kunsthalle Bremen 2007.

Abstract: The talk deals with the work of Attila Kovacs, a German artist working in the field of abstract art since the 1960s.

Manfred Mohr: Artificiata - Sonata Visuelle, 13 September - 22 October 2016, Opening 10 September

Manfred Mohr, Artificiata - Sonata Visuelle. 13 September - 22 October 2016. Opening 10 September. Manfred Mohr, P2200_1577, pigmented ink on paper, 2014-2015, 80 x 80 cm. Galerie Charlot, 47 rue Charlot, 75003 Paris, France, www.galeriecharlot.com, +33 1 42 76 02 67.

"Although 'transgression' has long been the watchword of the avant-gardes, there remains at least one to which it would still be transgressive to devote oneself for anyone belonging to the artistic community: I am thinking of the kind of tête-à-tête with a logic circuit exemplified by the research of Manfred Mohr, in which the artist thinks in terms of binary coding, of 0 and 1, in an effort to transcend his own capacities." Lauren Sedofsky, Manfred Mohr catalogue, 1998

In his interview with Catherine Millet in Les Lettres françaises, Manfred Mohr, a young expressionist painter in his thirties, said that "he felt at ease in his era and was ready to welcome robots as his fellow beings", in the same way that he regarded "any information machine - electronic computer - as endowed with a genuine personality". The year is 1968, at the Galerie Daniel Templon, on the occasion of Manfred Mohr's first exhibition.

There followed in 1971 "Esthétique programmée", his exhibition at the ARC, Musée d'Art Moderne de la Ville de Paris, now recognized as the first solo exhibition of digital art in a museum. In 1973, he placed a fixed structure at the center of his philosophy and aesthetics: the cube, the primordial model of constructivist thought and creation. Around 1977, he moved on to the geometric exploration of the "hypercube": a four-dimensional cube (later of higher dimensions) which exists mathe-

Manfred Mohr: A Formal Language. Celebrating 50 Years of Artwork and Algorithms, September 7 – October 13, 2019 Opening Receptio

Manfred Mohr: A Formal Language Celebrating 50 Years of Artwork and Algorithms September 7 – October 13, 2019 Opening Reception: Saturday, September 7 , 6 – 8 PM Gallery Hours: Wednesday – Saturday, 11 AM – 6 PM & Sunday 12 – 6 PM bitforms gallery is pleased to celebrate the 50th anniversary (1969 – 2019) of Manfred Mohr writing and creating artwork with algorithms. This exhibition showcases poignant moments throughout the artist’s career, presenting pre-computer artworks from the early 1960s alongside programmed film, wall reliefs, real-time screen-based works, computer-generated works on paper and canvas, and ephemeral objects. Mohr utilizes algorithms to engage rational aesthetics, inviting logic to produce visual outcomes. A Formal Language (named after his 1970 computer drawing) follows the evolution of the artist's programmed systems, including the first computer-generated drawings to the implementation of the hypercube. While Mohr’s career spans over sixty years, this exhibition distinguishes his pioneering use of algorithms decades before it became a tool of contemporary art. In the early 1960s, action painting, atonal music, and geometry were of great interest to Mohr. His early works indicate a confluence of interests in jazz and Abstract Expressionism through weighted strokes and graphic composition. Mohr was connected to instrumentation as a musician himself, playing tenor saxophone and oboe in jazz clubs across Europe. As his practice continued to progress, Max Bense’s theories about rational aesthetics greatly impacted Mohr, ushering in binary, black and white palettes of logical, geometric connections that would soon become his signature. Mohr wrote his first algorithm using the programming language Fortran IV in 1969. -

Drawn from a Score

University of California, Irvine Claire Trevor School of the Arts 712 Arts Plaza, Irvine, CA 92697 beallcenter.uci.edu 949-824-6206 Hours: Monday – Saturday, 12-6pm Drawn from a Score Opening Reception: Saturday, October 7, 2017, 2-5pm On view through: Saturday, February 3, 2018 Holiday Closures: Nov. 10-11, 23-25; Dec. 18-Jan. 7, Jan. 15 Curated by David Familian Featuring: Alison Knowles, Casey Reas, Channa Horwitz, David Bowen, Frederick Hammersley, Frieder Nake, Guillermo Galindo, Hiroshi Kawano, Jason Salavon, Jean-Pierre Herbert, John Cage, Leon Harmon & Kenneth Knowlton, Manfred Mohr, Nathalie Miebach, Rafael Lozano-Hemmer, Sean Griffin, Shirley Shor, Sol LeWitt, and Vera Molnar Drawn from a Score features artists whose work emanates directly from a written, visual or code-based score. Ranging from event scores first developed by John Cage and Fluxus artists in the late 1950s to contemporary uses of code as a score for computational works, the exhibition includes drawings, sculptures, performances, video projections and computer-generated forms of art. The exhibition begins with John Cage’s Fontana Mix (1958). In his seminal course at the New School for Social Research, Cage taught young artists how to write event scores using chance operations, found sound, and everyday objects to produce live performances. The exhibition will include a reconstruction of Cage’s Reunion (1968) ––his chess board that triggers sound while the game is being played––underlining his idea of “indeterminacy.” John Cage coined this term during a series of lectures he gave in 1956, in which he outlined how indeterminacy played a role in performances since the era of Bach. -

Exposition Anniversaire ! Galerie Charlot Paris

Anniversary Exhibition! Galerie Charlot Paris. Opening on 3, 4 and 5 September, 2 pm to 8 pm. Special opening on Sunday 13 September, 2 pm to 7 pm. Exhibition from 3 September to 25 October 2020. Artists: Antoine Schmitt, Eric Vernhes, Anne-Sarah Le Meur, Eduardo Kac, Flavien Théry, Quayola, Manfred Mohr, Mikhail Margolis, Sabrina Ratté, Nicolas Sassoon, Dominique Pétrin, Nicolas Chasser-Skilbeck, Zaven Paré, Thomas Israel, Laurent Mignonneau & Christa Sommerer.

Galerie Charlot, based in Paris and Tel Aviv, is celebrating its tenth anniversary with an anniversary exhibition in Paris that presents the work of its represented artists and illustrates the diversity of approaches to media across several generations. The exhibited artists include Anne-Sarah Le Meur, Antoine Schmitt, Dominique Pétrin, Eduardo Kac, Eric Vernhes, Flavien Théry, Laurent Mignonneau & Christa Sommerer, Manfred Mohr, Nicolas Sassoon, Nikolas Chasser Skilbeck, Quayola, Sabrina Ratté, Thomas Israel, Zaven Paré.

Since 2010, Galerie Charlot has developed its program around the relationship between art, technology and science, exhibiting established, mid-career and emerging artists and covering a range of media from the traditional to the experimental. This 10th anniversary comes at a time when media art is receiving renewed attention, thanks to landmark exhibitions of recent years such as "Electronic Superhighway" (2016) at the Whitechapel Gallery, "Artists & Robots" at the Grand Palais (2018), "Coder le monde" at the Centre Pompidou (2018) or "Programmed: Rules, Codes and Choreographies in Art" at the Whitney Museum (2019)... While the earliest works in the exhibition date back to the early 1970s (algorithmic drawings by Manfred Mohr), works by emerging artists, including Quayola, Sabrina Ratté and Flavien Théry, attest to the engagement of a new generation of artists with contemporary technology.