Concerning the Laws of Contradiction and Excluded Middle

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Classifying Material Implications Over Minimal Logic

Classifying Material Implications over Minimal Logic Hannes Diener and Maarten McKubre-Jordens March 28, 2018 Abstract The so-called paradoxes of material implication have motivated the development of many non-classical logics over the years [2–5, 11]. In this note, we investigate some of these paradoxes and classify them, over minimal logic. We provide proofs of equivalence and semantic models separating the paradoxes where appropriate. A number of equivalent groups arise, all of which collapse with unrestricted use of double negation elimination. Interestingly, the principle ex falso quodlibet, and several weaker principles, turn out to be distinguishable, giving perhaps supporting motivation for adopting minimal logic as the ambient logic for reasoning in the possible presence of inconsistency. Keywords: reverse mathematics; minimal logic; ex falso quodlibet; implication; paraconsistent logic; Peirce’s principle. 1 Introduction The project of constructive reverse mathematics [6] has given rise to a wide literature where various theorems of mathematics and principles of logic have been classified over intuitionistic logic. What is less well-known is that the subtle difference that arises when the principle of explosion, ex falso quodlibet, is dropped from intuitionistic logic (thus giving (Johansson’s) minimal logic) enables the distinction of many more principles. The focus of the present paper is a range of principles known collectively (but not exhaustively) as the paradoxes of material implication; paradoxes because they illustrate that the usual interpretation of formal statements of the form “… → …” as informal statements of the form “if … then …” produces counter-intuitive results. Some of these principles were hinted at in [9]. Here we present a carefully worked-out chart, classifying a number of such principles over minimal logic.

Two Sources of Explosion

Two sources of explosion Eric Kao Computer Science Department Stanford University Stanford, CA 94305 United States of America Abstract. In pursuit of enhancing the deductive power of Direct Logic while avoiding explosiveness, Hewitt has proposed including the law of excluded middle and proof by self-refutation. In this paper, I show that the inclusion of either one of these inference patterns causes paraconsistent logics such as Hewitt's Direct Logic and Besnard and Hunter's quasi-classical logic to become explosive. 1 Introduction A central goal of a paraconsistent logic is to avoid explosiveness: the inference of any arbitrary sentence β from an inconsistent premise set {p, ¬p} (ex falso quodlibet). Hewitt's Direct Logic [2] and Besnard and Hunter's quasi-classical logic (QC) [1, 5, 4] both seek to preserve the deductive power of classical logic "as much as possible" while still avoiding explosiveness. Their work fits into the ongoing research program of identifying some "reasonable" and "maximal" subsets of classically valid rules and axioms that do not lead to explosiveness. To this end, it is natural to consider which classically sound deductive rules and axioms one can introduce into a paraconsistent logic without causing explosiveness. Hewitt [3] proposed including the law of excluded middle and the proof by self-refutation rule (a very special case of proof by contradiction) but did not show whether the resulting logic would be explosive. In this paper, I show that for quasi-classical logic and its variant, the addition of either the law of excluded middle or the proof by self-refutation rule in fact leads to explosiveness.

On 'The Denial of Bivalence Is Absurd'

On ‘The Denial of Bivalence is Absurd’ Francis Jeffry Pelletier (University of Alberta, Edmonton, Alberta, Canada) and Robert J. Stainton (Carleton University, Ottawa, Ontario, Canada) Abstract: Timothy Williamson, in various places, has put forward an argument that is supposed to show that denying bivalence is absurd. This paper is an examination of the logical force of this argument, which is found wanting. I. Introduction Let us begin with a word about what our topic is not. There is a familiar kind of argument for an epistemic view of vagueness in which one claims that denying bivalence introduces logical puzzles and complications that are not easily overcome. One then points out that, by ‘going epistemic’, one can preserve bivalence – and thus evade the complications. James Cargile presented an early version of this kind of argument [Cargile 1969], and Tim Williamson seemingly makes a similar point in his paper ‘Vagueness and Ignorance’ [Williamson 1992] when he says that ‘classical logic and semantics are vastly superior to…alternatives in simplicity, power, past success, and integration with theories in other domains’, and contends that this provides some grounds for not treating vagueness in this way. Obviously an argument of this kind invites a rejoinder about the puzzles and complications that the epistemic view introduces. Here are two quick examples. First, postulating, as the epistemicist does, linguistic facts no speaker of the language could possibly know, and which have no causal link to actual or possible speech behavior, is accompanied by a litany of disadvantages – as the reader can imagine.

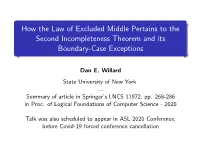

How the Law of Excluded Middle Pertains to the Second Incompleteness Theorem and Its Boundary-Case Exceptions

How the Law of Excluded Middle Pertains to the Second Incompleteness Theorem and its Boundary-Case Exceptions Dan E. Willard State University of New York .... Summary of article in Springer’s LNCS 11972, pp. 268-286 in Proc. of Logical Foundations of Computer Science - 2020 Talk was also scheduled to appear in ASL 2020 Conference, before Covid-19 forced conference cancellation S-0 : Comments about formats for these SLIDES (1) Slides 1-15 were presented at LFCS 2020 conference, meeting during January 4-7, 2020, in Deerfield Florida (under a technically different title). (2) Published as refereed 19-page paper in Springer’s LNCS 11972, pp. 268-286 in Springer’s “Proceedings of Logical Foundations of Computer Science 2020”. (3) Second presentation of this talk was intended for ASL-2020 conference, before Covid-19 cancelled conference. Willard recommends you read Item 2’s 19-page paper after skimming these slides. They nicely summarize my six JSL and APAL papers. Manuscript is also more informative than the sketch outlined here. Manuscript helps one understand all of Willard’s papers. 1. Main Question left UNANSWERED by Gödel: 2nd Incomplet. Theorem indicates strong formalisms cannot verify their own consistency. ..... But Humans Presume Their Own Consistency, at least PARTIALLY, as a prerequisite for Thinking ? A Central Question: What systems are ADEQUATELY WEAK to hold some type (?) knowledge their own consistency? ONLY PARTIAL ANSWERS to this Question are Feasible because 2nd Inc Theorem is QUITE STRONG ! Let LXM = Law of Excluded Middle. Our Theme: Different USES of LXM do change Breadth and LIMITATIONS of 2nd Inc Theorem 2a.

A New Logic for Uncertainty

A New Logic for Uncertainty LUO Maokang (Institute of Mathematics, Sichuan University, Chengdu, 610064, P.R.China) and HE Wei (Institute of Mathematics, Nanjing Normal University, Nanjing, 210046, P.R.China) arXiv:1506.03123v1 [math.LO] 9 Jun 2015 Abstract Fuzziness and randomicity widespread exist in natural science, engineering, technology and social science. The purpose of this paper is to present a new logic - uncertain propositional logic which can deal with both fuzziness by taking truth value semantics and randomicity by taking probabilistic semantics or possibility semantics. As the first step for purpose of establishing a logic system which completely reflect the uncertainty of the objective world, this logic will lead to a set of logical foundations for uncertainty theory as what classical logic done in certain or definite situations or circumstances. Keywords: Fuzziness; randomicity; UL-algebra; uncertain propositional logic. Mathematics Subject Classifications(2000): 03B60. 1 Introduction As one of the most important and one of the most widely used concepts in the whole area of modern academic or technologic research, uncertainty has been involved into study and applications more than twenty years. Now along with the quickly expanding requirements of developments of science and technology, people are having to face and deal with more and more problems tangled with uncertainty in the fields of natural science, engineering or technology or even in social science. To these uncertain problems, many traditional theories and methods based on certain conditions or certain circumstances are not so effective and powerful as them in the past. So the importance of research on uncertainty is emerging more and more obviously and imminently.

Russell's Theory of Descriptions

Russell’s theory of descriptions PHIL 83104 September 5, 2011 Contents: 1. Denoting phrases and names. 2. Russell’s theory of denoting phrases: 2.1. Propositions and propositional functions; 2.2. Indefinite descriptions; 2.3. Definite descriptions. 3. The three puzzles of ‘On denoting’: 3.1. The substitution of identicals; 3.2. The law of the excluded middle; 3.3. The problem of negative existentials. 4. Objections to Russell’s theory: 4.1. Incomplete definite descriptions; 4.2. Referential uses of definite descriptions; 4.3. Other uses of ‘the’: generics; 4.4. The contrast between descriptions and names. [The main reading I gave you was Russell’s 1919 paper, “Descriptions,” which is in some ways clearer than his classic exposition of the theory of descriptions, which was in his 1905 paper “On Denoting.” The latter is one of the optional readings on the web site, and I reference it below sometimes as well.] 1. DENOTING PHRASES AND NAMES Russell defines the class of denoting phrases as follows: “By ‘denoting phrase’ I mean a phrase such as any one of the following: a man, some man, any man, every man, all men, the present king of England, the centre of mass of the Solar System at the first instant of the twentieth century, the revolution of the earth around the sun, the revolution of the sun around the earth. Thus a phrase is denoting solely in virtue of its form.” (‘On Denoting’, 479) Russell’s aim in this article is to explain how expressions like this work: what they contribute to the meanings of sentences containing them.

Florensky and Frank

Poznańskie Studia Teologiczne Tom 22, 2008 ADAM DROZDEK Duquesne University, Pittsburgh, Pennsylvania Defying Rationality: Florensky and Frank Przezwyciężyć racjonalność: Florenski i Frank What should be the basis of knowledge in theological matters? The question has been pondered upon by theologians for centuries and not a few proposals were made. One of these was made by the Orthodox priest and scientist Pavel Florensky in The pillar and ground of the truth (1914) considered to be the most original and influential work of the Russian religious renaissance [1], marking “the beginning of a new era in Russian theology” [2] and even “one of the most significant accomplishments of ecclesiastical thinking in the twentieth century” [3]. Proposals made in a work deemed to be so important certainly arrest attention. I. THE LAW OF IDENTITY A centerpiece of rationalism, according to Florensky, is the law of identity, A = A, which is the source of powerlessness of rational reasoning. “The law A = A becomes a completely empty schema of self-affirmation” so that “I = I turns out to be ... a cry of naked egotism: ‘I!’ For where there is no difference, there can be no con- [1] R. Slesinski, Pavel Florensky: a metaphysics of love, Crestwood: St. Vladimir’s Seminary Press 1984, 22. [2] N. Zernov, The Russian religious renaissance of the twentieth century, London: Darton, Longman & Todd 1963, 101. [3] M. Silberer, Die Trinitätsidee im Werk von Pavel A. Florenskij: Versuch einer systematischen Darstellung in Begegnung mit Thomas von Aquin, Würzburg: Augustinus-Verlag 1984, 254.

Overturning the Paradigm of Identity with Gilles Deleuze's Differential

A Thesis entitled Difference Over Identity: Overturning the Paradigm of Identity With Gilles Deleuze’s Differential Ontology by Matthew G. Eckel Submitted to the Graduate Faculty as partial fulfillment of the requirements for the Master of Arts Degree in Philosophy Dr. Ammon Allred, Committee Chair Dr. Benjamin Grazzini, Committee Member Dr. Benjamin Pryor, Committee Member Dr. Patricia R. Komuniecki, Dean College of Graduate Studies The University of Toledo May 2014 Abstract Taking Gilles Deleuze to be a philosopher who is most concerned with articulating a ‘philosophy of difference’, Deleuze’s thought represents a fundamental shift in the history of philosophy, a shift which asserts ontological difference as independent of any prior ontological identity, even going as far as suggesting that identity is only possible when grounded by difference. Deleuze reconstructs a ‘minor’ history of philosophy, mobilizing thinkers from Spinoza and Nietzsche to Duns Scotus and Bergson, in his attempt to assert that philosophy has always been, underneath its canonical manifestations, a project concerned with ontology, and that ontological difference deserves the kind of philosophical attention, and privilege, which ontological identity has been given since Aristotle.

The Development of Laws of Formal Logic of Aristotle

This paper is dedicated to my unforgettable friend Boris Isaevich Lamdon. The Development of Laws of Formal Logic of Aristotle The essence of formal logic The aim of every science is to discover the laws that could explain one or another phenomenon. Once these laws are discovered, science proceeds to study other phenomena, of which nature holds an infinite set. It is interesting to note that in the process of discovering a law, for example in physics, people make thousands of experiments and build proofs; some of these experiments or pieces of evidence are useful for understanding a certain phenomenon, while others prove fruitless. But this is found only with hindsight, when the law is already discovered. Therefore, once the law is discovered, it is enough to show 2-3 experiments or proofs to verify its correctness. All other experiments were ways of study, and there is no need to repeat them to understand how the law works. In the exact sciences this is understood, and therefore students study only the information that is necessary to understand specific phenomena. This is by no means the case with the study of formal logic. Formal logic, as opposed to other sciences (physics, chemistry, mathematics, biology and so on), studies not an infinite number of phenomena in nature but only one: how a man thinks, how he learns about the world surrounding us, and how people understand each other. In other words, it studies what laws govern the logic of our thinking, i.e., our reasoning and judgments in any science or in everyday life.

The Physics and Metaphysics of Identity and Individuality Steven French and Décio Krause: Identity in Physics: a Historical, Philosophical, and Formal Analysis

Metascience (2011) 20:225–251 DOI 10.1007/s11016-010-9463-7 BOOK SYMPOSIUM The physics and metaphysics of identity and individuality Steven French and Décio Krause: Identity in physics: A historical, philosophical, and formal analysis. Oxford: Clarendon Press, 2006, 440 pp, £68.00 HB Don Howard • Bas C. van Fraassen • Otávio Bueno • Elena Castellani • Laura Crosilla • Steven French • Décio Krause Published online: 3 November 2010 © Springer Science+Business Media B.V. 2010 Don Howard Steven French and Décio Krause have written what bids fair to be, for years to come, the definitive philosophical treatment of the problem of the individuality of elementary particles in quantum mechanics (QM) and quantum-field theory (QFT). The book begins with a long and dense argument for the view that elementary particles are most helpfully regarded as non-individuals, and it concludes with an earnest attempt to develop a formal apparatus for describing such non-individual entities better suited to the task than our customary set theory. D. Howard, Department of Philosophy and Graduate Program in History and Philosophy of Science, University of Notre Dame, Notre Dame, IN 46556, USA. B. C. van Fraassen, Philosophy Department, San Francisco State University, 1600 Holloway Avenue, San Francisco, CA 94132, USA. O. Bueno, Department of Philosophy, University of Miami, Coral Gables, FL 33124, USA. E. Castellani, Department of Philosophy, University of Florence, Via Bolognese 52, 50139 Florence, Italy, e-mail: elena.castellani@unifi.it. L. Crosilla, Department of Pure Mathematics, School of Mathematics, University of Leeds, Leeds LS2 9JT, UK. Are elementary particles individuals? I do not know.

Logic, Ontological Neutrality, and the Law of Non-Contradiction

Logic, Ontological Neutrality, and the Law of Non-Contradiction Achille C. Varzi Department of Philosophy, Columbia University, New York [Final version published in Elena Ficara (ed.), Contradictions. Logic, History, Actuality, Berlin: De Gruyter, 2014, pp. 53–80] Abstract. As a general theory of reasoning, and as a theory of what holds true under every possible circumstance, logic is supposed to be ontologically neutral. It ought to have nothing to do with questions concerning what there is, or whether there is anything at all. It is for this reason that traditional Aristotelian logic, with its tacit existential presuppositions, was eventually deemed inadequate as a canon of pure logic. And it is for this reason that modern quantification theory, too, with its residue of existentially loaded theorems and inferential patterns, has been claimed to suffer from a defect of logical purity. The law of non-contradiction rules out certain circumstances as impossible: circumstances in which a statement is both true and false, or perhaps circumstances where something both is and is not the case. Is this to be regarded as a further ontological bias? If so, what does it mean to forego such a bias in the interest of greater neutrality, and ought we to do so? Logic in the Locked Room As a general theory of reasoning, and especially as a theory of what is true no matter what is the case (or in every “possible world”), logic is supposed to be ontologically neutral. It should be free from any metaphysical presuppositions. It ought to have nothing substantive to say concerning what there is, or whether there is anything at all.

The Logical Analysis of Key Arguments in Leibniz and Kant

University of Mississippi eGrove Electronic Theses and Dissertations Graduate School 2016 The Logical Analysis Of Key Arguments In Leibniz And Kant Richard Simpson Martin University of Mississippi Recommended Citation Martin, Richard Simpson, "The Logical Analysis Of Key Arguments In Leibniz And Kant" (2016). Electronic Theses and Dissertations. 755. https://egrove.olemiss.edu/etd/755 THE LOGICAL ANALYSIS OF KEY ARGUMENTS IN LEIBNIZ AND KANT A Thesis presented in partial fulfillment of requirements for the degree of Master of Arts in the Department of Philosophy and Religion The University of Mississippi By RICHARD S. MARTIN August 2016 Copyright Richard S. Martin 2016 ALL RIGHTS RESERVED ABSTRACT This paper addresses two related issues of logic in the philosophy of Gottfried Leibniz. The first problem revolves around Leibniz’s struggle, throughout the period of his mature philosophy, to reconcile his metaphysics and epistemology with his antecedent theological commitments. Leibniz believes that for everything that happens there is a reason, and that the reason God does things is because they are the best that can be done. But if God must, by nature, do what is best, and if what is best is predetermined, then it seems that there may be no room for divine freedom, much less the human freedom Leibniz wished to prove.