Natural Language Generation for Effective Knowledge Distillation

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Backpropagation and Deep Learning in the Brain

Backpropagation and Deep Learning in the Brain. Simons Institute -- Computational Theories of the Brain 2018. Timothy Lillicrap, DeepMind, UCL. With: Sergey Bartunov, Adam Santoro, Jordan Guerguiev, Blake Richards, Luke Marris, Daniel Cownden, Colin Akerman, Douglas Tweed, Geoffrey Hinton. The “credit assignment” problem. The solution in artificial networks: backprop. Credit assignment by backprop works well in practice and shows up in virtually all of the state-of-the-art supervised, unsupervised, and reinforcement learning algorithms. Why Isn’t Backprop “Biologically Plausible”? Neuroscience Evidence for Backprop in the Brain? A spectrum of credit assignment algorithms. How to convince a neuroscientist that the cortex is learning via [something like] backprop: - To convince a machine learning researcher, an appeal to variance in gradient estimates might be enough. - But this is rarely enough to convince a neuroscientist. - So what lines of argument help? - What do I mean by “something like backprop”? That learning is achieved across multiple layers by sending information from neurons closer to the output back to “earlier” layers to help compute their synaptic updates. 1. Feedback connections in cortex are ubiquitous and modify the -

What is Artificial Intelligence?

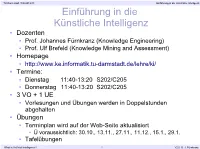

TU Darmstadt, WS 2012/13. Introduction to Artificial Intelligence (Einführung in die Künstliche Intelligenz). Lecturers: Prof. Johannes Fürnkranz (Knowledge Engineering), Prof. Ulf Brefeld (Knowledge Mining and Assessment). Homepage: http://www.ke.informatik.tu-darmstadt.de/lehre/ki/ Schedule: Tuesday 11:40-13:20, S202/C205; Thursday 11:40-13:20, S202/C205. 3 VO + 1 UE (lecture and exercise units); lectures and exercises are held in double periods. The exercise schedule will be updated on the web page; exercises are expected on 30.10., 13.11., 27.11., 11.12., 15.1., and 29.1. (blackboard exercises). What is Artificial Intelligence? 1 V2.0 © J. Fürnkranz. Text Book: The course will mostly follow Stuart Russell and Peter Norvig: Artificial Intelligence: A Modern Approach. Prentice Hall, 2nd edition, 2003. German edition: Stuart Russell und Peter Norvig: Künstliche Intelligenz: Ein Moderner Ansatz. Pearson-Studium, 2004. ISBN: 978-3-8273-7089-1. 3rd edition 2012. Home page for the book: http://aima.cs.berkeley.edu/ Course slides in English (lecture is in German) will be available from the home page. What is Artificial Intelligence: Different definitions due to different criteria. Two dimensions: thought processes/reasoning vs. behavior/action; success according to human standards vs. success according to an ideal concept of intelligence: rationality. Systems that think like humans; systems that think rationally; systems that act like humans; systems that act rationally. Definitions of Artificial Intelligence -

Layer-Level Knowledge Distillation for Deep Neural Network Learning

applied sciences Article Layer-Level Knowledge Distillation for Deep Neural Network Learning Hao-Ting Li, Shih-Chieh Lin, Cheng-Yeh Chen and Chen-Kuo Chiang * Advanced Institute of Manufacturing with High-tech Innovations, Center for Innovative Research on Aging Society (CIRAS) and Department of Computer Science and Information Engineering, National Chung Cheng University, Chiayi 62102, Taiwan; [email protected] (H.-T.L.); [email protected] (S.-C.L.); [email protected] (C.-Y.C.) * Correspondence: [email protected] Received: 1 April 2019; Accepted: 9 May 2019; Published: 14 May 2019 Abstract: Motivated by the recently developed distillation approaches that aim to obtain small and fast-to-execute models, in this paper a novel Layer Selectivity Learning (LSL) framework is proposed for learning deep models. We firstly use an asymmetric dual-model learning framework, called Auxiliary Structure Learning (ASL), to train a small model with the help of a larger and well-trained model. Then, the intermediate layer selection scheme, called the Layer Selectivity Procedure (LSP), is exploited to determine the corresponding intermediate layers of source and target models. The LSP is achieved by two novel matrices, the layered inter-class Gram matrix and the inter-layered Gram matrix, to evaluate the diversity and discrimination of feature maps. The experimental results, demonstrated using three publicly available datasets, present the superior performance of model training using the LSL deep model learning framework. Keywords: deep learning; knowledge distillation 1. Introduction Convolutional neural networks (CNN) of deep learning have proved to be very successful in many applications in the field of computer vision, such as image classification [1], object detection [2–4], semantic segmentation [5], and others. -

The Machine That Builds Itself: How the Strengths of Lisp Family

Khomtchouk et al. OPINION NOTE. The Machine that Builds Itself: How the Strengths of Lisp Family Languages Facilitate Building Complex and Flexible Bioinformatic Models. Bohdan B. Khomtchouk1*, Edmund Weitz2 and Claes Wahlestedt1. *Correspondence: [email protected] 1Center for Therapeutic Innovation and Department of Psychiatry and Behavioral Sciences, University of Miami Miller School of Medicine, 1120 NW 14th ST, Miami, FL, USA 33136. Full list of author information is available at the end of the article. Abstract: We address the need for expanding the presence of the Lisp family of programming languages in bioinformatics and computational biology research. Languages of this family, like Common Lisp, Scheme, or Clojure, facilitate the creation of powerful and flexible software models that are required for complex and rapidly evolving domains like biology. We will point out several important key features that distinguish languages of the Lisp family from other programming languages and we will explain how these features can aid researchers in becoming more productive and creating better code. We will also show how these features make these languages ideal tools for artificial intelligence and machine learning applications. We will specifically stress the advantages of domain-specific languages (DSL): languages which are specialized to a particular area and thus not only facilitate easier research problem formulation, but also aid in the establishment of standards and best programming practices as applied to the specific research field at hand. DSLs are particularly easy to build in Common Lisp, the most comprehensive Lisp dialect, which is commonly referred to as the “programmable programming language.” We are convinced that Lisp grants programmers unprecedented power to build increasingly sophisticated artificial intelligence systems that may ultimately transform machine learning and AI research in bioinformatics and computational biology. -

The Deep Learning Revolution and Its Implications for Computer Architecture and Chip Design

The Deep Learning Revolution and Its Implications for Computer Architecture and Chip Design Jeffrey Dean Google Research [email protected] Abstract The past decade has seen a remarkable series of advances in machine learning, and in particular deep learning approaches based on artificial neural networks, to improve our abilities to build more accurate systems across a broad range of areas, including computer vision, speech recognition, language translation, and natural language understanding tasks. This paper is a companion paper to a keynote talk at the 2020 International Solid-State Circuits Conference (ISSCC) discussing some of the advances in machine learning, and their implications on the kinds of computational devices we need to build, especially in the post-Moore’s Law-era. It also discusses some of the ways that machine learning may also be able to help with some aspects of the circuit design process. Finally, it provides a sketch of at least one interesting direction towards much larger-scale multi-task models that are sparsely activated and employ much more dynamic, example- and task-based routing than the machine learning models of today. Introduction The past decade has seen a remarkable series of advances in machine learning (ML), and in particular deep learning approaches based on artificial neural networks, to improve our abilities to build more accurate systems across a broad range of areas [LeCun et al. 2015]. Major areas of significant advances include computer vision [Krizhevsky et al. 2012, Szegedy et al. 2015, He et al. 2016, Real et al. 2017, Tan and Le 2019], speech recognition [Hinton et al. -

Backpropagation with Callbacks

Backpropagation with Continuation Callbacks: Foundations for Efficient and Expressive Differentiable Programming. Fei Wang ([email protected]), James Decker ([email protected]), Xilun Wu ([email protected]), Grégory Essertel ([email protected]), Tiark Rompf ([email protected]). Purdue University, West Lafayette, IN 47906. Abstract: Training of deep learning models depends on gradient descent and end-to-end differentiation. Under the slogan of differentiable programming, there is an increasing demand for efficient automatic gradient computation for emerging network architectures that incorporate dynamic control flow, especially in NLP. In this paper we propose an implementation of backpropagation using functions with callbacks, where the forward pass is executed as a sequence of function calls, and the backward pass as a corresponding sequence of function returns. A key realization is that this technique of chaining callbacks is well known in the programming languages community as continuation-passing style (CPS). Any program can be converted to this form using standard techniques, and hence, any program can be mechanically converted to compute gradients. Our approach achieves the same flexibility as other reverse-mode automatic differentiation (AD) techniques, but it can be implemented without any auxiliary data structures besides the function call stack, and it can easily be combined with graph construction and native code generation techniques through forms of multi-stage programming, leading to a highly efficient implementation that combines the performance benefits of define-then-run software frameworks such as TensorFlow with the expressiveness of define-by-run frameworks such as PyTorch. -

Architects of Intelligence: The Truth About AI from the People Building It

MARTIN FORD. ARCHITECTS OF INTELLIGENCE: THE TRUTH ABOUT AI FROM THE PEOPLE BUILDING IT. For Xiaoxiao, Elaine, Colin, and Tristan. Copyright © 2018 Packt Publishing. All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing or its dealers and distributors, will be held liable for any damages caused or alleged to have been caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. Acquisition Editors: Ben Renow-Clarke. Project Editor: Radhika Atitkar. Content Development Editor: Alex Sorrentino. Proofreader: Safis Editing. Presentation Designer: Sandip Tadge. Cover Designer: Clare Bowyer. Production Editor: Amit Ramadas. Marketing Manager: Rajveer Samra. Editorial Director: Dominic Shakeshaft. First published: November 2018. Production reference: 2201118. Published by Packt Publishing Ltd., Livery Place, 35 Livery Street, Birmingham B3 2PB, UK. ISBN 978-1-78913-151-2. www.packt.com Contents: Introduction; A Brief Introduction to the Vocabulary of Artificial Intelligence; How AI Systems Learn; Yoshua Bengio; Stuart J. -

Similarity Transfer for Knowledge Distillation Haoran Zhao, Kun Gong, Xin Sun, Member, IEEE, Junyu Dong, Member, IEEE and Hui Yu, Senior Member, IEEE

JOURNAL OF LATEX CLASS FILES, VOL. 14, NO. 8, AUGUST 2015. Similarity Transfer for Knowledge Distillation. Haoran Zhao, Kun Gong, Xin Sun, Member, IEEE, Junyu Dong, Member, IEEE and Hui Yu, Senior Member, IEEE. Abstract: Knowledge distillation is a popular paradigm for learning portable neural networks by transferring the knowledge from a large model into a smaller one. Most existing approaches enhance the student model by utilizing the similarity information between the categories of instance level provided by the teacher model. However, these works ignore the similarity correlation between different instances that plays an important role in confidence prediction. To tackle this issue, we propose a novel method in this paper, called similarity transfer for knowledge distillation (STKD), which aims to fully utilize the similarities between categories of multiple samples. Furthermore, we propose to better capture the similarity correlation between different instances by the mixup technique, which creates virtual samples by a weighted linear interpolation. Note that, our distillation loss can ... Introduction: ... following perspectives: network pruning [6][7][8], network decomposition [9][10][11], network quantization and knowledge distillation [12][13]. Among these methods, the seminal work of knowledge distillation has attracted a lot of attention due to its ability of exploiting dark knowledge from the pre-trained large network. Knowledge distillation (KD) is proposed by Hinton et al. [12] for supervising the training of a compact yet efficient student model by capturing and transferring the knowledge of a large teacher model to a compact one. Its success is attributed to the knowledge contained in class distributions provided by the teacher via soften softmax. -

Stuart Russell and Peter Norvig, Artificial Intelligence: A Modern Approach

Artificial Intelligence 82 (1996) 369-380, Elsevier. Book Review. Stuart Russell and Peter Norvig, Artificial Intelligence: A Modern Approach. Nils J. Nilsson, Robotics Laboratory, Department of Computer Science, Stanford University, Stanford, CA 94305, USA. 1. Introductory remarks. I am obliged to begin this review by confessing a conflict of interest: I am a founding director and a stockholder of a publishing company that competes with the publisher of this book, and I am in the process of writing another textbook on AI. What if Russell and Norvig’s book turns out to be outstanding? Well, it did! Its descriptions are extremely clear and readable; its organization is excellent; its examples are motivating; and its coverage is scholarly and thorough! End of review? No; we will go on for some pages, although not for as many as did Russell and Norvig. In their Preface (p. vii), the authors mention five distinguishing features of their book: Unified presentation of the field, Intelligent agent design, Comprehensive and up-to-date coverage, Equal emphasis on theory and practice, and Understanding through implementation. These features do indeed distinguish the book. I begin by making a few brief, summary comments using the authors’ own criteria as a guide. - Unified presentation of the field and Intelligent agent design. I have previously observed that just as Los Angeles has been called “twelve suburbs in search of a city”, AI might be called “twelve topics in search of a subject”. -

Understanding and Improving Knowledge Distillation

Understanding and Improving Knowledge Distillation. Jiaxi Tang,∗ Rakesh Shivanna, Zhe Zhao, Dong Lin, Anima Singh, Ed H. Chi, Sagar Jain. Google, Inc. {jiaxit,rakeshshivanna,zhezhao,dongl,animasingh,edchi,sagarj}@google.com Abstract: Knowledge Distillation (KD) is a model-agnostic technique to improve model quality while having a fixed capacity budget. It is a commonly used technique for model compression, where a larger capacity teacher model with better quality is used to train a more compact student model with better inference efficiency. Through distillation, one hopes to benefit from student’s compactness, without sacrificing too much on model quality. Despite the large success of knowledge distillation, better understanding of how it benefits student model’s training dynamics remains under-explored. In this paper, we categorize teacher’s knowledge into three hierarchical levels and study its effects on knowledge distillation: (1) knowledge of the ‘universe’, where KD brings a regularization effect through label smoothing; (2) domain knowledge, where teacher injects class relationships prior to student’s logit layer geometry; and (3) instance specific knowledge, where teacher rescales student model’s per-instance gradients based on its measurement on the event difficulty. Using systematic analyses and extensive empirical studies on both synthetic and real-world datasets, we confirm that the aforementioned three factors play a major role in knowledge distillation. Furthermore, based on our findings, we diagnose some of the failure cases of applying KD from recent studies. 1 Introduction. Recent advances in artificial intelligence have largely been driven by learning deep neural networks, and thus, current state-of-the-art models typically require a high inference cost in computation and memory. -

Fast Neural Network Emulation of Dynamical Systems for Computer Animation

Fast Neural Network Emulation of Dynamical Systems for Computer Animation. Radek Grzeszczuk 1, Demetri Terzopoulos 2, Geoffrey Hinton 2. 1 Intel Corporation, Microcomputer Research Lab, 2200 Mission College Blvd., Santa Clara, CA 95052, USA. 2 University of Toronto, Department of Computer Science, 10 King's College Road, Toronto, ON M5S 3H5, Canada. Abstract: Computer animation through the numerical simulation of physics-based graphics models offers unsurpassed realism, but it can be computationally demanding. This paper demonstrates the possibility of replacing the numerical simulation of nontrivial dynamic models with a dramatically more efficient "NeuroAnimator" that exploits neural networks. NeuroAnimators are automatically trained off-line to emulate physical dynamics through the observation of physics-based models in action. Depending on the model, its neural network emulator can yield physically realistic animation one or two orders of magnitude faster than conventional numerical simulation. We demonstrate NeuroAnimators for a variety of physics-based models. 1 Introduction. Animation based on physical principles has been an influential trend in computer graphics for over a decade (see, e.g., [1, 2, 3]). This is not only due to the unsurpassed realism that physics-based techniques offer. In conjunction with suitable control and constraint mechanisms, physical models also facilitate the production of copious quantities of realistic animation in a highly automated fashion. Physics-based animation techniques are beginning to find their way into high-end commercial systems. However, a well-known drawback has retarded their broader penetration: compared to geometric models, physical models typically entail formidable numerical simulation costs.
This paper proposes a new approach to creating physically realistic animation that differs radically from the conventional approach of numerically simulating the equations of motion of physics-based models. -

Optimising Hardware Accelerated Neural Networks with Quantisation and a Knowledge Distillation Evolutionary Algorithm

Electronics, Article. Optimising Hardware Accelerated Neural Networks with Quantisation and a Knowledge Distillation Evolutionary Algorithm. Robert Stewart 1,*, Andrew Nowlan 1, Pascal Bacchus 2, Quentin Ducasse 3 and Ekaterina Komendantskaya 1. 1 Mathematical and Computer Sciences, Heriot-Watt University, Edinburgh, EH14 4AS, UK; [email protected] (A.N.); [email protected] (E.K.) 2 Inria Rennes-Bretagne Atlantique Research Centre, 35042 Rennes, France; [email protected] 3 Lab-STICC, École Nationale Supérieure de Techniques Avancées, 29200 Brest, France; [email protected] * Correspondence: [email protected] Abstract: This paper compares the latency, accuracy, training time and hardware costs of neural networks compressed with our new multi-objective evolutionary algorithm called NEMOKD, and with quantisation. We evaluate NEMOKD on Intel’s Movidius Myriad X VPU processor, and quantisation on Xilinx’s programmable Z7020 FPGA hardware. Evolving models with NEMOKD increases inference accuracy by up to 82% at the cost of 38% increased latency, with throughput performance of 100–590 image frames-per-second (FPS). Quantisation identifies a sweet spot of 3 bit precision in the trade-off between latency, hardware requirements, training time and accuracy. Parallelising FPGA implementations of 2 and 3 bit quantised neural networks increases throughput from 6 k FPS to 373 k FPS, a 62× speedup. Keywords: quantisation; evolutionary algorithm; neural network; FPGA; Movidius VPU. Citation: Stewart, R.; Nowlan, A.; Bacchus, P.; Ducasse, Q.; Komendantskaya, E. Optimising Hardware Accelerated Neural Networks with Quantization and a Knowledge Distillation Evolutionary Algorithm. Electronics 2021, 10, 396. https://doi.org/10.3390/ 1. Introduction. Neural networks have proved successful for many domains including image recognition, autonomous systems and language processing.