A Study of Rootkit Stealth Techniques and Associated Detection Methods

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Trojan Vs Rat Vs Rootkit Mayuri More1, Rajeshwari Gundla2, Siddharth Nanda3 1U.G

IJRECE VOL. 7 ISSUE 2 (APRIL- JUNE 2019) ISSN: 2393-9028 (PRINT) | ISSN: 2348-2281 (ONLINE) Trojan Vs Rat Vs Rootkit Mayuri More1, Rajeshwari Gundla2, Siddharth Nanda3 1U.G. Student, 2 Senior Faculty, 3Senior Faculty SOE, ADYPU, Lohegaon, Pune, Maharashtra, India1 IT, iNurture, Bengaluru, India2,3 Abstract - Malicious Software is Malware is a dangerous of RATs completely and prevent confidential data being software which harms computer systems. With the increase leaked. So Dan Jiang and Kazumasa Omote researchers in technology in today’s days, malwares are also increasing. have proposed an approach to detect RAT in the early stage This paper is based on Malware. We have discussed [10]. TROJAN, RAT, ROOTKIT in detail. Further, we have discussed the adverse effects of malware on the system as III. CLASSIFICATION well as society. Then we have listed some trusted tools to Rootkit vs Trojan vs Rat detect and remove malware. Rootkit - A rootkit is a malicious software that permits a legitimate user to have confidential access to a system and Keywords - Malware, Trojan, RAT, Rootkit, System, privileged areas of its software. A rootkit possibly contains Computer, Anti-malware a large number of malicious means for example banking credential stealers, keyloggers, antivirus disablers, password I. INTRODUCTION stealers and bots for DDoS attacks. This software stays Nowadays, this world is full of technology, but with the hidden in the computer and allocates the remote access of advantages of technology comes its disadvantages like the computer to the attacker[2]. hacking, corrupting the systems, stealing of data etc. These Types of Rootkit: malpractices are possible because of malware and viruses 1. -

Cross-Platform Analysis of Indirect File Leaks in Android and Ios Applications

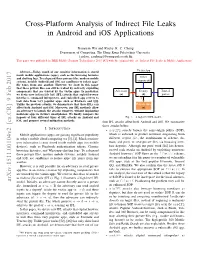

Cross-Platform Analysis of Indirect File Leaks in Android and iOS Applications Daoyuan Wu and Rocky K. C. Chang Department of Computing, The Hong Kong Polytechnic University fcsdwu, [email protected] This paper was published in IEEE Mobile Security Technologies 2015 [47] with the original title of “Indirect File Leaks in Mobile Applications”. Victim App Abstract—Today, much of our sensitive information is stored inside mobile applications (apps), such as the browsing histories and chatting logs. To safeguard these privacy files, modern mobile Other systems, notably Android and iOS, use sandboxes to isolate apps’ components file zones from one another. However, we show in this paper that these private files can still be leaked by indirectly exploiting components that are trusted by the victim apps. In particular, Adversary Deputy Trusted we devise new indirect file leak (IFL) attacks that exploit browser (a) (d) parties interfaces, command interpreters, and embedded app servers to leak data from very popular apps, such as Evernote and QQ. Unlike the previous attacks, we demonstrate that these IFLs can Private files affect both Android and iOS. Moreover, our IFL methods allow (s) an adversary to launch the attacks remotely, without implanting malicious apps in victim’s smartphones. We finally compare the impacts of four different types of IFL attacks on Android and Fig. 1. A high-level IFL model. iOS, and propose several mitigation methods. four IFL attacks affect both Android and iOS. We summarize these attacks below. I. INTRODUCTION • sopIFL attacks bypass the same-origin policy (SOP), Mobile applications (apps) are gaining significant popularity which is enforced to protect resources originating from in today’s mobile cloud computing era [3], [4]. -

ENDPOINT MANAGEMENT 1 Information Systems Managers

STAFF SYMPOSIUM SERIES INFORMATION TECHNOLOGY TRACK FACILITATORS Carl Brooks System Manager ‐ Detroit, MI Chapter 13 Standing Trustee – Tammy L. Terry Debbie Smith System Manager – Robinsonville, NJ Chapter 13 Standing Trustee – Al Russo Scot Turner System Manager –Las Vegas, NV Chapter 13 Standing Trustee – Rick Yarnall Tom O’Hern Program Manager, ICF International, Baltimore, MD STACS ‐ Standing Trustee Alliance for Computer Security STAFF SYMPOSIUM ‐ IT TRACK 5/11/2017 SESSION 4 ‐ ENDPOINT MANAGEMENT 1 Information Systems Managers Windows 10 Debbie Smith System Manager Regional Staff Symposium ‐ IT Track May 11 and 12, 2017 Las Vegas, NV STAFF SYMPOSIUM ‐ IT TRACK 5/11/2017 SESSION 4 ‐ ENDPOINT MANAGEMENT 2 Windows lifecycle fact sheet End of support refers to the date when Microsoft no longer provides automatic fixes, updates, or online technical assistance. This is the time to make sure you have the latest available update or service pack installed. Without Microsoft support, you will no longer receive security updates that can help protect your PC from harmful viruses, spyware, and other malicious software that can steal your personal information. Client operating systems Latest update End of mainstream End of extended or service pack support support Windows XP Service Pack 3April 14, 2009 April 8, 2014 Windows Vista Service Pack 2April 10, 2012 April 11, 2017 Windows 7* Service Pack 1 January 13, 2015 January 14, 2020 Windows 8 Windows 8.1 January 9, 2018 January 10, 2023 Windows 10, July 2015 N/A October 13, 2020 October 14, 2025 * Support for Windows 7 RTM without service packs ended on April 9, 2013. -

Adaptive Android Kernel Live Patching

Adaptive Android Kernel Live Patching Yue Chen Yulong Zhang Zhi Wang Liangzhao Xia Florida State University Baidu X-Lab Florida State University Baidu X-Lab Chenfu Bao Tao Wei Baidu X-Lab Baidu X-Lab Abstract apps contain sensitive personal data, such as bank ac- counts, mobile payments, private messages, and social Android kernel vulnerabilities pose a serious threat to network data. Even TrustZone, widely used as the se- user security and privacy. They allow attackers to take cure keystore and digital rights management in Android, full control over victim devices, install malicious and un- is under serious threat since the compromised kernel en- wanted apps, and maintain persistent control. Unfortu- ables the attacker to inject malicious payloads into Trust- nately, most Android devices are never timely updated Zone [42, 43]. Therefore, Android kernel vulnerabilities to protect their users from kernel exploits. Recent An- pose a serious threat to user privacy and security. droid malware even has built-in kernel exploits to take Tremendous efforts have been put into finding (and ex- advantage of this large window of vulnerability. An ef- ploiting) Android kernel vulnerabilities by both white- fective solution to this problem must be adaptable to lots hat and black-hat researchers, as evidenced by the sig- of (out-of-date) devices, quickly deployable, and secure nificant increase of kernel vulnerabilities disclosed in from misuse. However, the fragmented Android ecosys- Android Security Bulletin [3] in recent years. In ad- tem makes this a complex and challenging task. dition, many kernel vulnerabilities/exploits are publicly To address that, we systematically studied 1;139 An- available but never reported to Google or the vendors, droid kernels and all the recent critical Android ker- let alone patched (e.g., exploits in Android rooting nel vulnerabilities. -

Adware-Searchsuite

McAfee Labs Threat Advisory Adware-SearchSuite June 22, 2018 McAfee Labs periodically publishes Threat Advisories to provide customers with a detailed analysis of prevalent malware. This Threat Advisory contains behavioral information, characteristics and symptoms that may be used to mitigate or discover this threat, and suggestions for mitigation in addition to the coverage provided by the DATs. To receive a notification when a Threat Advisory is published by McAfee Labs, select to receive “Malware and Threat Reports” at the following URL: https://www.mcafee.com/enterprise/en-us/sns/preferences/sns-form.html Summary Detailed information about the threat, its propagation, characteristics and mitigation are in the following sections: Infection and Propagation Vectors Mitigation Characteristics and Symptoms Restart Mechanism McAfee Foundstone Services The Threat Intelligence Library contains the date that the above signatures were most recently updated. Please review the above mentioned Threat Library for the most up to date coverage information. Infection and Propagation Vectors Adware-SearchSuite is a "potentially unwanted program" (PUP). PUPs are any piece of software that a reasonably security- or privacy-minded computer user may want to be informed of and, in some cases, remove. PUPs are often made by a legitimate corporate entity for some beneficial purpose, but they alter the security state of the computer on which they are installed, or the privacy posture of the user of the system, such that most users will want to be aware of them. Mitigation Mitigating the threat at multiple levels like file, registry and URL could be achieved at various layers of McAfee products. Browse the product guidelines available here (click Knowledge Center, and select Product Documentation from the Support Content list) to mitigate the threats based on the behavior described in the Characteristics and symptoms section. -

UPLC™ Universal Power-Line Carrier

UPLC™ Universal Power-Line Carrier CU4I-VER02 Installation Guide AMETEK Power Instruments 4050 N.W. 121st Avenue Coral Springs, FL 33065 1–800–785–7274 www.pulsartech.com THE BRIGHT STAR IN UTILITY COMMUNICATIONS March 2006 Trademarks All terms mentioned in this book that are known to be trademarks or service marks are listed below. In addition, terms suspected of being trademarks or service marks have been appropriately capital- ized. Ametek cannot attest to the accuracy of this information. Use of a term in this book should not be regarded as affecting the validity of any trademark or service mark. This publication includes fonts and/or images from CorelDRAW which are protected by the copyright laws of the U.S., Canada and elsewhere. Used under license. IBM and PC are registered trademarks of the International Business Machines Corporation. ST is a registered trademark of AT&T Windows is a registered trademark of Microsoft Corp. Universal Power-Line Carrier Installation Guide ESD WARNING! YOU MUST BE PROPERLY GROUNDED, TO PREVENT DAMAGE FROM STATIC ELECTRICITY, BEFORE HANDLING ANY AND ALL MODULES OR EQUIPMENT FROM AMETEK. All semiconductor components used, are sensitive to and can be damaged by the discharge of static electricity. Be sure to observe all Electrostatic Discharge (ESD) precautions when handling modules or individual components. March 2006 Page i Important Change Notification This document supercedes the preliminary version of the UPLC Installation Guide. The following list shows the most recent publication date for the new information. A publication date in bold type indicates changes to that information since the previous publication. -

Advance Dynamic Malware Analysis Using Api Hooking

www.ijecs.in International Journal Of Engineering And Computer Science ISSN:2319-7242 Volume – 5 Issue -03 March, 2016 Page No. 16038-16040 Advance Dynamic Malware Analysis Using Api Hooking Ajay Kumar , Shubham Goyal Department of computer science Shivaji College, University of Delhi, Delhi, India [email protected] [email protected] Abstract— As in real world, in virtual world also there are type of Analysis is ineffective against many sophisticated people who want to take advantage of you by exploiting you software. Advanced static analysis consists of reverse- whether it would be your money, your status or your personal engineering the malware’s internals by loading the executable information etc. MALWARE helps these people into a disassembler and looking at the program instructions in accomplishing their goals. The security of modern computer order to discover what the program does. Advanced static systems depends on the ability by the users to keep software, analysis tells you exactly what the program does. OS and antivirus products up-to-date. To protect legitimate users from these threats, I made a tool B. Dynamic Malware Analysis (ADVANCE DYNAMIC MALWARE ANAYSIS USING API This is done by watching and monitoring the behavior of the HOOKING) that will inform you about every task that malware while running on the host. Virtual machines and software (malware) is doing over your machine at run-time Sandboxes are extensively used for this type of analysis. The Index Terms— API Hooking, Hooking, DLL injection, Detour malware is debugged while running using a debugger to watch the behavior of the malware step by step while its instructions are being processed by the processor and their live effects on I. -

Userland Hooking in Windows

Your texte here …. Userland Hooking in Windows 03 August 2011 Brian MARIANI SeniorORIGINAL Security SWISS ETHICAL Consultant HACKING ©2011 High-Tech Bridge SA – www.htbridge.ch SOME IMPORTANT POINTS Your texte here …. This document is the first of a series of five articles relating to the art of hooking. As a test environment we will use an english Windows Seven SP1 operating system distribution. ORIGINAL SWISS ETHICAL HACKING ©2011 High-Tech Bridge SA – www.htbridge.ch WHAT IS HOOKING? Your texte here …. In the sphere of computer security, the term hooking enclose a range of different techniques. These methods are used to alter the behavior of an operating system by intercepting function calls, messages or events passed between software components. A piece of code that handles intercepted function calls, is called a hook. ORIGINAL SWISS ETHICAL HACKING ©2011 High-Tech Bridge SA – www.htbridge.ch THE WHITE SIDE OF HOOKING TECHNIQUES? YourThe texte control here …. of an Application programming interface (API) call is very useful and enables programmers to track invisible actions that occur during the applications calls. It contributes to comprehensive validation of parameters. Reports issues that frequently remain unnoticed. API hooking has merited a reputation for being one of the most widespread debugging techniques. Hooking is also quite advantageous technique for interpreting poorly documented APIs. ORIGINAL SWISS ETHICAL HACKING ©2011 High-Tech Bridge SA – www.htbridge.ch THE BLACK SIDE OF HOOKING TECHNIQUES? YourHooking texte here can …. alter the normal code execution of Windows APIs by injecting hooks. This technique is often used to change the behavior of well known Windows APIs. -

Digital Vision Network 5000 Series BCM Motherboard BIOS Upgrade

Digital Vision Network 5000 Series BCM™ Motherboard BIOS Upgrade Instructions October, 2011 24-10129-128 Rev. – Copyright 2011 Johnson Controls, Inc. All Rights Reserved (805) 522-5555 www.johnsoncontrols.com No part of this document may be reproduced without the prior permission of Johnson Controls, Inc. Cardkey P2000, BadgeMaster, and Metasys are trademarks of Johnson Controls, Inc. All other company and product names are trademarks or registered trademarks of their respective owners. These instructions are supplemental. Some times they are supplemental to other manufacturer’s documentation. Never discard other manufacturer’s documentation. Publications from Johnson Controls, Inc. are not intended to duplicate nor replace other manufacturer’s documentation. Due to continuous development of our products, the information in this document is subject to change without notice. Johnson Controls, Inc. shall not be liable for errors contained herein or for incidental or consequential damages in connection with furnishing or use of this material. Contents of this publication may be preliminary and/or may be changed at any time without any obligation to notify anyone of such revision or change, and shall not be regarded as a warranty. If this document is translated from the original English version by Johnson Controls, Inc., all reasonable endeavors will be used to ensure the accuracy of translation. Johnson Controls, Inc. shall not be liable for any translation errors contained herein or for incidental or consequential damages in connection -

Guide to Enterprise Patch Management Technologies

NIST Special Publication 800-40 Revision 3 Guide to Enterprise Patch Management Technologies Murugiah Souppaya Karen Scarfone C O M P U T E R S E C U R I T Y NIST Special Publication 800-40 Revision 3 Guide to Enterprise Patch Management Technologies Murugiah Souppaya Computer Security Division Information Technology Laboratory Karen Scarfone Scarfone Cybersecurity Clifton, VA July 2013 U.S. Department of Commerce Penny Pritzker, Secretary National Institute of Standards and Technology Patrick D. Gallagher, Under Secretary of Commerce for Standards and Technology and Director Authority This publication has been developed by NIST to further its statutory responsibilities under the Federal Information Security Management Act (FISMA), Public Law (P.L.) 107-347. NIST is responsible for developing information security standards and guidelines, including minimum requirements for Federal information systems, but such standards and guidelines shall not apply to national security systems without the express approval of appropriate Federal officials exercising policy authority over such systems. This guideline is consistent with the requirements of the Office of Management and Budget (OMB) Circular A-130, Section 8b(3), Securing Agency Information Systems, as analyzed in Circular A- 130, Appendix IV: Analysis of Key Sections. Supplemental information is provided in Circular A-130, Appendix III, Security of Federal Automated Information Resources. Nothing in this publication should be taken to contradict the standards and guidelines made mandatory and binding on Federal agencies by the Secretary of Commerce under statutory authority. Nor should these guidelines be interpreted as altering or superseding the existing authorities of the Secretary of Commerce, Director of the OMB, or any other Federal official. -

A Review Paper on Effective Behavioral Based Malware Detection and Prevention Techniques for Android Platform

International Journal of Engineering Research and Technology. ISSN 0974-3154 Volume 10, Number 1 (2017) © International Research Publication House http://www.irphouse.com A Review Paper on Effective Behavioral Based Malware Detection and Prevention Techniques for Android Platform Mr. Sagar Vitthal Shinde1 M.Tech Comp. Department of Technology, Shivaji University, Kolhapur, Maharashtra, India. Email id: [email protected] Ms. Amrita A. Manjrekar2 Assistant Professor, Department of Technology, Shivaji University, Kolhapur, Maharashtra, India. Email Id: [email protected] Abstract late). It has been recently reported that almost 60% of Android is most popular platform for mobile devices. existing malware send stealthy premium rate SMS messages. Smartphone’s and mobile tablets are rapidly indispensable in Most of these behaviors are exhibited by a category of apps daily life. Android has been the most popular open sources called Trojanized that can be found in online marketplaces mobile operating system. On the one side android users are not controlled by Google. However, also Google Play, the increasing, but other side malicious activity also official market for Android apps, has hosted apps which have simultaneously increasing. The risk of malware (Malicious been found to be malicious [1] [21]. apps) is sharply increasing in Android platform, Android Existing system consist of some limited features of android mobile malware detection and prevention has become an app, malware detection is based on behavioral base. The important research topic. Some malware attacks can make the malware detection and prevention process is also static which phone partially or fully unusable, cause unwanted SMS/MMS create some problems such as it increase false positive rate. -

Crimeware on the Net

Crimeware on the Net The “Behind the scenes” of the new web economy Iftach Ian Amit Director, Security Research – Finjan BlackHat Europe, Amsterdam 2008 Who Am I ? (iamit) • Iftach Ian Amit – In Hebrew it makes more sense… • Director Security Research @ Finjan • Various security consulting/integration gigs in the past – R&D – IT • A helping hand when needed… (IAF) 2 BlackHat Europe – Amsterdam 2008 Today’s Agenda • Terminology • Past vs. Present – 10,000 feet view • Business Impact • Key Characteristics – what does it look like? – Anti-Forensics techniques – Propagation methods • What is the motive (what are they looking for)? • Tying it all up – what does it look like when successful (video). • Anything in it for us to learn from? – Looking forward on extrusion testing methodologies 3 BlackHat Europe – Amsterdam 2008 Some Terminology • Crimeware – what we refer to most malware these days is actually crimeware – malware with specific goals for making $$$ for the attackers. • Attackers – not to be confused with malicious code writers, security researchers, hackers, crackers, etc… These guys are the Gordon Gecko‟s of the web security field. The buy low, and capitalize on the investment. • Smart (often mislead) guys write the crimeware and get paid to do so. 4 BlackHat Europe – Amsterdam 2008 How Do Cybercriminals Steal Business Data? Criminals’ activity in the cyberspace Federal Prosecutor: “Cybercrime Is Funding Organized Crime” 5 BlackHat Europe – Amsterdam 2008 The Business Impact Of Crimeware Criminals target sensitive business data